GDPR-Compliant Database in 2026: A Practical Blueprint (Not a Myth)

A "GDPR compliant database" sounds like a product feature.

It isn't.

A database is only compliant if your controls work in real life and you can prove it fast, with evidence you can hand over without a fire drill.

Look, the quickest way to fail an audit is to treat GDPR like a hosting decision ("we're in the EU, we're fine"). Auditors don't care where your server sits if you can't show who accessed personal data, why you kept it, and how you delete it across the systems that quietly copy data everywhere (warehouse, logs, support tools, exports, backups).

Below is the controls-to-evidence map we use, plus an erasure/backups playbook and RoPA tables you can copy.

What you need (quick version)

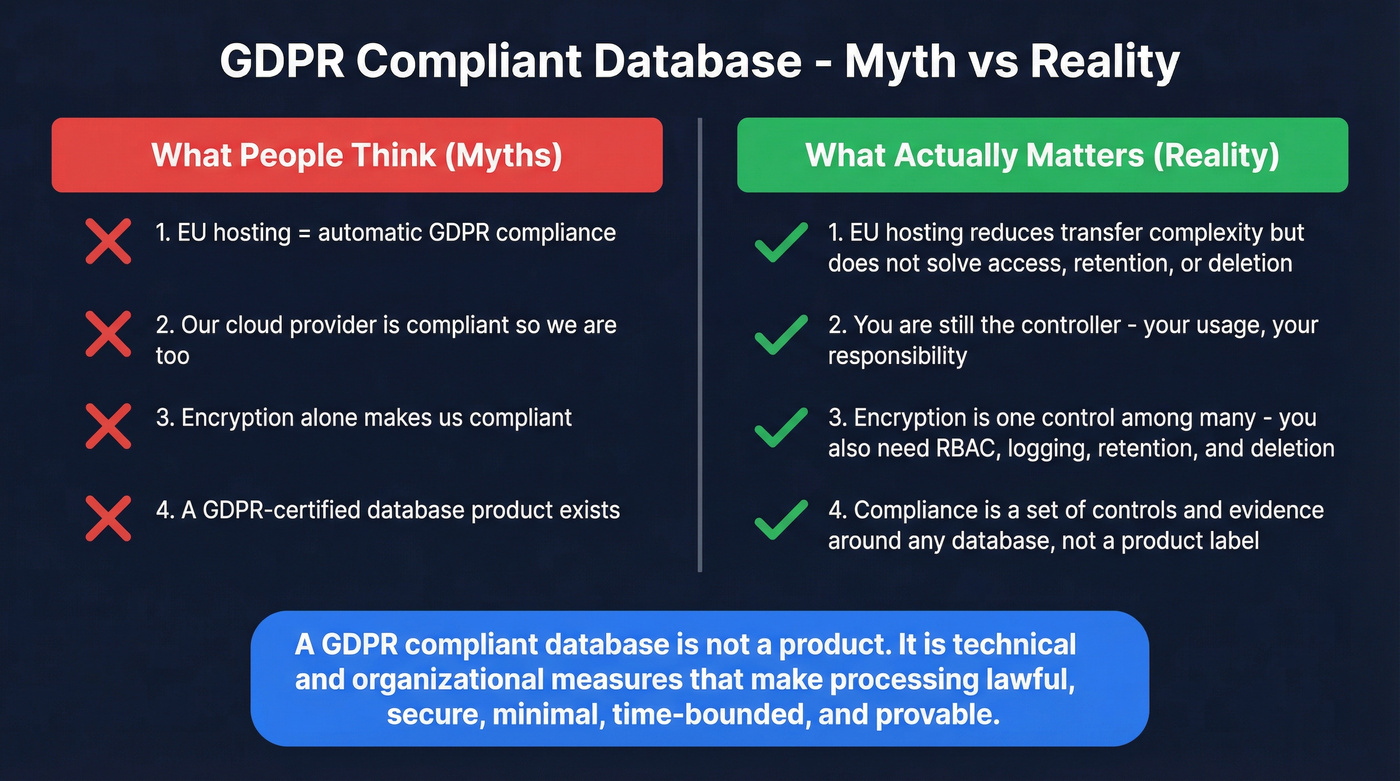

There's no such thing as a GDPR-certified database. GDPR compliance is about how you collect, store, access, use, share, and delete personal data - and whether you can prove it on demand.

Your goal isn't "feel compliant." Your goal is: produce evidence quickly (often within 24 hours) without heroics.

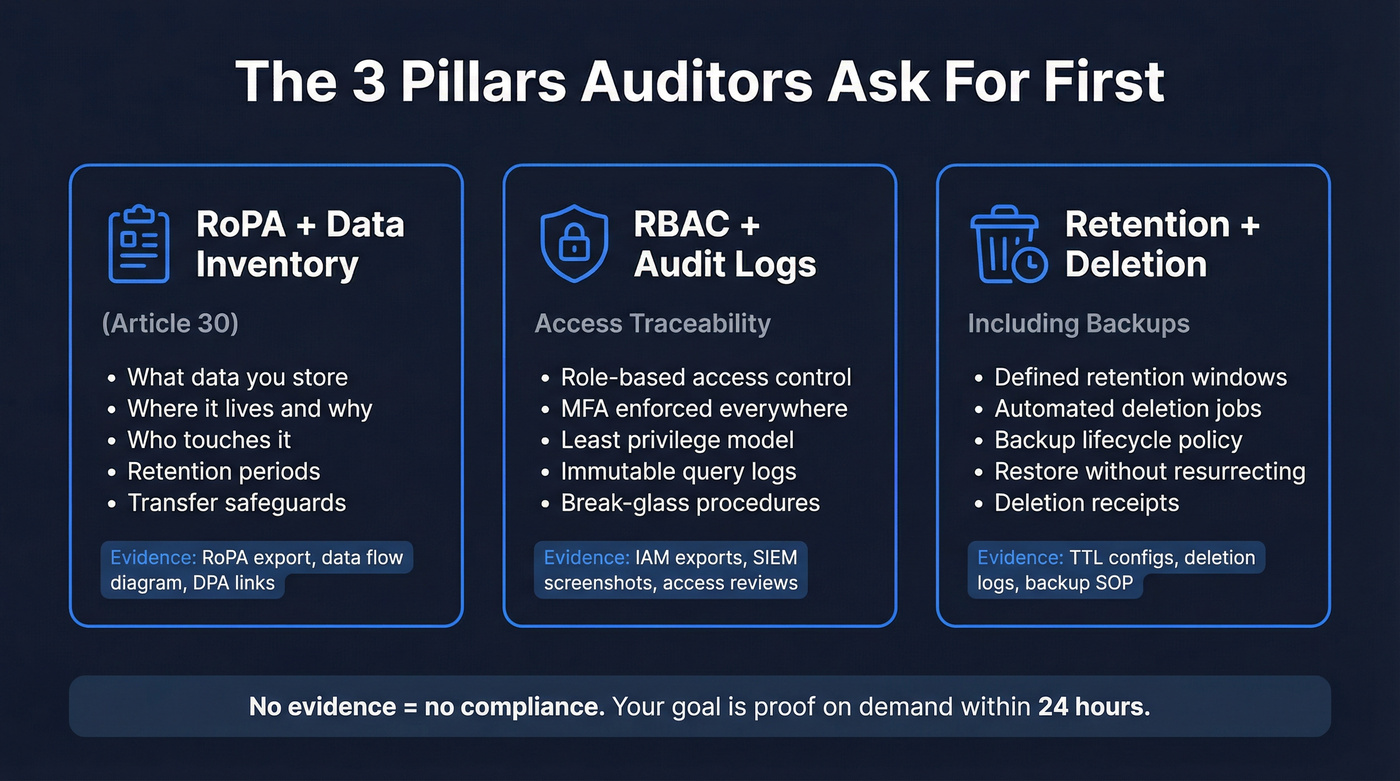

Start with the three things auditors and security teams ask for first:

- RoPA (Record of Processing Activities) + data inventory (Article 30): a living record of what personal data you store, where it lives, why you process it, who touches it, retention, recipients, and transfer safeguards. Evidence examples: RoPA spreadsheet/database link, system inventory export, data flow diagram, vendor DPA links.

- RBAC + audit logs: role-based access control, MFA, least privilege, and logs that show who accessed what and when. Access without traceability is where "we're compliant" stories go to die. Evidence examples: IAM policy export, group membership history, SIEM screenshots showing query/admin events, break-glass tickets.

- Retention + deletion (including backups): defined retention windows, automated deletion workflows, and a plan for erasure that covers backups (including "beyond use" handling when immediate deletion isn't possible). Evidence examples: retention policy, TTL configs, deletion job run logs, deletion receipts, backup lifecycle policy.

Do these 3 things this week:

- Create a system inventory: list every system that stores personal data (prod DBs, analytics, support tools, data warehouse, backups). Assign an owner to each.

- Lock down access: enforce MFA, remove shared accounts, require approval for privileged DB access. Turn on audit logs and ship them somewhere immutable.

- Write your deletion reality: document retention periods, backup windows (30/60/90 days), and your "restore without resurrecting deleted data" procedure.

What a GDPR compliant database means (and what it doesn't)

A GDPR compliant database is a database environment where processing personal data follows GDPR principles and you can demonstrate that with controls and evidence.

GDPR's principles are the design constraints: lawfulness/fairness/transparency; purpose limitation; data minimization; accuracy; storage limitation; integrity and confidentiality; accountability. If your schema, pipelines, and access patterns violate those, you're not compliant - no matter what vendor logo is on the invoice.

Two misconceptions cause real damage.

Myth #1: "EU hosting = GDPR compliance." EU hosting can reduce transfer complexity, but it doesn't solve lawful basis, over-collection, retention, access sprawl, or DSAR execution. I've seen EU-hosted stacks fail because everyone had admin access and nobody could prove deletion.

Myth #2: "Our cloud provider is compliant, so we're compliant." Your provider can be a strong processor. You're still responsible for how your org uses the system as a controller (or as a processor for your customers).

Definition (useful in practice): A GDPR compliant database isn't a product. It's a set of technical and organizational measures around a database that make your processing lawful, secure, minimal, time-bounded, and provable.

If you want a vendor-style consolidation of best practices (not a primary authority), Alation's overview is a decent further read: https://www.alation.com/blog/gdpr-data-compliance-best-practices-2026/.

Does GDPR apply to your database? (scope, personal data, roles)

GDPR applies when you process personal data and you meet a scope trigger.

The scope triggers are simple: you're established in the EU and process personal data (even if processing happens elsewhere), or you're outside the EU but you offer goods/services to people in the EU or monitor their behavior in the EU.

Personal data isn't just "name + email." It's any information about an identified or identifiable person. Examples include:

- Name, address

- Passport/ID number

- Income

- IP address

- Any unique identifier tied to a person (including device IDs)

That matters for databases because engineers treat "logs" and "analytics events" as harmless. They aren't. IPs, user IDs, and event trails become personal data the moment you can tie them back to a person.

Roles matter because they change what you need from vendors:

- Controller: decides the purposes and means of processing. If it's your product and your customer data, you're usually the controller.

- Processor: processes personal data on behalf of a controller. Your database vendor, hosting provider, or tooling vendor is often a processor.

Practical takeaway: controllers need RoPA, lawful basis, DSAR workflows, and DPAs with processors. Processors still need security, auditability, and the ability to support customers' DSAR and deletion requirements.

GDPR compliant database controls checklist (requirement → control → evidence)

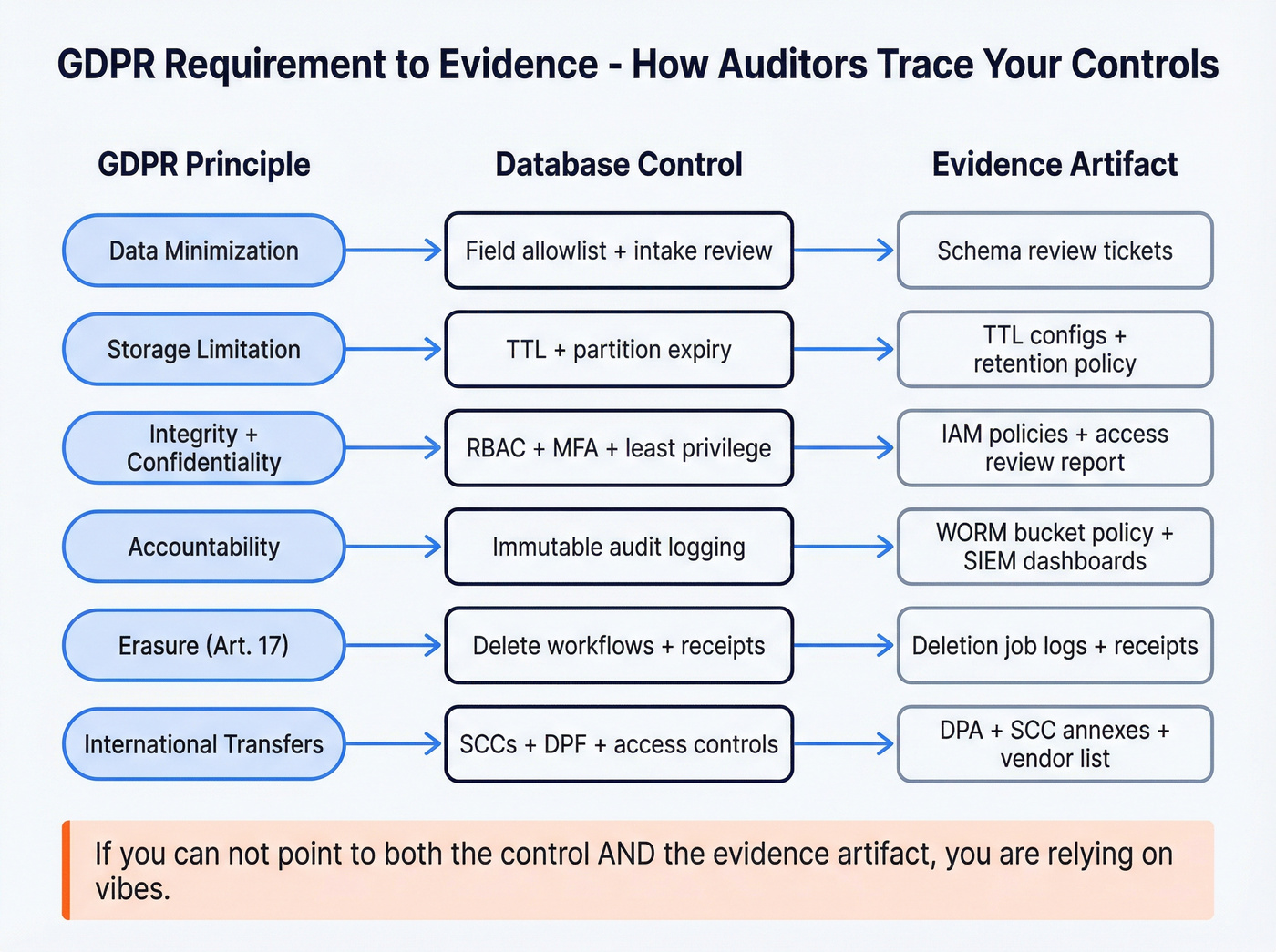

This is the mapping we use when we're sanity-checking a stack. If you can't point to the control and the evidence artifact, you're relying on vibes.

| GDPR req / principle | Database control | Evidence artifact |
|---|---|---|
| Lawfulness + transparency | Data purpose tags + collection source | Privacy notice link + collection event logs |
| Purpose limitation | Purpose-bound schemas + data contracts | Data contract docs + pipeline approvals |
| Data minimization | Field allowlist + intake review | Schema review tickets + rejected-field log |
| Accuracy | Update + correction flow | Change history + correction ticket trail |
| Storage limitation | Retention policies + TTL/partition expiry | TTL configs + retention policy |
| Integrity/confidentiality | RBAC + MFA + least privilege | IAM policies + access review report |
| Art. 32 security | Encryption in transit | TLS configs + cipher policy |
| Art. 32 security | Encryption at rest | KMS settings + storage encryption proof |
| Key management | Key rotation + key access restrictions | Rotation logs + KMS access logs |
| Accountability | Audit logging (auth + data access + admin) | Query/admin logs + SIEM dashboards |
| Audit logging | Immutable/WORM log storage | WORM bucket policy + retention lock proof |
| Accountability | Break-glass access procedure | Pager/ticket + approval + log entry |
| Privacy by design (Art. 25) | Pseudonymisation + join boundary | Architecture diagram + join audit logs |
| Integrity/confidentiality | Data masking/redaction for non-prod | Masking policy + non-prod access logs |
| Art. 32 risk-based | DPIA / risk assessment triggers | DPIA record + risk acceptance (if any) |
| Erasure (Art. 17) | Delete workflows + deletion receipts | Deletion job logs + receipts |
| Backups + erasure | "Beyond use" + overwrite schedule | Backup SOP + lifecycle policy + restore logs |
| Transfers | SCCs/DPF/BCRs + access controls | DPA + SCC annexes + vendor access list |
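
To make the "point to the evidence" test mechanical, you can keep the table as data and flag any row with no artifact attached. A minimal Python sketch, assuming the file paths are hypothetical placeholders for wherever your evidence actually lives:

```python
from dataclasses import dataclass, field


@dataclass
class Control:
    requirement: str  # GDPR requirement/principle, e.g. "Erasure (Art. 17)"
    control: str      # the database control you claim to operate
    evidence: list[str] = field(default_factory=list)  # paths/links to evidence artifacts


def find_gaps(controls: list[Control]) -> list[str]:
    """Return requirements whose control has no evidence artifact attached."""
    return [c.requirement for c in controls if not c.evidence]


controls = [
    Control("Storage limitation", "Retention policies + TTL/partition expiry",
            ["evidence/ttl-config.yaml", "evidence/retention-policy.pdf"]),
    Control("Erasure (Art. 17)", "Delete workflows + deletion receipts"),
    Control("Accountability", "Audit logging (auth + data access + admin)",
            ["evidence/siem-export-2026-01.csv"]),
]

for requirement in find_gaps(controls):
    print(f"No evidence on file for: {requirement}")
```

Any row this prints is a control you're relying on vibes for.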

A couple opinionated notes:

- RBAC + audit logs are the backbone. In audits we've been part of, a common first request is: export access logs for this table for the last 30 days. "We don't log SELECTs" is an instant credibility hit.

- Retention + deletion is where teams get exposed. Everyone can delete a row. Almost nobody has a restore process that doesn't resurrect deleted records.

- If a vendor won't sign a DPA, don't put personal data in it. "We'll add it later" turns into permanent compliance debt.


Security and access governance that actually holds up in an audit

Security guidance gets hand-wavy fast, so here's the audit-grade version.

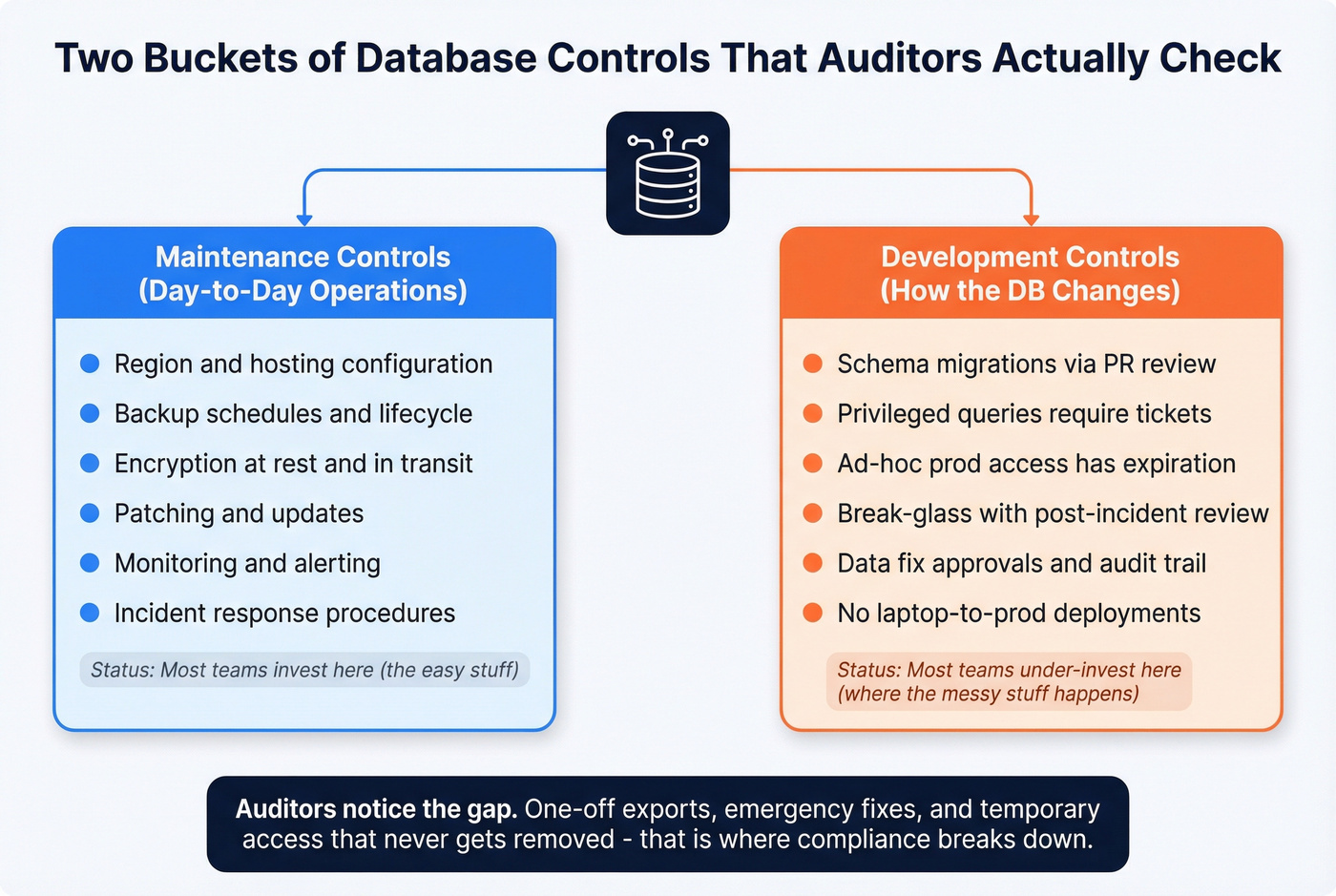

First, a framing that's genuinely useful in practice (and matches how database change tools think about the world): two buckets of controls.

- Maintenance controls: how the database is operated day-to-day (regions, backups, encryption, patching, monitoring, incident response).

- Development controls: how the database is changed (schema migrations, privileged queries, data fixes, approvals, and audit trails).

Most teams over-invest in maintenance ("we turned on encryption") and under-invest in development ("anyone can run a prod query if they ask nicely"). Auditors notice, because the second bucket is where the messy stuff happens: one-off exports, emergency fixes, and "temporary" access that never gets removed.

We reference ICO (UK GDPR) guidance in this section because it's operationally detailed and closely aligned with EU GDPR on these controls.

Bytebase's practitioner framing is worth skimming specifically for this maintenance vs development split and the "change workflow" mindset: https://www.bytebase.com/blog/database-compliance-for-gdpr/.

RBAC + MFA + least privilege (touches maintenance and development)

Checklist that works in real orgs:

- Enforce MFA for every human with console/DB access.

- Kill shared accounts. Use named identities and short-lived access where possible.

- Separate roles: read-only analytics, app service accounts, DBAs, security admins.

- Require approval for privileged access (break-glass is fine, but log it).

What to log (minimum viable):

- Authentication events (success/fail)

- Privilege changes (role grants, policy edits)

- Data access for sensitive tables (SELECTs on PII tables, exports)

- Admin actions (schema changes, backup restores)
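
If you can't ship logs to WORM storage yet, hash-chaining them at least makes silent edits detectable. A minimal sketch, assuming the event-type names below are our own labels for the four categories above. This is tamper-evident, not tamper-proof, so it complements immutable storage rather than replacing it:

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical labels for the "minimum viable" categories: auth, privilege changes,
# sensitive data access, and admin actions.
LOGGED_EVENTS = {"auth", "privilege_change", "pii_read", "pii_export", "schema_change", "backup_restore"}
GENESIS = "0" * 64


def append_event(chain: list[dict], actor: str, event_type: str, target: str) -> dict:
    """Append an event whose hash covers its content plus the previous event's hash."""
    if event_type not in LOGGED_EVENTS:
        raise ValueError(f"unknown event type: {event_type}")
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "event": event_type,
        "target": target,
        "prev": chain[-1]["hash"] if chain else GENESIS,
    }
    record["hash"] = hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()
    chain.append(record)
    return record


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every hash; any edited, dropped, or reordered record breaks the chain."""
    prev_hash = GENESIS
    for record in chain:
        body = {k: v for k, v in record.items() if k != "hash"}
        if body["prev"] != prev_hash:
            return False
        if record["hash"] != hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest():
            return False
        prev_hash = record["hash"]
    return True


log: list[dict] = []
append_event(log, "alice@corp", "pii_read", "customers")
append_event(log, "bob@corp", "privilege_change", "role:analyst")
print(verify_chain(log))          # True
log[0]["actor"] = "mallory@corp"  # rewrite history
print(verify_chain(log))          # False
```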

Who approves access:

- Data owner (business) + platform/security (technical). If only IT approves, access creep wins every time.

Development control you can't skip: schema changes + ad-hoc prod queries

If you want one "grown-up" move that instantly improves compliance posture, it's this: treat schema changes and privileged queries like deployments.

- All schema changes go through PR/MR review.

- Migrations are applied via a controlled pipeline (not a laptop).

- Ad-hoc prod queries require a ticket, a reason, and an expiration.

- Emergency fixes use break-glass, then get a post-incident review.

This is where you create the evidence trail that proves accountability.
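
The "ticket, reason, expiration" rule plus dual approval (data owner and platform/security) can be enforced as a single check before a privileged session is opened. A minimal sketch, assuming a hypothetical grant record and ticket ID format; in practice this would sit in your access broker or change tool:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class AccessGrant:
    user: str
    database: str
    ticket: str                   # ticket ID (format is hypothetical)
    reason: str
    approved_by_data_owner: bool  # business approval
    approved_by_security: bool    # platform/security approval
    expires_at: datetime          # every privileged grant must expire


def is_grant_valid(grant: AccessGrant, now: Optional[datetime] = None) -> bool:
    """Usable only if documented (ticket + reason), dual-approved, and not yet expired."""
    now = now or datetime.now(timezone.utc)
    documented = bool(grant.ticket.strip()) and bool(grant.reason.strip())
    dual_approved = grant.approved_by_data_owner and grant.approved_by_security
    return documented and dual_approved and now < grant.expires_at


start = datetime(2026, 3, 2, 9, 0, tzinfo=timezone.utc)
grant = AccessGrant("alice", "prod-customers", "OPS-1234", "Correct a misrouted billing address",
                    approved_by_data_owner=True, approved_by_security=True,
                    expires_at=start + timedelta(hours=4))
print(is_grant_valid(grant, start))                       # True
print(is_grant_valid(grant, start + timedelta(hours=5)))  # False: expired
```

Every evaluation of this check (allowed or denied) is itself worth logging, since that is the evidence trail.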

Encryption at rest/in transit + KMS/HSM basics (maintenance controls)

Do this by default unless you've got a very specific reason not to:

- TLS in transit for app-to-DB and admin tooling-to-DB.

- Encryption at rest using a managed KMS where possible.

- Rotate keys on a schedule and on incident events.

- Restrict who can use/decrypt keys (KMS permissions are part of your RBAC story).

Here's the thing: encryption doesn't fix over-permissioned access. If 40 people can query the table, encryption at rest just means the disk is safe when stolen.

Audit logging retention defaults (maintenance controls)

A retention pattern that balances cost and usefulness (common security practice, not a GDPR rule):

- 90 days "hot" (fast search, in your SIEM/log platform)

- 12 months archived (cheap storage, immutable/WORM if you can)

If you're higher-risk (health, finance, large-scale tracking), keep longer. If you're small, don't overbuild. Just make sure you can answer "who accessed this record?" for a reasonable period.
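
The hot/archive split above is easy to express as a lifecycle rule on log partitions. A minimal sketch, assuming we read "12 months archived" as 12 months total from the event date (adjust `RETAIN_DAYS` if your policy means 12 months after the hot window ends):

```python
from datetime import date

HOT_DAYS = 90       # fast search in the SIEM/log platform
RETAIN_DAYS = 365   # assumption: 12 months total from the event date


def log_tier(event_date: date, today: date) -> str:
    """Classify a log partition as 'hot', 'archive', or 'expire' by its age in days."""
    age_days = (today - event_date).days
    if age_days < HOT_DAYS:
        return "hot"
    if age_days < RETAIN_DAYS:
        return "archive"
    return "expire"


today = date(2026, 6, 30)
for partition_day in (date(2026, 6, 1), date(2026, 1, 15), date(2025, 3, 1)):
    print(partition_day, log_tier(partition_day, today))
```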

Pseudonymisation ≠ anonymisation (EDPB 2026) - schema patterns

EDPB guidance is blunt: pseudonymised data is still personal data if it can be attributed back to an individual using additional information.

That's not bad news. It's just reality.

Pseudonymisation reduces risk and supports privacy by design (Art. 25) and security (Art. 32). It also strengthens your legitimate interests case: when you pseudonymise event data, the balancing test gets easier because the impact of misuse drops sharply. You keep the utility for analytics, security, fraud, and abuse detection while making the data much harder to tie to a person.

"Still personal data" explained in one paragraph

If you replace email = alice@company.com with user_id = 8f3a... but you keep a lookup table somewhere (or a system can re-identify), you're still processing personal data. You've reduced exposure, not eliminated it.
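
One common way to generate that `user_id` is a keyed hash of the normalized email. This is our choice of technique, not something the EDPB prescribes; a plain unkeyed hash is weaker because anyone can hash candidate emails and compare. A minimal sketch, assuming a hypothetical key that would live in your KMS:

```python
import hashlib
import hmac

# Hypothetical key: in practice fetch it from your KMS/secret manager and restrict who can read it.
PSEUDONYM_KEY = b"replace-with-a-secret-from-your-kms"


def pseudonymise(email: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Map an email to a stable pseudonymous user_id with a keyed hash (HMAC-SHA256)."""
    normalized = email.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


# The same person always gets the same ID, so events still join up for analytics...
assert pseudonymise("Alice@Company.com") == pseudonymise(" alice@company.com ")
# ...and anyone holding the key can recompute the ID from a known email and re-identify.
# That re-identification path is exactly why the output is still personal data.
print(pseudonymise("alice@company.com")[:12])
```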

Practical schema pattern: split identifiers table + strict access boundary

A pattern that works:

- Table A (Identifiers): email, phone, name, address (locked down hard)

- Table B (Events/usage): user_id, timestamps, product events (broader access)

- Access boundary: only a small set of services/roles can join A-to-B.

- Log every join attempt and export.

This is one of the few "privacy by design" moves that also makes engineers happy because it reduces accidental leakage in analytics.
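
The split-table pattern can be sketched with SQLite. SQLite has no roles or GRANTs, so this sketch enforces the join boundary in application code; in Postgres you would use roles and grants for the same effect. The role names and schema below are hypothetical:

```python
import sqlite3
from datetime import datetime, timezone

JOIN_ROLES = {"support_service", "dsar_service"}   # hypothetical roles allowed to cross the boundary
join_audit: list[tuple[str, str, str, bool]] = []  # (timestamp, role, user_id, allowed)

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE identifiers (user_id TEXT PRIMARY KEY, email TEXT, name TEXT);  -- Table A: locked down
CREATE TABLE events (user_id TEXT, ts TEXT, event TEXT);                     -- Table B: broader access
""")
conn.execute("INSERT INTO identifiers VALUES ('u1', 'alice@company.com', 'Alice')")
conn.executemany("INSERT INTO events VALUES (?, ?, ?)",
                 [("u1", "2026-01-05T10:00:00Z", "login"),
                  ("u1", "2026-01-05T10:02:00Z", "export_report")])


def events_for_analytics(conn: sqlite3.Connection) -> list[tuple]:
    """Broad-access path: pseudonymous events only, never identifiers."""
    return conn.execute("SELECT user_id, ts, event FROM events ORDER BY ts").fetchall()


def events_with_identity(conn: sqlite3.Connection, role: str, user_id: str) -> list[tuple]:
    """Narrow path across the boundary: every attempt is logged, allowed or not."""
    allowed = role in JOIN_ROLES
    join_audit.append((datetime.now(timezone.utc).isoformat(), role, user_id, allowed))
    if not allowed:
        raise PermissionError(f"role {role!r} may not join identifiers to events")
    return conn.execute(
        "SELECT i.email, e.ts, e.event FROM events e "
        "JOIN identifiers i ON i.user_id = e.user_id WHERE e.user_id = ? ORDER BY e.ts",
        (user_id,)).fetchall()


print(events_for_analytics(conn))
try:
    events_with_identity(conn, "analyst", "u1")
except PermissionError as err:
    print("blocked:", err)
print(events_with_identity(conn, "dsar_service", "u1"))
print(len(join_audit))  # 2 attempts logged, one denied
```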

Right to erasure + backups: the "beyond use" playbook

Right to erasure is where a GDPR compliant database stops being theoretical.

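Operationally, you've got one month to respond to an erasure request (extendable by up to two further months for complex or numerous requests, provided you tell the individual within the first month). And yes: if the right to erasure applies and no exemption does, you need to address live systems and backups.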

One accuracy point that prevents overpromising: erasure isn't absolute. If you refuse or partially comply, document the exemption you rely on and keep it with the ticket.

When you can't immediately delete from backups (common), the practical approach regulators accept is "beyond use":

- Don't use the backup data for any other purpose

- Keep it only until it's overwritten on an established schedule

- Be clear with the individual about retention and overwrite timelines

I've watched teams burn weeks here because they treated backups as sacred and untouchable, then realized restores would resurrect deleted users. You want a boring, repeatable process that works at 2 a.m. during an incident.

DSAR/erasure workflow (intake → verify → execute → confirm)

A clean workflow:

- Intake: web form or email alias, ticket created, clock starts.

- Verify identity: match known identifiers; don't collect extra data "just to be safe."

- Scope the systems: use your inventory to list every system that might contain the person's data.

- Execute deletion:

  - App DB: delete or irreversibly de-identify

  - Data warehouse: delete partitions/rows or reprocess

  - Logs: delete where feasible; otherwise minimize and restrict

- Log the deletion: keep a minimal deletion receipt (hash of identifier + timestamp + systems touched).

- Confirm: respond within one month with what you did and what happens with backups.
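
A deletion receipt only needs enough to prove what happened without re-creating the data you just erased. A minimal sketch, assuming a hypothetical KMS-held key: we use a keyed hash (HMAC) rather than the plain hash mentioned above, because a plain SHA-256 of an email can be reversed by hashing guesses:

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

RECEIPT_KEY = b"replace-with-a-secret-from-your-kms"  # hypothetical key
ACTIONS = {"deleted", "de-identified", "restricted", "no data found"}


def deletion_receipt(identifier: str, systems: dict[str, str], key: bytes = RECEIPT_KEY) -> dict:
    """Build a minimal receipt: keyed hash of the identifier, timestamp, and action per system."""
    unknown = {action for action in systems.values() if action not in ACTIONS}
    if unknown:
        raise ValueError(f"unrecognized actions: {sorted(unknown)}")
    digest = hmac.new(key, identifier.strip().lower().encode("utf-8"), hashlib.sha256).hexdigest()
    return {
        "subject_hash": digest,
        "deleted_at": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "systems": dict(systems),
    }


receipt = deletion_receipt("alice@company.com", {
    "app_db": "deleted",
    "warehouse": "deleted",
    "app_logs": "restricted",
    "support_tool": "no data found",
})
print(json.dumps(receipt, indent=2))
```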

What to record in the erasure ticket (non-negotiable):

- Request date/time + channel

- Identity verification method used

- Systems searched (even if "no data found")

- Deletion action per system (delete vs de-identify vs restricted)

- Backup handling method + overwrite window

- Exemption relied on (if any) + approver

- Response date + response template used
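
Those ticket fields map cleanly onto a record that can compute its own deadline and flag what's missing. A minimal sketch with hypothetical field names; the deadline follows the ICO's reading of "one month" (the corresponding calendar date in the next month, or that month's last day if no such date exists), with up to two further months when the extension is invoked:

```python
import calendar
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


def add_months(start: date, months: int) -> date:
    """Same day-of-month `months` later; clamp to the month's last day if that day doesn't exist."""
    month_index = start.month - 1 + months
    year, month = start.year + month_index // 12, month_index % 12 + 1
    return date(year, month, min(start.day, calendar.monthrange(year, month)[1]))


@dataclass
class ErasureTicket:
    received: date
    channel: str
    verification_method: str = ""
    systems_searched: dict[str, str] = field(default_factory=dict)  # system -> action or "no data found"
    backup_handling: str = ""              # e.g. "beyond use, overwritten within 60 days"
    exemption: Optional[str] = None
    exemption_approver: Optional[str] = None
    extended: bool = False                 # extension invoked (individual told within the first month)
    response_date: Optional[date] = None
    response_template: str = ""

    def due_date(self) -> date:
        return add_months(self.received, 3 if self.extended else 1)

    def missing_fields(self) -> list[str]:
        checks = {
            "identity verification method": bool(self.verification_method),
            "systems searched": bool(self.systems_searched),
            "backup handling + overwrite window": bool(self.backup_handling),
            "exemption approver": not self.exemption or bool(self.exemption_approver),
            "response date": self.response_date is not None,
            "response template": bool(self.response_template),
        }
        return [name for name, ok in checks.items() if not ok]


ticket = ErasureTicket(received=date(2026, 1, 31), channel="web form", verification_method="email match")
print(ticket.due_date())  # 2026-02-28
print(ticket.missing_fields())
```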

Engineering effort (typical ranges): for a small product with a handful of systems, implementing a reliable DSAR/erasure pipeline is 2-10 engineering days. For a mid-market org with multiple warehouses, event streams, and third-party tools, it's 3-8 weeks of on-and-off work to get it right, mostly because you spend time chasing down shadow copies and rebuilding pipelines so deletion doesn't break reporting.

Backup retention defaults (30/60/90 days) + what to disclose

Most teams run backup retention like:

- 30 days (lean)

- 60 days (common)

- 90 days (conservative)

Pick one, document it, and disclose it in plain language: "We delete your data from production systems immediately. Encrypted backups roll off within 60 days. Until then, backups are restricted and not used for any other purpose."

Restore procedure: how to avoid "resurrecting" deleted records

This is the part everyone forgets.

If you restore a backup from last week, you can bring back records you already deleted. The fix is a deletion log (minimized) that's applied after restore.

Practical pattern:

- Maintain an append-only log of deletion identifiers (hashed email/user_id) + deletion timestamp.

- On restore, run a post-restore purge job that re-applies deletions across restored datasets.

- Restrict who can run restores, and log every restore event.

- Add a simple test: restore to staging monthly and verify deleted IDs stay deleted after the purge job.
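
The post-restore purge and the monthly "deleted IDs stay deleted" test can be sketched together. A minimal SQLite version, assuming a hypothetical `users` table and a deletion log of keyed hashes (same key as when entries were written); a real job would run per restored dataset and log its own execution:

```python
import hashlib
import hmac
import sqlite3

# Hypothetical key; must match the key used when deletion log entries were written.
DELETION_LOG_KEY = b"replace-with-a-secret-from-your-kms"


def subject_hash(user_id: str) -> str:
    return hmac.new(DELETION_LOG_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def post_restore_purge(conn: sqlite3.Connection, deletion_log: list[tuple[str, str]]) -> int:
    """Re-apply every logged deletion to a restored `users` table; return the number of rows purged."""
    deleted = {hashed_id for hashed_id, _deleted_at in deletion_log}
    restored_ids = [row[0] for row in conn.execute("SELECT user_id FROM users")]
    to_purge = [(uid,) for uid in restored_ids if subject_hash(uid) in deleted]
    conn.executemany("DELETE FROM users WHERE user_id = ?", to_purge)
    conn.commit()
    return len(to_purge)


# Simulate restoring last week's backup, which still contains u2 (erased since then).
restored = sqlite3.connect(":memory:")
restored.execute("CREATE TABLE users (user_id TEXT PRIMARY KEY, email TEXT)")
restored.executemany("INSERT INTO users VALUES (?, ?)",
                     [("u1", "a@example.com"), ("u2", "b@example.com"), ("u3", "c@example.com")])
deletion_log = [(subject_hash("u2"), "2026-02-01T12:00:00+00:00")]  # append-only in real life

purged = post_restore_purge(restored, deletion_log)
# The monthly restore test: no logged deletion may survive the purge.
survivors = {subject_hash(r[0]) for r in restored.execute("SELECT user_id FROM users")}
assert purged == 1 and not survivors & {h for h, _ in deletion_log}
```

Because the purge is idempotent, it's safe to run after every restore, not just the ones you remember.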

For more implementation color, Verasafe's write-up summarizes the common regulator approach and the restore pitfall well: https://verasafe.com/blog/do-i-need-to-erase-personal-data-from-backup-systems-under-the-gdpr/.

Your compliance "database": RoPA (Article 30) as fields you can implement

Most RoPAs fail because they're written like a legal memo instead of an operational inventory.

The bar you should aim for is simple: RoPA must be granular and meaningful - detailed enough that you can answer "where is this person's data?" and "what do we delete?" without guessing.

We treat RoPA as an internal database (even if it starts as a spreadsheet). Every row is a processing activity tied to real systems and owners. Over time, this becomes part of your broader GDPR database compliance stack: inventory, access governance, deletion automation, and vendor evidence in one place.

The 3-week RoPA build method (fast, realistic, audit-friendly)

This is the approach that actually gets finished.

Week 1: questionnaire to every business area (collect reality, not opinions)

Send a short form to Product, Engineering, Marketing, Sales, Support, Finance, HR, and Security. Ask for concrete answers:

- What processing activity is this? (e.g., "product analytics," "billing," "support tickets")

- What personal data fields are involved? (be specific: email, IP, device ID, payment token)

- Where does the data enter from? (signup form, API, import, web tracking, vendor sync)

- Where is it stored? (systems + regions + backups)

- Who has access? (teams/roles + any external vendors)

- What's the purpose and lawful basis? (contract, legitimate interests, legal obligation, consent)

- What's the retention period and deletion method? (TTL, job, manual, "beyond use" for backups)

- Any international access or subprocessors? (countries + safeguard: SCC/DPF/adequacy)

- Any high-risk elements? (large-scale tracking, sensitive data, children, profiling) → DPIA trigger

Week 2: workshops with IT/IG/legal (turn answers into a consistent model)

Meet with:

- IT/Platform/Security to validate systems, access paths, logs, and backup reality

- Information governance/privacy to normalize purposes, lawful bases, retention language

- Legal/procurement to link DPAs, SCCs, subprocessors, and transfer mechanisms

The goal is consistency: same column names, same categories, same "definition of done" for each row.

Week 3: reconcile with contracts + reality (the step everyone skips)

- Compare RoPA recipients/transfers against vendor contracts and subprocessor lists.

- Compare retention claims against actual TTLs, backup windows, and warehouse partitions.

- Compare "access" against IAM exports and group membership.

- Fix mismatches immediately; don't leave "TODO" in a RoPA.
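
The retention comparison is the easiest Week 3 check to automate. A minimal sketch, assuming you can export actual TTL/partition-expiry settings per system (the system names and the export itself are hypothetical); it only flags data kept longer than the RoPA claims:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RopaRow:
    activity: str
    system: str
    claimed_retention_days: int


def reconcile_retention(rows: list[RopaRow], actual_ttl_days: dict[str, Optional[int]]) -> list[str]:
    """Compare RoPA retention claims with retention settings exported from each system.

    actual_ttl_days maps system -> configured expiry in days, or None if data never expires.
    """
    problems = []
    for row in rows:
        if row.system not in actual_ttl_days:
            problems.append(f"{row.activity}: no retention setting exported for {row.system}")
            continue
        actual = actual_ttl_days[row.system]
        if actual is None:
            problems.append(f"{row.activity}: {row.system} never expires data "
                            f"(RoPA claims {row.claimed_retention_days} days)")
        elif actual > row.claimed_retention_days:
            problems.append(f"{row.activity}: {row.system} keeps {actual} days "
                            f"(RoPA claims {row.claimed_retention_days} days)")
    return problems


rows = [
    RopaRow("Product analytics", "warehouse", 365),
    RopaRow("Support tickets", "zendesk", 730),
    RopaRow("Billing", "postgres", 2555),
]
actual = {"warehouse": None, "zendesk": 730}  # e.g. parsed from config/IaC exports (hypothetical)
for problem in reconcile_retention(rows, actual):
    print(problem)
```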

Hot take: if your RoPA says "retention = 12 months" but your warehouse keeps events forever, you don't have a RoPA. You've got fiction.

What "granular and meaningful" looks like (and what fails)

Passes the smell test:

- "Customer support tickets in Zendesk: contact details + issue history; purpose = support; retention = 24 months; recipients = Zendesk; access = support team; transfers = SCCs; security = RBAC + MFA + encryption."

Fails:

- "We process customer data for business purposes."

If your RoPA can't answer "which system do we delete from?" it's not operational.

Table: Article 30 fields → suggested columns (controller + processor notes)

| Art. 30 required field | Recommended column | Example value | Owner/system |
|---|---|---|---|
| Controller details | Controller name | "Acme Ltd" | Legal |
| DPO/contact | DPO email | dpo@acme.com | Legal |
| Purposes | Purpose | "Billing" | Finance |
| Data subjects | Subject category | "Customers" | Product |
| Personal data cats | Data fields | "Email, IP" | Data Eng |
| Recipients | Recipient types | "Payment PSP" | Finance |
| Transfers | Transfer country | "US" | Security |
| Safeguards | Safeguard | "EU-US DPF" | Legal |
| Erasure timelines | Retention | "7 years" | Finance |
| Security measures | TOMs summary | "RBAC, KMS, audit logs" | Security |
| Processor role | Processor? | Yes/No | Legal |
| System of record | System | "Postgres" | Platform |
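
If your RoPA lives in a database (or a spreadsheet you export), the columns above can become a typed record with a completeness check. A minimal sketch; the snake_case field names are our own rendering of the recommended columns, and the validation rules are assumptions you'd tune:

```python
from dataclasses import dataclass, fields


@dataclass
class RopaRecord:
    controller_name: str
    dpo_email: str
    purpose: str
    subject_category: str
    data_fields: str
    recipient_types: str
    retention: str
    toms_summary: str
    is_processor: bool
    system: str
    transfer_country: str = ""  # blank when the data stays in the EEA
    safeguard: str = ""         # e.g. "EU-US DPF", "SCCs"


OPTIONAL = {"transfer_country", "safeguard"}


def validate(record: RopaRecord) -> list[str]:
    """Flag empty required columns and transfers recorded without a safeguard."""
    problems = [f"missing {f.name}" for f in fields(record)
                if f.name not in OPTIONAL and getattr(record, f.name) == ""]
    if record.transfer_country and not record.safeguard:
        problems.append(f"transfer to {record.transfer_country} has no safeguard")
    return problems


billing = RopaRecord("Acme Ltd", "dpo@acme.com", "Billing", "Customers", "Email, IP",
                     "Payment PSP", "7 years", "RBAC, KMS, audit logs", False, "Postgres",
                     transfer_country="US", safeguard="EU-US DPF")
print(validate(billing))  # []
```

A row that fails `validate` is exactly the kind that can't answer "which system do we delete from?"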

Example RoPA row (database-specific): product analytics events in a warehouse

Here's what a "database-ready" row looks like for a common pain point:

- Activity: Product analytics events (telemetry)

- Subjects: Users (customers + trial users)

- Fields: user_id (pseudonymous), IP address, device identifier, timestamps, event names, page/app context

- Source: SDK events from app + server logs

- Systems: event stream → warehouse (EU region) → BI tool; backups 60 days

- Purpose: product improvement + security/abuse detection

- Lawful basis: legitimate interests (with balancing test on file)

- Access: analytics role (no identifiers), security role (limited), break-glass for incident response

- Controls: pseudonymisation split-table pattern; masking in non-prod; audit logs for joins/exports

- Retention: raw events 13 months; aggregated metrics 24 months; deletion via partition drop + deletion log

- Recipients/transfers: BI vendor + support access; safeguard = SCCs/DPF as applicable; DPA link attached

- DPIA: triggered if adding cross-site tracking or profiling; record decision either way

That single row forces the right conversations: IP handling, join boundaries, retention, and vendor access.

For a clean enumerated list of required fields, ISMS.online reproduces Article 30 in a database-friendly way: https://www.isms.online/general-data-protection-regulation-gdpr/gdpr-article-30-compliance/.

International data transfers (EDPB): what counts as a transfer + safeguards

Transfers are where teams get stuck in the "EU hosting" trap.

A transfer happens when (1) you're subject to GDPR for the processing, (2) you disclose or make personal data available to another organization, and (3) that organization is in a non-EEA country or is an international organisation. (EEA = EU + Iceland, Liechtenstein, Norway.)

That means remote access and subprocessors trigger transfers, even if your primary database is in Frankfurt. If a US-based support vendor can access EU customer records, you've got a transfer scenario to govern.

Safeguards you'll actually use:

- Adequacy decisions (simplest), including the United States for commercial orgs participating in the EU-US Data Privacy Framework, plus the UK, Japan, Switzerland, and others.

- Appropriate safeguards like SCCs or BCRs.

- Derogations as a last resort (don't build a business on these).

Checklist that keeps you sane:

- List every vendor/subprocessor with potential access to EEA personal data.

- For each: country, access type (storage vs support access), safeguard (DPF/SCC/BCR), and where the contract lives.

- Make sure your RoPA rows reference the safeguard.
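
The three transfer criteria and the vendor checklist fit in a small script you can rerun whenever the subprocessor list changes. A minimal sketch, assuming vendor names and contract paths are hypothetical, every vendor is a separate organization receiving GDPR-covered data, and international organisations and onward transfers are not modeled:

```python
from dataclasses import dataclass

# EU member states plus Iceland, Liechtenstein, Norway (ISO 3166-1 alpha-2).
EEA = {
    "AT", "BE", "BG", "HR", "CY", "CZ", "DK", "EE", "FI", "FR", "DE", "GR", "HU", "IE", "IT",
    "LV", "LT", "LU", "MT", "NL", "PL", "PT", "RO", "SK", "SI", "ES", "SE", "IS", "LI", "NO",
}


def is_transfer(subject_to_gdpr: bool, made_available_to_other_org: bool, recipient_country: str) -> bool:
    """The three criteria above, reduced to two booleans plus the recipient's country code."""
    return subject_to_gdpr and made_available_to_other_org and recipient_country.upper() not in EEA


@dataclass
class Vendor:
    name: str
    country: str         # where the vendor stores or accesses the data from
    access: str          # "storage" or "support access"
    safeguard: str = ""  # "adequacy", "DPF", "SCCs", "BCRs"; blank if unknown
    contract_link: str = ""


def transfer_gaps(vendors: list[Vendor]) -> list[str]:
    gaps = []
    for v in vendors:
        if not is_transfer(True, True, v.country):
            continue
        if not v.safeguard:
            gaps.append(f"{v.name} ({v.country}, {v.access}): no safeguard recorded")
        elif not v.contract_link:
            gaps.append(f"{v.name} ({v.country}): {v.safeguard} claimed but no contract on file")
    return gaps


vendors = [
    Vendor("EU hosting", "DE", "storage"),
    Vendor("US support desk", "US", "support access"),
    Vendor("BI vendor", "US", "storage", "DPF", "contracts/bi-dpa.pdf"),
]
print(transfer_gaps(vendors))
```

Note that the US support desk is flagged even though the primary database is in Germany: support access is enough.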

Vendor due diligence: how to verify "GDPR-compliant" claims (procurement checklist)

"100% compliant" with no artifacts is marketing. Compliance is provable or it's not.

Demand this from any database vendor, cloud vendor, or SaaS tool that touches personal data:

- DPA (Data Processing Agreement) you can sign

- Subprocessors list with countries and purposes

- Data residency options (if offered) and what they cover (prod + backups + support access)

- Security controls: encryption at rest/in transit, access controls, logging, key management

- Transfer mechanism: SCCs, DPF participation, or equivalent safeguards

- DSAR support: how they help you delete/export data on request (and timelines)

- Breach process: notification timelines and contact path

- Evidence you can export: IAM exports, audit logs, admin action history, retention settings

Hard stance: if a vendor can't give you a DPA and a subprocessor list, treat them like a toy. Keep personal data out.

Knack's GDPR documentation is a good model for "evidence-forward" vendor pages: https://www.knack.com/gdpr/.

Budget reality (so procurement doesn't get surprised): pricing usually splits into (1) base platform cost and (2) security/compliance add-ons. Expect to pay extra for SSO/MFA enforcement, audit log export, longer log retention, dedicated support, and signed DPAs. Typical patterns by category:

- No-code/internal tools: ~$20-$200/user/month; SSO/audit logs often gated to higher tiers.

- Data warehouses/managed databases: usage-based (storage + compute) plus paid features for advanced security/auditing in some tiers.

- SIEM/log platforms: usage-based (GB/day ingestion) and can become your biggest compliance line item if you log everything without filtering.

If you mean a GDPR-compliant B2B contact database (for outreach)

This is where people get sloppy: "It's B2B, so GDPR doesn't apply." It applies when you process personal data - and work emails and direct dials are personal data.

The clean way to think about outreach is: lawful basis + transparency + opt-out + proof. If you can't do those four, don't run outbound. You'll burn deliverability, annoy the wrong people, and create a compliance mess you can't document.

In practice, the risk isn't just the CRM record. It's the whole flow of GDPR compliant sales data moving through enrichment, sequencing, exports, and suppression lists. If any step can't prove sourcing, lawful basis, or opt-out enforcement, your "compliant" story collapses.

Lawful basis for outreach: when legitimate interests fits (and when it doesn't)

Legitimate interests fits when:

- you've got a narrow ICP and a specific outreach purpose

- the privacy impact is limited and reasonable in context

- you provide clear notice and an upfront opt-out

- you honor opt-outs fast and permanently (suppression hygiene)

It doesn't fit when:

- you blast huge lists with no targeting

- you can't explain why you chose that person

- you can't honor opt-outs within 48 hours

- you can't document sourcing category at a high level

Use this / Skip this (hard rules)

Use a B2B contact database if:

- you can document a legitimate interests assessment (purpose/necessity/balancing)

- you can send a compliant notice (or at least a clear first-touch disclosure)

- you've got suppression lists wired into every tool that sends messages

- you can delete contacts across your CRM, sequences, and exports on request

Skip it if:

- you can't explain where the data category came from (even broadly)

- you can't keep suppression lists clean across tools and teammates

- you're relying on "we'll delete later" workflows

- you don't have a real owner for DSAR/erasure tickets

"Opt-in database" claims: useful, but not the whole story

Opt-in is one lawful approach, and it can reduce complaints. But it's not the only path. Plenty of compliant B2B outreach runs on legitimate interests - when it's targeted, transparent, and opt-out is immediate and enforced everywhere.

The label doesn't matter. Your process does.

Proof checklist for "GDPR-compliant leads" vendors (what to demand)

Ask for proof, not slogans, especially if you're buying lead data that will be exported into multiple tools.

- Signed DPA available

- Published subprocessors and countries

- Clear opt-out mechanism (and confirmation it's enforced globally)

- Transfer safeguards (SCCs / DPF / adequacy path)

- Data sourcing transparency (high-level is fine; secrecy isn't)

- Data freshness policy (stale data increases complaints and bounces)

- Suppression list handling (so opt-outs don't re-enter later)
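
Suppression failures usually happen at import time: a new enrichment file or vendor sync quietly brings back someone who opted out. A minimal sketch, assuming one shared suppression set and case-insensitive email matching (a simplification); the 48-hour window is this article's target, not a GDPR-defined deadline:

```python
from datetime import datetime, timedelta, timezone

OPT_OUT_SLA = timedelta(hours=48)  # this article's target, not a statutory deadline


def normalize(email: str) -> str:
    return email.strip().lower()


def record_opt_out(suppressed: set[str], email: str) -> None:
    suppressed.add(normalize(email))


def filter_import(leads: list[str], suppressed: set[str]) -> tuple[list[str], list[str]]:
    """Split an incoming list (enrichment, CSV import, vendor sync) into sendable and suppressed."""
    kept, dropped = [], []
    for email in leads:
        (dropped if normalize(email) in suppressed else kept).append(email)
    return kept, dropped


def within_sla(received_at: datetime, enforced_at: datetime) -> bool:
    """True if the opt-out was enforced in every sending tool within the SLA window."""
    return enforced_at - received_at <= OPT_OUT_SLA


suppressed: set[str] = set()
record_opt_out(suppressed, " Bob@Example.com ")
kept, dropped = filter_import(["alice@example.com", "bob@example.com", "BOB@example.com"], suppressed)
print(kept, dropped)  # ['alice@example.com'] ['bob@example.com', 'BOB@example.com']
```

Run the same filter on every path into a sending tool, not just the CRM, or opt-outs will leak back in through side doors.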

A practical tool note (because someone always asks)

In our experience, the fastest way to keep outbound "clean" is to pick a data vendor that doesn't make you chase paperwork, and then be ruthless about suppression lists across every sender and export.

Prospeo comes up a lot here because it's the B2B data platform built for accuracy, it's GDPR compliant, it enforces global opt-out, and DPAs are available. We've used it for targeted list-building where the real win wasn't "more leads" - it was fewer bounces and fewer messy edge cases when someone asks, "Where'd we get this contact, and can we delete them everywhere today?"

Two common architecture traps (and how to avoid them)

If you're considering separate EU databases: what breaks

Teams love the idea of "just run a separate EU database." Sometimes it's right. Often it creates new problems you didn't budget for:

- Schema drift: EU and non-EU schemas diverge, then your app behaves differently by region.

- Reporting chaos: analytics becomes a reconciliation project instead of a dashboard.

- Incident response slows down: you now have two sets of logs, two restore paths, two runbooks.

- Access governance gets harder: more roles, more secrets, more places to forget to revoke access.

If you go multi-region, do it deliberately: one migration pipeline, one schema source of truth, one access model, and a documented transfer/access story for support teams.

If you're a small org living in spreadsheets: minimum viable controls

If you're a school, nonprofit, or tiny team with spreadsheets and a couple SaaS tools, don't overbuild. Do the minimum that reduces risk:

- Put spreadsheets in a controlled workspace with MFA and restricted sharing.

- Encrypt devices and use managed accounts (no personal Gmail).

- Keep a simple system inventory (even 10 rows is fine).

- Write a one-page retention rule ("delete after X months") and follow it.

- Create a basic DSAR mailbox + ticket template and practice one request end-to-end.

Small teams get in trouble not because they lack fancy tooling, but because they can't answer basic questions quickly.

FAQ

Is there such a thing as a "GDPR-certified" database?

No. GDPR doesn't certify databases as compliant products. Compliance depends on your processing controls: lawful basis, minimization, retention, access controls, audit logs, DSAR/erasure workflows, transfer safeguards, and evidence you can export within 24 hours.

Do I need to host my database in the EU to be GDPR compliant?

No. EU hosting doesn't equal compliance, and non-EEA hosting can still be compliant with the right safeguards. In practice, you need documented subprocessors/remote access plus a transfer mechanism like adequacy (for example, EU-US DPF) or SCCs, alongside strong security controls.

How can I honor right-to-erasure if data exists in backups?

Delete from live systems promptly, then handle backups via deletion or "beyond use" controls when immediate deletion isn't possible. Disclose overwrite timelines (commonly 30/60/90 days), keep backups encrypted and access-restricted, and maintain a deletion log so restores re-apply deletions.

What evidence should a vendor provide to prove GDPR compliance?

A vendor should provide a signed DPA, a subprocessors list (with countries/purposes), transfer safeguards (SCCs/DPF), DSAR support steps, and exportable proof like audit logs, admin history, and retention settings. If they can't produce those artifacts in 1-2 business days, don't put personal data in the tool.

What's a good free option for GDPR-compliant B2B contact data?

For small outbound tests, use tools that include opt-out handling and a DPA path. Prospeo's free tier includes 75 emails plus 100 Chrome extension credits per month. Pair it with strict suppression-list hygiene and a 48-hour opt-out SLA in your outbound tools.


Summary: what "compliant" looks like in real life

A GDPR compliant database is the one where you can point to (1) a living RoPA and system inventory, (2) tight access governance with audit logs you can export, (3) retention and deletion that includes backups, and (4) transfer and vendor paperwork that matches reality.

If you can't produce that evidence fast, you don't have compliance. You've got hope.