Search Intent Data in 2026: The Datasets, Signals, and Workflows That Make It Actionable

Everyone says they "use intent." Search intent data is where that claim either turns into a system you can defend in a room full of skeptics, or it collapses into a spreadsheet of vibes.

Treat intent like a dataset you can audit: evidence, timestamps, baselines, and a refresh cadence. If you can't explain why a label exists, you don't have intent data. You've got guesses with formatting.

What you need (quick version)

This is the minimum setup that works without turning into a six-month taxonomy project.

Your intent data checklist (keep it boring and measurable):

- One source of truth for queries/topics (GSC for SEO; topic feeds for B2B)

- A branded vs non-branded split

- Multi-intent labeling (more than one label per query/topic when the SERP is mixed)

- Validation tied to outcomes (CTR + conversions)

- A refresh cadence (SERPs drift; buyer research shifts)

Do these 3 things this week:

- Export GSC queries and split branded vs non-branded.

- Label intent at scale using rules first, then clustering for the messy middle.

- Validate with CTR + conversions, then refresh monthly (quarterly for stable head terms).

Once you have intent-qualified accounts/leads, you still need verified contacts to act.

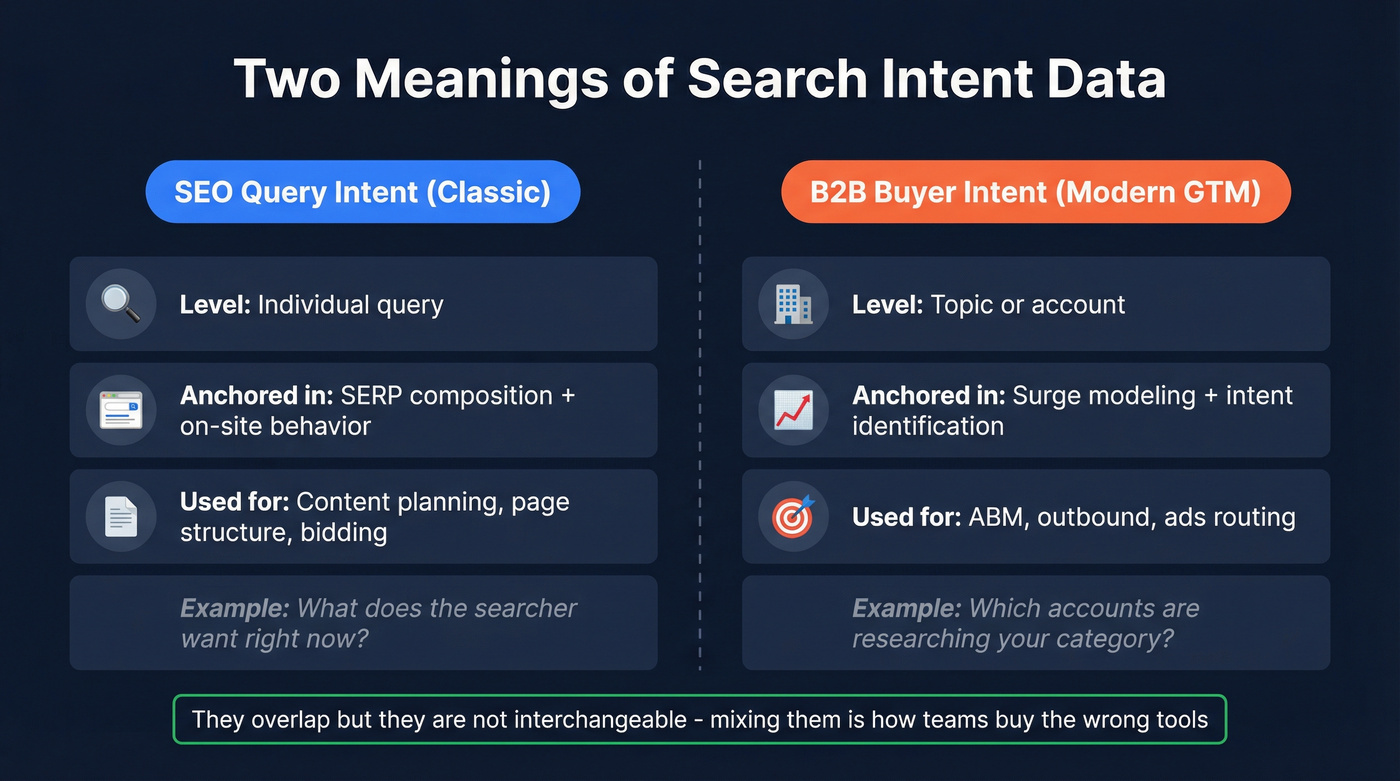

What "search intent data" means (two meanings)

This phrase gets used in two different ways, and mixing them is how teams buy the wrong tools and measure the wrong outcomes.

Meaning #1: SEO query intent (classic).

Query-level intent: what the searcher wants right now. You use it to decide what to publish, how to structure a page, what to put above the fold, and what to bid on.

Meaning #2: B2B buyer intent signals (modern GTM).

Topic-level intent: which accounts are researching your category. You use it to route signals into ads, outbound, and ABM plays, often using surge data to detect which accounts spiked on specific topics over a defined window.

They overlap, but they're not interchangeable:

- SEO intent is query-level and anchored in SERP composition + on-site behavior.

- Buyer intent is topic-level and anchored in surge modeling + intent identification.

Definition box: search intent data (practical definition)

Search intent data is a structured record of what people are trying to accomplish when they search, captured as query/topic signals plus evidence (SERP and engagement outcomes) and used to drive SEO decisions or B2B go-to-market actions.

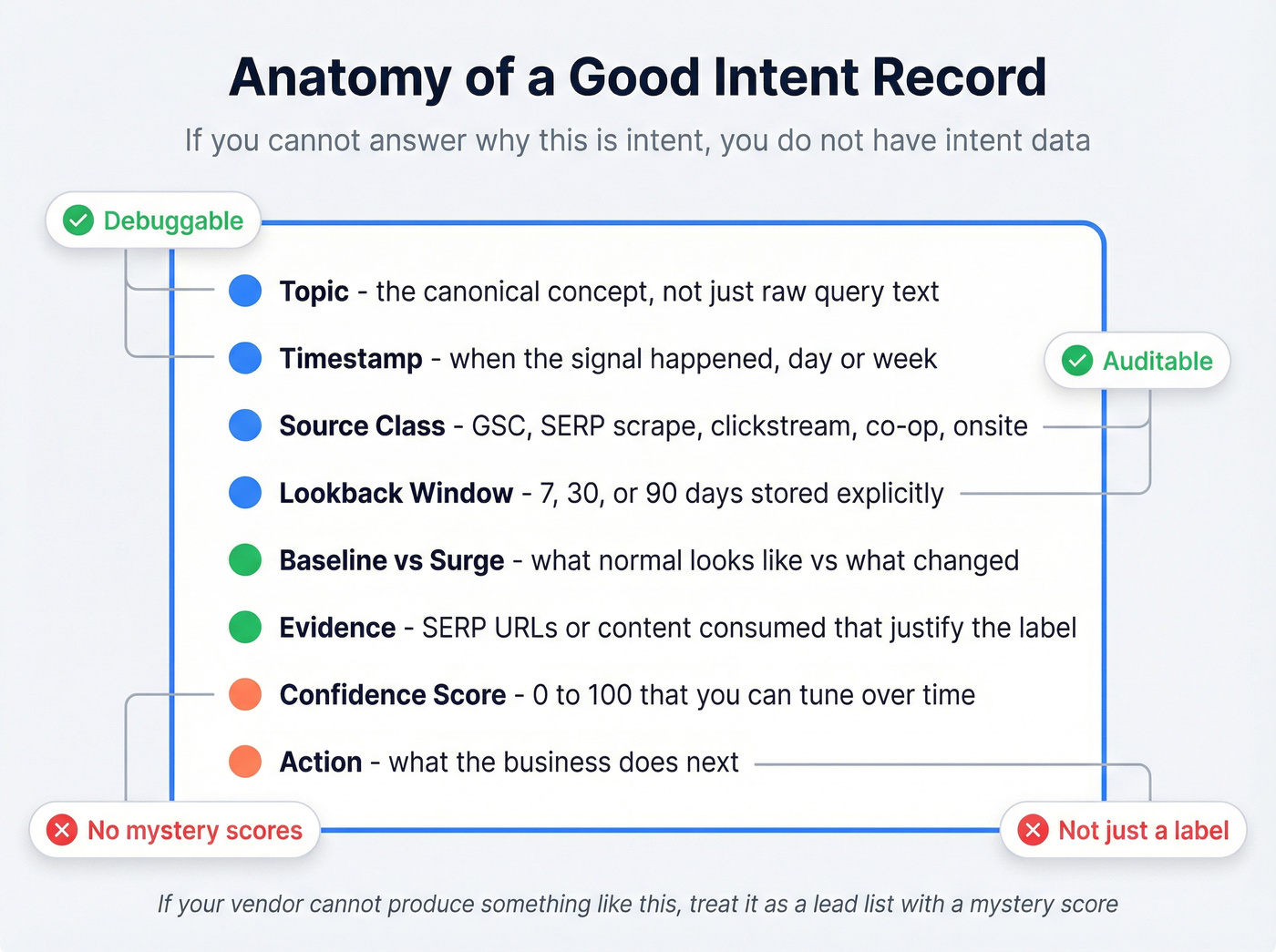

The intent dataset you actually need (fields + quality bar)

Most "intent datasets" fail for one reason: they're not debuggable. If you can't answer "why do we believe this is intent?" you don't have intent data - you have a label.

Good intent records include:

- Topic: the canonical concept (not just raw query text)

- Timestamp: when the signal happened (day/week)

- Source class: GSC, SERP scrape, clickstream, co-op, on-site behavior, etc.

- Lookback window: 7/30/90 days (stored explicitly)

- Baseline vs surge: what "normal" looks like vs what changed

- Evidence: SERP URLs, landing pages, or content consumed that justify the label

- Confidence score: 0-100 you can tune over time

- Action: what the business does next

Mini schema (starting point):

entity_type: query | topic | account | personentity_id: keyword string | topic_id | domain | hashed identifierintent_labels: [informational, commercial, transactional, navigational] (multi-label allowed)source_class: GSC | Ads | SERP | clickstream | co-op | onsite | emailwindow_days: 7 | 30 | 90baseline_value: numericcurrent_value: numericsurge_pct: numericevidence: [url1, url2, ...]confidence: 0-100action: create content | update page | bid | route to SDR | run ads

One filled-out intent record (what "auditable" looks like)

Below is a single record you can hand to SEO, RevOps, and Sales without starting a debate.

entity_type: query_cluster

entity_id: "crm automation software" # canonical cluster name

intent_labels: ["commercial", "transactional"] # mixed SERP

source_class: "SERP+GSC"

timestamp_week: "2026-02-02"

window_days: 28

baseline_value: 4200 # impressions / 28d (prior period)

current_value: 6100 # impressions / 28d (current)

surge_pct: 45.2

evidence:

- "https://example.com/serp-snapshot/crm-automation-2026-02-02"

- "https://competitor-a.com/pricing"

- "https://competitor-b.com/compare"

confidence: 84

action:

- "Update comparison page: add pricing table + migration section"

- "Create 'CRM automation pricing' child page and internal-link it"

- "Bid on cluster in paid search with 'demo' landing page"

If your vendor or internal model can't produce something like this (even without the exact URLs), don't treat it as intent. Treat it as a lead list with a mystery score.

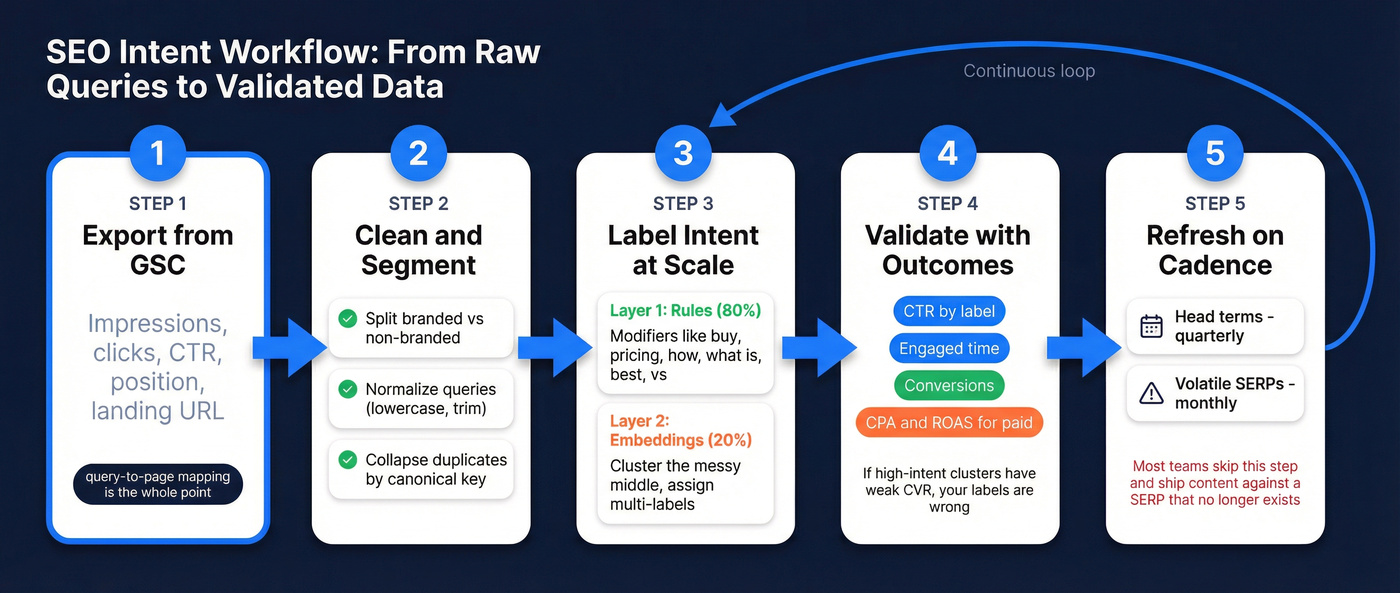

SEO workflow: GSC -> label -> validate -> refresh

This workflow produces a dataset that improves rankings and conversion rate, because it forces you to prove intent with outcomes.

You'll:

- export queries from Google Search Console,

- clean and segment them,

- label intent at scale,

- validate labels against performance and conversions, and

- refresh on a cadence.

Build the query export (and clean it first)

Export from Google Search Console -> Performance:

- impressions

- clicks

- CTR

- average position

- page (landing URL) when possible (query-to-page mapping is the whole point)

Clean step #1: split branded vs non-branded. Google's branded queries filter in GSC shows up for eligible properties. Use it when you have it, because branded queries inflate "intent" with navigational demand you already created.

Constraints that matter:

- It's limited to top-level properties (not URL-prefix properties or subdomain properties).

- It requires sufficient query volume.

If you don't have it, build a branded dictionary (brand name, product names, misspellings) and tag queries in your export.

Clean step #2: normalize queries. Lowercase, trim whitespace, remove obvious junk. Keep nuance. Don't aggressively stem everything into mush.

Clean step #3: collapse duplicates by canonical query. If you export across countries/devices, you'll get duplicates that behave the same. Keep dimensions, but store a canonical key.

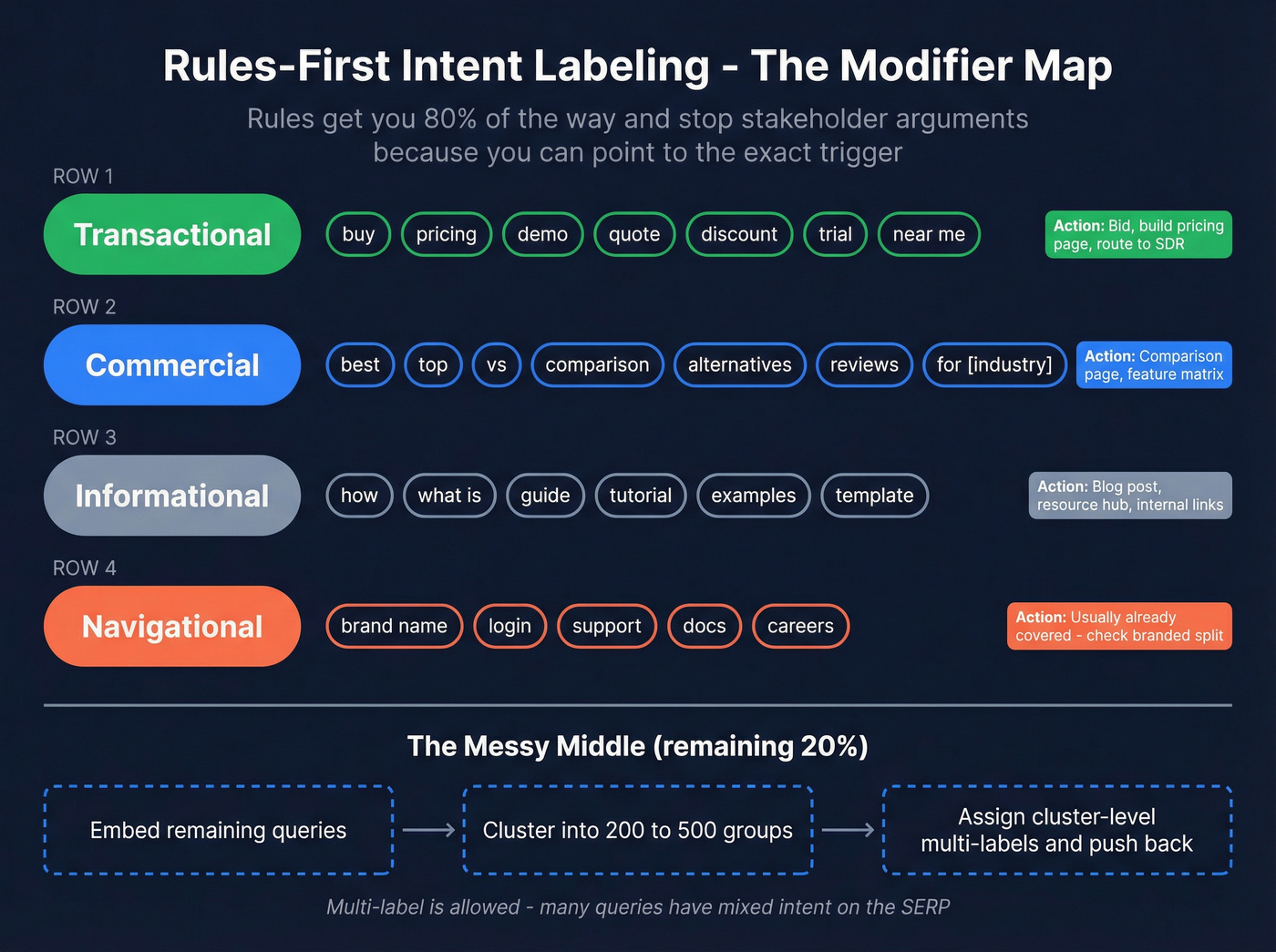

Label intent at scale (rules-first beats embeddings-first)

Here's a position I'll defend: for SEO, rules-first beats embeddings-first for the first 80%. It's faster, explainable, and easy to QA.

Layer 1: rules (fast, transparent). Use modifier rules that map cleanly to intent:

- Transactional: buy, pricing, demo, quote, discount, trial, "near me"

- Commercial investigation: best, top, vs, comparison, alternatives, reviews, "for [industry]"

- Informational: how, what is, guide, tutorial, examples, template

- Navigational: brand terms, login, support, docs, careers

Rules get you most of the way and stop stakeholder arguments because you can point to the exact trigger.

Layer 2: embeddings + clustering (the messy middle). Cluster the remaining queries so you review them in groups that mean the same thing.

A practical workflow:

- embed queries (optionally include top-ranking titles/snippets)

- cluster into 200-500 clusters depending on volume

- assign cluster-level intent labels (multi-label allowed)

- push labels back to individual queries

Validate intent alignment (make it earn its keep)

Validation is where "intent" stops being a taxonomy and becomes data you can trust.

Validation metrics (SEO):

- CTR by intent label (and by position bucket)

- engaged time (GA4 engagement metrics)

- bounce rate is noisy, so use it only directionally

- conversions (macro + micro: demo request, signup, newsletter, product click)

Then ask the only questions that matter:

- Are commercial queries landing on informational pages? Fix the landing page or build the missing page.

- Are transactional queries landing on comparisons? Add a direct path to pricing/demo.

- Are informational pages driving assisted conversions? Keep them, but tighten internal linking.

Validation metrics (paid search):

- CVR

- CPA

- ROAS

Paid search exposes bad labels fast. If your "high intent" cluster has weak CVR and ugly CPA, your labels are wrong or your landing page doesn't match the job.

Refresh cadence (the part teams skip)

SERPs drift. AI Overviews shift click behavior. Competitors change positioning. If you don't refresh, your labels become historical fiction.

Use a mixed cadence:

- Quarterly for stable head terms

- Monthly for volatile SERPs and fast-moving categories

Most teams fail here. They do one labeling sprint, celebrate, and ship content for months against a SERP that no longer exists.

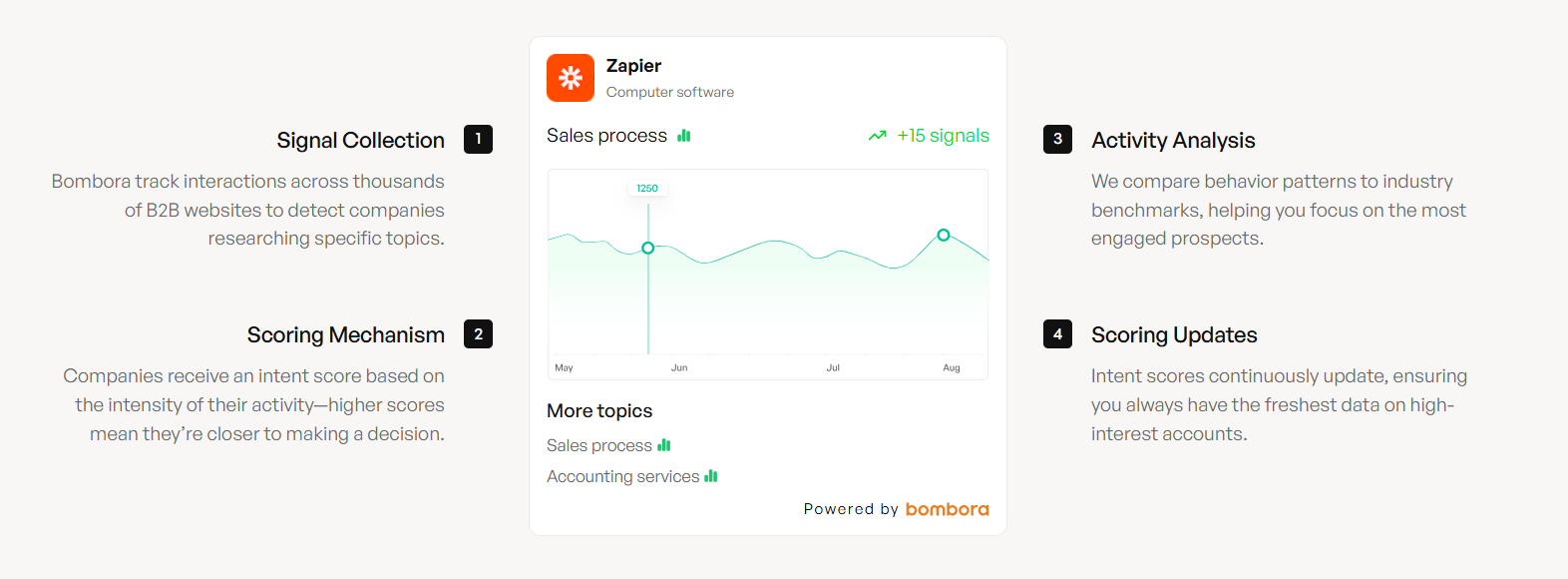

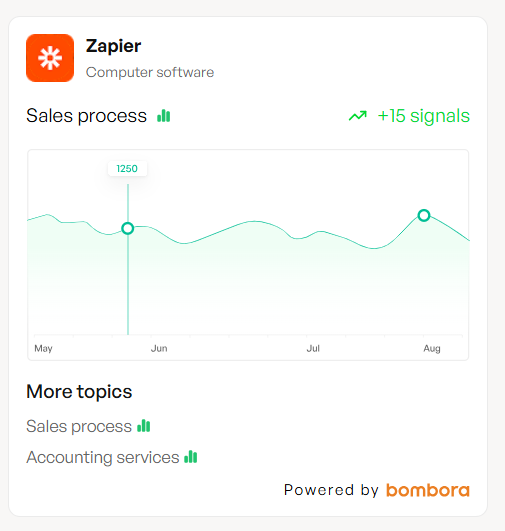

You built the intent dataset, labeled the queries, validated with conversions. Now you have surging accounts - but no verified contacts to reach. Prospeo tracks 15,000 intent topics via Bombora and pairs them with 143M+ verified emails at 98% accuracy, refreshed every 7 days.

Turn intent signals into booked meetings, not stale spreadsheets.

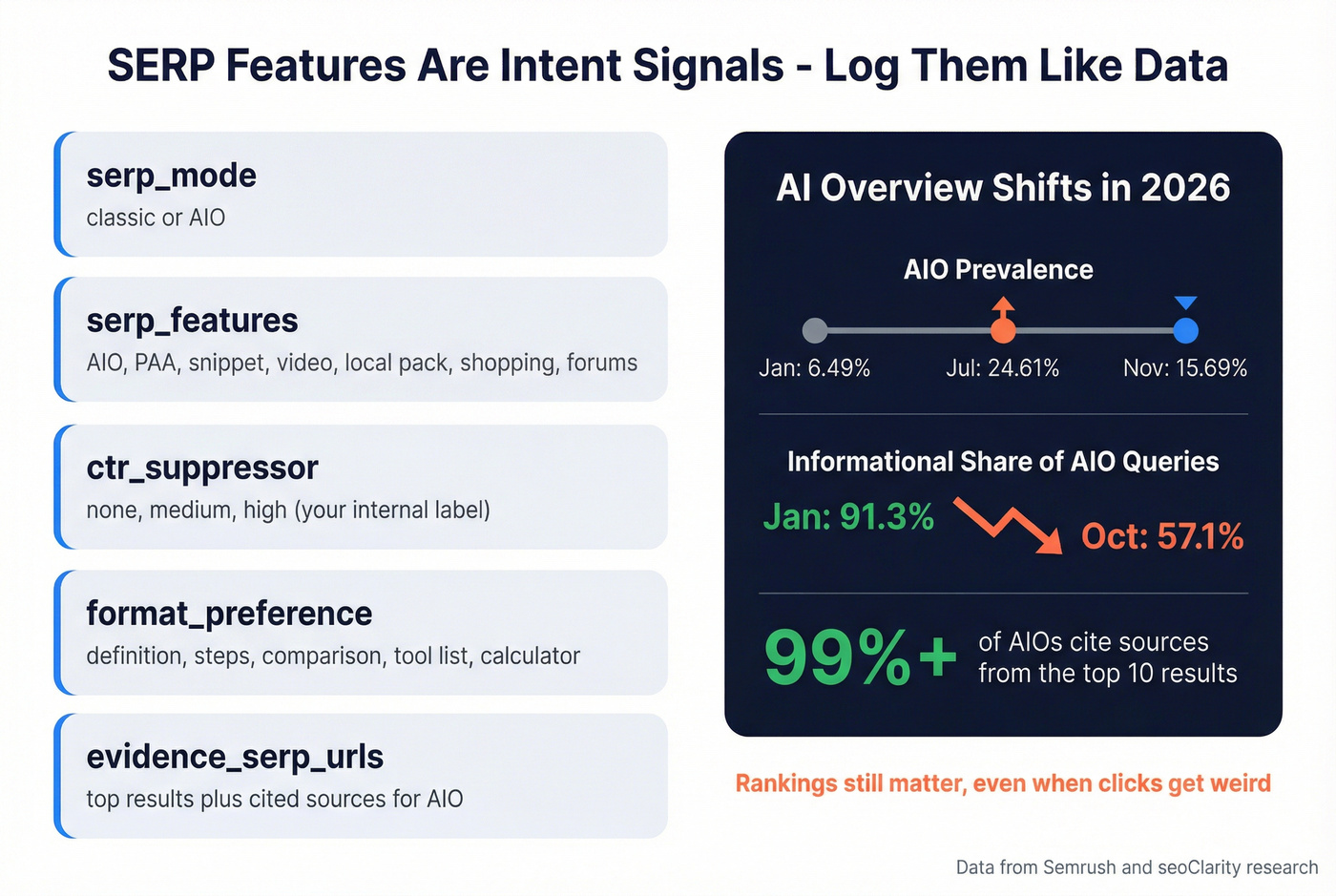

SERP features are now intent signals (AI Overviews, CTR suppression, and logging)

In 2026, the SERP isn't "ten blue links." It's a layout of modules, and those modules are intent signals you can store.

Semrush tracked AI Overview prevalence across 10M+ keywords, moving from 6.49% (Jan 2026) to 24.61% (Jul 2026), then down to 15.69% (Nov 2026). That kind of volatility is exactly why your refresh loop matters.

Even more important: AI Overviews stopped being purely top-of-funnel. Semrush saw informational share of AIO-triggering queries drop from 91.3% (Jan 2026) to 57.1% (Oct 2026), while commercial and transactional rose.

seoClarity's 432k-keyword dataset adds the tactical takeaway: >99% of AIOs cite sources from the top 10 results. Rankings still matter, even when clicks get weird.

What to log (turn SERP layout into fields)

If you want SERP features to become a usable dataset, store them like any other signal:

serp_mode: classic | AIOserp_features: [AIO, PAA, snippet, video, local_pack, shopping, forums]ctr_suppressor: none | medium | high (your internal label)format_preference: definition | steps | comparison | tool_list | calculator | templateevidence_serp_urls: top results + cited sources (for AIO)

This changes how you plan content. You stop asking "what's the intent label?" and start asking "what format wins in this SERP layout?"

Treat AIO as a CTR suppressor (and plan around it)

AIO presence is a practical modifier: it often reduces CTR for the entire page-one set, especially on definitional queries.

So do two things:

- Set expectations: your CTR baseline for AIO-heavy clusters is lower. Don't call it a content failure when clicks drop but impressions rise.

- Win the downstream: optimize for conversion paths that don't require a click from every impression: brand recall, retargeting pools, email capture, and "next-step" internal links for the clicks you do get.

Mini example: one cluster, two SERP layouts, two actions

Cluster: "endpoint security pricing"

- Week A SERP: AIO + "People Also Ask" + 2 pricing pages in top 5

intent_labels: transactional + commercialformat_preference: pricing table + FAQ + procurement notes- Action: build a pricing explainer page and add a "request quote" path above the fold.

Cluster: "what is endpoint security"

- Week B SERP: AIO + featured snippet + glossary results

intent_labels: informationalformat_preference: definition + steps + diagram- Action: publish a glossary-style page and internal-link to "pricing" and "comparison" children.

Same category. Different SERP. Different job. That's why logging SERP features belongs inside your dataset.

Search intent vs solution intent vs prompt intent (2026 taxonomy)

Look, "search intent" isn't enough anymore. In 2026, you also need to label how the user expects the answer to be delivered, because SERP modules and generative answers reward format as much as they reward relevance.

Add three fields to your schema:

intent_stage: learn | evaluate | buyintent_mode: search | solution | promptformat_preference: the output shape that wins

1) Search intent (classic): "best CRM automation tools"

intent_stage: evaluateintent_mode: searchformat_preference: comparison list + pros/cons + pricing

2) Solution intent (job-to-be-done): "CRM automation workflow template"

intent_stage: learn -> evaluate (bridges)intent_mode: solutionformat_preference: template + downloadable + examples

3) Prompt intent (generative): "Create a 7-step CRM automation plan for a 10-person sales team"

intent_stage: learnintent_mode: promptformat_preference: step list + checklist + copy/paste blocks

Prompt intent is where formatting becomes the strategy: tight definitions, structured steps, and reusable blocks that AI Overviews can cite.

Mixed intent is normal (stop forcing one label per keyword)

Here's the thing: single-label intent is a reporting convenience, not reality. If you force one label per query, you'll mis-route content decisions and misread performance.

BloomIntent's research on intent-level evaluation found 72% agreement between automated intent-level assessments and expert evaluators. That's strong enough to operationalize, as long as you allow multi-intent and keep evidence.

A clean way to handle mixed intent:

- store multiple labels

- store SERP composition (what Google is serving)

- store landing-page outcomes (what users reward)

Example: "CRM automation"

- Informational: "what is CRM automation"

- Commercial investigation: "best CRM automation tools"

- Transactional: "HubSpot automation pricing"

- Navigational: "Salesforce automation setup"

If your dataset allows multi-labels, you can see which intent your page actually satisfies, and which intent you're ignoring.

B2B workflow: surge -> identity -> route -> measure

Buyer intent is where search intent data turns into revenue motion, and it's also where vendors love to hide behind opaque scoring. The workflow that holds up in production is simple: detect surge, resolve identity, route actions, measure lift.

I've seen teams spend six figures on intent, then route every "spike" straight to SDRs with no frequency cap. Two weeks later, reps ignore the alerts, marketing gets blamed, and the tool becomes shelfware. That's not a data problem. It's a workflow problem.

Where buyer intent signals come from

Four buckets cover almost everything:

- First-party: website sessions, pricing page views, docs usage, email clicks, webinar attendance, form fills

- Second-party: partner signals (publisher or ecosystem partners sharing aggregated behavior)

- Third-party: topic research across a network, review-site activity, content consumption

- Zero-party: explicit self-reported data (surveys, quizzes, "tell us your role" flows)

How platforms know it's intent (surge above baseline)

Most platforms model intent as surge above baseline - the same core mechanic behind most B2B surge data products.

- Topic without time window = fit.

- Time window without topic = activity.

- Surge without baseline = volume.

A clean internal representation looks like:

account = acme.comtopic = endpoint securitywindow = 14 daysbaseline = 3 signals / 14dcurrent = 11 signals / 14dsurge = +266%confidence = 82

Identity resolution in plain English

Identity resolution connects anonymous behavior to an account or person. In practice, this is the intent identification step that determines whether a "topic spike" becomes something Sales can actually act on.

Common primitives:

- HEM (hashed email match)

- MAID (mobile advertising ID)

- Identity graph (mapping devices/cookies/hashed IDs to accounts)

What you get out the other side:

- Account-level intent: "this company is in-market."

- Person-level intent: "this person is researching."

Clear position: account-level intent is the default. Use person-level only when you have governance and a specific activation plan.

Activation path (routing rules that don't create chaos)

Activation is where intent stops being a dashboard.

A practical routing model:

- Tier A surge (high confidence + high fit): SDR sequence + retargeting ads + sales alert

- Tier B surge: ads + nurture + monitor

- Tier C: suppress from action; keep for reporting

Two rules prevent spam and distrust:

- Frequency cap: don't re-activate the same account every week because a score wiggles.

- Evidence requirement: store topics + time window + evidence categories/URLs so reps trust the signal.

This is where enrichment + verification tools (like Prospeo) attach reachable contacts to in-market accounts.

Intent sources compared (SEO vs clickstream vs buyer intent)

Not all "intent" is created equal, and it's definitely not all "who searched what."

Similarweb's methodology is a useful mental model because it breaks sourcing into Direct Measurement, Contributory Network, Partnerships, and Public Data Extraction.

| Source type | Granularity | Strengths | Biases/limits | Best for | Typical cost range |

|---|---|---|---|---|---|

| GSC (first-party) | Query + page | Real demand | Only your site | SEO wins | $0 |

| SERP scraping | Query + SERP | Evidence URLs | Volatile SERPs | Intent QA | $50-$500/mo |

| Clickstream | Domain/topic | Market view | Panel bias | Market intel | $500-$5k/mo |

| Buyer intent | Topic/account | Surge model | Opaque scoring | ABM routing | $30k-$100k+/yr |

| First-party web | Person/session | High signal | Needs volume | Scoring | $0-$500/mo |

What "panel bias" means in practice (and how to sanity-check it)

Panel bias shows up as over-representation of certain geos, devices, industries, or browsing contexts. In practice, it creates two common failure modes:

- You "discover" demand that's real for the panel but weak for your ICP.

- You miss demand that's strong in your ICP but under-sampled.

Sanity-check clickstream against GSC:

- Pick 20-50 non-branded topics you already rank for.

- Compare directionality: are both showing the same up/down trend over 8-12 weeks?

- If clickstream says a topic is exploding but your impressions stay flat, treat it as market intel, not an SEO roadmap.

When to buy vs build (decisive guidance)

- Build with GSC when you need query-level truth and you care about page decisions.

- Buy SERP scraping when you need evidence at scale (features, cited sources, competitor layouts).

- Buy clickstream when you need category and competitor context (share-of-search proxies, traffic shifts).

- Buy buyer intent when you have an activation engine (ads audiences, SDR capacity, routing rules). If you don't, you're buying a dashboard.

Privacy & compliance reality for third-party intent (TCF v2.3 + governance)

If you buy third-party intent, governance is part of the product. Treat it that way.

TCF v2.3 timeline:

- Released: Jun 19, 2026

- Transition ends: Feb 28, 2026

- After the deadline, TC strings created without the disclosedVendors segment are invalid (strings created before the deadline without it remain valid).

- disclosedVendors becomes mandatory so vendors can determine whether they were disclosed in the CMP UI.

Now add the legal reality: a TCF string can be treated as personal data when identification is reasonably likely, and joint controllership risk exists around parts of the framework. Your legal team won't treat "intent" as magic. They'll treat it as data processing.

What to ask vendors (copy/paste this into procurement)

Contract + governance

- DPA available, and whether they sign your DPA

- Sub-processor list (current + change notification policy)

- Data retention defaults and whether you can set retention by source class

- Data subject rights workflow (access/delete/opt-out) and SLA

Collection + consent

- Exact source classes used (co-op, bidstream, clickstream, first-party, public extraction)

- CMP disclosure mechanics: how disclosedVendors is handled and validated

- Whether they process person-level identifiers, and under what lawful basis

Activation controls

- Can you restrict to account-level only?

- Can you suppress accounts/people and propagate suppression downstream (CRM, ads, sequences)?

- Can you export evidence categories/URLs tied to each signal?

Security

- Data residency options

- Encryption at rest/in transit

- Access controls and audit logs

Governance isn't paperwork. It's how you prevent "intent" from becoming an un-auditable blob that nobody wants to own.

Evidence > intent score (how to evaluate any intent dataset)

The #1 practitioner complaint is opaque scores. They're right, and I'm honestly tired of watching teams get sold "AI intent" that can't answer basic questions like "what did the account actually do?"

Bombora's G2 reviews repeatedly call out a limitation: no individual-level data, which makes targeted outreach harder. 6sense gets praised for segmentation and alignment, but the learning curve shows up constantly in G2 feedback. Practitioners want evidence, not mystery numbers.

My rule: if you can't store evidence URLs or evidence categories, skip it.

Holdout test mini-protocol (30-60 days, no excuses)

This is how you prove intent data creates pipeline instead of noise.

1) Choose the unit: account-level randomization. Don't randomize by lead; you'll contaminate results when multiple contacts exist per account.

2) Build your sample:

Minimum: 200 accounts per group (treatment vs control). Better: 500+ per group if your sales cycle is long or your conversion rate is low, because small samples make every weekly spike look like a miracle and every dip look like a disaster.

3) Randomize and lock the rules:

Treatment group: receives intent-based activation (ads audiences + SDR sequences + routing alerts).

Control group: business-as-usual (no intent-based activation). Freeze routing rules so you don't "optimize" mid-test.

4) Prevent contamination: Exclude control accounts from retargeting audiences and SDR sequences. If SDRs work named accounts, split ownership so the same rep doesn't work both groups.

5) Run for 30-60 days: 30 days for short cycles; 60 days for enterprise cycles.

6) Measure success (pick 2-3 primary metrics):

- meetings booked

- opportunities created

- pipeline $ created (or stage-2+ progression)

If the lift isn't there, the fix is almost always evidence quality, routing discipline, or ICP mismatch, not "more scoring."

Contrarian box: the "intent score" isn't the product

The product is the workflow: evidence -> routing -> measured lift. A score's just compression. If you can't decompress it into evidence, reps won't use it.

How to automate intent labeling (rules + open models + QA)

Automate intent labeling with guardrails, not vibes.

A practical stack:

- Rules first for obvious modifiers (transparent, fast).

- Open model second for scale.

One option: HuggingFace's Intent-XS model. Use it as a bootstrap multi-label classifier, then make it earn trust:

- run it on a sample

- measure precision/recall by label

- fine-tune on your own SERP-reviewed training set

QA loop (monthly):

- Sample 50-100 queries per label

- Manually review the SERP for each sample

- Validate against CTR, engaged time, conversions (and Ads CVR/CPA when relevant)

- Update rules and retrain/fine-tune quarterly

Cost reality (what "intent data" typically costs in 2026)

SEO intent tooling is cheap. Buyer intent is expensive because you're paying for collection, identity, and activation.

Typical ranges in 2026:

- SMB SEO stack: $50-$300/month (research, SERP tracking, reporting)

- SERP scraping / monitoring: $50-$500/month

- Clickstream tools: $500-$5,000/month

- Enterprise buyer-intent platforms: $30,000-$100,000+/year

Prospeo is worth calling out here because it sits where a lot of teams get stuck: you finally have a clean list of in-market accounts, and then you can't reach the right people without bouncing your domain into the sun. Prospeo is "The B2B data platform built for accuracy" with 300M+ professional profiles, 143M+ verified emails, and 125M+ verified mobile numbers, plus real-time email/mobile verification (98% email accuracy) and a 7-day refresh cycle, so your activation doesn't run on stale contact data.

Skip this if you don't have any activation motion yet. If nobody's running outbound, ads audiences, or lifecycle routing, intent data won't save you.

Your intent workflow routes surging accounts to SDRs. But if the emails bounce, the whole system breaks. Prospeo's 5-step verification and 7-day refresh cycle mean your reps reach real buyers - not spam traps. At $0.01 per email, bad data costs more than good data.

Stop routing intent-qualified accounts into a bounce rate graveyard.

FAQ about search intent data

Is search intent data the same as buyer intent data?

Search intent data is usually query-level intent for SEO (what the searcher wants on the SERP), while buyer intent data is topic-level research used for B2B routing (which accounts are in-market). In practice, SEO uses SERP composition + engagement, and B2B uses surge above baseline + identity resolution.

How do I separate branded vs non-branded intent in Google Search Console?

Use the branded queries filter in GSC's Performance report when it's available, then export branded and non-branded segments separately. If it's not available, tag branded queries using a dictionary (brand name, product names, misspellings) and keep that dictionary versioned so your reporting stays consistent.

What lookback window should I use for intent (7, 30, 90 days)?

Use 7 days for fast-moving SERPs and campaign monitoring, 30 days for most SEO and pipeline reporting, and 90 days for strategic planning and seasonality. Store the window in your dataset every time. Intent without a time horizon turns into permanent "fit" scoring.

Is clickstream data "who searched what"?

Clickstream data is aggregated behavioral measurement from panels, partnerships, and direct measurement sources, then modeled to estimate market behavior. It's strong for directional insights like demand trends and competitor shifts, but it's not a literal list of named people and exact searches.

How do I turn intent signals into outbound without wrecking deliverability?

Route signals into a tightly scoped list, cap frequency, and verify contacts before you send. Keep hard bounces under 2% and aim for under 1% on new lists; once you cross that line, domains get burned fast and "intent" turns into a deliverability incident instead of pipeline.

Summary: make search intent data auditable, then make it usable

The win isn't a prettier taxonomy. It's a system you can debug: timestamps, windows, baselines, evidence, and a refresh loop that keeps up with SERP drift.

Do that, and "intent" stops being a buzzword and starts being a lever you can pull on purpose.