Win-Loss Analysis: The Complete Playbook for 2026

Your VP of Sales pulls up the quarterly review. The CRM says you're "losing on price" - it's the top closed-lost reason for the third straight quarter. But when you actually talk to the buyers who walked away, 40% of those "losses" were no-decisions. The deals didn't go to a competitor. They went nowhere. And the ones that did? Buyers say it wasn't price. It was a discovery call that felt like a product demo.

That gap - between what your CRM says happened and what actually happened - is where win-loss analysis lives. It's wider than most teams realize. In Clozd's comparison against direct buyer feedback, the closed-lost reason in CRM was wrong 85% of the time, which means you're making strategy decisions on a foundation of garbage.

Here's the thing: fixing this doesn't require a six-figure budget or a dedicated team. It requires a system. This playbook gives you that system, whether you're starting from zero or trying to rescue a program that's gone stale.

What Is Win-Loss Analysis (And What It Isn't)

Win-loss analysis is a systematic process of interviewing buyers - both those who chose you and those who didn't - to understand the real reasons behind their decisions. The insights come from the buyer's perspective, not your team's assumptions.

It's not CRM reporting. CRM data tells you what reps entered in a dropdown. Buyer interviews tell you what actually happened in the buyer's mind during a six-month evaluation involving five stakeholders. Completely different thing.

It's not a deal post-mortem either. Post-mortems are internal, one-off reviews after a single deal. A structured win-loss program is external, systematic, and pattern-oriented. You're interviewing across dozens of deals to find trends that no single deal review would reveal.

The gap between internal perception and buyer reality is staggering: 50-70% of the time, sellers and buyers cite completely different reasons for lost deals. Sellers blame pricing and missing features. Buyers cite poor needs discovery, lack of differentiation, and slow response times.

Why Your CRM Data Is Lying to You

Let's be specific about how bad the problem is.

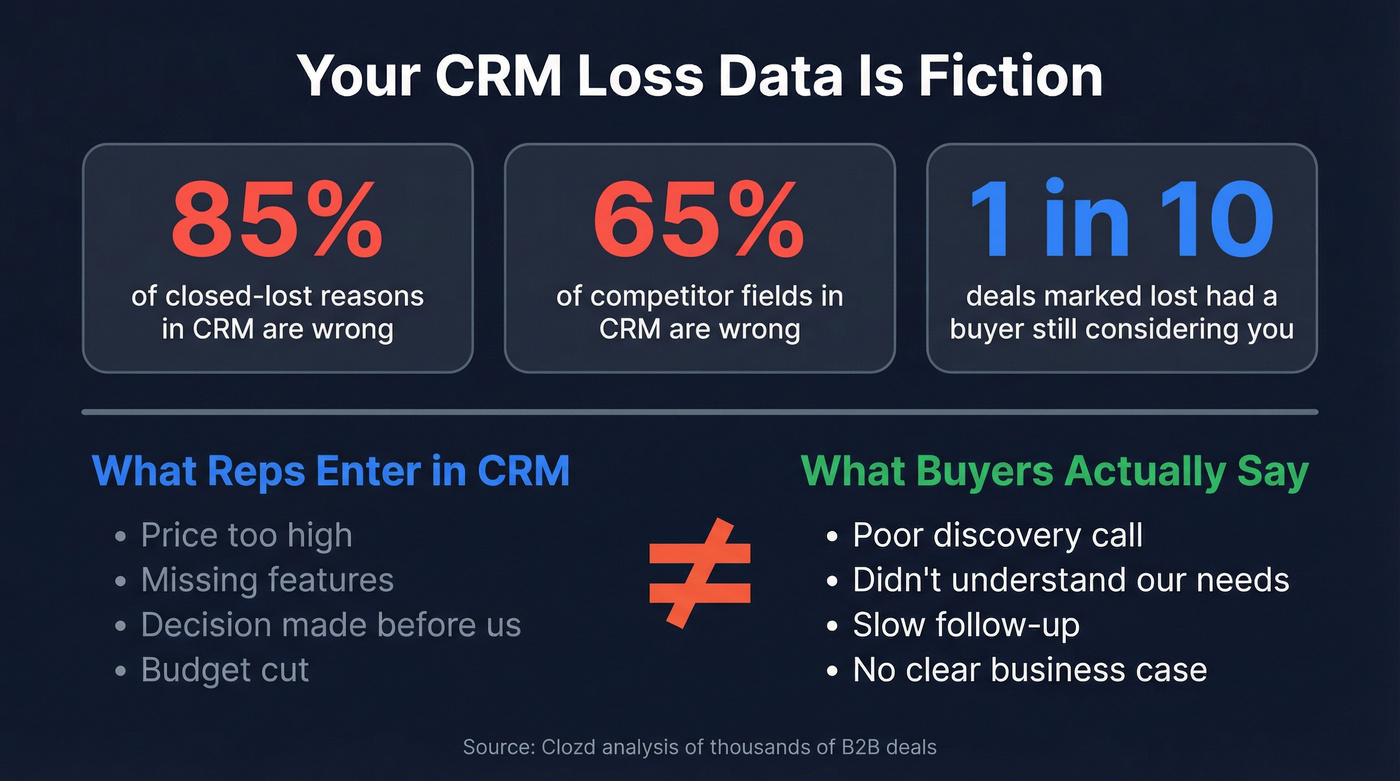

Clozd compared CRM data against direct buyer feedback across thousands of deals. The closed-lost reason in CRM is wrong 85% of the time. The competitor listed in CRM is wrong 65% of the time. You aren't losing to the competitor you think - frequently, you're losing to "no decision" or the status quo, but CRM doesn't reflect that.

And here's a stat that should make you uncomfortable: 1 in 10 deals marked as "lost" had a buyer who was still actively considering the vendor.

You're writing off deals that aren't even dead yet.

Why is CRM data so unreliable? Because reps fill in loss reasons that protect them. Price. Product gaps. "Decision was made before we got there." These are all factors outside the rep's control. Reps rarely cite their own performance, lack of follow-up, or failure to build a business case. That's human nature, not malice.

One product marketer in fintech nailed it on Reddit: CRM data ends up "incomplete, generic, or disconnected from any real GTM learnings." The blunt version? Your CRM loss reasons are a fiction your sales team writes to close out deals they don't want to think about anymore.

Surveys won't save you either. B2B survey response rates hover between 3% and 5%. A standard survey can't capture the nuance of a six-month sales cycle involving a buying committee of five people. You need conversations, not checkboxes.

More than 60% of the time, sales reps are wrong about why they win or lose. That's not a data quality issue. It's a structural blind spot that only buyer interviews can fix.

Win Rate Benchmarks You Should Know

Before you can improve your win rate, you need to know where you stand:

| Segment | Win Rate |
|---|---|
| Average B2B | ~21% |
| Enterprise (ACV >$100K) | 15-20% |
| Mid-market (ACV $50K-$100K) | 20-25% |
| Known contacts | 37% |
| Cold outreach | 19% |

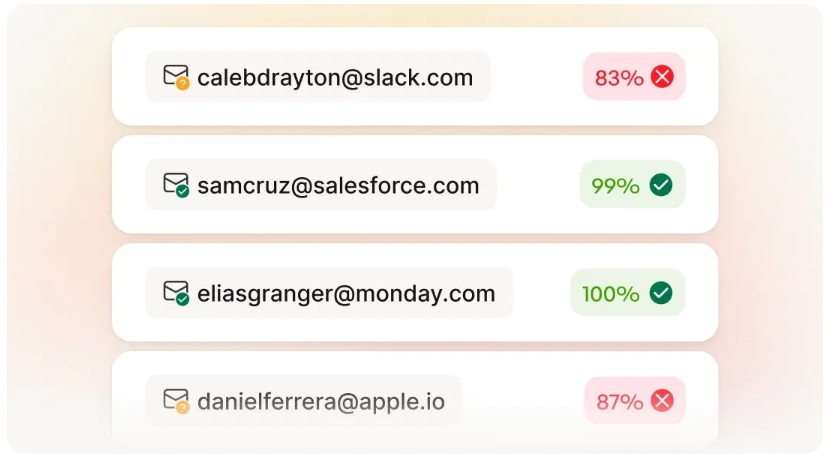

That known-contact number is striking. Deals where you already have a relationship convert at nearly double the rate of cold outreach. This alone justifies investing in data quality and relationship mapping - and it's one reason we've seen teams using Prospeo's enrichment workflows to map existing relationships before outbound even starts.

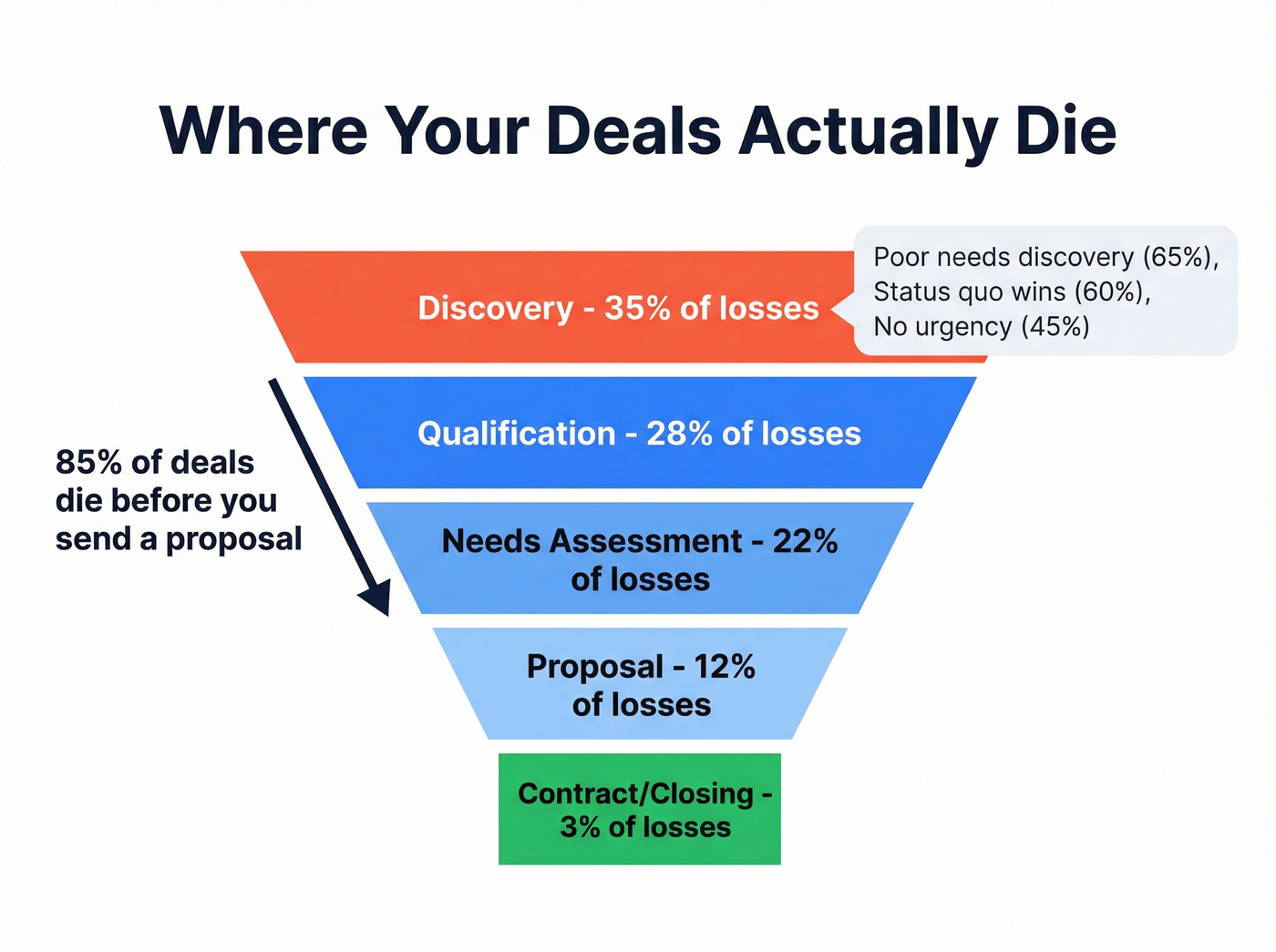

Here's where deals actually die:

| Stage | % of Losses |
|---|---|
| Discovery | 35% |
| Qualification | 28% |
| Needs Assessment | 22% |
| Proposal | 12% |
| Contract/Closing | 3% |

The majority of deals die before you ever send a proposal. Top reasons at the Discovery stage: poor discovery and not understanding buyer needs (65%), status quo wins (60%), and lack of urgency (45%).

This is why a thorough review of sales wins and losses matters more than sales pipeline management. You can't fix a leaky funnel by pouring more leads into the top. You fix it by understanding why 63% of your losses happen before you've even scoped the deal.
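Where does that 63% come from? It's the Discovery and Qualification rows added together. Here's a minimal sketch of the running total, using the shares from the table above. The function and variable names are illustrative, not part of any tool.

```python
# Loss shares by stage, copied from the table above (percent of all losses).
# Dict order matches funnel order, earliest stage first.
LOSS_SHARE_BY_STAGE = {
    "Discovery": 35,
    "Qualification": 28,
    "Needs Assessment": 22,
    "Proposal": 12,
    "Contract/Closing": 3,
}


def cumulative_loss_share(shares: dict[str, int]) -> dict[str, int]:
    """Return the running total of losses through each stage."""
    running = 0
    cumulative = {}
    for stage, pct in shares.items():
        running += pct
        cumulative[stage] = running
    return cumulative


cumulative = cumulative_loss_share(LOSS_SHARE_BY_STAGE)
print(cumulative["Qualification"])     # 63
print(cumulative["Needs Assessment"])  # 85
```

Swap in your own stage counts and the same running total shows how much of your loss volume sits ahead of the proposal stage.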

Your win-loss data says deals die at discovery because reps don't understand buyer needs. But 63% of losses happen before you even scope the deal - often because you're reaching the wrong stakeholders. Prospeo maps buying committees with 300M+ profiles, 30+ filters, and 98% email accuracy so your reps walk into discovery already knowing who matters.

Stop losing deals before they start. Fix your contact data first.

The Business Case - Getting Executive Buy-In

Here's the math that gets budget approved.

Take your annual lost revenue. For a company losing $10M in deals per year, Corporate Visions' 100,000-deal analysis says 53% were actually winnable. That's $5.3M in recoverable revenue. Even recovering 10% of that is $530K - more than enough to fund a program for years.
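If you want to run that math on your own numbers, here's a rough sketch. The 53% winnable share is the Corporate Visions figure quoted above; the 10% recovery rate is the same deliberately conservative assumption, and the function name is hypothetical.

```python
def recoverable_revenue(
    lost_revenue: float,
    winnable_share: float = 0.53,  # Corporate Visions figure quoted above
    recovery_rate: float = 0.10,   # assumption: how much of the winnable pool you win back
) -> tuple[float, float]:
    """Return (winnable revenue, revenue actually recovered)."""
    winnable = lost_revenue * winnable_share
    recovered = winnable * recovery_rate
    return winnable, recovered


winnable, recovered = recoverable_revenue(10_000_000)
print(f"Winnable:  ${winnable:,.0f}")   # Winnable:  $5,300,000
print(f"Recovered: ${recovered:,.0f}")  # Recovered: $530,000
```

Change `recovery_rate` to stress-test the case: even at 5%, the example company recovers $265K.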

The research backs this up from multiple angles:

- Gartner: win-loss programs can improve win rates by up to 50%

- McKinsey: a 10-20% win-rate lift translates to 4-12% topline growth

- Forrester: basic buyer interview programs drive a 23% improvement in close rates within six months

- Direct feedback impact: sellers who receive buyer feedback achieve up to 40% better win rates

Even at half these numbers, the ROI justifies the investment ten times over.

The case studies are concrete. Simon Bouchez, CEO of Reveal, personally sponsored their win-loss program. Win rate increased 25%. His take: "Now able to make strategic decisions based on data rather than feelings." A European carrier used these insights to shift from feature-based selling to value-based selling, and revenue increased 16%.

Ryan Sorley, CEO of DoubleCheck Research, has the best advice for getting buy-in: go to each functional leader individually and ask what they want to learn. Sales wants to know why they're losing competitive deals. Product wants to know which feature gaps actually matter. Marketing wants to know which messaging resonates. When each leader has a stake in the outcome, the program gets stickier.

And here's the number that kills the "we can't afford it" objection: Gartner found that companies spending under $5,000 annually on win-loss achieved comparable insight quality to those investing $50,000+. The differentiator was consistency, not budget.

How to Build a Win-Loss Program Step by Step

Define Your Objectives and Selection Criteria

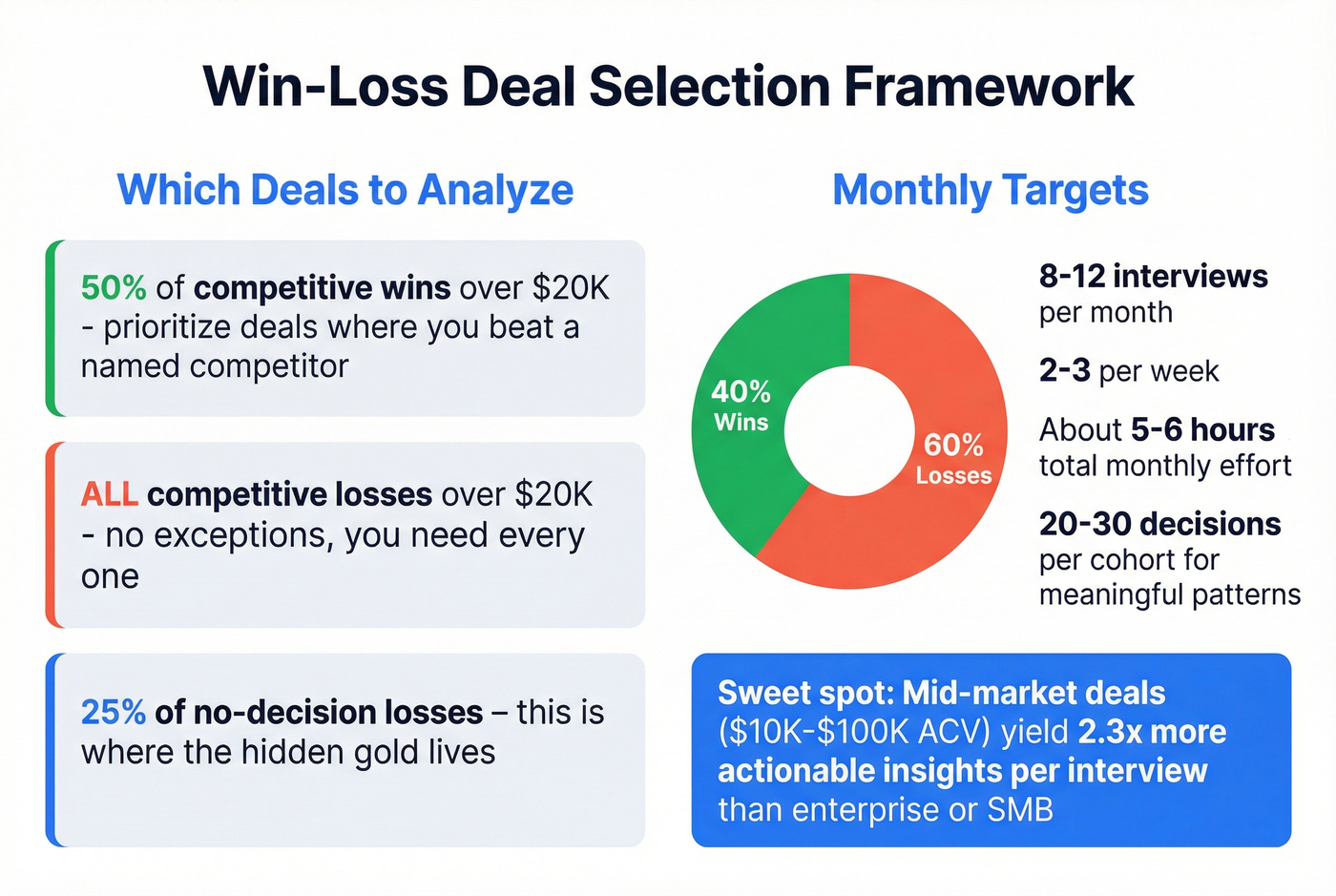

Don't try to analyze every deal. Analyze the right 20-30 per quarter.

Here's a selection framework that works:

- 50% of competitive wins over $20K (prioritize deals where you beat a named competitor)

- All competitive losses over $20K (no exceptions - you need every one)

- 25% of no-decision losses (this is where the hidden gold lives)

Target 8-12 interviews per month. That's 2-3 per week, roughly 5-6 hours of total monthly effort including prep and analysis. Mix should be ~40% wins and 60% losses. You learn more from losses, but wins tell you what to double down on.
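The selection framework is easy to turn into a repeatable pull from a CRM export. Here's one possible sketch. The `Deal` fields, the deal names, and the choice to round sample sizes up are all assumptions for illustration; map them to whatever your export actually contains.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Deal:
    name: str
    outcome: str       # "win", "loss", or "no_decision"
    competitive: bool  # True when a named competitor was in the deal
    amount: float


def select_for_interviews(deals, min_amount=20_000, seed=0):
    """Apply the 50% / all / 25% selection framework to a list of deals."""
    rng = random.Random(seed)  # fixed seed so the pull is reproducible
    wins = [d for d in deals
            if d.outcome == "win" and d.competitive and d.amount > min_amount]
    losses = [d for d in deals
              if d.outcome == "loss" and d.competitive and d.amount > min_amount]
    no_decisions = [d for d in deals if d.outcome == "no_decision"]

    # Assumption: round up so small months never sample zero deals.
    picked_wins = rng.sample(wins, k=math.ceil(len(wins) * 0.50))
    picked_nd = rng.sample(no_decisions, k=math.ceil(len(no_decisions) * 0.25))
    return picked_wins + losses + picked_nd  # every qualifying loss is kept


deals = [
    Deal("A", "win", True, 45_000),
    Deal("B", "win", True, 30_000),
    Deal("C", "win", True, 60_000),
    Deal("D", "win", True, 25_000),
    Deal("E", "win", False, 80_000),   # no named competitor: skipped
    Deal("F", "loss", True, 50_000),
    Deal("G", "loss", True, 22_000),
    Deal("H", "loss", True, 15_000),   # under $20K: skipped
    Deal("I", "no_decision", False, 40_000),
    Deal("J", "no_decision", False, 12_000),
    Deal("K", "no_decision", False, 70_000),
    Deal("L", "no_decision", False, 33_000),
]
shortlist = select_for_interviews(deals)
print(len(shortlist))  # 5: two wins, two losses, one no-decision
```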

Mid-market deals ($10K-$100K ACV) yield 2.3x more actionable insights per interview than enterprise or SMB deals. Enterprise deals involve too many stakeholders to get a clean signal from one interview. SMB deals are often too simple to reveal systemic patterns.

You need 20-30 decisions per cohort for statistically meaningful patterns. A "cohort" is a segment - by competitor, deal size, loss stage, or vertical. Don't mix everything together and expect clarity.
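A quick way to see which cohorts are ready to read is to count interviews per segment and compare against the lower bound of that range. This is a minimal sketch; the record layout and the competitor names are made up for the example.

```python
from collections import Counter

MIN_COHORT_SIZE = 20  # lower bound of the 20-30 range above


def cohort_readiness(interviews, key):
    """Map each cohort value to True once it has enough interviews to read."""
    counts = Counter(record[key] for record in interviews)
    return {cohort: n >= MIN_COHORT_SIZE for cohort, n in counts.items()}


# Hypothetical data: 22 interviews against one competitor, 7 against another.
interviews = [{"competitor": "Acme"}] * 22 + [{"competitor": "Globex"}] * 7
ready = cohort_readiness(interviews, "competitor")
print(ready)  # {'Acme': True, 'Globex': False}
```

Run the same check with `key` set to deal size band, loss stage, or vertical, depending on which cut you plan to report.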

Design Your Interview Guide

Your questions should map to the buyer's journey, not your internal process. Here are the 15 questions that matter most:

Role & Awareness

1. What role did you play in the evaluation process?

2. What made you realize you needed a solution in this space?

3. How many people at your company were pushing for change?

Consideration & Requirements

4. What were your must-have requirements going in?

5. How did you build your shortlist?

6. What did your evaluation process look like?

Competition

7. Which other vendors did you evaluate?

8. How did you narrow down your shortlist?

9. What differentiated the vendors you considered?

Price & Value

10. How did our pricing compare to alternatives?

11. Was pricing a primary factor in your decision?

12. What would have made the investment easier to justify?

Decision

13. What was the final tipping point?

14. Who had the final say?

15. If you could change one thing about our sales process, what would it be?

Before designing your guide, do the internal pre-work. Ask each stakeholder: "What is most important to you right now?" and "If you had this information today, what would you do with it?" This ensures your questions align with what the business actually needs to learn.

Valerie Bonaldo, Director of PMM CI at Seismic, recommends keeping a core set of questions consistent but adding agile questions each quarter to test new messaging, product launches, or competitive shifts.

Questions That Kill Your Win-Loss Interviews

Don't ask about the buying process timeline - your sales team should've captured that during the deal. Don't ask satisfaction-validating questions like "Do you feel satisfied with your decision?" These put buyers on the defensive and hurt any future relationship.

And when a buyer says "price" - probe deeper. They're almost never talking about the number itself. They're saying your offer wasn't compelling enough to justify the premium. Follow up on functionality, trust, and experience.

Master the Interview - Timing, Outreach, and Technique

Timing is everything.

For wins, schedule interviews 30-45 days post-close - the buyer has had time to start implementation but still remembers the evaluation clearly. For losses, move fast: 7-14 days post-decision. Response rates drop 34% when outreach happens more than one week after deal closure, and buyer recall accuracy decreases ~15% per week.
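Those windows are simple date arithmetic, so they're worth automating off the close date. A small sketch, assuming you have the outcome and decision date for each deal; the function name and the example date are illustrative.

```python
from datetime import date, timedelta

# Days after the decision, per the timing guidance above.
INTERVIEW_WINDOWS = {"win": (30, 45), "loss": (7, 14)}


def interview_window(outcome: str, decision_date: date) -> tuple[date, date]:
    """Return the earliest and latest recommended interview dates."""
    first_day, last_day = INTERVIEW_WINDOWS[outcome]
    return (
        decision_date + timedelta(days=first_day),
        decision_date + timedelta(days=last_day),
    )


start, end = interview_window("loss", date(2026, 3, 2))
print(start, end)  # 2026-03-09 2026-03-16
```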

The interviewer should NOT be the AE who worked the deal. Best options: Product Marketing (ideal for most companies), Founder/CEO (works for key accounts at smaller companies), or a third-party firm (most honest feedback, most expensive). One consistent interviewer is better than rotating - buyers open up more when the format feels practiced and professional.

Your opening line matters. Start with: "This isn't sales follow-up - I won't pitch you. We're genuinely trying to understand what we could have done differently." Curiosity-driven outreach achieves 40-55% acceptance rates versus 12-15% for generic survey requests. Provide specific time options - "Would Tuesday at 2pm or Thursday at 10am work?" - rather than open-ended requests. This increases scheduling rates by 28%.

Record every interview. Recorded interviews yield 3.7x more specific insights than note-based approaches. Keep sessions to 20-30 minutes. Use the Five Whys technique when you hit a surface-level answer - keep asking "why" until you reach the real driver.

Analyze, Tag, and Build the Narrative

Raw interviews are useless without a consistent tagging methodology. Every interview should be tagged with: competitor involved, deal stage at loss, loss reason (from the buyer's words, not CRM), product line, region, vertical, and deal size.
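One way to keep tagging consistent is to make the seven tags a fixed record that refuses blanks. The sketch below is an assumption about how you might structure it, not a standard schema; the field names and sample values are invented.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class InterviewTags:
    competitor: str
    loss_stage: str    # deal stage at loss; use "n/a" for wins
    loss_reason: str   # in the buyer's words, not the CRM dropdown
    product_line: str
    region: str
    vertical: str
    deal_size: float

    def __post_init__(self):
        # Reject records with empty tags so every interview stays comparable.
        missing = [name for name, value in asdict(self).items()
                   if value in ("", None)]
        if missing:
            raise ValueError(f"untagged fields: {', '.join(missing)}")


tags = InterviewTags(
    competitor="Acme",  # hypothetical competitor
    loss_stage="Discovery",
    loss_reason="Call felt like a product demo",
    product_line="Core",
    region="EMEA",
    vertical="Fintech",
    deal_size=42_000,
)
```

The same seven columns work just as well as required fields in a Google Sheet; the point is that no interview gets logged half-tagged.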

Three template types cover the analytical workflow:

- Data Collection & Organization - interview questions in columns, buyer names in rows, data validation dropdowns for competitor names

- Lost Deal Rationale Segmentation - calculates the percentage of each competitor's wins by decision reason (price, functionality, support, brand authority)

- Competitive Win Rate Segmentation - tracks demo experience and trial experience ratings by competitor
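The Lost Deal Rationale Segmentation template is a grouped percentage, which you can compute in a spreadsheet pivot or in a few lines of code. Here's a hedged sketch that assumes each lost deal has already been tagged with a competitor and a single buyer-stated reason; the sample rows are invented.

```python
from collections import Counter, defaultdict


def rationale_by_competitor(losses):
    """For each competitor, return the % of their wins by decision reason."""
    counts = defaultdict(Counter)
    for competitor, reason in losses:
        counts[competitor][reason] += 1
    return {
        competitor: {
            reason: round(100 * n / sum(reasons.values()), 1)
            for reason, n in reasons.items()
        }
        for competitor, reasons in counts.items()
    }


# Hypothetical tagged losses: (competitor that won, buyer-stated reason).
losses = [
    ("Acme", "price"),
    ("Acme", "functionality"),
    ("Acme", "functionality"),
    ("Acme", "support"),
    ("Globex", "brand authority"),
]
breakdown = rationale_by_competitor(losses)
print(breakdown["Acme"])
# {'price': 25.0, 'functionality': 50.0, 'support': 25.0}
```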

For your executive summary, here's the structure that works:

- Aggregate interview answers into trends - tag keywords, make answers trackable

- Highlight 2-3 most common trends (frequency equals trend)

- Include buyer quotes - a punchy quote from a lost deal at a recognizable brand catches executive attention more than data alone

- Layer quantitative CRM data on top of qualitative interview insights

- Calculate the Competitive Revenue Gap - how much revenue each competitor is taking from you

- Build a narrative connecting dots between departments

- Keep recommendations clear - 2-3 actionable items per quarter
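For the Competitive Revenue Gap line, one simple reading is the total value of deals lost to each competitor, ranked largest first. That interpretation is an assumption on our part, and the names and amounts below are placeholders.

```python
from collections import defaultdict


def competitive_revenue_gap(lost_deals):
    """Sum lost deal value per competitor, largest gap first."""
    gap = defaultdict(float)
    for competitor, amount in lost_deals:
        gap[competitor] += amount
    return dict(sorted(gap.items(), key=lambda item: item[1], reverse=True))


# Hypothetical losses: (competitor named by the buyer, deal amount).
lost_deals = [
    ("Acme", 40_000),
    ("Globex", 120_000),
    ("Acme", 55_000),
    ("Initech", 30_000),
]
gap = competitive_revenue_gap(lost_deals)
print(gap)  # {'Globex': 120000.0, 'Acme': 95000.0, 'Initech': 30000.0}
```

Use the competitor the buyer named in the interview, not the one in CRM, or the ranking inherits the same errors you're trying to fix.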

Jennifer Roberts, Director of Marketing Strategy at ServiceTitan, puts it well: "Win-loss analysis lets you navigate stakeholders who think every competitor is different... You need the qualitative and quantitative together to see what really matters."

Tailor findings to each audience. Sales needs competitive battlecards. Product needs feature prioritization data. Marketing needs messaging validation. The same interviews fuel all three - but the packaging matters.

The Hidden 40% - No-Decision Deals

Up to 40% of your pipeline ends in no decision. Not a loss to a competitor. Not a win. Just... nothing.

I've seen teams obsess over competitive losses while ignoring a no-decision pile that's twice as large. A pipeline review that excludes no-decisions is only telling you half the story.

Here are the five categories of no-decision deals:

| Category | Win-Back Potential | What Happened | Win-Back Strategy |
|---|---|---|---|
| Status Quo | HIGH | Buyer didn't see urgency | Re-approach in 6 months - problem is likely worse |
| Discovery Gap | MEDIUM | Rep pitched, didn't consult | New entry point, stronger business case next budget cycle |
| Un-Ideal Customer | ZERO | Bad fit from the start | Don't re-approach - refine your ICP instead |
| ROI Fog | HIGH | Buyer couldn't justify spend | Re-approach in 90 days with custom ROI calculator |
| Bad Experience | LOW | Buyer felt pushed or unheard | Assign new AE; only discoverable via neutral interview |

Rex Galbraith, CRO at Consensus, coined the "Un-ideal Customer Profile" (UCP) concept - knowing who your products are NOT for. Identifying UCPs through buyer interviews improved his team's productivity by letting reps disqualify faster.

Howard Brown, CEO of Revenue.io, is blunt: "Every minute you spend on a no-decision deal is a minute less that you could be spending on a deal that would actually close." But the flip side is equally true - Status Quo and ROI Fog deals represent real revenue sitting on the table. The key is knowing which category each deal falls into.

The only way to categorize no-decision deals accurately is to interview the buyers. Your CRM will say "closed-lost" with a generic reason. The buyer will tell you they're still interested but couldn't get budget approval because your team never gave them an ROI framework to take to their CFO. That's a completely different problem with a completely different solution.
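Once interviews have put each no-decision deal into one of the five categories, routing it is a lookup. Here's a minimal sketch built from the table above; the deal names are placeholders and the HIGH-only filter is just one reasonable choice for a first win-back pass.

```python
# Win-back potential and next step per category, from the table above.
WIN_BACK_PLAYBOOK = {
    "Status Quo": ("HIGH", "Re-approach in 6 months"),
    "Discovery Gap": ("MEDIUM", "New entry point, stronger business case next budget cycle"),
    "Un-Ideal Customer": ("ZERO", "Don't re-approach; refine the ICP"),
    "ROI Fog": ("HIGH", "Re-approach in 90 days with a custom ROI calculator"),
    "Bad Experience": ("LOW", "Assign a new AE"),
}


def win_back_queue(categorized_deals):
    """Return (deal, next action) for deals with HIGH win-back potential."""
    queue = []
    for deal_name, category in categorized_deals:
        potential, action = WIN_BACK_PLAYBOOK[category]
        if potential == "HIGH":
            queue.append((deal_name, action))
    return queue


queue = win_back_queue([
    ("Deal 1", "ROI Fog"),
    ("Deal 2", "Un-Ideal Customer"),
    ("Deal 3", "Status Quo"),
])
print(queue)
# [('Deal 1', 'Re-approach in 90 days with a custom ROI calculator'),
#  ('Deal 3', 'Re-approach in 6 months')]
```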

How to Start With Zero Budget

67% of successful win-loss programs began with zero dedicated budget. That's not a consolation stat - it's the norm.

Use what you have. Google Sheets for tracking. Zoom for recording. A consistent question set asked every time. That's your minimum viable program. Sarah Chen, Director of PMM at Lattice, conducted just 8 interviews in her first month and uncovered 3 critical product gaps causing 40% of recent losses. Eight interviews. One month. Three insights that changed the product roadmap.

Aim for 8-12 interviews per month. That's ~5-6 hours of total effort including prep, interviews, and analysis. It's a side project, not a full-time role.

Use curiosity framing in your outreach. "I'd love to learn what we could have done differently" dramatically outperforms "Would you be willing to provide feedback?" Marcus Johnson, VP of Product Strategy at Gainsight, hits 52% response rates with this approach versus 18% with generic requests.

Move fast on losses. Contact lost buyers within 7-14 days. Every week you wait, recall accuracy drops ~15% and response rates drop 34%.

Here's what to skip:

Don't buy a platform yet. Gartner found that $5K programs achieved comparable insight quality to $50K+ programs. The differentiator was consistency, not tooling.

Don't outsource interviews immediately. One Reddit practitioner dropped their third-party agency "due to budget and the per-interview cost being way too much." At $1,500-$3,000 per interview, that adds up fast. Start with your PMM or founder conducting interviews.

Don't "wing it." Informal, ad-hoc conversations with a handful of buyers yield biased, non-significant insights. A Google Sheet with consistent tagging beats a $50K platform used inconsistently. Every time.

Win-Loss Tools by Budget

Once you've proven the value with a zero-budget program, here's how the tool landscape breaks down:

| Tier | Budget | Tools | Best For |
|---|---|---|---|
| Free | $0 | Sheets + Zoom + process | Starting out, <$50K ARR |
| Mid-range | $5K-$30K/yr | Zime.ai, Diffly, Winxtra | Growing teams, AI assist |
| Enterprise | $30K-$100K+/yr | Klue, Clozd, Corporate Visions | Enterprise CI + scale |
| Per-interview | $1,500-$3K each | Anova, DoubleCheck, Satrix | Supplementing internal work |

Free tier: Don't underestimate this. A consistent process with Google Sheets and Zoom will outperform an expensive platform used sporadically. This is where most successful programs start.

Mid-range ($5K-$30K/year): Zime.ai is the standout - G2 rating of 4.8/5, AI-powered deal scoring, living playbooks, and behavior-driven leaderboards. Diffly focuses on automated interview analysis and competitive pattern detection, while Winxtra emphasizes workflow integration and cross-functional reporting. Expect custom pricing for all three; budget $5K-$15K/year for small teams.

Enterprise ($30K-$100K+/year): Klue combines an AI Interviewer with a full competitive intelligence platform - and combined win-loss + CI programs are nearly 3x more likely to report significant business impact. Clozd offers both Live and Flex interview formats, is ISO 27001 and 27701 certified, and counts Nitrogen, Qualtrics, and Clari as customers. Corporate Visions (TruVoice) brings their 100,000-deal research base to the table.

Per-interview services: Anova, DoubleCheck Research, and Satrix Solutions run $1,500-$3,000 per interview. Expensive for ongoing programs, but valuable for supplementing internal efforts on high-stakes deals or when you need a truly neutral third party.

Real talk on AI: 41% of teams are using AI in win-loss today, and another 41% are planning to start. AI excels at tagging themes, summarizing transcripts, and scaling coverage across more deals. What it can't do: build trust with a buyer on a recorded call, probe an emotional response about a bad sales experience, or read the hesitation in someone's voice when they say "pricing was fine." For teams with deal sizes under $50K, AI-assisted analysis with human interviews is the sweet spot. You don't need a $100K platform - you need a consistent human asking smart questions and a machine helping you find the patterns.

Common Mistakes That Kill Win-Loss Programs

1. Not formalizing the process. Occasional, ad-hoc conversations with a handful of buyers yield biased, non-significant insights. You need: learning objectives, deal selection criteria, a consistent interview guide, a next-steps structure, an org-wide memo, and a quarterly presentation. Without these, you're just having conversations.

2. Lack of executive support. Win-loss takes 3-9 months to show full value depending on your sales cycle. If your exec sponsor loses patience at month two, the program dies. Set expectations upfront: "We'll have initial patterns in 60 days. Statistically significant trends in 6 months."

3. Only asking salespeople. This is the most common and most damaging mistake. The CRO at Didomi said it plainly: "We nearly made a wrong product decision, wrongly thinking we were at a disadvantage for not having a particular feature." Internal-only feedback defaults to "pricing or product issues" because those are safe answers. Buyer interviews tell a different story. Traditional competencies like product knowledge and industry expertise are up to 31% less predictive of sales success than the skills buyers actually care about - and you'll never learn that from your reps alone.

4. Not using a neutral interviewer. Buyers won't give transparent feedback directly to the vendor who just lost their deal. Even if you can't afford a third party, use someone who wasn't involved in the deal.

5. Not running the program regularly. The truth of today isn't the truth six months from now. Competitive landscapes shift. Messaging evolves. One-shot analysis misses all of this. Run monthly interviews, quarterly reports, and annual program reviews.

6. Restricting access to feedback. Keeping insights to 2-3 people wastes the full potential. Sales needs battlecards. Product needs feature prioritization data. Marketing needs messaging validation. CS needs onboarding feedback. In our experience, the programs that fail aren't under-resourced - they're under-distributed. Create a culture of feedback transparency, not a gated report.

The contrarian take here is simple: stop trying to analyze every deal. Analyze the right 20-30 per quarter. Depth beats breadth every time.

Real Results - What Win-Loss Analysis Actually Delivers

A 25% win rate increase. That's what Reveal saw after CEO Simon Bouchez personally sponsored their program and shifted from gut-feel decisions to data-driven strategy.

European carrier: Buyer interviews revealed they were selling on features when buyers cared about value. After shifting their approach, revenue increased 16%.

Lattice: Sarah Chen conducted just 8 interviews in month one. She uncovered 3 critical product gaps causing 40% of recent losses. Those gaps were invisible in CRM data.

Nitrogen: Dan Bolton, VP of Corporate Marketing, calls their investment "a no-brainer." Win rate climbed from ~30% to over 50% after implementing a structured program.

Fiix by Rockwell Automation: Built 250+ customer profiles and 80 competitor profiles in a single year through systematic buyer interviews. That's a competitive intelligence asset that compounds over time.

The biggest ROI from win-loss analysis isn't improving your pitch. It's killing bad deals earlier. When you know your UCP, when you know which discovery gaps lead to no-decisions, when you know which competitor you actually lose to (not the one in CRM) - you stop wasting cycles on deals that were never going to close. Collecting data on won deals alongside loss data is what gives you the full picture: what to double down on and what to fix.

That's where the real money is.

Known contacts convert at 37% vs 19% for cold outreach - nearly double the win rate. Prospeo's CRM enrichment returns 50+ data points per contact at a 92% match rate, so your team builds on existing relationships instead of starting cold. At $0.01 per email, the ROI dwarfs any win-loss program budget.

Double your win rate by enriching the relationships you already have.

FAQ

What's the difference between win-loss analysis and a deal post-mortem?

A post-mortem is an internal, one-off review after a single deal; win-loss analysis systematically interviews buyers across dozens of deals to identify recurring patterns from the buyer's perspective. Post-mortems catch isolated issues. A structured program catches systemic problems - like discovery gaps causing 35% of all losses - that no single review would reveal.

How many buyer interviews do I need for reliable insights?

Aim for 20-30 interviews per cohort, grouped by competitor, deal size, or loss stage. Start with 8-12 per month (~5-6 hours of effort) and expect initial patterns within 60 days. Statistically significant trends typically emerge within 6 months of consistent interviewing.

Who should own the win-loss program?

Product marketing owns the program at most B2B companies. PMM sits at the intersection of product, sales, and marketing - the three teams that benefit most. Sales ops and competitive intelligence teams are common co-owners who help distribute insights.

How do I get lost buyers to agree to an interview?

Use curiosity-driven outreach like "I'd love to learn what we could have done differently" within 7-14 days of a lost deal - this achieves 40-55% acceptance rates versus 12-15% for generic surveys. Provide two specific time options rather than open-ended scheduling, which boosts booking rates by 28%.

How do I make sure I'm contacting the right people for win-loss outreach?

Verify contact data before outreach - buyers change roles, and emails go stale fast. Use Prospeo's Email Finder to confirm current emails and direct dials so your interview requests reach the decision-maker, not a dead inbox. With a 7-day data refresh cycle, you're working with current information even on deals that closed months ago.