Signal-Based Selling AI in 2026: From Signals to Actions (Not Alerts)

Most "AI selling" stacks fail for one boring reason: they generate notifications, not decisions.

Signal-based selling AI only works when it behaves like an operating system: fit gates, stacked signals, enforced routing, and measurable SLAs. Reps get the next action inside the CRM, not another alert to ignore.

Look, if your average deal is small and your sales cycle's under 30 days, you probably don't need a fancy intent suite. You need tight first-party signals, ruthless fit gating, and fast follow-up.

What signal-based selling AI is (and what it replaces)

Signal-based selling AI is a system that detects buying-relevant behavior (signals), scores it, routes it to the right owner, and triggers a specific play fast enough that timing actually matters.

It replaces the old "database + sequences" mindset where reps blast a static list and hope the market's in the mood.

Alerts aren't decisions. An alert is "something happened." A decision is "this account is Tier A, assigned to this owner, with a 60-minute SLA, using Playbook #3."

I've watched teams spend months "implementing intent" and end up with weekly alert fatigue. The win is when signals become actions your team can execute without thinking.

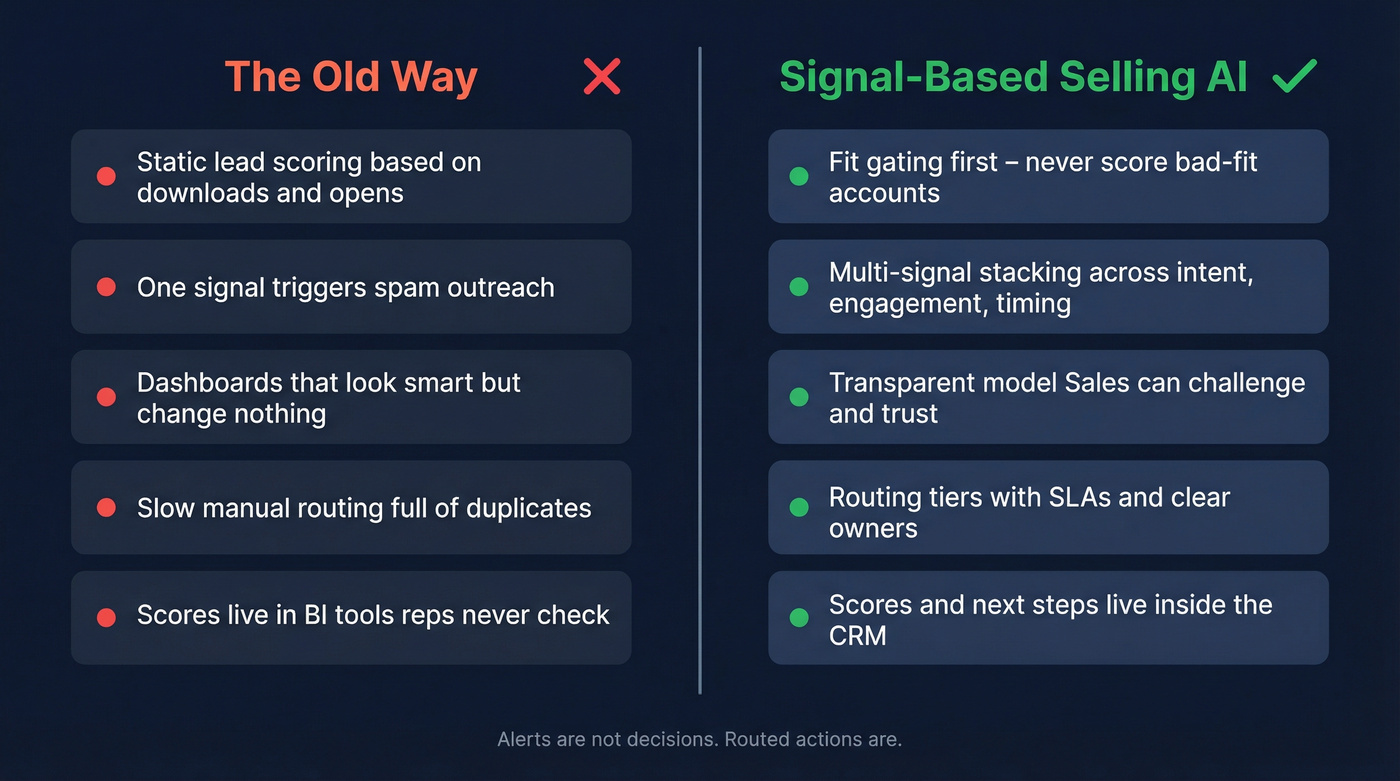

Old way (what you're replacing):

- Static lead scoring (downloads, opens) that doesn't map to revenue

- One-signal outreach ("visited pricing page" -> spam them)

- Dashboards that look smart but don't change rep behavior

- Routing that's slow, manual, and full of duplicates

New way (what works):

- Fit gating first (don't score bad-fit accounts)

- Multi-signal stacking (intent + engagement + timing)

- A transparent scoring model Sales can argue with (that's good)

- Routing tiers with SLAs and owners

- Scores and next steps living inside the CRM, not in BI

What you need (quick version)

You don't need 200 signals. You need 15 signals and three tiers.

That contrarian rule fixes most signal programs because it forces focus, governance, and real playbooks.

Start here (3 steps):

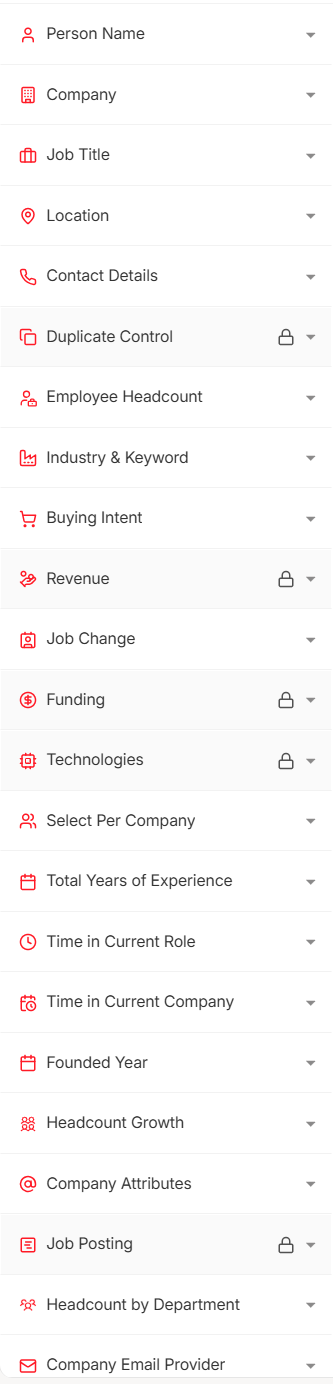

1) First-party engagement + fit gating. Only score accounts that match ICP (industry, size, geo, tech). Then layer in engagement (pricing page, demo page, webinar attendance, email replies).

2) One third-party intent layer (topic-based). Pick one provider and one intent model (topics, not "magic AI intent"). You want directional in-market behavior, not perfection.

3) Verification + enrichment before outreach (deliverability protection). Don't let signals push bad contact data into sequences. Use a verification/enrichment layer (for example, Prospeo) before outreach.

Mini decision tree (do this / not that):

- If you've got <1,000 target accounts -> do account-level scoring, not lead-level chaos

- If Sales ignores alerts -> route to CRM tasks + sequences, not Slack pings

- If your bounce rate's >5% -> verify before outreach, even if it slows you down

- If you can't respond fast -> lower Tier A volume, don't pretend you'll follow up

One benchmark to anchor your SLAs: contacting a lead within an hour makes them about 7x more likely to qualify than waiting longer. Speed isn't a nice-to-have. It's the whole point of signals.

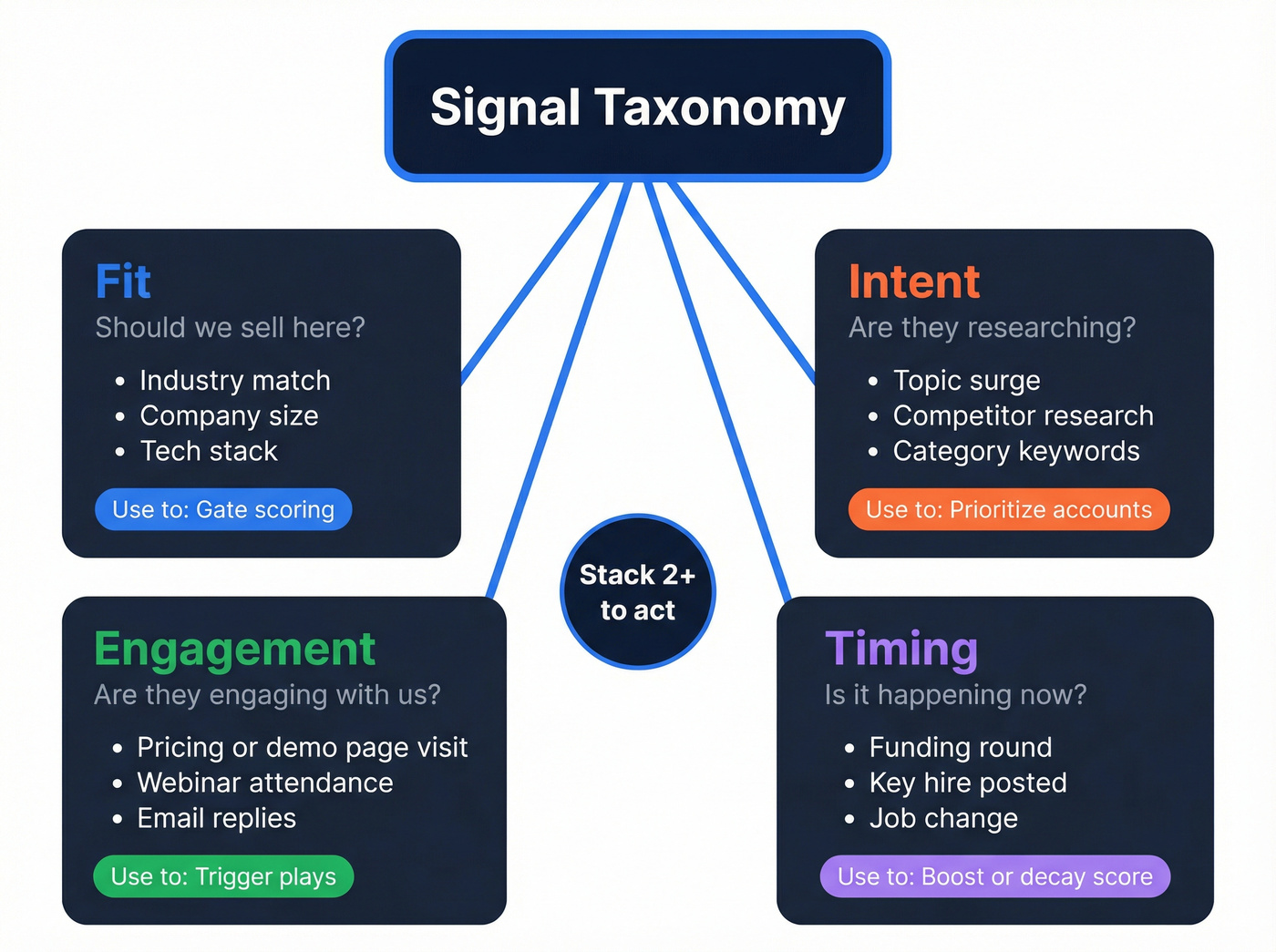

Signal taxonomy that actually works (fit, intent, engagement, timing)

Signal programs get messy when teams mix categories. Demandbase's framing is the simplest that holds up in production: fit, intent, engagement. Add timing as the "recency + change" layer, because a signal from 30 days ago is basically trivia.

Not all signals are equal. A "blog visit" isn't the same as "pricing page twice + competitor comparison + new VP Sales hired." Treating them equally is how you get false positives and reps who stop trusting the system.

A practical taxonomy (steal this)

| Category | What it answers | Examples | Typical use |
|---|---|---|---|
| Fit | "Should we sell here?" | Industry, size, geo, tech stack | Gate scoring |
| Intent | "Are they researching?" | Topic surge, competitor research | Prioritize |
| Engagement | "Are they engaging with us?" | Pricing/demo page, webinar, replies | Trigger plays |
| Timing | "Is it happening now?" | Funding, hiring, job change | Boost/decay |

Examples that actually move pipeline

- Pricing page / demo page views (first-party): strong, but only if fit is true

- Funding event: great timing signal, but noisy for smaller companies raising tiny rounds

- Hiring: "3 SDR roles" is a stronger buy signal than "1 marketing intern"

- Job change: new VP Sales / RevOps leader often resets vendors

- Tech change: competitor tool removed/added (strong when verified)

- Engagement spike then drop: often a buying committee forming elsewhere

You're not building a "signal lake." You're building a small set of signals your team agrees are meaningful, with clear recency windows and routing rules.

The core rule: stack signals (and why only ~10% is actionable)

Don't act on one signal. Stack them.

One signal is a rumor. Two or three signals is a pattern.

Use this / skip this rules

Use signals when:

- Fit is true and

- You have at least 2 categories firing (intent + engagement, or timing + intent, etc.) and

- The recency window's tight (hours/days, not weeks)

Skip signals when:

- Fit's unknown (you're scoring junk)

- It's a single weak engagement (one blog post)

- The signal's stale (older than your sales cycle's "attention span")
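
The use/skip rules above can be sketched as a single gate. This is a minimal sketch, assuming signals arrive already tagged with a category and a "weak" flag; the per-category recency windows are hypothetical values taken from the upper end of the calibration guidance later in this article (pricing page 3-7 days, topic intent 7-14 days, hiring 30-60 days).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional

# Hypothetical per-category recency windows; tune them to your own sales cycle.
RECENCY_WINDOWS = {
    "engagement": timedelta(days=7),
    "intent": timedelta(days=14),
    "timing": timedelta(days=60),
}


@dataclass
class Signal:
    category: str          # "engagement", "intent" or "timing"
    name: str              # illustrative label, e.g. "pricing_page_view"
    observed_at: datetime
    weak: bool = False     # e.g. a single blog visit


def is_actionable(fit: Optional[bool], signals: List[Signal], now: datetime) -> bool:
    """Act only if fit is true and at least 2 fresh, non-weak categories are firing."""
    if fit is not True:  # fit unknown (None) or false: you'd be scoring junk
        return False
    fresh_categories = {
        s.category
        for s in signals
        if s.category in RECENCY_WINDOWS
        and not s.weak
        and now - s.observed_at <= RECENCY_WINDOWS[s.category]
    }
    return len(fresh_categories) >= 2


now = datetime(2026, 3, 2, 12, 0, tzinfo=timezone.utc)
example = [
    Signal("engagement", "pricing_page_view", now - timedelta(days=1)),
    Signal("intent", "topic_surge", now - timedelta(days=5)),
]
print(is_actionable(True, example, now))   # True
print(is_actionable(None, example, now))   # False: fit unknown
```

Counting distinct categories (not raw events) is the point: ten pricing-page hits are still one category, which is exactly the "one signal is a rumor" case.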

Why only ~10% is actionable (TAM math)

Treat this as a practitioner heuristic, not a law of physics: at any moment, a single-digit percent of your market's actively buying, and a slightly larger slice is open to a conversation. So if your "signals" are telling you 40% of your TAM is hot this week, your system's lying, or your thresholds are.

Two mini-scenarios

Bad scenario: "Visited pricing page" -> SDR spams 12 contacts -> 0 replies -> Sales blames intent data.

Good scenario: Fit match + "pricing page twice" + topic intent on your category + new RevOps hire -> Tier A routed to AE + SDR multi-threads -> meeting booked.

Signal-based selling is ops first, AI second. The best intent feed in the world's useless if it doesn't turn into routed work.

Your scoring model routes a Tier A account to an AE in under 60 minutes - then the email bounces. That's not a signal problem, it's a data problem. Prospeo's 5-step verification and 7-day refresh cycle keep your enrichment layer clean so signals actually convert to meetings, not bounces.

Stack signals on verified data or watch your SLAs mean nothing.

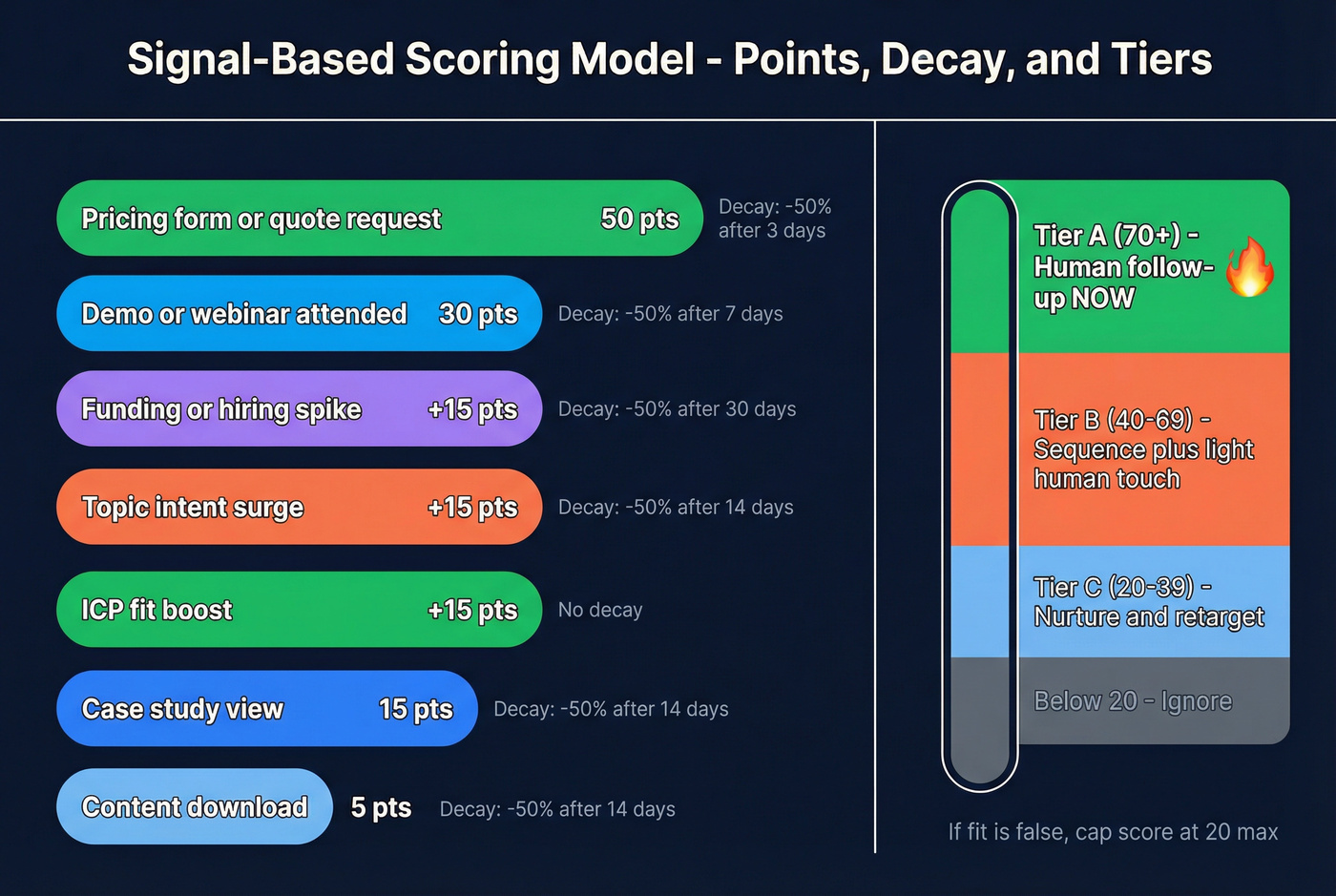

Copy-paste scoring model (weights, decay, thresholds)

If Sales doesn't believe your scoring, it doesn't exist. The fix is a model that's simple enough to explain on a whiteboard and strict enough to cut false positives.

This weighting template works because it maps to buying stages: 5 = early curiosity, 15 = evaluation behavior, 30 = strong hand-raise, 50 = explicit purchase intent.

Scoring model table (with decay + thresholds)

| Signal type | Example | Points | Decay rule | Notes |
|---|---|---|---|---|
| Early engagement | Content download | 5 | -50% after 14d | Don't overvalue |
| Eval engagement | Case study view | 15 | -50% after 14d | Good mid-funnel |
| High intent | Demo/webinar live | 30 | -50% after 7d | Recency matters |
| Purchase intent | Quote/pricing form | 50 | -50% after 3d | Route fast |
| Fit boost | ICP match | +15 | None | Gate/boost |
| Timing boost | Funding/hiring spike | +15 | -50% after 30d | Context signal |
| 3rd-party intent | Topic surge | +15 | -50% after 14d | Needs fit |

Thresholds (keep it boring):

- Tier A (Hot): >=70 -> human follow-up now

- Tier B (Warm): 40-69 -> sequence + light human touch

- Tier C (Nurture): 20-39 -> nurture, retarget, wait

- <20: ignore (or you'll recreate spray-and-pray)
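
Here's the table and thresholds above as code, as a minimal sketch. The dictionary keys are illustrative identifiers, not a standard schema; the non-fit cap of 20 comes from the calibration steps below; and the decay is read literally as a single -50% step once a signal's window has passed.

```python
from datetime import datetime, timedelta, timezone

# (points, decay window) per signal type, copied from the scoring table.
SIGNAL_WEIGHTS = {
    "early_engagement": (5, timedelta(days=14)),     # content download
    "eval_engagement": (15, timedelta(days=14)),     # case study view
    "high_intent": (30, timedelta(days=7)),          # demo/webinar live
    "purchase_intent": (50, timedelta(days=3)),      # quote/pricing form
    "timing_boost": (15, timedelta(days=30)),        # funding/hiring spike
    "third_party_intent": (15, timedelta(days=14)),  # topic surge
}
FIT_BOOST = 15      # ICP match, no decay
NON_FIT_CAP = 20    # score ceiling when fit is false


def decayed_points(signal_type: str, age: timedelta) -> float:
    points, window = SIGNAL_WEIGHTS[signal_type]
    # Table rule: one -50% step once the window has passed.
    return points / 2 if age > window else points


def account_score(fit: bool, events, now: datetime) -> float:
    """events: iterable of (signal_type, observed_at) pairs for one account."""
    total = sum(decayed_points(kind, now - seen) for kind, seen in events)
    return total + FIT_BOOST if fit else min(total, NON_FIT_CAP)


def tier_for(score: float) -> str:
    if score >= 70:
        return "A"   # human follow-up now
    if score >= 40:
        return "B"   # sequence + light human touch
    if score >= 20:
        return "C"   # nurture, retarget, wait
    return "ignore"


now = datetime(2026, 3, 2, 12, 0, tzinfo=timezone.utc)
# Mirrors the worked example later on: topic intent + pricing page twice + new RevOps hire.
events = [
    ("third_party_intent", now - timedelta(days=5)),
    ("high_intent", now - timedelta(days=2)),
    ("timing_boost", now - timedelta(days=20)),
]
score = account_score(True, events, now)
print(score, tier_for(score))  # 75 A
```

With a single halving, an old signal keeps counting at half value indefinitely. If that inflates scores in your data, adding a hard expiry (for example, drop a signal entirely after twice its window) is a reasonable design choice.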

Step-by-step calibration (so it doesn't become "RevOps astrology")

Start with fit gating. If fit's false, cap the score (for example, max 20). This prevents "students downloading ebooks" from looking like buyers.

Define recency windows per signal. Pricing page: 3-7 days. Hiring: 30-60 days. Topic intent: 7-14 days. Write it down.

Add decay. Default: halve the points when the recency window expires. Decay keeps your model honest.

Validate quarterly against revenue. Pull closed-won and compare win rate by score band, conversion to opportunity by band, and speed to first meeting by band.

Keep it transparent. If the model needs an LLM to explain it, Sales won't trust it. Simple beats "smart."

Reps don't need a mystical score. They need to know why now so they can write a better first line.
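
The quarterly validation step can be sketched as a report by score band. This sketch assumes you can export one row per scored account with hypothetical fields `score`, `became_opp`, `won` and `days_to_first_meeting`; win rate is computed as won / opportunities within the band.

```python
from statistics import median
from typing import Dict, List


def score_band(score: float) -> str:
    # Same thresholds as the tiers above.
    if score >= 70:
        return "A"
    if score >= 40:
        return "B"
    if score >= 20:
        return "C"
    return "below_20"


def validate_by_band(accounts: List[dict]) -> Dict[str, dict]:
    """Opp conversion, win rate and speed to first meeting, grouped by score band."""
    bands: Dict[str, List[dict]] = {}
    for acct in accounts:
        bands.setdefault(score_band(acct["score"]), []).append(acct)

    report = {}
    for band, rows in bands.items():
        opps = [r for r in rows if r["became_opp"]]
        meeting_days = [r["days_to_first_meeting"] for r in rows
                        if r["days_to_first_meeting"] is not None]
        report[band] = {
            "accounts": len(rows),
            "opp_rate": len(opps) / len(rows),
            "win_rate": sum(r["won"] for r in opps) / len(opps) if opps else None,
            "median_days_to_meeting": median(meeting_days) if meeting_days else None,
        }
    return report


history = [
    {"score": 80, "became_opp": True, "won": True, "days_to_first_meeting": 2},
    {"score": 75, "became_opp": False, "won": False, "days_to_first_meeting": 4},
    {"score": 45, "became_opp": False, "won": False, "days_to_first_meeting": None},
]
print(validate_by_band(history)["A"])
# {'accounts': 2, 'opp_rate': 0.5, 'win_rate': 1.0, 'median_days_to_meeting': 3.0}
```

If band A doesn't clearly beat band B on these numbers, that's your cue to adjust weights before touching anything else.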

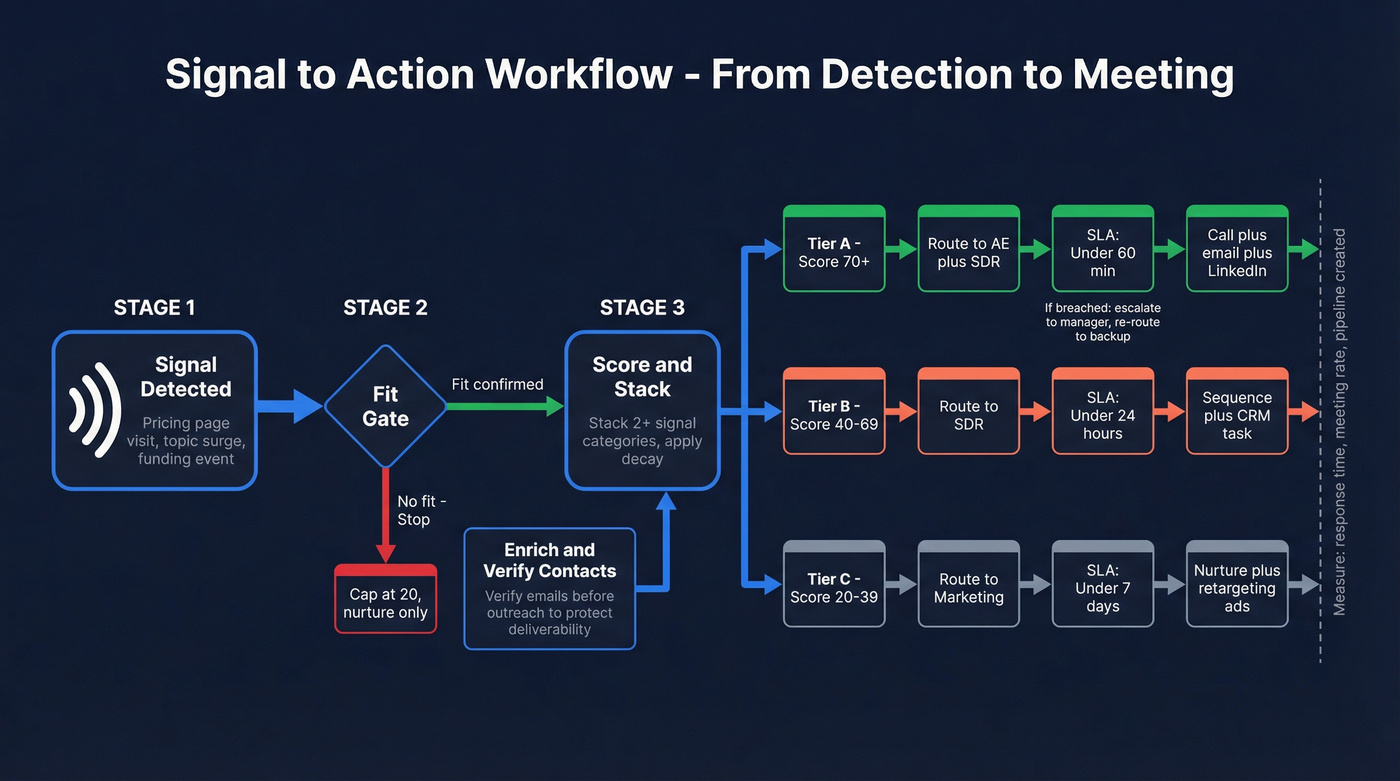

Signal-based selling AI workflow: routing tiers, SLAs, and playbooks

Signals don't create pipeline. Response creates pipeline.

This is where most programs die: they detect signals but can't route them cleanly, enrich fast enough, or enforce SLAs. So the "hot" account gets contacted tomorrow by the wrong rep with the wrong message, and everyone concludes signals don't work.

Routing matrix (3 tiers, clear owners)

| Tier | Definition | SLA | Owner | Next action |
|---|---|---|---|---|
| A | Score >=70 | <60 min | AE/SDR | Call + email |
| B | 40-69 | <24 hrs | SDR | Sequence + task |
| C | 20-39 | <7 days | Marketing | Nurture/ads |
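
A minimal sketch of turning the matrix into the sla_due_at and sla_status fields described below. It assumes the SLA clock starts at routed_at and uses wall-clock time; a business-hours calendar would replace the plain addition.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# SLA per tier, from the routing matrix (wall-clock, not business hours).
SLA_BY_TIER = {
    "A": timedelta(minutes=60),
    "B": timedelta(hours=24),
    "C": timedelta(days=7),
}


def sla_due_at(tier: str, routed_at: datetime) -> datetime:
    return routed_at + SLA_BY_TIER[tier]


def sla_status(tier: str, routed_at: datetime,
               first_touch_at: Optional[datetime], now: datetime) -> str:
    """Return "met", "breached", or "open" (untouched but not yet due)."""
    due = sla_due_at(tier, routed_at)
    if first_touch_at is not None:
        return "met" if first_touch_at <= due else "breached"
    return "breached" if now > due else "open"


routed = datetime(2026, 3, 2, 9, 0, tzinfo=timezone.utc)
print(sla_status("A", routed, routed + timedelta(minutes=45), now=routed + timedelta(hours=2)))  # met
print(sla_status("A", routed, None, now=routed + timedelta(minutes=90)))                         # breached
```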

The enforcement mechanics teams skip (and then wonder why nothing changes)

1) Instrument the SLA with timestamps (no timestamps = no SLA).

Add fields that make the SLA measurable: signal_created_at, routed_at, first_touch_at, sla_due_at, sla_status. If you can't compute first_touch_at automatically, you don't have an SLA. You've got hope.

2) Create a "no-owner" queue and treat it like a fire alarm. Every routing system produces edge cases: missing territory, unmapped account, duplicates, conflicting ownership. Don't let those silently die. Anything Tier A that sits unowned for 10 minutes should hit a monitored queue (RevOps + SDR manager). The queue should be small and embarrassing.

3) Handle duplicates and ownership conflicts with one rule, not debates. Pick a deterministic order and write it down:

- If an account has an open opportunity -> owner = opp owner

- Else if an account has an active AE -> owner = AE

- Else if inbound lead exists -> owner = inbound owner/queue

- Else -> owner = territory SDR

Then add a "conflict reason" field so you can audit why something got reassigned.

4) Escalate breaches automatically (and make the escalation hurt a little).

When Tier A breaches: create a task for the manager, post a single escalation message (one channel, not five), and re-route to a backup owner if untouched after X minutes. The point isn't punishment. The point is keeping "hot" from turning into "stale."

5) Cap Tier A volume so humans can act like humans. If Tier A is 200 accounts/day, your model's broken. Put a hard cap in place ("Max 15 Tier A accounts per AE per day"). Overflow goes to Tier B with an "overflow" reason.

This one move prevents burnout and keeps Tier A meaningfully prioritized.
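
Rules 2, 3 and 5 can be sketched together: resolve the owner in one deterministic order, keep the rule that decided it as a reason value, send unowned records to the no-owner queue, and cap Tier A per owner. This assumes one call per day's batch and applies the cap to whichever owner resolves (the rule above says "per AE"). The `RoutedAccount` fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class RoutedAccount:
    account_id: str
    tier: str                          # "A", "B" or "C" from the scoring step
    score: float
    opp_owner: Optional[str] = None
    active_ae: Optional[str] = None
    inbound_owner: Optional[str] = None
    territory_sdr: Optional[str] = None


def resolve_owner(acct: RoutedAccount) -> Tuple[Optional[str], str]:
    """Deterministic ownership order; the second value feeds a reason field."""
    if acct.opp_owner:
        return acct.opp_owner, "open_opportunity"
    if acct.active_ae:
        return acct.active_ae, "active_ae"
    if acct.inbound_owner:
        return acct.inbound_owner, "inbound_lead"
    if acct.territory_sdr:
        return acct.territory_sdr, "territory_sdr"
    return None, "no_owner"  # belongs in the monitored no-owner queue


def route_with_cap(accounts: List[RoutedAccount], tier_a_cap: int = 15) -> List[dict]:
    """Highest scores keep Tier A; anything over the per-owner cap drops to Tier B."""
    tier_a_counts: Dict[str, int] = {}
    routed = []
    for acct in sorted(accounts, key=lambda a: a.score, reverse=True):
        owner, owner_reason = resolve_owner(acct)
        tier, tier_reason = acct.tier, None
        if tier == "A" and owner is not None:
            tier_a_counts[owner] = tier_a_counts.get(owner, 0) + 1
            if tier_a_counts[owner] > tier_a_cap:
                tier, tier_reason = "B", "overflow"
        routed.append({"account_id": acct.account_id, "owner": owner,
                       "owner_reason": owner_reason, "tier": tier,
                       "tier_reason": tier_reason})
    return routed


batch = [
    RoutedAccount("acc_1", "A", 90, active_ae="ae_kim"),
    RoutedAccount("acc_2", "A", 80, active_ae="ae_kim"),
    RoutedAccount("acc_3", "A", 72, active_ae="ae_kim"),
    RoutedAccount("acc_4", "A", 71),  # no owner data at all
]
for row in route_with_cap(batch, tier_a_cap=2):
    print(row)
```

Sorting by score before applying the cap means overflow always hits the weakest Tier A accounts, which keeps the "overflow" reason easy to defend in the weekly review.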

5 ready-to-run plays (signal pattern -> who -> channel -> angle)

| Signal pattern (stacked) | Who to contact | Channel order | Message angle | Disqualifiers (skip if...) |
|---|---|---|---|---|
| Pricing page 2x + ICP fit + topic intent | Economic buyer + champion | Call -> email -> LI/ads | "You're likely comparing options. Here's the 2-minute decision shortcut." | Not ICP / student traffic / reseller |
| New RevOps/VP hire + ICP fit + competitor research | New leader + ops peer | Email -> call -> email | "New leader = new systems. Here's what teams standardize first." | Role is junior / consulting firm |
| Hiring spike in revenue roles + ICP fit + engagement | Hiring manager + ops | Call -> email | "Hiring means process breaks. Here's how teams avoid ramp chaos." | Hiring is internships only |
| Product usage spike (PLG) + ICP fit + admin activity | Admin + power user | In-app -> email -> call | "You've hit the 'needs governance' moment. Here's the upgrade path." | Usage is trial-only noise |
| Tech change detected + ICP fit + intent | Owner of the tool + AE sponsor | Email -> call | "Tool change suggests a project. Want a clean migration plan?" | Tech signal unverified |

Weekly ops review checklist (30 minutes, non-negotiable)

- Tier A volume per rep (are you overloading anyone?)

- SLA breach rate by tier (and top 3 breach reasons)

- Duplicate/ownership conflict count

- Meeting rate by tier (Tier A should win)

- Bounce rate + spam complaints (deliverability is your early warning)

- "No-owner" queue size and aging

Signal plumbing architecture (sources -> warehouse -> reverse ETL -> CRM -> sequences)

Dashboards are where signals go to die. Put the score and the "why" in the CRM record where reps live.

Here's an architecture that scales without heroics: sources (website events, product events, intent provider, enrichment provider, CRM activity) -> collection -> modeling -> reverse ETL -> activation.

Reverse ETL "last mile" (what actually matters)

Reverse ETL is the operational step: take clean warehouse outputs and push them back into Salesforce/HubSpot/Outreach/Salesloft.

A clean pattern:

- Build a warehouse model (SQL) like account_signal_score_daily

- Map fields to CRM properties

- Sync on a schedule (hourly or near-real-time for Tier A)

- Log every sync run with counts (inserted/updated/failed)

Field mapping examples (keep it minimal)

- account_signal_score -> Account: "Signal Score"

- signal_tier -> Account: "Signal Tier"

- top_signal_reason -> Account: "Why now"

- last_signal_at -> Account: "Last Signal Timestamp"

- recommended_playbook -> Task/Lead: "Next Best Action"

If reps need to open BI to see who's hot, you've already lost. The score should sit next to the account name, with a task already created.
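
The mapping and the "log every sync run with counts" rule can be sketched together. This is a minimal sketch, not any reverse ETL vendor's API: `push` is a stand-in for whatever client writes to your CRM, and the `account_id` column and the fake client are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Set

# Warehouse column -> CRM Account property, from the mapping list above.
# recommended_playbook targets a Task/Lead field and would be synced separately.
ACCOUNT_FIELD_MAP = {
    "account_signal_score": "Signal Score",
    "signal_tier": "Signal Tier",
    "top_signal_reason": "Why now",
    "last_signal_at": "Last Signal Timestamp",
}


@dataclass
class SyncRunLog:
    inserted: int = 0
    updated: int = 0
    failed: int = 0


def to_crm_payload(row: Dict) -> Dict:
    return {crm_field: row[column]
            for column, crm_field in ACCOUNT_FIELD_MAP.items() if column in row}


def run_sync(rows: Iterable[Dict], existing_ids: Set[str],
             push: Callable[[str, Dict], None]) -> SyncRunLog:
    """push writes one record and should raise on failure."""
    log = SyncRunLog()
    for row in rows:
        try:
            push(row["account_id"], to_crm_payload(row))
        except Exception:  # count it and keep going; failures are reviewed from the log
            log.failed += 1
            continue
        if row["account_id"] in existing_ids:
            log.updated += 1
        else:
            log.inserted += 1
    return log


def fake_push(account_id: str, payload: Dict) -> None:
    if account_id == "acc_bad":
        raise ConnectionError("CRM rejected the write")


rows = [
    {"account_id": "acc_1", "account_signal_score": 75, "signal_tier": "A"},
    {"account_id": "acc_2", "account_signal_score": 42},
    {"account_id": "acc_bad"},
]
print(run_sync(rows, existing_ids={"acc_1"}, push=fake_push))
# SyncRunLog(inserted=1, updated=1, failed=1)
```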

Failure modes & monitoring (the part that saves your quarter)

Here's the thing: the default state of data pipelines is "quietly broken."

Set alerts on reverse ETL job failure, "0 rows updated" when you expected volume, and auth/token expiration. Run daily diff checks (Tier A count swings, top 10 accounts by score, null-rate for critical fields). Use retries with backoff plus a dead-letter queue, and make someone clear it daily.
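
A minimal sketch of two of these safeguards: retries with exponential backoff plus jitter that park the payload in a dead-letter list after the last attempt, and a daily diff check on Tier A volume and critical-field null rate. The attempt count, delays and alert thresholds are illustrative assumptions, not recommendations from a specific tool.

```python
import random
import time
from typing import Any, Callable, List, Optional, Tuple


def run_with_retries(job: Callable[[Any], Any], payload: Any,
                     dead_letter: List[Tuple[Any, str]], max_attempts: int = 4,
                     base_delay: float = 1.0,
                     sleep: Callable[[float], None] = time.sleep) -> Optional[Any]:
    """Retry with exponential backoff; dead-letter the payload if every attempt fails."""
    for attempt in range(1, max_attempts + 1):
        try:
            return job(payload)
        except Exception as exc:
            if attempt == max_attempts:
                dead_letter.append((payload, repr(exc)))  # someone clears this daily
                return None
            delay = base_delay * 2 ** (attempt - 1)       # 1s, 2s, 4s, ...
            sleep(delay + random.uniform(0, delay / 2))   # jitter avoids synchronized retries
    return None


def daily_diff_check(tier_a_today: int, tier_a_yesterday: int, null_rate: float,
                     max_swing: float = 0.5, max_null_rate: float = 0.05) -> List[str]:
    """Return human-readable alerts; thresholds are illustrative."""
    alerts = []
    if tier_a_today == 0 and tier_a_yesterday > 0:
        alerts.append("0 Tier A rows today: check the sync")
    elif tier_a_yesterday and abs(tier_a_today - tier_a_yesterday) / tier_a_yesterday > max_swing:
        alerts.append(f"Tier A count swung from {tier_a_yesterday} to {tier_a_today}")
    if null_rate > max_null_rate:
        alerts.append(f"Critical-field null rate {null_rate:.0%} exceeds {max_null_rate:.0%}")
    return alerts
```

Passing `sleep` in as a parameter keeps the retry logic testable without real waits; in production you'd leave the default.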

Track four operational KPIs: end-to-end latency (signal -> routed task), lead-to-account match rate, enrichment coverage rate, and SLA breach rate by tier.

Minimum viable signal stack (and common stacks in the wild)

You're assembling a system with four jobs:

- Detect signals

- Score and tier

- Route and enforce SLAs

- Activate with reachable contacts (verified email/mobile) and clean enrichment

Minimum viable stack blueprint (MVSS)

- CRM (Salesforce or HubSpot) as system of record

- One intent layer (topic-based)

- One enrichment/verification layer (protect deliverability)

- One automation layer (routing, tasks, sequences)

- Optional: warehouse + reverse ETL once you outgrow spreadsheets

Teams often see around 20% bounce reduction when they add verification to outbound lists. And if you've already damaged a domain, verification isn't "nice." It's the rebuild plan.

Tool map (job-to-be-done -> examples)

| Job | What "good" means | Example tools |
|---|---|---|
| Verified contacts | Low bounce, fresh | Prospeo, Cognism |
| Prospecting suite | Fast rep workflow | Apollo, ZoomInfo |
| Data orchestration | Custom recipes | Clay |
| Intent/ABM | Topics + orchestration | 6sense, Demandbase |
| AI prioritization | "Who matters now" | Amplemarket |
| Automation | Glue + retries | Make.com, Zapier |
| Destinations | Where reps act | SFDC/HubSpot/Outreach/Salesloft |

Prospeo (verified data + enrichment that keeps signals executable)

Prospeo is "The B2B data platform built for accuracy," and it's the one I'd put closest to activation because it keeps your hottest accounts reachable without trashing deliverability.

You get 300M+ professional profiles, 143M+ verified emails, and 125M+ verified mobile numbers, refreshed on a 7-day cycle (the industry average is about 6 weeks). Email accuracy is 98%, mobile pickup rate is 30%, API match rate is 92%, and enrichment match rate is 83% (meaning most leads you enrich come back with usable contact data), which matters a lot when Tier A routing can't afford to stall on missing fields.

In practice, the workflow's simple: filter by ICP, add an intent topic (15,000 topics, powered by Bombora), enrich into your CRM, and stamp last_verified_at so your routing rules can trust the contact record. That's how you keep "signal-based" from turning into "bounce-based."

Apollo (all-in-one that gets teams moving)

Apollo's the obvious starting point for SMB teams because it's an all-in-one: database, sequencing, and basic filters in one UI. It wins on speed-to-value.

Compared to ZoomInfo, Apollo usually wins on price and usability. ZoomInfo wins on enterprise workflow breadth and certain US coverage pockets.

The tradeoff: Apollo's data quality varies by niche, and you'll feel it in bounce rates if you don't verify. Pricing signal: free tier exists; paid plans typically run around $49-$99/user/month, with higher tiers for more credits and features.

Clay (the data layer for custom signal recipes)

Clay is a data orchestration layer for building custom enrichment and scoring recipes, perfect once you're past "one vendor does it all."

Clay wins on flexibility. You can combine web sources, enrichment providers, and your own rules. The tradeoff is upkeep: workflows get fragile if you don't standardize inputs and monitor failures.

Pricing signal: credit-based, often around $150-$500/month for lighter usage; heavier GTM ops usage commonly lands around $800-$3,000/month depending on volume.

6sense (ABM-grade intent + orchestration)

6sense is a full ABM/intent platform with strong orchestration: account identification, scoring, and workflow tooling.

Official packaging includes a free plan with 50 credits/month. Market reality is enterprise spend, often around $50k-$200k+/year depending on modules, data, and seats.

ZoomInfo (big suite, big bill)

ZoomInfo's the default because it's broad: contact data, intent, website visitors, and a lot of workflow surface area. ZoomInfo Sales sits at 4.5/5 with 8,997 reviews on G2 (snapshot; page updated Mar 2026).

ZoomInfo still wins on "one vendor for many jobs." The downside is you'll pay for modules you don't fully activate.

Pricing signal: often $15k-$45k+/year depending on tier/modules, and renewals often come in 10-20% higher.

Cognism (phones + EU compliance)

Cognism is the phone-data pick when calling's central, especially for Europe. Compliance setup is strong, and teams that live on the dialer tend to stick with it.

Pricing signal: often around $12k-$35k/year depending on seats and regions.

Amplemarket (AI prioritization that's actually useful)

Amplemarket is closer to an "AI lead engine" than most tools with AI in the tagline. The best use is prioritization: helping reps decide who matters right now based on buying signals and engagement.

Pricing signal: often around $600-$1,500/user/month depending on features and volume.

Tier 3 quick mentions (useful, but not your core system)

Skip these if you're still fighting basic routing and data hygiene. You'll just add cost and confusion.

- Bombora: classic topic intent provider; great as a layer, not a full workflow. Typical range: $20k-$80k/year.

- Demandbase: ABM platform with fit/intent/engagement framing that's operationally solid. Typical range: $40k-$150k+/year.

- Hunter: simple email finder for quick lookups. Typical range: $49-$199/month.

- Pocus: strong for product-led signals and RevOps ownership of "who's activated." Typical range: $500-$2,000/month.

- Make.com: automation glue when you need branching logic and retries. Typical range: $10-$99/month.

- Zapier: quick automations when you don't need heavy branching. Typical range: $20-$100+/month.

- Salesforce/HubSpot/Outreach/Salesloft: destinations where signals must land as fields, tasks, and sequences. Typical ranges vary widely by plan.

Where AI helps (and where it fails)

AI is useful in signal-based selling, but not where most teams try to use it.

A clean way to think about it:

- Augmented: AI helps you do a task faster (summarize, draft, classify)

- Assisted: AI recommends next steps (prioritize, suggest playbooks)

- Autonomous: AI executes end-to-end (route, enrich, launch plays)

Most teams should live in augmented + assisted.

AI jobs that actually work

- Signal extraction: turn messy inputs (news, job posts, site events) into structured fields like "Hiring spike: SDR"

- Reason generation: create a short "why now" sentence for the CRM

- Playbook selection: map tier + signal pattern to a recommended sequence

- De-duplication support: flag likely duplicates and conflicting owners

- Personalization assist: draft a first line based on the top 1-2 signals, not a novel
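
Playbook selection doesn't have to start with a model. A transparent, rule-based baseline (a plainly swapped-in technique here, not an AI method) maps tier + stacked pattern to a play using first-match rules Sales can read. The signal and play names are illustrative and only loosely follow the five-plays table; an LLM could still draft the "why now" line on top of whatever play the rules pick.

```python
from typing import FrozenSet, List, Optional, Set, Tuple

# Required (stacked) signals per play; ICP fit is checked separately.
PLAYS: List[Tuple[str, FrozenSet[str]]] = [
    ("Pricing+Intent", frozenset({"pricing_page_2x", "topic_intent"})),
    ("New Leader", frozenset({"new_leader_hire", "competitor_research"})),
    ("Hiring Spike", frozenset({"revenue_hiring_spike", "engagement"})),
    ("PLG Upgrade", frozenset({"usage_spike", "admin_activity"})),
    ("Tech Change", frozenset({"tech_change_verified", "topic_intent"})),
]


def select_playbook(tier: str, icp_fit: bool, signals: Set[str]) -> Optional[str]:
    """First matching play wins, so list order encodes priority."""
    if not icp_fit:
        return None
    if tier == "C":
        return "nurture"
    if tier not in ("A", "B"):
        return None
    for play, required in PLAYS:
        if required <= signals:  # every required signal is present
            return play
    return "default_sequence"


print(select_playbook("A", True, {"pricing_page_2x", "topic_intent", "webinar"}))  # Pricing+Intent
```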

Where it fails (and why)

In practice, AI fails when the underlying data is stale or unverified, the system can't write back into CRM/SEP cleanly, or you ask it to "decide" without transparent rules (Sales won't trust it).

I'm opinionated here: if an agentic SDR tool can't reliably write to your routing fields and CRM objects, it's not an SDR. It's a demo.

Signal-Based Selling AI KPIs (prove pipeline impact)

If you can't prove impact, your signal program turns into a "cool project" that gets cut at renewal.

Here's the KPI set that tells you whether it's working:

| KPI | Definition / formula | What "good" looks like |
|---|---|---|
| Time-to-first-touch (Tier A) | first_touch_at - signal_created_at (business hours) | Median < 60 minutes |
| SLA breach rate | % of routed records where first_touch_at > sla_due_at | Tier A < 15% breached |
| Tier A meeting rate | meetings booked / Tier A routed | Tier A beats Tier B by a lot |
| Opp creation rate by tier | opps created / routed per tier | Tier A highest; Tier C lowest |
| SDR acceptance rate | accepted / assigned (for SDR-owned tiers) | >80% (or your routing's junk) |
| Lead-to-account match rate | % of leads/events mapped to correct account | >85% |
| Enrichment coverage | % routed with required contact fields present | >90% for Tier A |
| Bounce rate | bounces / emails sent | <3-5% (lower is better) |
| Spam complaint rate | complaints / delivered | As close to 0 as possible |
| Duplicate rate | % routed that are duplicates/conflicts | <2-5% |
| Pipeline influenced | Pipeline where an opp had a signal event within X days pre-creation | Track trend; define X (often 14-30 days) |
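
A minimal sketch of computing the first three KPIs from routed records. It assumes each record carries tier, signal_created_at, first_touch_at (None if untouched), sla_due_at and meeting_booked; it uses wall-clock minutes rather than business hours, and it counts a record that is still untouched after its due time as breached, which is an assumption the table's formula leaves open.

```python
from datetime import datetime, timedelta
from statistics import median
from typing import Dict, List, Optional


def tier_kpis(records: List[dict], now: datetime) -> Dict[str, Optional[float]]:
    """Median Tier A time-to-first-touch plus SLA breach and meeting rates per tier."""
    kpis: Dict[str, Optional[float]] = {}
    tier_a_minutes = [
        (r["first_touch_at"] - r["signal_created_at"]).total_seconds() / 60
        for r in records
        if r["tier"] == "A" and r["first_touch_at"] is not None
    ]
    kpis["tier_a_median_minutes_to_first_touch"] = median(tier_a_minutes) if tier_a_minutes else None

    for tier in ("A", "B", "C"):
        rows = [r for r in records if r["tier"] == tier]
        if not rows:
            continue
        # Touched late, or still untouched after the due time, counts as a breach.
        breached = sum(
            1 for r in rows
            if (r["first_touch_at"] if r["first_touch_at"] is not None else now) > r["sla_due_at"]
        )
        kpis[f"{tier}_sla_breach_rate"] = breached / len(rows)
        kpis[f"{tier}_meeting_rate"] = sum(r["meeting_booked"] for r in rows) / len(rows)
    return kpis


t0 = datetime(2026, 3, 2, 9, 0)
records = [
    {"tier": "A", "signal_created_at": t0, "first_touch_at": t0 + timedelta(minutes=30),
     "sla_due_at": t0 + timedelta(minutes=60), "meeting_booked": True},
    {"tier": "A", "signal_created_at": t0, "first_touch_at": t0 + timedelta(minutes=90),
     "sla_due_at": t0 + timedelta(minutes=60), "meeting_booked": False},
    {"tier": "B", "signal_created_at": t0, "first_touch_at": None,
     "sla_due_at": t0 + timedelta(hours=24), "meeting_booked": False},
]
print(tier_kpis(records, now=t0 + timedelta(hours=2)))
```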

Two opinions that save time:

- If Tier A meeting rate isn't clearly better than Tier B, your Tier A definition's wrong (or you're too slow).

- If enrichment coverage is low, stop tuning scoring. You're optimizing a system that can't reach anyone.

Data quality, freshness, and compliance (visitor ID reality included)

Signal-based selling gets creepy fast if you're sloppy. The goal is "helpful and timely," not "we're watching you."

Data quality + freshness checklist

- Define your source of truth for each field (don't let five tools overwrite job title)

- Track last_verified_at timestamps for email and mobile

- Enforce recency windows and decay (stale signals create false positives)

- Monitor bounce rates and spam complaints weekly (deliverability is your early warning)

Visitor ID reality (what's real, what's hype)

- 97% of visitors leave without converting (no form fill)

- Fingerprinting can identify returning visitors with up to ~90% accuracy in best-case setups

- IP-based identification is weaker now (remote work, shared networks, VPNs)

- Third-party cookies are increasingly blocked or restricted by browsers, so "perfect attribution" is a fantasy

That fingerprinting number is best-case for returning visitors. Treat visitor ID as a directional account signal, not a license to pretend you know the exact person.

GDPR/CCPA best practices (simple rules)

- Disclose what you collect and why, in plain language

- Offer opt-out and honor it globally

- Minimize retention: keep what you need, delete what you don't

- Restrict access internally (need-to-know)

- Document vendors, DPAs, and deletion workflows

Over-personalization is how teams blow up trust. Don't say "I saw you visited pricing at 2:13pm." Say "Teams usually evaluate X when they're hiring SDRs."

Budget reality (what teams actually pay for intent + data)

Signal-based selling AI isn't expensive because of "AI." It's expensive because you're paying for data coverage and packaging.

Pricing sidebar (realistic ranges)

| Tool | What you're buying | Typical cost |
|---|---|---|
| ZoomInfo | Data + intent tiers | $15k-$45k+/yr (typical market range) |
| 6sense | ABM + intent suite | Free plan (50 credits/mo) + $50k-$200k+/yr (typical enterprise spend) |
| Bombora | Topic intent layer | $20k-$80k/yr (typical market range) |
| Prospeo | Verified data + intent | Free tier -> usage-based (~$0.01/email; mobiles use credits) |

One more opinion: if a vendor can't give you a clear pricing shape, you'll also struggle to get clear operational ownership. Signal programs die in ambiguity.

What practitioners complain about (and they're right)

- "We bought intent and got alert fatigue. Nothing changed in rep workflow."

- "Data quality is inconsistent by niche, so reps stop trusting the score."

- "The stack is fragile. One broken sync and routing silently stops."

The fix is always the same: fewer signals, stricter tiers, and monitoring that catches failures before Sales does.

A worked example: from signal -> routed task -> meeting (end-to-end)

Here's what "good" looks like in one flow.

Trigger pattern (stacked):

- ICP fit = true (industry + headcount + geo)

- Topic intent surge on your category (last 7 days)

- Pricing page viewed twice (last 72 hours)

- New RevOps hire (last 30 days)

Scoring result: 15 (fit boost) + 15 (intent) + 30 (high intent engagement) + 15 (timing) = 75 -> Tier A

Routing:

- Account matched (lead-to-account match succeeds)

- Owner resolved (AE owns account; SDR is paired)

- Task created with recommended_playbook = "Pricing+Intent+New Leader"

- SLA due in 60 minutes (business hours)

Enrichment + contact selection:

- Pull 2-3 contacts: economic buyer, ops owner, likely champion

- Verify email + mobile, stamp last_verified_at

- If enrichment coverage fails, auto-fallback: route to Tier B and create a "missing contact" task (don't pretend it's Tier A)
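
The fallback rule can be sketched as a last check before the Tier A task goes out. Two thresholds here are hypothetical: a contact counts as reachable only if it has an email or mobile verified within 30 days, and Tier A needs at least 2 reachable contacts (the low end of "pull 2-3 contacts").

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

MAX_VERIFICATION_AGE = timedelta(days=30)  # hypothetical freshness rule for last_verified_at
MIN_REACHABLE_CONTACTS = 2                 # assumed floor for Tier A


@dataclass
class Contact:
    role: str                          # e.g. "economic_buyer", "ops_owner", "champion"
    email: Optional[str]
    mobile: Optional[str]
    last_verified_at: Optional[datetime]


def is_reachable(contact: Contact, now: datetime) -> bool:
    verified_recently = (contact.last_verified_at is not None
                         and now - contact.last_verified_at <= MAX_VERIFICATION_AGE)
    return verified_recently and (contact.email is not None or contact.mobile is not None)


def finalize_tier(tier: str, contacts: List[Contact], now: datetime) -> Tuple[str, Optional[str]]:
    """Return (tier, extra_task); Tier A without enough reachable contacts drops to Tier B."""
    reachable = [c for c in contacts if is_reachable(c, now)]
    if tier == "A" and len(reachable) < MIN_REACHABLE_CONTACTS:
        return "B", "missing contact"
    return tier, None


now = datetime(2026, 3, 2, 12, 0)
buyer = Contact("economic_buyer", "buyer@example.com", None, now - timedelta(days=3))
stale = Contact("champion", "champ@example.com", None, now - timedelta(days=90))
print(finalize_tier("A", [buyer, stale], now))  # ('B', 'missing contact')
```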

Outreach (human, not creepy):

- Call the ops owner first (fastest path to context)

- Email angle: "New RevOps leader + evaluation behavior usually means a tooling review. Want the 2-minute checklist teams use to pick X without a 6-week bake-off?"

Measurement:

- Log first_touch_at automatically

- Track meeting booked within 7 days as Tier A meeting rate

- If SLA breached, it shows up in the weekly ops review with a reason code

That's signal-based selling AI: not "we saw something," but "we did something, fast, and we can prove it."

Next steps (what to do Monday)

Week 1: pick 15 signals, define fit gating, and write your Tier A/B/C definitions on one page. If you can't explain Tier A in 20 seconds, it's too complex.

Week 2: implement the scoring table + decay and backtest against closed-won. Adjust weights until Tier A clearly beats Tier B on meeting rate.

Week 3: build routing with timestamps, a no-owner queue, and an escalation rule for Tier A breaches. Cap Tier A volume so reps can execute.

Week 4: add monitoring (sync failures, diff checks, dead-letter queue) and ship a KPI dashboard. Then run the 30-minute weekly ops review until the system's boring and reliable.

FAQ

What's the difference between signal-based selling and lead scoring?

Signal-based selling is an end-to-end system that turns signals into routed actions with SLAs, while lead scoring is usually a static points model that lives in a dashboard. Signal-based selling includes fit gating, multi-signal stacking, decay, routing tiers, and playbooks so reps act fast instead of just seeing "hot leads."

What are the best "high-intent" signals to start with in 2026?

Start with signals that imply evaluation or purchase: pricing/demo page views, quote requests, webinar attendance, competitor comparisons, and topic intent surges, then stack them with fit and timing. Funding, hiring for revenue roles, and senior job changes are strong timing multipliers when they're recent and ICP-aligned.

How fast do you need to respond for signals to matter?

Tier A response needs to land inside 60 minutes. That's the difference between "in the conversation" and "too late."

How do you prevent false positives and alert fatigue?

Fit gate first, require at least two signal categories before Tier A, and apply score decay so stale behavior stops looking "hot." Alert fatigue disappears when signals create CRM tasks, sequences, and owners, because reps see actions, not noise.

What tool do you use to activate signals with verified contact data?

Use a verified data and enrichment layer to turn in-market accounts into reachable contacts quickly so your signal playbooks don't die on bounce rates or missing fields.

As the decision tree above says: if your bounce rate's above 5%, verify before outreach. Prospeo returns 50+ data points per contact at a 92% match rate (emails, direct dials, job changes), so your routed plays hit real inboxes, not spam traps.

Stop routing hot signals to dead email addresses.

Summary

Signal-based selling AI works when it's an execution system: fit-gated signals, stacked patterns, simple scoring with decay, strict routing tiers, and SLAs you actually measure.

Keep the program small (15 signals, three tiers), push "why now" into the CRM, and use verified enrichment so your hottest accounts are also reachable.