Ideal Customer: Definition, ICP vs Persona, and How to Validate It

Most teams don't have an ideal customer problem. They've got a focus problem.

They try to sell to everyone who could buy, instead of the smaller set of customers who buy fast, stick around, and expand. Once you see that difference in your cohorts, it's hard to unsee.

Here's the thing: "ideal customer" isn't a vibe. It's a measurable bet.

What you need (quick version)

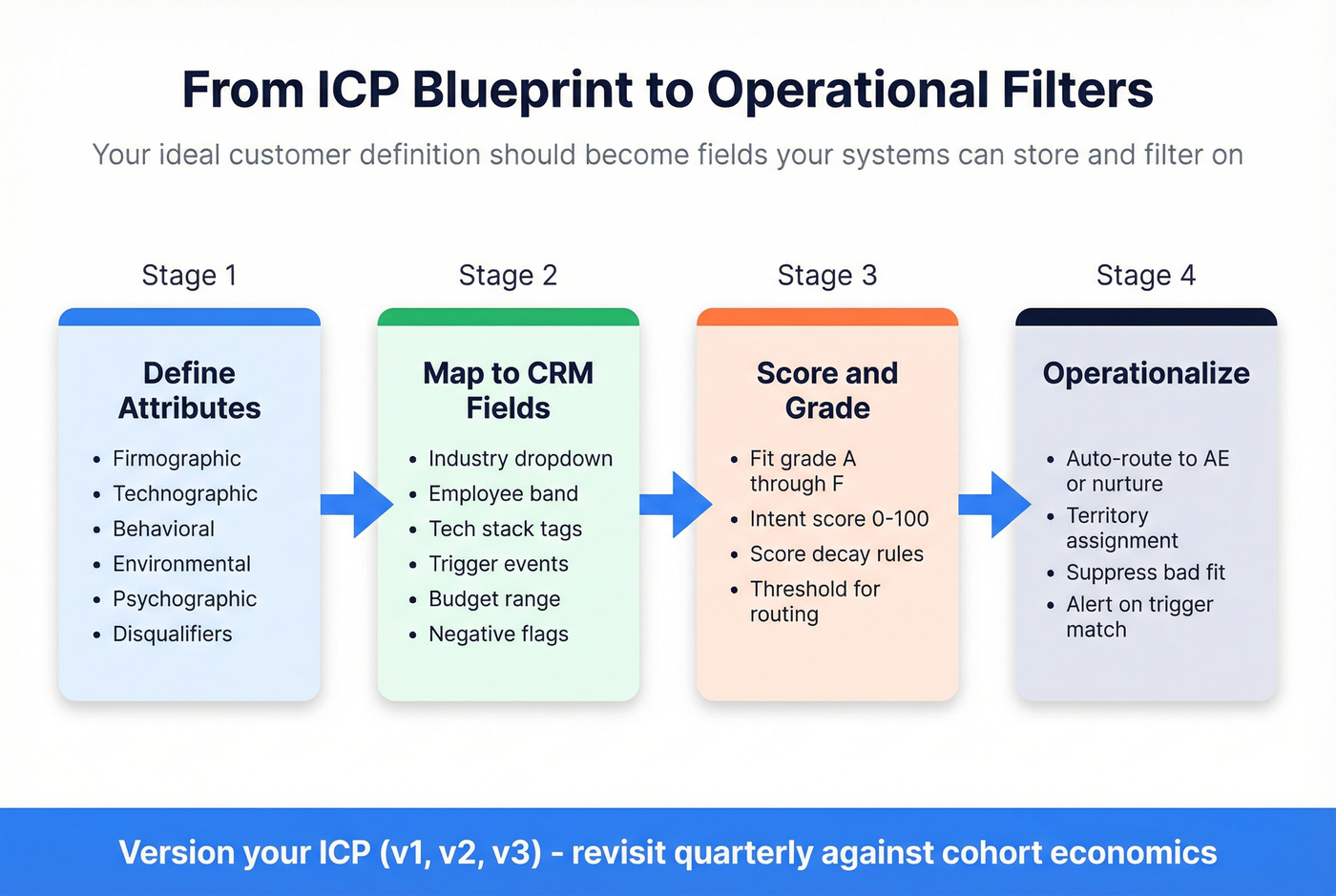

- Write down your ideal customer as a hypothesis, not a belief. Put it in a one-pager with fields you can store in your CRM (industry, employee band, tech must-haves, triggers) and a version number (ICP v1). The output should be something RevOps can turn into filters and lifecycle rules, not a narrative doc.

- Do ICP first, persona second. Qualify the company before you obsess over messaging to a role. Salesforce reports 86% of business buyers are more likely to buy when providers understand their goals. Persona work pays off after you've narrowed to the right accounts.

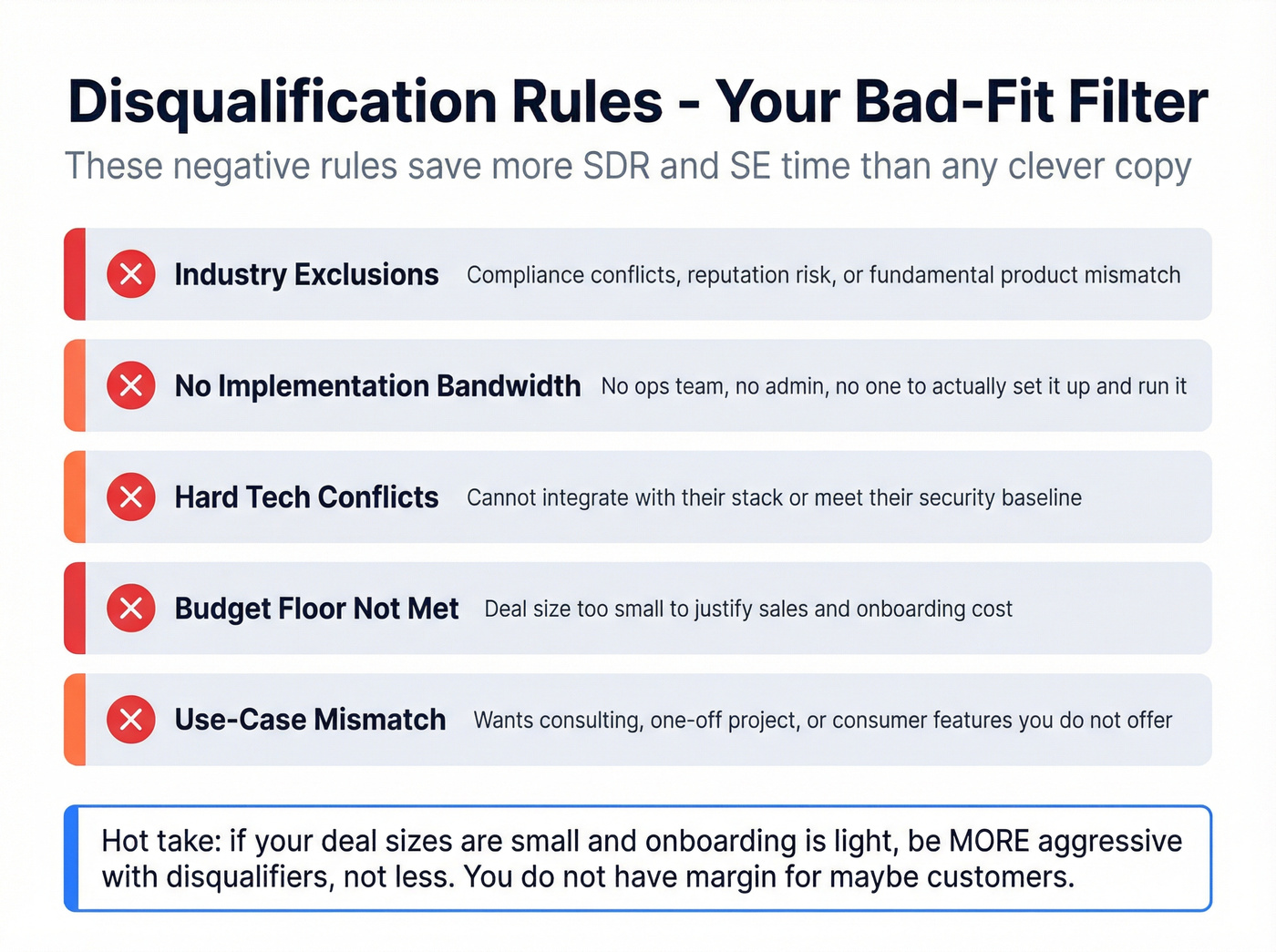

- Make disqualification criteria a first-class output. "Who we're not for" should become explicit negative rules in routing/scoring (e.g., Industry = staffing -> auto-nurture, No SOC2 path -> suppress). This one move saves more SDR and SE time than any clever copy.

- Treat B2B as a buying-group problem. Your ideal customer isn't "a champion." It's an account where the buying group can actually say yes: budget owner, security/IT, legal/procurement, and the team that will implement.

- Validate with economics (cohorts, not vibes). Slice retention, expansion, CAC payback, and NRR/GRR by ICP attributes. A "cohort slice" is simply the set of customers acquired in the same period who match a specific ICP rule; you then compare slices against each other.

- Operationalize it with fit + intent + decay. Use a fit grade (A-F) and an intent score (0-100), then add score decay so last month's curiosity doesn't clog today's pipeline. Decay keeps your CRM from treating stale engagement like a hot lead.

Those bullets are the principles. The next list is the minimum execution plan: the smallest set of actions that forces clarity and produces a usable output.

If you do only three things this week:

- Set 5-8 disqualification rules (industry exclusions, tech constraints, budget floor, compliance blockers).

- Run 12 interviews (6 wins, 6 churned/lost) and extract triggers + "switch" language.

- Ship a simple model: fit grade (A-F) + intent score (0-100) and route anything under your threshold to nurture.

Ideal customer definition (and how it differs from ICP and persona)

If you're looking for the ideal customer meaning in plain language: it's the customer you want more of because the relationship works. They get value quickly, they keep paying, and they're not a constant support fire drill.

In B2C, "ideal customer" usually means a person (or household) with a repeatable need and predictable buying behavior. In B2B, it's usually shorthand for an ICP: the type of company that's a great fit.

Salesforce's definition of an ICP is a clean way to say it: a detailed description of a company that's a perfect fit and most likely to become a paying, successful customer. The key word is detailed. A range like "100-700 employees" is so wide it's almost useless, because 101 employees and 699 employees don't buy the same way, don't implement the same way, and don't churn for the same reasons.

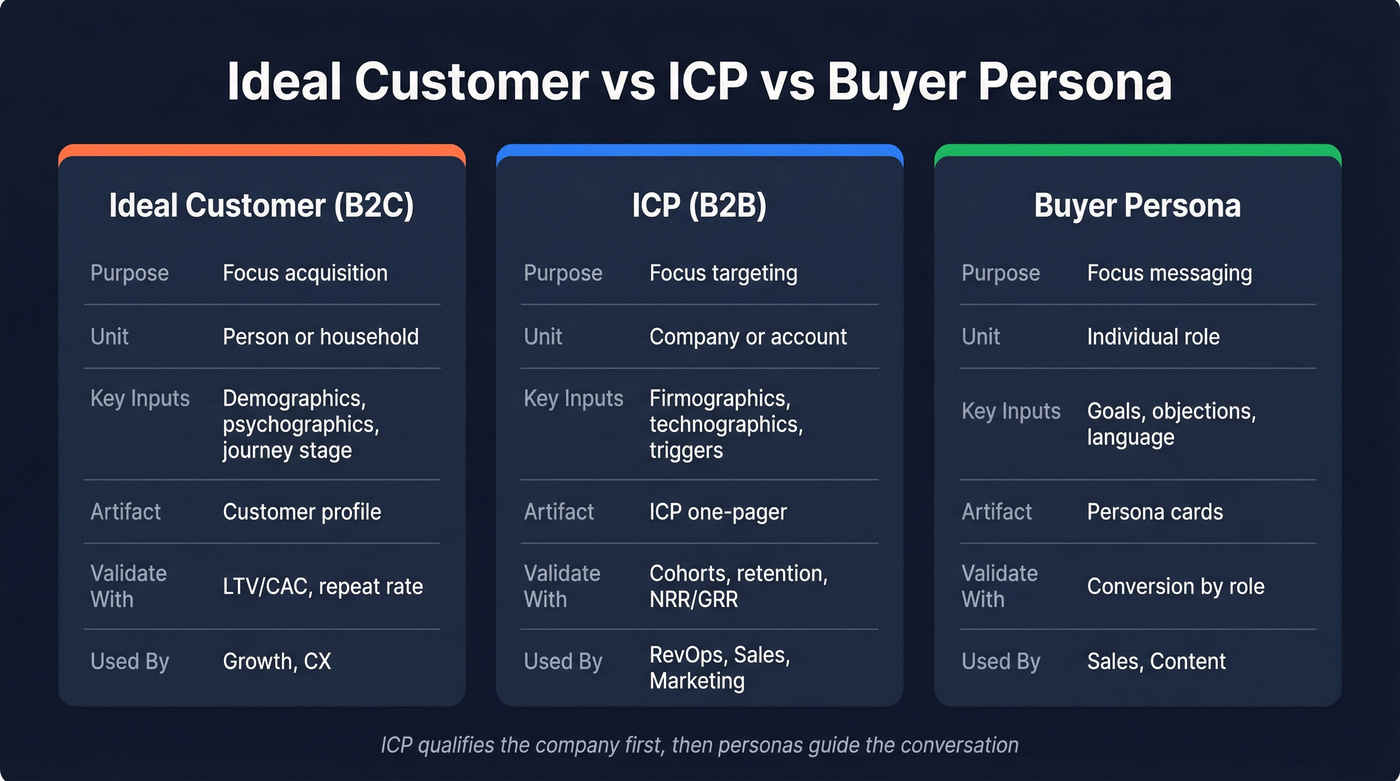

HubSpot's taxonomy is the one we use with exec teams because it ends the "persona vs ICP" argument fast:

- ICP decides which companies qualify.

- Persona decides how you talk to people inside those companies.

Here's the mini glossary I wish every org had in their wiki:

- Ideal customer (B2C): the person you can acquire profitably and retain, with a clear "why now."

- ICP (B2B): the company profile that predicts success (value realized + retention + expansion).

- Buyer persona: the role-based archetype inside the ICP (goals, objections, language, influence).

Ideal customer vs ICP vs persona (quick table)

| | Ideal customer (B2C) | ICP (B2B) | Buyer persona |
|---|---|---|---|
| Purpose | Focus acquisition | Focus targeting | Focus messaging |
| Unit | Person/household | Company/account | Individual role |
| Inputs | Demographic, psychographic, journey stage | Firmographic, technographic, triggers | Goals, objections, language |
| Artifact | Customer profile | ICP one-pager | Persona cards |
| Validate | LTV/CAC, repeat rate | Cohorts, retention, NRR/GRR | Conversion by role |
| Used by | Growth, CX | RevOps, sales, marketing | Sales, content |

The ideal customer blueprint (what to include + what to exclude)

The fastest way to make your "ideal customer" useful is to turn it into fields your systems can actually store and filter on, so your definition becomes operational, not aspirational.

Salesforce's component categories are a solid backbone: demographic, geographic, psychographic, behavioral, technographic, and firmographic. It also calls out environmental characteristics like industry trends, economic conditions, geography, and regulatory factors. I'd add one more category that most teams forget until it hurts: disqualification criteria.

What to include (and how it becomes usable)

| Category | B2C examples | B2B examples | "Usable" output |
|---|---|---|---|
| Demographic | age, income | job-level mix | CRM/form fields |
| Geographic | region, language | country, region | territory rules |
| Psychographic | values, motives | risk tolerance | messaging angles |
| Behavioral | usage, repeat | site/product events | scoring events |
| Firmographic | - | industry, size | account filters |
| Technographic | devices/channels | stack, tools | tech filters |
| Environmental | - | regulation, economy | routing notes |
| Disqualifiers | low intent | no budget, hard conflicts | negative rules |

Ideal customer attributes (B2C)

For B2C, you're usually building a customer profile that blends who they are with how they buy.

Zendesk's persona template types are a good mental model: demographic-based, psychographic, technographic, role-based, and customer-journey-based. The journey-based piece is where most B2C "ideal customer" docs get real: what triggers awareness, what causes consideration, what blocks purchase, and what drives repeat.

Checklist (B2C fields that actually matter):

- Primary job-to-be-done (what they're trying to accomplish)

- Trigger events ("why now?")

- Preferred channels and devices

- Price sensitivity and decision style (fast vs research-heavy)

- Retention drivers (habit, community, switching cost)

- Top 3 objections in their own words

ICP fields (B2B)

B2B ICPs fail when they're written like a vibe: "mid-market SaaS, modern stack, growth-minded." That's not filterable, routable, or scoreable.

What works is turning the ICP into constraints and signals:

- Firmographics: industry, employee band (tight), revenue band, geo, ownership (PE-backed vs founder-led), growth stage.

- Technographics: must-have systems (CRM, data warehouse, security stack), "can't-have" tech (hard incompatibilities).

- Triggers: hiring spikes, funding, new exec, compliance deadline, tool migration, job posts mentioning your category.

- Constraints: data residency, procurement requirements, security review depth, implementation resources.

- Behavioral fit: the workflows they already run (outbound motion, PLG, channel-led, etc.).

Broad ranges fail in practice. "100-700 employees" is the classic self-inflicted wound: you'll end up with SDRs calling companies that need entirely different onboarding, pricing, stakeholder mapping, and success plans.
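To make "constraints and signals" concrete, here's a minimal sketch of an ICP stored as a versioned record with a match check. The class name, field names, and every example value (industries, the 200-500 band, the tools) are assumptions for illustration, not a prescribed schema.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ICP:
    """A versioned ICP hypothesis expressed as filterable hard constraints."""
    version: str
    industries: frozenset
    employee_min: int
    employee_max: int
    required_tech: frozenset
    blocked_tech: frozenset = field(default_factory=frozenset)

    def matches(self, account: dict) -> bool:
        """Return True only when the account satisfies every constraint."""
        tech = set(account.get("tech", []))
        return (
            account.get("industry") in self.industries
            and self.employee_min <= account.get("employees", 0) <= self.employee_max
            and self.required_tech <= tech          # all must-have tools present
            and not (self.blocked_tech & tech)      # no hard incompatibilities
        )


# Hypothetical ICP v1; replace every value with your own.
icp_v1 = ICP(
    version="ICP v1",
    industries=frozenset({"fintech", "saas"}),
    employee_min=200,
    employee_max=500,
    required_tech=frozenset({"salesforce"}),
    blocked_tech=frozenset({"legacy_onprem_crm"}),
)

in_band = {"industry": "fintech", "employees": 320, "tech": ["salesforce", "snowflake"]}
too_big = {"industry": "fintech", "employees": 700, "tech": ["salesforce"]}
print(icp_v1.matches(in_band), icp_v1.matches(too_big))
```

Triggers are left out of the match on purpose in this sketch: they behave more like timing signals than hard constraints, so they fit better in scoring than in a yes/no filter.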

Disqualification rules

Real talk: bad-fit pipeline costs you twice.

First, you pay to acquire and work it (ads, SDR time, SE time). Then you pay again when it churns, refunds, or drags your team into custom work that doesn't scale.

Start your disqualification list with negatives that are easy to detect:

- Industry exclusions (compliance, reputation, or product mismatch)

- Minimum capability (no ops team, no admin, no implementation bandwidth)

- Hard tech conflicts (can't integrate, can't meet security baseline)

- Budget floor (or procurement reality: "must be on an approved vendor list")

- Use-case mismatch ("wants consulting," "wants one-off project," "wants consumer features")

Hot take: if your deal sizes are smaller and onboarding is light, you should be more aggressive with disqualifiers, not less. You don't have margin for "maybe" customers.
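Negative rules are easiest to maintain when they live in one list that routing reads from. The sketch below is one way to do that. The staffing and SOC2 rules mirror the examples earlier in this article, while the field names and the $10,000 budget floor are assumptions.

```python
# Each rule is (name, predicate, action). Field names and the budget floor are hypothetical.
DISQUALIFIERS = [
    ("excluded_industry", lambda a: a.get("industry") == "staffing", "nurture"),
    ("no_soc2_path", lambda a: not a.get("soc2_path", False), "suppress"),
    ("below_budget_floor", lambda a: a.get("annual_budget", 0) < 10_000, "nurture"),
]


def disqualify(account: dict) -> list:
    """Return (rule_name, action) for every negative rule the account trips."""
    return [(name, action) for name, pred, action in DISQUALIFIERS if pred(account)]


def route(account: dict) -> str:
    """Suppress beats nurture; accounts that trip no rule go to sales."""
    actions = {action for _, action in disqualify(account)}
    if "suppress" in actions:
        return "suppress"
    if "nurture" in actions:
        return "nurture"
    return "sales"


print(route({"industry": "staffing", "soc2_path": True, "annual_budget": 50_000}))
print(route({"industry": "saas", "soc2_path": False, "annual_budget": 50_000}))
print(route({"industry": "saas", "soc2_path": True, "annual_budget": 50_000}))
```

Keeping the rule name in the output matters: when a rep asks why an account was suppressed, you can point at the rule instead of debating the account.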

In B2B, your ideal customer is a buying group (not a person)

If you're selling B2B and your "ideal customer" doc describes one person, you're setting yourself up for stalled deals.

Forrester's buying data is blunt: 86% of purchases stall, the average deal involves 13 stakeholders, and 89% of purchases span multiple departments. Forrester also found 81% of buyers are dissatisfied with at least one purchase, which is exactly why "fit" has to include implementation and procurement reality, not just interest.

One more shift that matters in 2026: Forrester found ~95% of buyers expect to use genAI in their buying process. That changes how "intent" shows up (more research, more comparison, more anonymous evaluation), and it's why your scoring needs freshness and decay.

Why deals stall even with "good leads"

The most common failure mode is the champion trap.

You find a motivated user, they love the demo, they say "send me pricing," and then... nothing. Not because the product's wrong, but because you never mapped the people who can kill the deal: finance, security, legal, IT, or the exec who owns the budget.

I've watched teams celebrate a monster month of "pipeline created" and then spend the next 60 days arguing about why nothing's moving. The answer's usually boring: they let bad-fit accounts in the front door, and the buying group reality did the rest.

Pipeline volume isn't the goal. SQLs, opportunities, closed-won, and retained revenue are.

Default 10-role buying committee map

Traction Complete's role list is a practical default for most B2B motions:

- Project Sponsor

- Champion

- Executive Sponsor

- Financial Approver

- Technical Buyer

- Ops/Process Owner

- Business User

- Legal Reviewer

- Influencer

- Final Authority

You won't see all ten in every deal. But if you only ever talk to one, you're basically hoping the org chart cooperates.

Decider / payer / user separation

MarTech's framework is simple and useful: separate deciders (choose), payers (write the check), and users (use the product). One person rarely holds all three roles.

The "wrong customer" failure mode is when you optimize for the user and ignore the payer. You'll win pilots and lose procurement. Or you'll win deals that churn because the decider wanted a feature checklist, not an outcome.

Validate your ideal customer with economics (cohorts, not vibes)

Your ideal customer isn't the segment that likes your pitch. It's the segment that produces durable unit economics.

That means cohorts.

A segment is a snapshot ("companies 200-500 employees in fintech"). A cohort is a time series ("customers acquired in January who match X - how did they retain and expand over 12 months?"). Northbeam's line - segment is snapshot, cohort is time series - is the cleanest way to keep yourself honest.

The validation loop

Use this loop until your ICP stops moving every quarter:

- Hypothesis: write your ICP v1 (fields + disqualifiers) so sales, marketing, and CS can agree on the ideal customer in operational terms.

- Test: acquire and sell into it for a defined window.

- Cohort compare: retention, expansion, payback by ICP slice.

- Update ICP: tighten ranges, add triggers, add disqualifiers.

- Repeat.

The "best" ICP often looks smaller on paper after validation. That's not a problem. Precision beats volume.

How to run a simple cohort check

You don't need fancy tooling to start. You need exports and a grid.

Pull a dataset with:

- Customer ID / account ID

- Acquisition month (signup date or first invoice date)

- ICP fields (industry, size band, geo, tech flags, trigger flags)

- Revenue by month (or invoices)

- Churn date (if applicable)

- CAC proxy (at least by channel or campaign)

Then build a retention grid (triangle chart):

- Rows = acquisition month

- Columns = month age (M0, M1, M2...)

- Cells = % retained or revenue per starting customer

Now slice it by ICP attributes. You're looking for:

- Early retention cliffs (onboarding mismatch)

- Cohorts that expand vs plateau

- One channel that looks good on leads but bad on payback
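If you want to see the grid before opening a spreadsheet, here's a small standard-library sketch. It assumes months are stored as integer indexes and that each customer record carries an `acquired` month, a `churned` month (or `None`), and one ICP attribute called `size_band`. All of those names and the sample data are hypothetical. It also ignores the "triangle" edge, so it treats every cohort as fully observed for the ages you ask for.

```python
from collections import defaultdict


def retention_grid(customers: list, max_age: int) -> dict:
    """Rows = acquisition month, columns = month age (M0..Mmax), cells = share retained.

    A customer counts as retained at age k if it has not churned by month acquired + k.
    """
    cohorts = defaultdict(list)
    for c in customers:
        cohorts[c["acquired"]].append(c)

    grid = {}
    for month, members in sorted(cohorts.items()):
        row = []
        for age in range(max_age + 1):
            alive = sum(
                1 for c in members
                if c["churned"] is None or c["churned"] > month + age
            )
            row.append(round(alive / len(members), 2))
        grid[month] = row
    return grid


# Hypothetical export: four customers acquired in month 0.
customers = [
    {"acquired": 0, "churned": None, "size_band": "200-500"},
    {"acquired": 0, "churned": 2, "size_band": "200-500"},
    {"acquired": 0, "churned": 1, "size_band": "50-199"},
    {"acquired": 0, "churned": 1, "size_band": "50-199"},
]

# Slice by an ICP attribute, then compare the grids side by side.
in_icp = [c for c in customers if c["size_band"] == "200-500"]
out_icp = [c for c in customers if c["size_band"] != "200-500"]
print(retention_grid(in_icp, 3))
print(retention_grid(out_icp, 3))
```

In this toy data the out-of-band slice falls off a cliff at M1, which is the "onboarding mismatch" pattern described above.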

Glen Coyne's cohort LTV example is the punchline: an "Early Adopter" cohort at ARPA $300 with 2% churn yields $15,000 LTV, while a "Scale" cohort at ARPA $150 with 8% churn yields $1,875 LTV. Same product, wildly different reality, and the only reason you see it is because you forced the data into a time series instead of arguing about anecdotes.

Worked example: two ICP slices -> one clear decision

Below is what "cohort validation" looks like when it actually changes your go-to-market.

Assume a simple monthly model (good enough to make decisions):

- LTV (rough) ~= ARPA ÷ monthly churn

- Payback ~= CAC ÷ gross margin dollars per month (use your real margin)

| ICP slice (example) | ARPA (monthly) | Monthly churn | Rough LTV | CAC (blended) | What you do next |
|---|---|---|---|---|---|
| Slice A: "Early Adopter" (tight band + clear trigger) | $300 | 2% | $15,000 | $1,200 | Grade A. Route to SDR + AE fast lane, allow higher bids, prioritize multi-threading. |
| Slice B: "Scale" (broader band, weaker trigger) | $150 | 8% | $1,875 | $900 | Grade C. Keep in nurture/PLG, require stronger intent to hit SQL, don't spend SE time early. |

What changed?

- You stop arguing about "who feels excited on calls" and start optimizing for payback + retention.

- You tighten the ICP around the attributes that correlate with Slice A (specific triggers, tighter employee band, required systems).

- You update lead lifecycle stages so A-fit accounts can become SAL/SQL with less friction, while C-fit accounts need more proof (higher intent score, stronger use case, or inbound hand-raise).
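The arithmetic behind the two slices is simple enough to script, which makes it easy to rerun when the inputs change. This sketch uses the ARPA, churn, and CAC figures from the table. The 80% gross margin is an assumption added only so payback can be computed, so swap in your real margin.

```python
def rough_ltv(arpa: float, monthly_churn: float) -> float:
    """Rough LTV = ARPA / monthly churn."""
    return arpa / monthly_churn


def payback_months(cac: float, arpa: float, gross_margin: float) -> float:
    """Payback = CAC / gross margin dollars per month."""
    return cac / (arpa * gross_margin)


GROSS_MARGIN = 0.80  # assumption, not from the worked example

slices = {
    "A: Early Adopter": {"arpa": 300, "churn": 0.02, "cac": 1200},
    "B: Scale": {"arpa": 150, "churn": 0.08, "cac": 900},
}

for name, s in slices.items():
    ltv = rough_ltv(s["arpa"], s["churn"])
    payback = payback_months(s["cac"], s["arpa"], GROSS_MARGIN)
    print(f"{name}: LTV ${ltv:,.0f}, LTV/CAC {ltv / s['cac']:.1f}, payback {payback:.1f} months")
```

Under that margin assumption, Slice A pays back in about 5 months and Slice B in about 7.5, so the slice with the cheaper CAC is still the slower one to earn it back.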

Pass/fail thresholds to define

Pick numbers that match your business model. Don't borrow someone else's.

At minimum, define:

- Payback window: e.g., 6 months for SMB, 12 months for mid-market, 18 months for enterprise.

- Churn ceiling: e.g., logo churn under 2-3% monthly for SMB, or under 10-15% annual for mid-market.

- Expansion expectation: e.g., NRR above 100% for usage-based, or expansion within 6-9 months for seat-based.

If a cohort fails the gates, it's not the ideal customer even if sales loves the conversations.
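Gates work best when they're written down as data, so nobody can quietly move them after a good sales call. A minimal sketch, assuming the SMB example thresholds above (6-month payback, 3% monthly churn ceiling, NRR of at least 100%). The cohort numbers and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gates:
    max_payback_months: float
    max_monthly_churn: float
    min_nrr: float


def failed_gates(cohort: dict, gates: Gates) -> list:
    """Return the names of every gate the cohort fails (empty list = pass)."""
    failures = []
    if cohort["payback_months"] > gates.max_payback_months:
        failures.append("payback")
    if cohort["monthly_churn"] > gates.max_monthly_churn:
        failures.append("churn")
    if cohort["nrr"] < gates.min_nrr:
        failures.append("expansion")
    return failures


# Example SMB gates; pick numbers that match your own model.
smb_gates = Gates(max_payback_months=6, max_monthly_churn=0.03, min_nrr=1.00)

# Hypothetical cohort metrics for two ICP slices.
slice_a = {"payback_months": 5.0, "monthly_churn": 0.02, "nrr": 1.10}
slice_b = {"payback_months": 7.5, "monthly_churn": 0.08, "nrr": 0.95}

print(failed_gates(slice_a, smb_gates))
print(failed_gates(slice_b, smb_gates))
```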

Turn your ideal customer into routing rules (fit grade + intent score)

An ICP doc that doesn't change routing is just a strategy slide.

The operational version is a two-part system:

- Fit grade (how well they match your ICP)

- Intent score (how much buying behavior they're showing right now)

A usable starting heuristic is 40% fit / 40% intent-behavior / 20% economics, then you calibrate it against MQL->SQL, win rate, and 90-day retention. Treat it like a v1 model, not gospel.
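As a sketch, the 40/40/20 blend is just a weighted sum. The one assumption you have to make is that all three inputs are normalized to the same 0-100 scale first. How you normalize "economics" (payback, LTV/CAC, or something else) is up to you, and the function name here is hypothetical.

```python
WEIGHTS = {"fit": 0.40, "intent": 0.40, "economics": 0.20}


def priority(fit: float, intent: float, economics: float) -> float:
    """Blend three 0-100 inputs into one 0-100 priority using the starting weights."""
    parts = {"fit": fit, "intent": intent, "economics": economics}
    for name, value in parts.items():
        if not 0 <= value <= 100:
            raise ValueError(f"{name} must be on a 0-100 scale, got {value}")
    return round(sum(WEIGHTS[name] * value for name, value in parts.items()), 1)


print(priority(fit=90, intent=50, economics=70))
```

Keeping the weights in one dictionary makes the calibration step a one-line change you can log, rather than a formula buried in a workflow.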

One reason scoring rots over time is stale data: headcount bands drift, job titles change, and bounced emails create false "no response" signals. If your inputs are wrong, your routing is wrong, and you'll drift away from the ideal customer without noticing.

Grade (fit) vs score (intent)

RevBlack's framing is the one I wish every CRM enforced: grade is fit, score is intent.

- Grade (A-F): based on ICP match (industry, size band, tech, geo, constraints).

- Score (0-100): based on behavior and engagement (demo requests, pricing visits, high-intent content, reply signals).

That's how you get a label like A95 (perfect fit, hot) versus C25 (meh fit, lukewarm). They shouldn't route the same way.
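Here's one way to produce that label in code. It assumes fit is tallied as points and then bucketed into a letter. The point floors for each grade are hypothetical, so calibrate them against your own data.

```python
# Hypothetical floors: fit points at or above the floor earn that letter.
GRADE_FLOORS = ((15, "A"), (10, "B"), (5, "C"), (0, "D"))


def fit_grade(fit_points: int) -> str:
    """Bucket fit points into A-F; anything below zero is an F."""
    for floor, letter in GRADE_FLOORS:
        if fit_points >= floor:
            return letter
    return "F"


def label(fit_points: int, intent_score: int) -> str:
    """Combine the fit grade with the intent score, clamped to 0-100."""
    clamped = max(0, min(100, intent_score))
    return f"{fit_grade(fit_points)}{clamped}"


print(label(15, 95))
print(label(5, 25))
```

The two dimensions stay separate in the label on purpose: collapsing them into one number hides whether an account is a great fit that's gone quiet or a poor fit that's clicking on everything.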

Copy/paste scoring example

Start simple. You can run this in Marketo-style scoring or any modern automation tool.

Fit (grade inputs)

- +5 ICP industry match

- +5 employee band match

- +5 required tech present

- -10 disqualifying industry

- -10 hard tech conflict

Intent/behavior (score inputs)

- +25 demo request

- +10 high-intent asset download

- +5 pricing page visit (repeatable, cap at +15)

- -15 unsubscribe

- -10 no activity for 30 days

Decay rule

- Subtract 10 points every 14 days without new high-intent activity.

Thresholds:

- MQL: 60-100 points is a solid starting band (then calibrate).

- Route anything with disqualifiers to nurture or suppress, even if intent is high.
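If your automation tool lets you script scoring, the intent side of the example above can be sketched like this. The event names are hypothetical labels for the inputs listed, the MQL cutoff uses the bottom of the 60-100 band, and decay is applied in whole 14-day steps.

```python
INTENT_POINTS = {
    "demo_request": 25,
    "asset_download": 10,
    "pricing_visit": 5,
    "unsubscribe": -15,
    "inactive_30_days": -10,
}
PRICING_CAP = 15      # pricing visits repeat but stop counting at +15
DECAY_POINTS = 10     # subtracted per full decay window
DECAY_DAYS = 14
MQL_THRESHOLD = 60    # bottom of the starting band; calibrate later


def intent_score(events: list, days_since_high_intent: int) -> int:
    """Sum event points (pricing capped), apply decay, clamp to 0-100."""
    pricing = min(events.count("pricing_visit") * INTENT_POINTS["pricing_visit"], PRICING_CAP)
    other = sum(INTENT_POINTS.get(e, 0) for e in events if e != "pricing_visit")
    decay = (days_since_high_intent // DECAY_DAYS) * DECAY_POINTS
    return max(0, min(100, pricing + other - decay))


def is_mql(events: list, days_since_high_intent: int, disqualified: bool = False) -> bool:
    """Disqualifiers win over intent, no matter how high the score is."""
    if disqualified:
        return False
    return intent_score(events, days_since_high_intent) >= MQL_THRESHOLD


events = ["demo_request", "asset_download", "asset_download"] + ["pricing_visit"] * 4
print(intent_score(events, 0), is_mql(events, 0))
print(intent_score(events, 14), is_mql(events, 14))
print(is_mql(events, 0, disqualified=True))
```

In this example the same lead qualifies on day 0 and drops below the threshold after one decay window, which is exactly the behavior you want from stale engagement.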

Governance + calibration

Scoring without governance becomes a political argument.

Set a monthly calibration ritual:

- Backtest last quarter: grade/score vs MQL->SQL, SAL->SQL, win rate, and 90-day retention.

- Adjust weights based on outcomes, not opinions.

- Keep a change log so you can explain why conversion moved.

RevOps benchmarks commonly land around 25-35% MQL->SQL. 40-50% happens when sales and marketing are truly aligned on what "qualified" means and the ICP is tight.
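A backtest doesn't need to be elaborate. The sketch below computes MQL->SQL conversion per fit grade from a list of lead records. The field names (`grade`, `reached_mql`, `reached_sql`) and the sample data are assumptions standing in for a CRM export.

```python
from collections import Counter


def mql_to_sql_by_grade(leads: list) -> dict:
    """Return MQL->SQL conversion rate per fit grade, for grades with at least one MQL."""
    mqls, sqls = Counter(), Counter()
    for lead in leads:
        if lead["reached_mql"]:
            mqls[lead["grade"]] += 1
            if lead["reached_sql"]:
                sqls[lead["grade"]] += 1
    return {grade: sqls[grade] / mqls[grade] for grade in sorted(mqls)}


# Hypothetical export from last quarter.
leads = (
    [{"grade": "A", "reached_mql": True, "reached_sql": True}] * 2
    + [{"grade": "A", "reached_mql": True, "reached_sql": False}] * 2
    + [{"grade": "C", "reached_mql": True, "reached_sql": True}] * 1
    + [{"grade": "C", "reached_mql": True, "reached_sql": False}] * 3
    + [{"grade": "C", "reached_mql": False, "reached_sql": False}] * 5
)

print(mql_to_sql_by_grade(leads))
```

If A-grade leads don't convert meaningfully better than C-grade leads, the fit inputs aren't predicting anything yet, and that's the weight to revisit first.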

Do the research properly (interviews that don't create "fairytale personas")

Personas get a bad reputation because teams do persona theater: a workshop, a few guesses, a pretty slide deck, and zero behavior change.

The fix is interviews with recency and rigor. And one practical rule: quantify pains ("10 hours/week," "two-week security review," "$8k/month wasted") instead of adjectives ("inefficient," "slow," "frustrating"). Numbers travel through an org; vibes don't.

NN/g's guidance is refreshingly practical: 20-30 interviews uncover ~90-95% of needs, saturation often shows up around ~12, and you should start with 5-6 and analyze as you go.

How many interviews you need (and why)

Use this rule:

- Start with 6 (3 wins, 3 churned/lost).

- If themes are starting to repeat, go to 12 to confirm saturation.

- If your market is diverse (multiple industries, multiple buyer types), go to 20-30.

For JTBD-style interviews, Ideafloat's recency guidance matters: talk to people who bought (or churned) in the last 3-6 months. Memory decays fast, and "why we bought" turns into a story people tell themselves.

10-question JTBD interview script

Use this script and don't freestyle it into feature requests.

JTBD interview questions (copy/paste):

- What was happening in your world that made you start looking?

- What was the trigger event? Why then, not earlier?

- What did you try first (internal workaround, competitor, doing nothing)?

- What frustrated you about the old way?

- Who else got involved, and when?

- What were you most worried about going wrong?

- What alternatives did you seriously consider?

- What was the tipping point that made you choose a direction?

- How did you define success in the first 30-90 days?

- What would make you switch away (or what made you churn)?

I've seen one good churn interview rewrite an ICP more than a month of dashboard staring.

JTBD statement template

UserInterviews' template is the cleanest deliverable:

When [circumstance], I want to [job], so I can [outcome], without [pain point].

Example: When we add 10 new reps in a quarter, I want to standardize outbound data and routing, so I can hit pipeline targets, without burning domains on bounced emails.

Build your first target list from your ICP (practical workflow)

Your ICP isn't real until you can pull a list from it, and until it reflects your ideal customer in a way your team can execute.

HubSpot's workflow is boring, and that's why it works. It's a two-step sourcing loop:

- Step A (companies): use Crunchbase to sort by funding series and growth stage.

- Step B (qualification): use a prospecting tool to qualify accounts with filters like company size, industry, hiring activity, and technology mentions in job postings, then build your contact list by role/seniority and buying-group coverage.

And yes, verify before outreach. Deliverability is fragile, and nothing nukes it faster than blasting unverified emails.

Step 1: Translate ICP into filters

Turn your ICP doc into filterable fields:

- Industry + sub-vertical

- Employee band (tight, not "100-700")

- Geography + compliance constraints

- Funding/growth stage (if relevant)

- Technographics (must-have tools)

- Triggers (job changes, headcount growth, hiring signals)

- Constraints (data residency, security baseline)

If you can't express it as filters, it's not an ICP yet. It's a narrative.

Step 2: Pull accounts + contacts

Pull accounts first, then map contacts across the buying group.

Don't stop at one title. For most B2B deals, you want at least:

- A likely champion/user (day-to-day pain)

- An ops/process owner (implementation reality)

- A technical evaluator (security/integrations)

- A financial approver (budget)

- An exec sponsor (priority and timing)

This is where most outbound programs quietly fail: they've "got accounts" but only one thread per account.
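A quick coverage check catches single-threaded accounts before a sequence goes out. This sketch assumes each contact has already been tagged with one of the five roles above. The role keys, field names, and sample contacts are hypothetical.

```python
REQUIRED_ROLES = {
    "champion",
    "ops_owner",
    "technical_evaluator",
    "financial_approver",
    "exec_sponsor",
}


def coverage_gaps(contacts: list) -> dict:
    """Map each account to the sorted list of required roles with no contact yet."""
    covered = {}
    for contact in contacts:
        covered.setdefault(contact["account"], set()).add(contact["role"])
    return {account: sorted(REQUIRED_ROLES - roles) for account, roles in covered.items()}


# Hypothetical contact list pulled for two accounts.
contacts = [
    {"account": "acme", "role": "champion"},
    {"account": "acme", "role": "financial_approver"},
    {"account": "globex", "role": "champion"},
]

for account, missing in coverage_gaps(contacts).items():
    print(account, "is missing:", missing)
```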

Step 3: Verify + enrich before you send

We've tested a lot of data tools over the years, and I'm opinionated about one thing: if the data isn't fresh and verified, you're not "doing outbound." You're burning domain reputation and teaching your CRM the wrong lessons.

Prospeo is "The B2B data platform built for accuracy" and it's built for this exact workflow: list-building plus real-time verification, on a 7-day refresh cycle (the industry average is 6 weeks). You get 300M+ professional profiles, 143M+ verified emails, and 125M+ verified mobile numbers, and the email verification runs at 98% accuracy.

Prospeo's verification is built for deliverability: proprietary email-finding infrastructure plus a 5-step verification process with catch-all handling, spam-trap removal, and honeypot filtering. That's how bounce rates stay low, and it's how you avoid the classic mess where "no response" is really "never delivered." If you want to compare tooling, start by comparing email verifiers and settling on a repeatable workflow for verifying an address before you send.

Common mistakes (and how to avoid persona theater)

You're on the right track if:

- You tie ICP to retention, NRR/GRR, and CAC payback, not just lead volume.

- You write disqualifiers first, then build routing rules around them.

- You map the buying group and build multi-thread lists per account.

You're doing persona theater if you fall into any of these:

- Confirmation bias trap: you decide the ICP in a leadership meeting, then cherry-pick data to "prove" it.

- Data swamp trap: you collect every field imaginable, but ops can't route it, sales can't work it, and marketing can't target it.

- You build B2B personas like B2C demographics. Titles don't explain procurement, security reviews, or internal politics.

- You keep broad ICP ranges because narrowing feels scary. Broad ranges dilute messaging, break routing, and flood sales with "maybe" accounts.

I'm going to say the quiet part out loud: persona decks that don't change routing are a waste of time.

If your ICP doesn't change who gets called tomorrow morning, it's dust.

Next steps (do this in order)

Write ICP v1 (with disqualifiers) and force it into CRM fields so everyone can execute on your ideal customer, not just talk about it.

Then run 12 interviews to capture triggers and "switch language," and validate with cohort slices against payback, churn, and NRR/GRR. Only after you've found the profitable slice should you operationalize it with fit grade + intent score + decay tied to lifecycle stages (MQL -> SAL -> SQL -> opportunity). Targeting without validation is just expensive guessing.

FAQ

What's the difference between an ideal customer, ICP, and buyer persona?

An ideal customer describes who you want more of; in B2C it's usually a person, and in B2B it's usually expressed as an ICP. An ICP defines which companies qualify to buy and succeed, while a buyer persona describes the people inside those companies and how to message, sell, and support them.

How do I know my ideal customer is profitable?

Your ideal customer is profitable when cohorts that match your definition show strong retention, acceptable CAC payback, and healthy expansion compared to other cohorts. Set pass/fail gates (payback window, churn ceiling, expansion expectation) and update your ICP until the "ideal" cohort consistently clears them.

How many customer interviews do I need to define an ICP?

You typically need 12 interviews to reach early saturation, and 20-30 interviews to uncover about 90-95% of needs in a broader market. Start with 5-6 (wins and churned/lost), synthesize themes, then add interviews until new conversations stop changing your ICP fields and disqualifiers.

What's a good starting MQL score threshold?

A good starting MQL threshold is 60-100 points, as long as you combine fit (grade) and intent (score) and include negative scoring plus decay. Then backtest: if MQLs don't convert to SQL at roughly 25-35% (or 40-50% in high alignment), recalibrate weights and events.

How do I turn an ICP into a verified outreach list?

Turn ICP fields into filters, pull accounts first, add contacts across the buying group, then verify emails before sending to protect deliverability. Prospeo lets you filter and export only verified contacts with 98% email accuracy and a 7-day refresh cycle, so you can reach the ideal customer you actually want, not just whoever happens to be in your CRM.
