Lead Temperature Scoring in 2026: The Complete Operating Model

Lead temperature scoring is how you stop arguing about "priority" and start working the right leads first - fast. The goal isn't a prettier dashboard. It's a system that tells your team exactly who to call, what to do next, and how quickly it has to happen.

Here's the hook: temperature only works if it changes behavior.

Most teams fail in two predictable ways: they build a scoring model that's too complex to run, or they build a simple one that's too vague to trust. Either way, reps revert to gut feel and "whoever replied last."

What you need (quick version)

- Split scoring into Fit (0-50) + Engagement (0-50) so "busy but wrong" doesn't outrank "perfect but quiet."

- Use a 100-point rubric with 5-7 criteria to start; add complexity only after you've audited outcomes.

- Set MQL at 50-75/100 and tune it so it captures the top ~20% of leads by score.

- Apply -25% monthly decay so stale activity doesn't beat fresh intent.

- Set a hot-lead SLA in minutes, not hours (routing + first touch) - see speed-to-lead.

- Audit monthly, recalibrate quarterly, and do a lightweight weekly spot-check.

What it is (and what it isn't)

Lead temperature is an operating label driven by score + recency. That's the whole game.

"Scoring" answers: How valuable is this lead to us? "Recency" answers: How urgent is it to act right now?

Combine them and temperature becomes a routing signal: who gets worked first, who gets nurtured, who gets suppressed, and who gets recycled. In other words, it's your system for warm prospect prioritization - done with explicit rules instead of rep-by-rep intuition.

What it isn't: a philosophical definition of "interest," or a vanity metric for marketing dashboards. If your temperature label doesn't change what happens next (lead routing, lead assignment, SLA, sequence, meeting flow), it's decoration.

I've seen teams ship "Hot/Warm/Cold" fields that look great in a QBR and do absolutely nothing in the day-to-day because nobody agreed on the recency window, the handoff rules, or what "Hot" triggers in the CRM. Everyone nods, nobody acts, and the same leads get cherry-picked while the real buyers cool off.

Temperature should be explicit: score thresholds + a recency rule. No vibes.

A common mistake is wording like "active for more than 30 days," which is operationally confusing because most teams mean "active within the last 30 days." That ambiguity is exactly how you end up with "hot" leads that haven't done anything in weeks.

Here's the version you should actually implement:

- Hot = score >= 75 AND activity within the last 14 days

- Cold = score < 25 OR no activity within the last 30 days
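To keep "within" unambiguous in automation, here's a minimal Python sketch of just those two rules. It assumes you store the date of the lead's most recent tracked activity and treats the windows as inclusive (exactly 14 days ago still counts). The function names are hypothetical. Leads that are neither Hot nor Cold fall through to the fuller threshold table.

```python
from datetime import date, timedelta
from typing import Optional


def active_within(days: int, last_activity: Optional[date], today: date) -> bool:
    """True if the most recent activity falls inside the last `days` days (inclusive)."""
    if last_activity is None:
        return False  # never active counts as "no activity"
    return today - last_activity <= timedelta(days=days)


def hot_or_cold(score: int, last_activity: Optional[date], today: date) -> str:
    """Apply only the two explicit rules; everything else is left for other states."""
    if score >= 75 and active_within(14, last_activity, today):
        return "Hot"
    if score < 25 or not active_within(30, last_activity, today):
        return "Cold"
    return "Other"


today = date(2026, 3, 1)
print(hot_or_cold(80, date(2026, 2, 20), today))  # Hot (active 9 days ago)
print(hot_or_cold(80, date(2026, 1, 15), today))  # Cold (last active 45 days ago)
```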

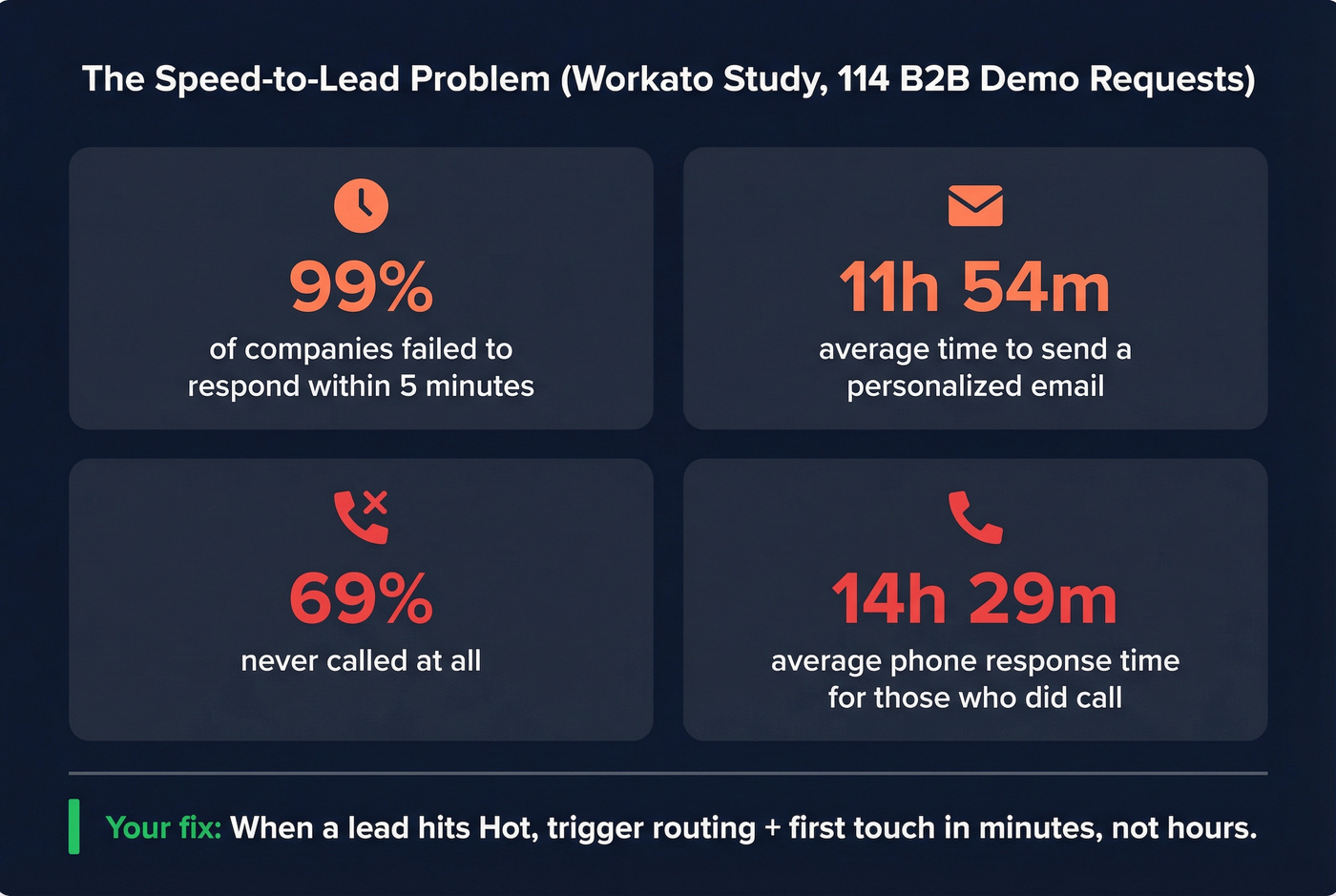

Why this exists: speed-to-lead is the real ROI

This isn't about being clever. It's about being fast.

Workato ran a field test across 114 B2B demo requests and found that over 99% of companies didn't respond within 5 minutes; the average time to a personalized email was 11h 54m, only 31% called at all, and the average phone response was 14h 29m.

That's the punchline: most companies treat "hot" like it's a label, not a clock.

Your scoring model can be perfect and still fail if routing and outreach are slow. A lead that hits "hot" at 10:02am and gets touched at 4:30pm is basically a warm lead you paid extra to generate.

The biggest lift usually comes from two boring changes:

- Instant lead routing (owner + channel + sequence)

- A real SLA that's enforced (not "same day")

If you do nothing else, do this: when a lead crosses your hot threshold, trigger a workflow that creates a task, enrolls them in a short "hot" sequence, and pings the owner in the tool they actually live in.

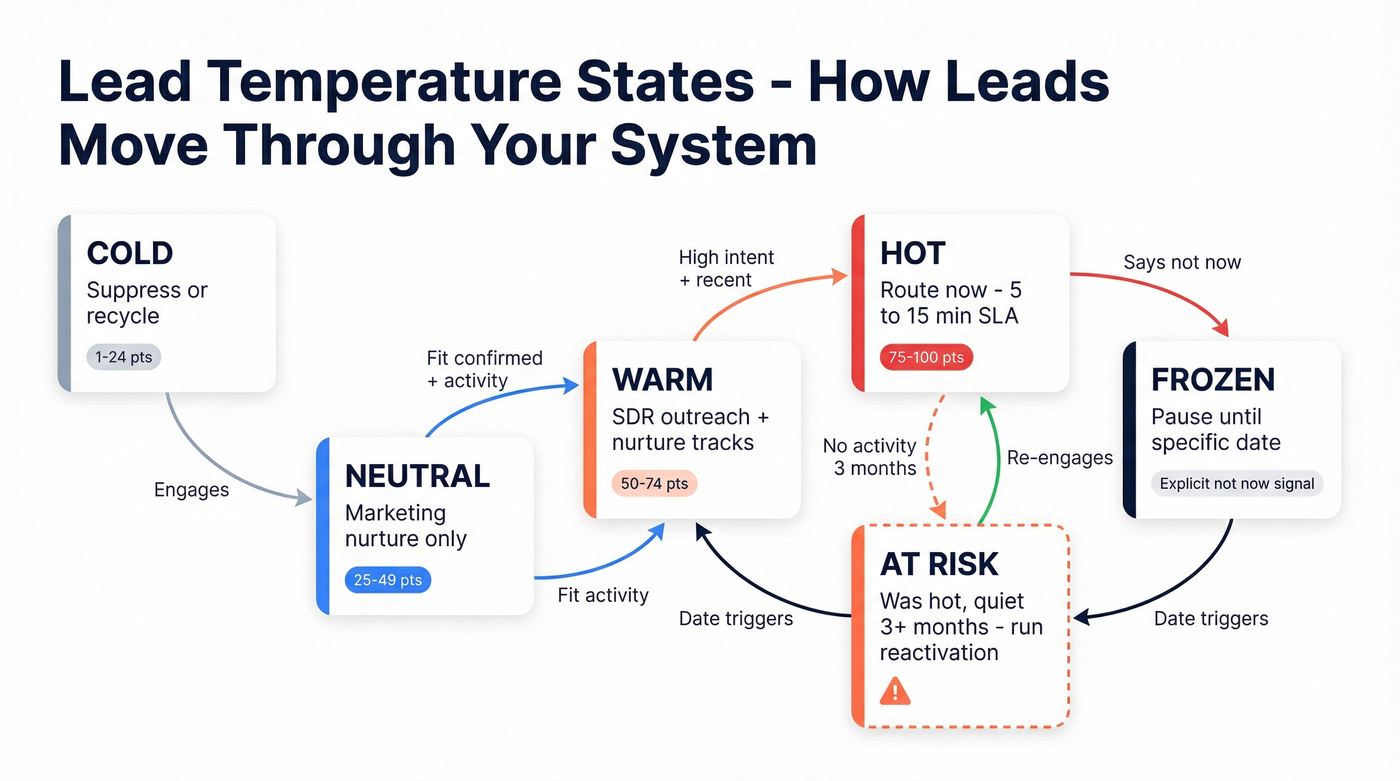

Temperature states that actually work (not just cold/warm/hot)

Cold/warm/hot is fine for a slide deck. It's not enough for operations.

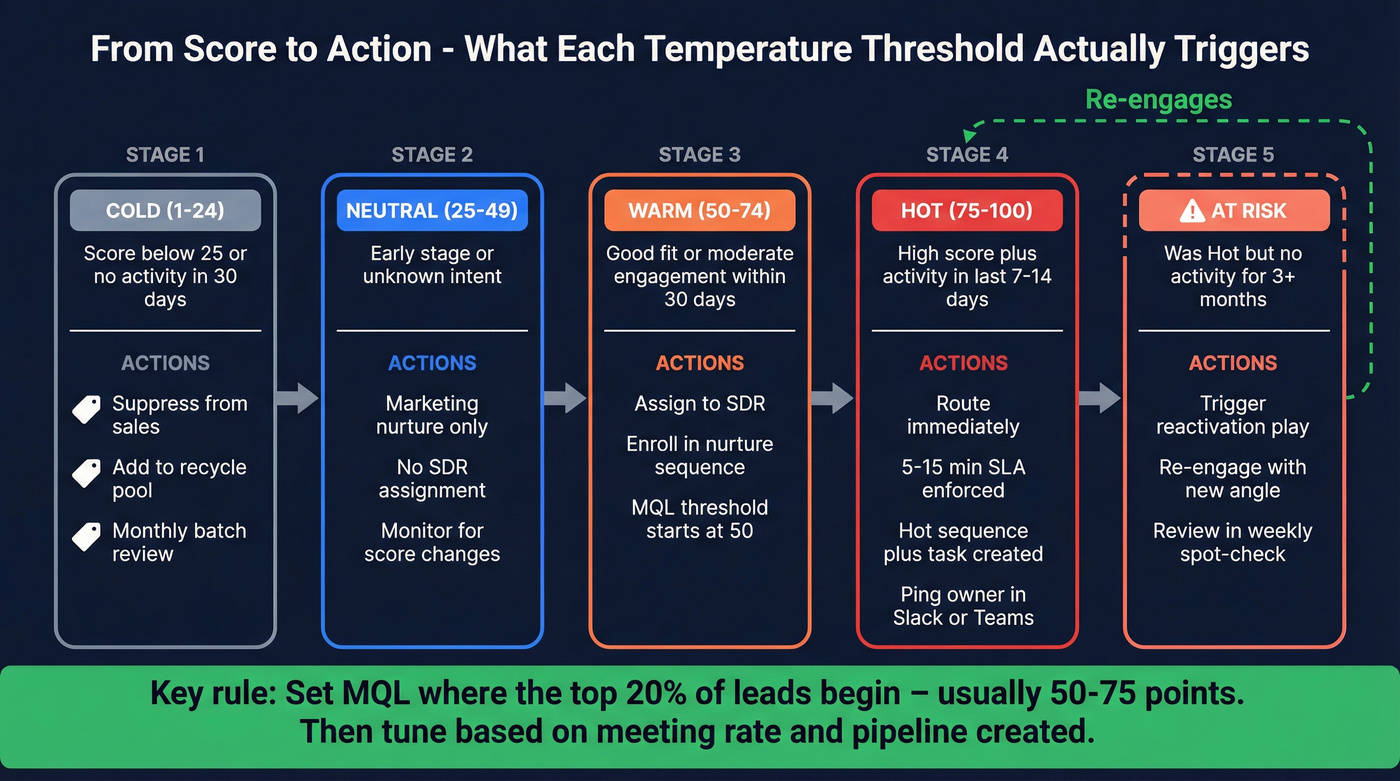

SalesWings uses a more usable set of states: Hot, Warm, Neutral, Cold, Frozen, plus Leads at Risk (previously hot, but >3 months inactivity). That "At Risk" bucket is the one most teams are missing - it's how you stop forgetting accounts that were almost ready.

Here's a practical state model that works in real CRMs:

| State | Meaning | Use this if | Skip this if |
|---|---|---|---|
| Hot | High score + recent intent | You can respond fast | You can't enforce SLAs |
| Warm | Good fit or moderate intent | You run nurture tracks + SDR | You only do inbound |
| Neutral | Unknown / early | You need triage | You refuse "maybe" buckets |
| Cold | Low score or stale | You suppress + recycle | You have tiny lead volume |
| Frozen | Explicitly not now | You respect timing | You never re-engage |
| Leads at Risk | Was hot, now quiet >3 mo | You run reactivation plays | You don't track recency |

Use-skip guidance (the part most teams don't write down):

- Use Neutral when you have lots of early-stage leads and need a holding pen that doesn't trigger sales.

- Use Frozen when you have a real "not now" signal (budget cycle, contract renewal date, "reach out in Q4").

- Use Leads at Risk when you're doing ABM or multi-threading and don't want "almost-ready" accounts to disappear.

If you're a small team, collapse this to 4 states: Hot / Warm / Cold / At Risk. That's still better than pretending everything is a linear funnel.

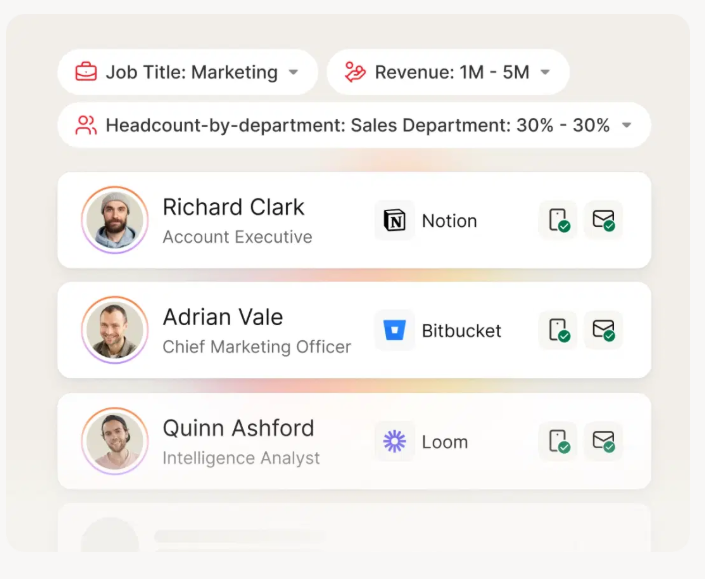

A hot lead scored at 85 is worthless if the email bounces. Prospeo's 98% email accuracy and 125M+ direct dials mean your speed-to-lead SLA actually connects reps to real buyers - not dead inboxes.

Stop scoring leads you can't actually reach. Start with verified data.

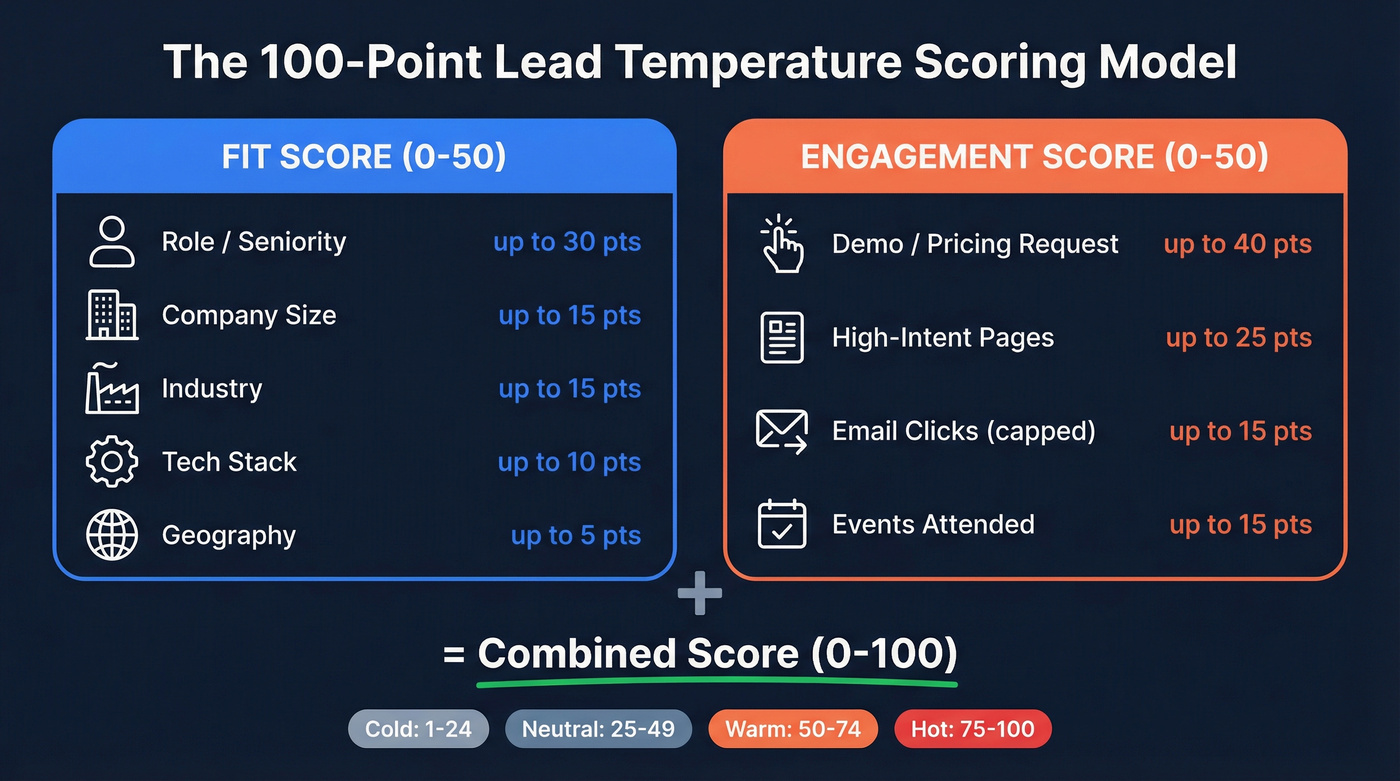

The 100-point template (copy/paste)

This is the model we'd start with for most B2B teams. It's simple enough to explain to reps, but structured enough to avoid the classic failure modes (job seekers, click-happy interns, and stale "hot" leads).

monday.com recommends starting with 5-7 criteria, setting MQL around 50-75/100, tuning it so it captures the top ~20% of leads, and applying -25% monthly decay to keep recency honest. They also share concrete scoring examples like C-level decision maker +30, demo request +40, email unsubscribe -25, competitor employee -50, and personal email -15.

Fit score (0-50)

Fit is "should we ever talk to them?" It's mostly static.

The trick is to cap each group so one attribute doesn't dominate.

| Fit group | Criteria | Points | Group cap |
|---|---|---|---|
| Role/seniority | C-level / VP / Director / IC | +30 / +20 / +12 / +5 | 30 |
| Company size | ICP range / adjacent / wrong | +15 / +5 / -20 | 15 |
| Industry | Target / adjacent / excluded | +15 / +5 / -25 | 15 |
| Tech stack | Uses key tech / competitor tech | +10 / -20 | 10 |
| Geography | In-territory / out-of-territory | +5 / -10 | 5 |

Rules we'd enforce:

- If you sell to a narrow ICP, make industry + company size do real work. Don't be afraid of negative points.

- If you sell globally, geography shouldn't matter much. If you don't, geography should matter a lot.

- Keep Fit stable. Don't mix "visited pricing page" into Fit. That's Engagement.
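Here's a minimal Python sketch of the Fit math. It assumes each lead arrives with a list of matched values per group. Value names like "icp" or "key_tech" are hypothetical labels, not CRM fields. The sketch caps each group at its limit and clamps the total to the 0-50 Fit range. Flooring at zero is an assumption based on that range.

```python
# Fit points and group caps from the table above; value keys are hypothetical labels.
FIT_POINTS = {
    "role_seniority": {"c_level": 30, "vp": 20, "director": 12, "ic": 5},
    "company_size": {"icp": 15, "adjacent": 5, "wrong": -20},
    "industry": {"target": 15, "adjacent": 5, "excluded": -25},
    "tech_stack": {"key_tech": 10, "competitor_tech": -20},
    "geography": {"in_territory": 5, "out_of_territory": -10},
}
FIT_CAPS = {"role_seniority": 30, "company_size": 15, "industry": 15,
            "tech_stack": 10, "geography": 5}
FIT_MAX = 50


def fit_score(attributes: dict[str, list[str]]) -> int:
    """Sum points per group, cap each group, then clamp the total to 0-50."""
    total = 0
    for group, values in attributes.items():
        group_points = sum(FIT_POINTS[group].get(v, 0) for v in values)
        total += min(group_points, FIT_CAPS[group])  # caps limit upside; negatives pass through
    return max(0, min(total, FIT_MAX))


lead_a = {"role_seniority": ["director"], "company_size": ["icp"], "industry": ["target"]}
print(fit_score(lead_a))  # 42
```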

Engagement score (0-50)

Engagement is "are they showing buying intent right now?"

This is where teams accidentally create spammy models by overweighting low-signal actions (email opens, generic pageviews). Use frequency caps.

| Engagement group | Criteria | Points | Group cap |
|---|---|---|---|
| Pricing/demo intent | Demo request | +40 | 40 |
| Pricing/demo intent | Pricing request / contact sales | +30 | 40 |
| High-intent pages | Pricing page view | +15 | 25 |
| High-intent pages | Integration/security page | +10 | 25 |
| Email engagement | Click | +5 (cap 3) | 15 |
| Events | Webinar attended | +15 | 15 |
| Events | Webinar registered | +7 | 15 |

Guardrails that keep this sane:

- Email clicks are useful, but they're not "hot" by themselves. Cap them.

- "High-intent pages" should be a curated list. If you include your blog, you'll inflate noise.

- If you're product-led, add "activated key feature" as a high-intent event.
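The same pattern works for Engagement. This sketch assumes events arrive as a list of names, using hypothetical identifiers. Email clicks are capped at 3 as in the table. Scoring one-off events like a demo request only once is an added assumption. Anything not in the rules, such as email opens or blog views, scores zero.

```python
from collections import Counter

# event -> (engagement group, points per event, max times it can score or None)
ENGAGEMENT_RULES = {
    "demo_request": ("pricing_demo_intent", 40, 1),
    "contact_sales": ("pricing_demo_intent", 30, 1),
    "pricing_page_view": ("high_intent_pages", 15, None),
    "integration_security_view": ("high_intent_pages", 10, None),
    "email_click": ("email_engagement", 5, 3),
    "webinar_attended": ("events", 15, 1),
    "webinar_registered": ("events", 7, 1),
}
GROUP_CAPS = {"pricing_demo_intent": 40, "high_intent_pages": 25,
              "email_engagement": 15, "events": 15}
ENGAGEMENT_MAX = 50


def engagement_score(events: list[str]) -> int:
    """Score event names with frequency caps, group caps, and a 50-point ceiling."""
    counts = Counter(e for e in events if e in ENGAGEMENT_RULES)  # unlisted events score 0
    group_totals: Counter = Counter()
    for event, n in counts.items():
        group, points, freq_cap = ENGAGEMENT_RULES[event]
        if freq_cap is not None:
            n = min(n, freq_cap)
        group_totals[group] += points * n
    total = sum(min(pts, GROUP_CAPS[g]) for g, pts in group_totals.items())
    return min(total, ENGAGEMENT_MAX)


print(engagement_score(["email_click"] * 10))       # 15: clicks stop counting after 3
print(engagement_score(["pricing_page_view"] * 4))  # 25: group cap
```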

Common weighting mistakes (don't do these)

A scoring model can be mathematically consistent and still be operationally dumb. Avoid these mistakes and you'll save yourself a quarter of cleanup.

Do not score email opens.

Privacy changes and bot opens make it junk.

Other common mistakes:

- Don't score generic pageviews. Score specific intent pages (pricing, integrations, security, migration).

- Don't let repeat visits stack forever. Cap frequency-based actions or you'll create "hot lurkers" who never convert.

- Don't let one soft event create Hot. Webinar registration isn't a buying signal. A meeting booked is.

- Don't ignore negatives. If you don't penalize job seekers and competitors, reps won't trust the model.

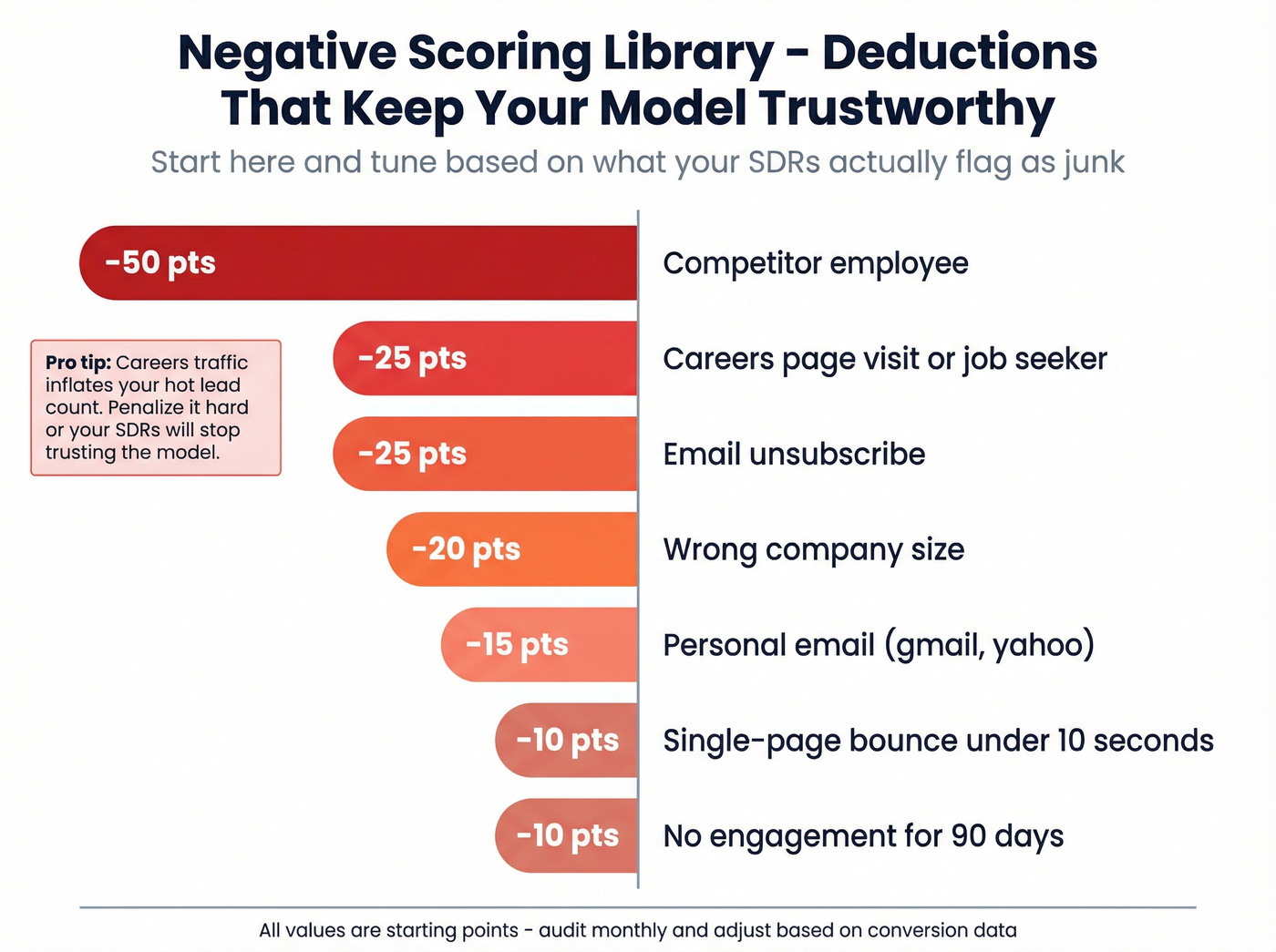

Negative scoring library

Negative scoring is where temperature scoring becomes trustworthy. Reps don't trust models that keep surfacing junk.

Start with this library and tune from there:

| Negative signal | When it triggers | Points |
|---|---|---|
| Competitor | Email domain or company match | -50 |
| Unsubscribe | Marketing unsubscribe | -25 |
| Personal email | gmail/yahoo/etc. | -15 |
| Careers/job seeker behavior | Visits careers page / job apply | -25 |
| Wrong company size | Outside ICP band | -20 |
| Single-page bounce | 1 page, <10s | -10 |
| No engagement 90 days | No tracked activity | -10 |

One pattern that shows up everywhere: careers traffic makes "hot" look great on dashboards and terrible in SDR reality. Penalize it hard.
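Here's a minimal sketch of applying the library. It assumes penalties subtract from the combined score and that the result floors at zero. `negative_flags`, the `rival.example` domain, and the two-entry personal-domain list are hypothetical placeholders. The other flags (bounce, 90-day silence, company size) would come from your own tracking.

```python
NEGATIVE_POINTS = {
    "competitor": -50,
    "unsubscribe": -25,
    "personal_email": -15,
    "job_seeker": -25,
    "wrong_company_size": -20,
    "single_page_bounce": -10,
    "no_engagement_90d": -10,
}
PERSONAL_DOMAINS = {"gmail.com", "yahoo.com"}  # placeholder; extend with your own list


def negative_flags(email: str, competitor_domains: set[str],
                   visited_careers: bool = False, unsubscribed: bool = False) -> set[str]:
    """Derive a few flags from the email domain and tracked behavior."""
    domain = email.rsplit("@", 1)[-1].lower()
    flags = set()
    if domain in competitor_domains:
        flags.add("competitor")
    if domain in PERSONAL_DOMAINS:
        flags.add("personal_email")
    if visited_careers:
        flags.add("job_seeker")
    if unsubscribed:
        flags.add("unsubscribe")
    return flags


def apply_negatives(combined: int, flags: set[str]) -> int:
    """Subtract every triggered penalty and floor the result at zero."""
    return max(0, combined + sum(NEGATIVE_POINTS[f] for f in flags))


flags = negative_flags("jane@gmail.com", {"rival.example"}, visited_careers=True)
print(apply_negatives(60, flags))  # 20
```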

Temperature thresholds

You need two mappings:

- Temperature labels (Cold/Warm/Hot/etc.)

- Funnel thresholds (MQL/SAL/SQL)

A clean starting point:

| Label | Combined score | Recency rule | Operational meaning |
|---|---|---|---|
| Hot | 75-100 | activity in 7-14 days | route now |
| Warm | 50-74 | activity in 30 days | SDR + nurture tracks |
| Neutral | 25-49 | any | marketing nurture |
| Cold | 0-24 | any | suppress / recycle |
| Frozen | any | explicit "not now" | pause until date |
| At Risk | was Hot | no activity >3 mo | reactivation play |
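Here's one way to turn the table into code, as a hedged Python sketch. It assumes you track three things: the last activity date, whether the lead was ever Hot, and an optional "not now" date. All three are hypothetical field names. The sketch treats ">3 months" as 90 days. It applies the explicit Cold rule from earlier (under 25, or no activity within 30 days) before Warm and Neutral. Under this reading, a lead scoring 75+ whose last activity was 15-30 days ago lands in Warm.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Lead:
    fit: int
    engagement: int
    last_activity: Optional[date]
    was_hot: bool = False
    not_now_until: Optional[date] = None  # e.g. "reach out in Q4"


def temperature(lead: Lead, today: date) -> str:
    """Map score + recency to a temperature state; earlier checks win."""
    if lead.not_now_until is not None and today < lead.not_now_until:
        return "Frozen"
    idle = None if lead.last_activity is None else (today - lead.last_activity).days
    if lead.was_hot and (idle is None or idle > 90):
        return "At Risk"
    score = lead.fit + lead.engagement
    if score < 25 or idle is None or idle > 30:
        return "Cold"
    if score >= 75 and idle <= 14:
        return "Hot"
    if score >= 50:
        return "Warm"
    return "Neutral"


today = date(2026, 6, 1)
print(temperature(Lead(42, 50, date(2026, 5, 28)), today))  # Hot
print(temperature(Lead(40, 12, date(2026, 4, 1)), today))   # Cold: 61 days idle
```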

Now the MQL method that works: set your MQL threshold so it captures the top ~20% of leads by score. That usually lands at 50-75 points on a 100-point model.

Pull the last 60-90 days of leads, sort by score, find the score where the top 20% begins, and start there. Then adjust based on meeting rate and pipeline creation.
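That percentile step takes a few lines of Python. This sketch assumes you've exported the window's combined scores into a list. Leads tied at the cutoff are all included, so the captured share can run slightly above 20%. The sample scores are made up.

```python
def mql_threshold(scores: list[int], top_percent: int = 20) -> int:
    """Return the score where the top `top_percent`% of leads begins."""
    if not scores:
        raise ValueError("need at least one scored lead")
    ranked = sorted(scores, reverse=True)
    k = max(1, (len(ranked) * top_percent + 99) // 100)  # ceiling, integer-only
    return ranked[k - 1]


last_90_days = [80, 72, 64, 58, 51, 44, 37, 30, 21, 10]
threshold = mql_threshold(last_90_days)
share = sum(s >= threshold for s in last_90_days) / len(last_90_days)
print(threshold, share)  # 72 0.2
```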

Sanity-check heuristic: monday.com suggests 15-25% can be achievable for "qualified leads -> closed deals" when follow-up is tight. Use that as a target range to test against your baseline, not a universal benchmark.

Score caps & guardrails

This is where most models either become gameable or become useless.

Use these guardrails:

- Cap each group (role, firmographics, intent, email engagement).

- Cap frequency-based actions (clicks, pageviews).

- Require both Fit and Engagement for Hot (or you'll chase noisy intent from bad-fit accounts).

- Don't let one event create instant Hot unless it's truly decisive (demo request is; webinar registration isn't).
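Here's a quick check on the "require both" guardrail. It assumes the 50/50 split and the 75-point Hot threshold used in this template, with F for Fit and E for Engagement:

$$F \le 50,\quad E \le 50,\quad F + E \ge 75 \;\Longrightarrow\; F \ge 75 - E \ge 75 - 50 = 25 \quad\text{and}\quad E \ge 75 - F \ge 75 - 50 = 25$$

With these caps, a Hot lead always carries at least 25 points of each. If you want a stricter rule, add it as an explicit condition instead of relying on the combined threshold. For example, you could require A-band Fit (38+).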

Here's a simple "temperature scoring operating system" table you can hand to marketing + sales leadership:

| Fit signals | Engagement signals | Negative signals | Recency rule | Owner action | SLA |
|---|---|---|---|---|---|
| Role, size, industry | Demo/pricing, intent pages | Competitor, careers | -25%/mo | Route to SDR | 5-15 min |
| ICP match | Email clicks (capped) | Unsub, personal | 30-day window | Nurture | 24-72 hrs |
| Adjacent fit | Webinar attended | No activity 90d | At Risk >3 mo | Reactivate | 3-5 days |

Example walkthrough (so you can sanity-check your model)

Lead A (inbound demo request):

- Fit: Director at ICP company size (+12 +15) + target industry (+15) = 42 Fit

- Engagement: demo request (+40) + pricing page (+15) = 55, capped at the 50-point Engagement limit = 50 Engagement

- Combined: 92 -> Hot

- Recency: demo request is "fresh" for 7-14 days, so they stay Hot unless they go dark.

- Action: route + call + 1:1 email inside 5-15 minutes.

Lead B (webinar registrant who never shows):

- Fit: VP at adjacent industry (+20 +5) + ICP size (+15) = 40 Fit

- Engagement: webinar registered (+7) + 1 email click (+5) = 12 Engagement

- Combined: 52 -> Warm

- Recency: if they don't attend or hit intent pages, decay pushes them back toward Neutral quickly.

- Action: SDR sequence within 24 hours, not a "drop everything" interrupt.
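To re-check the walkthrough arithmetic, here's a small Python sketch. It applies the 50-point ceiling to each side. As an assumption, it compounds the -25% monthly decay and applies it to Engagement only. The score-only `label` function ignores recency windows for brevity.

```python
def combined_score(fit_points: list[int], engagement_points: list[int],
                   months_idle: int = 0, monthly_decay: float = 0.25) -> float:
    """Fit and Engagement each capped at 50; Engagement decays per idle month."""
    fit = min(sum(fit_points), 50)
    engagement = min(sum(engagement_points), 50) * (1 - monthly_decay) ** months_idle
    return fit + engagement


def label(score: float) -> str:
    """Score-only bands from the threshold table (recency ignored here)."""
    if score >= 75:
        return "Hot"
    if score >= 50:
        return "Warm"
    if score >= 25:
        return "Neutral"
    return "Cold"


lead_a = combined_score([12, 15, 15], [40, 15])                           # 42 + 50
lead_b = combined_score([20, 5, 15], [7, 5])                              # 40 + 12
lead_b_next_month = combined_score([20, 5, 15], [7, 5], months_idle=1)  # 40 + 9
print(lead_a, label(lead_a))                                   # 92.0 Hot
print(lead_b, label(lead_b))                                   # 52.0 Warm
print(lead_b_next_month, label(lead_b_next_month))             # 49.0 Neutral
```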

Recency rules: time frame vs decay (and how to choose)

Recency is where this becomes "temperature" instead of "a score that never dies."

You've got two clean options:

- Time frame window (criterion-level): points count only if the event happened within X days (7/14/30). After that, they drop to zero.

- Decay (group-level): the whole group's points reduce by a percentage over time (like -25% per month).

HubSpot has a constraint you need to design around: time frame (criterion-level) and decay (group-level) can't coexist in the same group. So you can't have "pricing page view in last 14 days" and also "engagement decays 25% monthly" inside one Engagement group. Pick one approach per group.
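The two mechanics behave differently, and that's easiest to see side by side. This is a hedged Python sketch, not HubSpot's implementation. The window is treated as inclusive. Decay is assumed to compound, so each month removes 25% of the points that remain.

```python
from datetime import date


def time_frame_points(points: float, event_day: date, today: date, window_days: int) -> float:
    """Criterion-level time frame: full points inside the window, zero once it passes."""
    return points if (today - event_day).days <= window_days else 0.0


def decayed_group_points(group_total: float, months_elapsed: int,
                         monthly_rate: float = 0.25) -> float:
    """Group-level decay: the whole group's points shrink by monthly_rate each month."""
    return group_total * (1 - monthly_rate) ** months_elapsed


print(time_frame_points(15, date(2026, 3, 1), date(2026, 3, 10), 14))  # 15 (9 days old)
print(time_frame_points(15, date(2026, 3, 1), date(2026, 3, 20), 14))  # 0.0 (19 days old)
print([decayed_group_points(40, m) for m in range(4)])  # [40.0, 30.0, 22.5, 16.875]
```

The window produces a cliff and decay produces a slope. That difference is why each one suits a different kind of signal.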

Mini decision tree (pick fast, don't debate for weeks)

Is the signal "now or never"? (demo request, meeting booked, pricing request) -> Use a time frame (7-14 days).

Is the signal "soft but cumulative"? (email clicks, repeated visits, content engagement) -> Use decay (monthly is easiest to run).

Do you need reps to understand it instantly? -> Use time frames for your top 2-3 intent criteria and decay for everything else.

Two concrete recency examples

Example 1: Inbound demo request (binary freshness)

Rule: +40 for demo request within 14 days

Why: after two weeks, the buying moment is usually gone or has moved channels.

Operational effect: keeps Hot truly urgent and protects first response time.

Example 2: Webinar registrant (gradual fade)

Rule: +7 for registration, then decay Engagement by -25% monthly

Why: webinars create interest, not urgency. You want a smooth fade, not a cliff.

Operational effect: prevents "warm forever" leads that clog SDR queues.

Default recommendation: start with -25% monthly decay on Engagement. It's simple, it keeps stale leads from staying hot forever, and it's easy to explain.
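Here's what -25% monthly decay does over time. This assumes it compounds, so each month removes 25% of the points that remain. E_0 is the Engagement score at the last activity and t is months since:

$$E_t = E_0\,(1 - 0.25)^{t} = E_0\,(0.75)^{t}, \qquad t_{1/2} = \frac{\ln 2}{\ln(4/3)} \approx 2.4\ \text{months}$$

A 40-point Engagement score falls to 30, 22.5, and about 16.9 over the next three quiet months. Your tool might instead subtract 25% of the original points each month, in which case the group reaches zero after four months. Check which behavior your platform uses before you set thresholds.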

Map temperature to MQL -> SAL -> SQL (and set SLAs reps will follow)

Temperature is useless unless it changes handoffs and SLAs.

Definitions (keep them crisp):

- MQL (Marketing Qualified Lead): meets your scoring threshold and is worth sales attention.

- SAL (Sales Accepted Lead): sales reviewed it and committed to a follow-up motion.

- SQL (Sales Qualified Lead): sales engaged and confirmed it's a real opportunity.

Benchmarks give you sanity checks:

- Lead -> MQL: 20-40%

- MQL -> SAL: 70-90%

- SAL -> SQL: 30-50%

- SQL -> Customer: 20-30%

If your MQL -> SAL is 30%, your MQL threshold's too low or your lead assignment's sloppy. If your SAL -> SQL is 10%, your SAL definition's too generous or reps are "accepting" without real work.
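To run that sanity check on your own numbers, here's a small Python sketch. It computes each stage conversion and flags it against the benchmark ranges above. The stage counts in the example are made up for illustration.

```python
# Benchmark ranges from the list above: (low, high) as fractions.
BENCHMARKS = {
    "lead_to_mql": (0.20, 0.40),
    "mql_to_sal": (0.70, 0.90),
    "sal_to_sql": (0.30, 0.50),
    "sql_to_customer": (0.20, 0.30),
}


def funnel_report(leads: int, mqls: int, sals: int, sqls: int, customers: int) -> dict:
    """Conversion rate per stage plus 'low' / 'ok' / 'high' against the benchmark."""
    counts = [leads, mqls, sals, sqls, customers]
    report = {}
    for (stage, (low, high)), (top, bottom) in zip(BENCHMARKS.items(), zip(counts, counts[1:])):
        rate = bottom / top if top else 0.0
        status = "low" if rate < low else "high" if rate > high else "ok"
        report[stage] = (round(rate, 2), status)
    return report


report = funnel_report(leads=1000, mqls=300, sals=90, sqls=40, customers=10)
print(report["mql_to_sal"])  # (0.3, 'low'): threshold too low, or sloppy assignment
```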

Now the SLA part. Look, "same day" isn't an SLA. Hot lead SLA should be minutes, not hours, and it should be paired with an auto-triggered workflow so the rep doesn't have to remember to be fast.

A practical mapping:

| Temperature | Funnel stage | Owner | Required action | SLA |
|---|---|---|---|---|
| Hot | MQL -> SAL | SDR/AE | call + 1:1 email | 5-15 min |
| Warm | MQL | SDR | sequence + light call | 24 hrs |
| Neutral | Lead | Marketing | nurture | weekly |
| At Risk | recycled | SDR | reactivation sequence | 3-5 days |
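An SLA only holds if something checks it. Here's a minimal Python sketch. It assumes you log when a lead entered its temperature and when the first touch happened. It uses the strict end of each range (15 minutes for Hot, 5 days for At Risk) and one week for the "weekly" Neutral touch. The Hot example mirrors the 10:02am lead touched at 4:30pm.

```python
from datetime import datetime, timedelta
from typing import Optional

# Strict end of each SLA window in the table above; Cold/Frozen have no SLA.
SLA_LIMITS = {
    "Hot": timedelta(minutes=15),
    "Warm": timedelta(hours=24),
    "Neutral": timedelta(weeks=1),
    "At Risk": timedelta(days=5),
}


def sla_breached(temperature: str, entered_at: datetime,
                 first_touch_at: Optional[datetime], now: datetime) -> bool:
    """True if the first touch (or the lack of one so far) missed the SLA."""
    limit = SLA_LIMITS.get(temperature)
    if limit is None:
        return False
    deadline = entered_at + limit
    checked_at = first_touch_at if first_touch_at is not None else now
    return checked_at > deadline


hot_at = datetime(2026, 3, 2, 10, 2)
print(sla_breached("Hot", hot_at, datetime(2026, 3, 2, 16, 30), datetime(2026, 3, 2, 17, 0)))  # True
```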

Metrics to track (name them, or you'll drift back to vibes)

If you want this to survive past rollout, track these weekly:

- First response time (by temperature)

- Connect rate (calls answered / dials) and/or reply rate - align on definitions like contact rate vs connect rate.

- Meeting booked rate (by temperature)

- MQL -> SAL conversion

- SAL -> SQL conversion

- Bounce rate (email deliverability) for Hot leads
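Once activity is tagged with the lead's temperature, most of these metrics are simple aggregates. Here's a hedged Python sketch. It assumes one record per lead, with hypothetical field names (`temperature`, `response_minutes`, `dials`, `answered`, `meeting_booked`). It uses the median for first response time so a single outlier doesn't distort the week.

```python
from collections import defaultdict
from statistics import median


def weekly_metrics(records: list[dict]) -> dict[str, dict[str, float]]:
    """First response, connect rate, and meeting rate, grouped by temperature."""
    by_temp: dict[str, list[dict]] = defaultdict(list)
    for r in records:
        by_temp[r["temperature"]].append(r)
    out = {}
    for temp, rows in by_temp.items():
        dials = sum(r["dials"] for r in rows)
        out[temp] = {
            "median_first_response_min": median(r["response_minutes"] for r in rows),
            "connect_rate": sum(r["answered"] for r in rows) / dials if dials else 0.0,
            "meeting_rate": sum(r["meeting_booked"] for r in rows) / len(rows),
        }
    return out


records = [
    {"temperature": "Hot", "response_minutes": 5, "dials": 2, "answered": 1, "meeting_booked": True},
    {"temperature": "Hot", "response_minutes": 12, "dials": 1, "answered": 0, "meeting_booked": False},
    {"temperature": "Hot", "response_minutes": 40, "dials": 3, "answered": 1, "meeting_booked": False},
    {"temperature": "Warm", "response_minutes": 600, "dials": 1, "answered": 1, "meeting_booked": False},
]
print(weekly_metrics(records)["Hot"]["median_first_response_min"])  # 12
```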

Hot take: if your average deal size is small, you probably don't need a fancy 40-criterion model. You need fast routing, a tight Hot definition, and ruthless recency. Complexity is a tax on speed.

Implement in HubSpot (2026-ready)

If you're rebuilding scoring in HubSpot, you're not imagining it - the ground shifted.

HubSpot's legacy scoring properties were replaced by the newer scoring tools; Aug 31, 2026 is when legacy scores stop updating. In 2026, the Lead Scoring tool is the default, and it supports scoring across Contacts, Companies, and Deals (with Deals using a combined score).

Start with architecture, not criteria.

HubSpot scoring mechanics you need to design for

You can configure:

- an overall score limit (max points)

- group limits (caps per group)

- criteria that add or subtract points (including decimals)

You can also use scores in lists, workflows, and reporting, which is why temperature scoring becomes an operating system, not just a number.

HubSpot supports three patterns:

- Fit score

- Engagement score

- Combined score (often 100 total, split 50/50)

If you want temperature states, Combined is usually easiest because it gives you one number for routing, while still letting you keep Fit and Engagement visible for diagnosis.

HubSpot plan gotcha: Pro caps total score at 100; Enterprise can go higher (up to 500). If you want a 100-point temperature model, Pro's perfect - just design within caps.

Also, object availability depends on your HubSpot subscription (Marketing Hub vs Sales Hub), so confirm which objects you can score before you design the model.

A practical HubSpot setup (Fit + Engagement + Combined)

Step 1: Decide your score ceiling. Most teams should stick to 100. It forces discipline.

Step 2: Create two groups: Fit (0-50) and Engagement (0-50). Add group limits so you don't accidentally let Engagement hit 80 because someone clicked five emails.

Step 3: Add Fit criteria (firmographics + role). Keep it to 5-7 criteria total across the whole model at first.

Step 4: Add Engagement criteria with time frames or decay. Use time frames for the "this is happening now" events.

Step 5: Implement a Fit/Engagement grid for routing. This is the cleanest way to avoid "high engagement, terrible fit" leads wasting SDR time.

Use this A/B/C + 1/2/3 example:

- Fit: A = 38-50, B = 24-37, C = 0-23

- Engagement: 1 = 35-50, 2 = 18-34, 3 = 0-17

Operationally:

- A1 = immediate SDR/AE action (true hot)

- A2/B1 = work fast (warm-to-hot)

- C1 = nurture or qualify harder (often noisy)
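The grid is just two banding functions. Here's a minimal Python sketch using the cut points above. The text only defines A1, A2/B1, and C1. Every other cell falls back to a hypothetical default queue that you'd define yourself.

```python
def fit_band(fit: int) -> str:
    """A = 38-50, B = 24-37, C = 0-23."""
    return "A" if fit >= 38 else "B" if fit >= 24 else "C"


def engagement_band(engagement: int) -> str:
    """1 = 35-50, 2 = 18-34, 3 = 0-17."""
    return "1" if engagement >= 35 else "2" if engagement >= 18 else "3"


ROUTING = {
    "A1": "immediate SDR/AE action",
    "A2": "work fast",
    "B1": "work fast",
    "C1": "nurture or qualify harder",
}


def grid_route(fit: int, engagement: int) -> tuple[str, str]:
    cell = fit_band(fit) + engagement_band(engagement)
    return cell, ROUTING.get(cell, "default queue")  # other cells: your own rule


print(grid_route(42, 50))  # ('A1', 'immediate SDR/AE action')
print(grid_route(15, 45))  # ('C1', 'nurture or qualify harder')
```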

Step 6: Build workflows that do something. When someone becomes Hot (or A1), trigger owner assignment, task creation, sequence enrollment, internal notification, and an SLA timer (if you track it).
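Here's the shape of that workflow as a Python sketch. The four callbacks are hypothetical stand-ins for whatever your CRM, sequencer, and chat tool expose. Everything fires once, on the transition into Hot. The due time comes from the SLA, so nobody has to remember to be fast.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable


@dataclass
class HotLeadActions:
    """Hypothetical hooks; wire each to your CRM, sequencer, or chat tool."""
    assign_owner: Callable[[str], str]                  # lead_id -> owner
    create_task: Callable[[str, str, datetime], None]   # owner, lead_id, due
    enroll_sequence: Callable[[str, str], None]         # lead_id, sequence name
    notify: Callable[[str, str], None]                  # owner, message


def on_enter_hot(lead_id: str, old: str, new: str, actions: HotLeadActions,
                 now: datetime, sla: timedelta = timedelta(minutes=15)) -> bool:
    """Fire the Hot playbook once, on the transition into Hot."""
    if new != "Hot" or old == "Hot":
        return False
    owner = actions.assign_owner(lead_id)
    due = now + sla
    actions.create_task(owner, lead_id, due)
    actions.enroll_sequence(lead_id, "hot")
    actions.notify(owner, f"Hot lead {lead_id}: first touch due by {due:%H:%M}")
    return True


demo = HotLeadActions(
    assign_owner=lambda lead_id: "rep_1",
    create_task=lambda owner, lead_id, due: print("task", owner, lead_id, due),
    enroll_sequence=lambda lead_id, seq: print("enroll", lead_id, seq),
    notify=lambda owner, msg: print("notify", owner, msg),
)
on_enter_hot("L-1", "Warm", "Hot", demo, now=datetime(2026, 3, 2, 10, 2))
```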

Step 7: Add negative scoring. Competitors, personal emails, unsubscribes, careers traffic. Don't skip this.

Mini build table: event -> criterion -> points -> recency choice

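Use this as your "RevOps ticket" starter. In HubSpot, put the time-frame rows and the decay rows into separate Engagement groups (for example, intent vs. activity), because time frame and decay can't coexist in one group: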

| Property / event | Score group | Criterion | Points | Time frame or decay |
|---|---|---|---|---|
| Demo request form submission | Engagement | Demo request | +40 | Time frame: 14 days |
| Meeting booked | Engagement | Meeting booked | +50 | Time frame: 14 days |
| Pricing page view | Engagement | Pricing page | +15 | Time frame: 7-14 days |
| Integration/Security page view | Engagement | High-intent page | +10 | Time frame: 30 days |
| Marketing email click | Engagement | Click | +5 (cap 3) | Decay: -25% monthly |
| Webinar attended | Engagement | Attended | +15 | Decay: -25% monthly |
| Job title contains VP/Director | Fit | Seniority | +20 / +12 | No decay |
| Company size in ICP band | Fit | ICP size | +15 | No decay |
| Unsubscribe | Negative | Unsubscribe | -25 | Immediate |
| Careers page / job apply | Negative | Job seeker | -25 | Immediate |
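As written, every Engagement row sits in one score group, even though some rows use time frames and others use decay. The HubSpot constraint covered earlier doesn't allow that combination. A small Python check over the table rows catches it. The row tuples, the split into "Engagement: intent" and "Engagement: activity", and the function names are hypothetical. You'd still need to divide the 50-point Engagement cap between the two groups.

```python
from collections import defaultdict

# (event, score group, points, recency) from the build table; VP value used for the title row.
BUILD_ROWS = [
    ("Demo request form submission", "Engagement", 40, "time_frame"),
    ("Meeting booked", "Engagement", 50, "time_frame"),
    ("Pricing page view", "Engagement", 15, "time_frame"),
    ("Integration/Security page view", "Engagement", 10, "time_frame"),
    ("Marketing email click", "Engagement", 5, "decay"),
    ("Webinar attended", "Engagement", 15, "decay"),
    ("Job title contains VP/Director", "Fit", 20, "none"),
    ("Company size in ICP band", "Fit", 15, "none"),
    ("Unsubscribe", "Negative", -25, "immediate"),
    ("Careers page / job apply", "Negative", -25, "immediate"),
]


def mixed_recency_groups(rows: list[tuple[str, str, int, str]]) -> list[str]:
    """Groups that contain both time-frame and decay criteria."""
    modes: dict[str, set[str]] = defaultdict(set)
    for _event, group, _points, recency in rows:
        modes[group].add(recency)
    return sorted(g for g, m in modes.items() if {"time_frame", "decay"} <= m)


def split_engagement(rows: list[tuple[str, str, int, str]]) -> list[tuple[str, str, int, str]]:
    """Move time-framed and decaying Engagement rows into separate groups."""
    fixed = []
    for event, group, points, recency in rows:
        if group == "Engagement":
            group = "Engagement: intent" if recency == "time_frame" else "Engagement: activity"
        fixed.append((event, group, points, recency))
    return fixed


print(mixed_recency_groups(BUILD_ROWS))                    # ['Engagement']
print(mixed_recency_groups(split_engagement(BUILD_ROWS)))  # []
```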

Rule-based vs predictive (when to use which)

Rule-based scoring wins when you need something reps will trust next week. It's great for early teams, clear ICPs, and any org that's still cleaning up lifecycle stages and attribution because you can explain every point and fix obvious failure modes (job seekers, competitors, stale activity) without retraining a model.

Predictive scoring earns its keep when you have enough closed-won and closed-lost volume and your data's clean: consistent lifecycle stages, reliable source tracking, and stable product packaging. If your CRM is a junk drawer, predictive learns your mess faster.

The best setup is hybrid: let predictive influence the score, but keep temperature operational. Temperature still needs explicit thresholds, recency rules, lead routing, and SLAs. Even the smartest model can't save you from slow first response time - compare approaches in AI lead scoring vs traditional lead scoring.

If you're not on HubSpot: universal scoring inputs (Salesforce-style)

You can run this in any CRM/marketing automation stack if you standardize inputs.

Salesforce's categories are a good universal checklist:

- Demographic (role/seniority)

- Company (size, industry, geo, tech)

- Behavioral (pages, forms, events, product usage)

- Spam (junk, bots, students/job seekers)

Automation matters because reps waste time on prioritization and research. Salesforce breaks it down like this: reps spend 8% prioritizing, 9% researching, and 8% prospecting in an average week.

Implementation path that works anywhere:

- Store Fit score and Engagement score as separate fields (so you can debug).

- Compute Combined score in automation (workflow/flow) and map it to temperature labels.

- Apply decay with a scheduled job/workflow (weekly or monthly) that reduces Engagement and re-evaluates temperature.
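The scheduled decay job can be this small. Here's a Python sketch with three assumptions. Fit and Engagement live in separate fields. Engagement shrinks by a compounding 25% each month. Labels are score-only. A real job would also re-apply the recency windows. `LeadRecord` and `monthly_decay_job` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class LeadRecord:
    fit: float
    engagement: float
    temperature: str = ""


def label(combined: float) -> str:
    """Score-only bands from the threshold table."""
    if combined >= 75:
        return "Hot"
    if combined >= 50:
        return "Warm"
    if combined >= 25:
        return "Neutral"
    return "Cold"


def monthly_decay_job(leads: dict[str, LeadRecord], rate: float = 0.25) -> list[tuple[str, str, str]]:
    """Decay Engagement, recompute Combined, relabel; return (id, old, new) for changes."""
    changes = []
    for lead_id, lead in leads.items():
        lead.engagement = round(lead.engagement * (1 - rate), 2)
        new_label = label(lead.fit + lead.engagement)
        if new_label != lead.temperature:
            changes.append((lead_id, lead.temperature, new_label))
            lead.temperature = new_label
    return changes


leads = {"A": LeadRecord(42, 50, "Hot"), "B": LeadRecord(40, 12, "Warm")}
print(monthly_decay_job(leads))  # [('B', 'Warm', 'Neutral')]
```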

Checklist to implement anywhere:

- define Fit fields (pick 3-5)

- define Engagement events (pick 3-5)

- define negative rules (pick 3-5)

- define recency (time frame or decay)

- define routing + SLA per temperature

Audit & iterate (because "a score you can't explain won't be used")

If reps don't trust the score, they won't use it. And if they don't use it, you'll never get clean feedback to improve it.

The recurring HubSpot practitioner complaint is that scores feel like a "black box" because you can't see a satisfying per-contact breakdown after publishing. That adoption killer is real, and it shows up as quiet sabotage: reps ignore the score, managers stop coaching to it, and marketing keeps shipping MQLs that sales doesn't want.

Workarounds that work:

- Create filtered views: "Hot leads created last 14 days," "Hot but no meeting," "Warm with high fit," etc.

- Add columns for contributing properties: last conversion, last seen, key pageviews, form submissions, job title, company size.

- Use test records: create a handful of known personas/accounts and force the behaviors to see if the score behaves.

- Run parallel scores for 2-4 weeks: keep your old model and a new model live, compare which one predicts meetings/opportunities better.
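During the parallel run, the model comparison can stay simple. For each model, measure how many of its Hot leads booked a meeting (precision) and how many meetings came from leads it called Hot (coverage). Here's a Python sketch with hypothetical field names. With a small sample, treat the result as a signal, not a verdict.

```python
def hot_stats(leads: list[dict], label_key: str) -> tuple[float, float]:
    """(precision, coverage) of the Hot label under one model."""
    hot = [lead for lead in leads if lead[label_key] == "Hot"]
    meetings = [lead for lead in leads if lead["meeting"]]
    precision = sum(lead["meeting"] for lead in hot) / len(hot) if hot else 0.0
    coverage = (sum(lead[label_key] == "Hot" for lead in meetings) / len(meetings)
                if meetings else 0.0)
    return precision, coverage


leads = [
    {"old_label": "Hot", "new_label": "Hot", "meeting": True},
    {"old_label": "Hot", "new_label": "Warm", "meeting": False},
    {"old_label": "Warm", "new_label": "Hot", "meeting": True},
    {"old_label": "Hot", "new_label": "Cold", "meeting": False},
]
for model in ("old_label", "new_label"):
    print(model, hot_stats(leads, model))
```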

Troubleshooting playbook (common failures + fixes):

- Job seekers inflate heat: exclude careers pages from engagement, add a -25 penalty for careers/apply events, and suppress student domains if relevant.

- Email clicks dominate: cap click points and move "real intent" (demo/pricing/meeting) up in weight.

- Stale hot leads: add decay (-25% monthly) or strict time frames on high-intent criteria.

- Too many MQLs: raise threshold or tighten Fit caps; don't just lower Engagement weights blindly.

I've watched teams waste months debating weights while never checking whether "Hot" leads become meetings. Audit first, argue later.

Data quality makes "hot" actionable (reach them on the first attempt)

A hot lead you can't reach is just a dashboard artifact.

If your hot-lead SLA is 5-15 minutes, bounced emails and missing mobiles are fatal. Your reps will "follow up" and still lose because they never made contact. Treat reachability as part of the temperature operating model, because it is.

Prospeo verifies emails at 98% accuracy, provides 125M+ verified mobile numbers with a 30% pickup rate, and refreshes data every 7 days (the industry average is about 6 weeks). It returns contact data for 83% of leads via enrichment and delivers a 92% API match rate, backed by 300M+ professional profiles and 143M+ verified emails.

Operational workflow:

- when a lead hits Hot, enrich/verify email + mobile

- push verified fields into your CRM + sequencer

- route to the owner with confidence that the first touch will land

If you're tightening deliverability as part of this workflow, use an email verifier and keep an email verification list SOP.

Your fit score needs fresh firmographics. Your engagement score needs real intent signals. Prospeo refreshes 300M+ profiles every 7 days and tracks 15,000 intent topics - so your temperature model runs on data that's actually current.

Fuel your lead scoring with data refreshed weekly, not monthly.

Copy/paste: scoring spec (v1)

Paste this into a RevOps ticket or internal doc and adjust the weights to your ICP.

lead_temperature_scoring_v1:
  score_ceiling: 100
  groups:
    fit:
      max_points: 50
      caps:
        role_seniority: 30
        company_size: 15
        industry: 15
        tech_stack: 10
        geography: 5
      rules:
        - name: role_seniority
          criteria:
            c_level: 30
            vp: 20
            director: 12
            individual_contributor: 5
        - name: company_size
          criteria:
            icp_range: 15
            adjacent: 5
            wrong: -20
        - name: industry
          criteria:
            target: 15
            adjacent: 5
            excluded: -25
        - name: tech_stack
          criteria:
            uses_key_tech: 10
            competitor_tech: -20
        - name: geography
          criteria:
            in_territory: 5
            out_of_territory: -10
    engagement:
      max_points: 50
      cap_notes: "Cap frequency-based actions (clicks/pageviews)."
      # In HubSpot, time-frame and decay criteria can't share a group:
      # split the time-framed rules and the decaying rules into two groups there.
      recency:
        default: "decay"
        decay_rate: "-25% monthly"
      rules:
        - name: demo_request
          points: 40
          recency: "time_frame"
          time_frame_days: 14
        - name: pricing_request_or_contact_sales
          points: 30
          recency: "time_frame"
          time_frame_days: 14
        - name: meeting_booked
          points: 50
          recency: "time_frame"
          time_frame_days: 14
        - name: pricing_page_view
          points: 15
          cap: 25
          recency: "time_frame"
          time_frame_days: 14
        - name: integration_or_security_page_view
          points: 10
          cap: 25
          recency: "time_frame"
          time_frame_days: 30
        - name: email_click
          points: 5
          frequency_cap: 3
          recency: "decay"
        - name: webinar_attended
          points: 15
          recency: "decay"
        - name: webinar_registered
          points: 7
          recency: "decay"
  negative_scoring:
    rules:
      competitor_match: -50
      unsubscribe: -25
      personal_email_domain: -15
      careers_or_job_apply_behavior: -25
      wrong_company_size: -20
      single_page_bounce_under_10s: -10
      no_engagement_90_days: -10
  temperature_labels:
    hot:
      combined_score: "75-100"
      recency_rule: "activity within last 7-14 days"
      action: "route now; call + 1:1 email"
      sla: "5-15 minutes"
    warm:
      combined_score: "50-74"
      recency_rule: "activity within last 30 days"
      action: "SDR sequence + light call"
      sla: "24 hours"
    neutral:
      combined_score: "25-49"
      recency_rule: "any"
      action: "nurture track"
      sla: "weekly touch"
    cold:
      combined_score: "0-24"
      recency_rule: "any"
      action: "suppress/recycle"
      sla: "none"
    frozen:
      combined_score: "any"
      recency_rule: "explicit not-now date"
      action: "pause until date"
      sla: "none"
    at_risk:
      trigger: "was hot; no activity > 3 months"
      action: "reactivation sequence"
      sla: "3-5 days"
  funnel_thresholds:
    mql:
      method: "set threshold to capture top ~20% of leads by combined score"
      starting_range: "50-75"
    sal:
      definition: "sales reviewed and committed to follow-up motion"
    sql:
      definition: "sales engaged and confirmed real opportunity"

FAQ

What's the difference between lead scoring and lead temperature scoring?

Lead scoring is the points model (fit + engagement) that ranks leads by value, while lead temperature scoring is the operational label driven by score + recency that triggers routing, SLAs, and next actions. In practice, scoring computes the number; temperature decides who gets worked first and within what time window.

What's a good MQL threshold on a 100-point model?

A strong starting MQL threshold is 50-75 points out of 100, set so it captures the top ~20% of leads by score over the last 60-90 days. If MQL -> SAL drops below 70%, raise the threshold or tighten Fit caps before you touch Engagement weights.

Should I use score decay or a time frame window?

Use time frames for high-intent events where freshness is binary (demo request, meeting booked, pricing request) and decay for blended engagement that should fade gradually (clicks, webinar activity). In HubSpot, time frame (criterion-level) and decay (group-level) can't coexist in the same group, so pick one approach per group.

What's a good free tool for verifying "hot" lead contact data?

Prospeo includes a free tier with 75 emails + 100 Chrome extension credits/month, verifies emails at 98% accuracy, and refreshes data every 7 days. If you're comparing options, prioritize anything that verifies deliverability and returns mobiles - bad data breaks a 5-15 minute SLA.

How do I make sure "hot leads" are actually reachable?

Make reachability part of the workflow: when a lead becomes Hot, verify the email and enrich a mobile number before routing the task. Prospeo returns contact data for 83% of leads via enrichment and provides 125M+ verified mobiles with a 30% pickup rate, so first-touch attempts turn into real conversations.

Summary: make temperature an operating system, not a label

If you want lead temperature scoring to move pipeline, keep it simple: split Fit and Engagement, cap the noisy signals, add negative scoring, and enforce recency so "hot" means "now." Then wire routing + a minutes-based SLA, and make sure your contact data's reachable so the first touch lands.