Speed-to-Lead Metrics: The Complete KPI Framework (2026)

Your "responded in 5 minutes" SLA can be green and your pipeline can still be dead.

That happens when you measure attempts like they're conversations, and you ignore the long tail where leads quietly rot. We'll break down the speed to lead metrics that actually explain what's happening, so you can fix routing, coverage, and contactability instead of arguing about averages.

What you need (quick version)

- Track three core KPIs: Time to First Attempt (TTFA), Time to First Contact (TTFC), and Time to First Qualification (TTFQ). Everything else supports these.

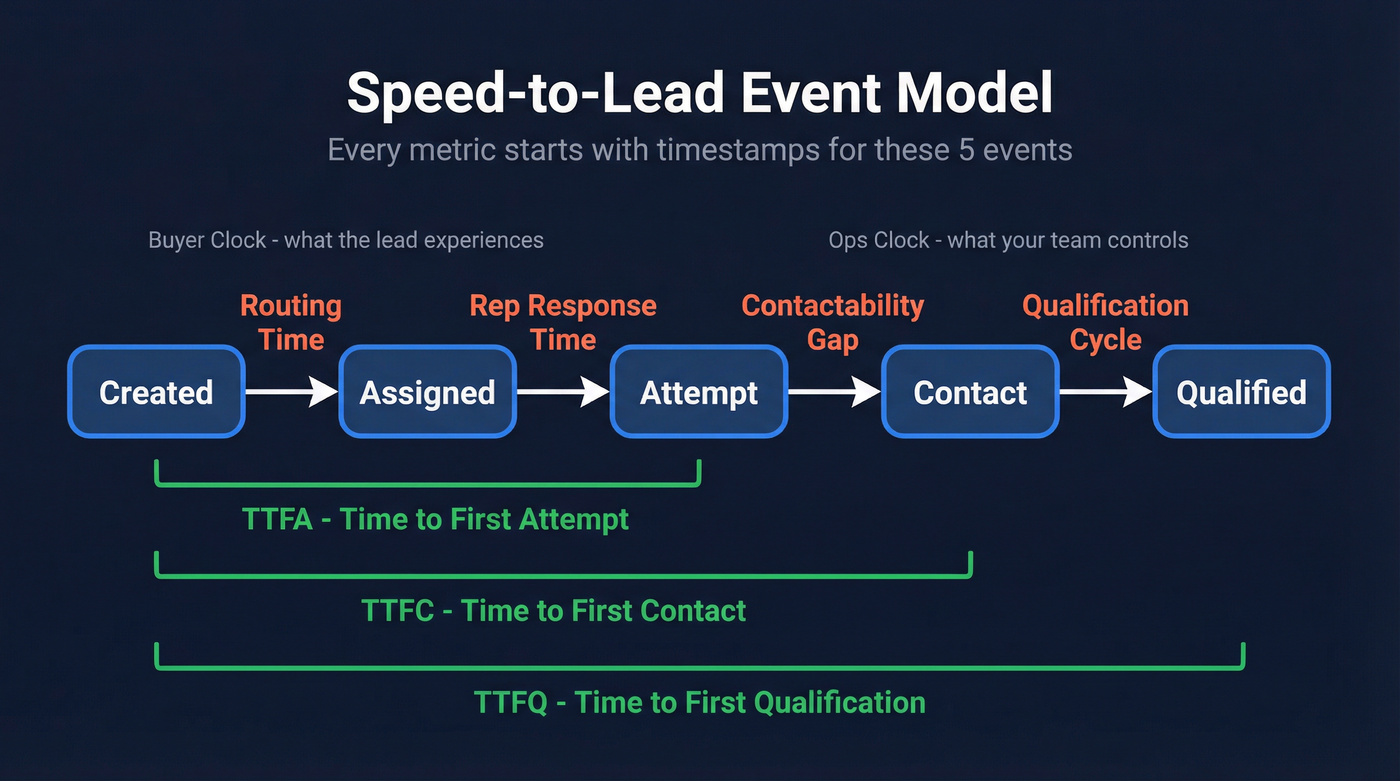

- Use an event model, not "first activity": Created → Assigned → Attempt → Contact → Qualified. If you can't point to timestamps for each, your dashboard is fiction.

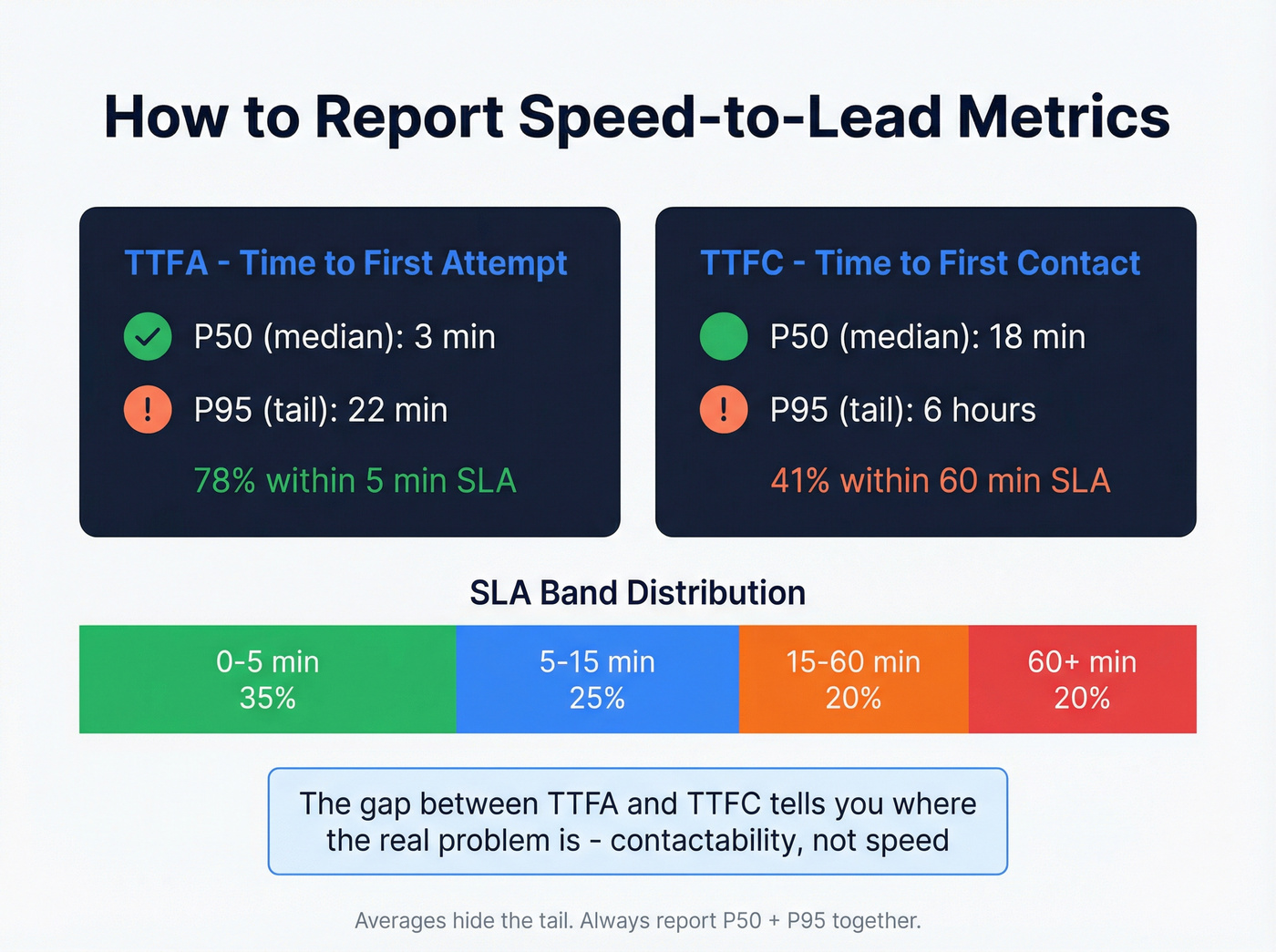

- Report P50 + P95 (median + tail) for TTFA/TTFC/TTFQ, plus % within SLA bands. Averages lie in long-tail workflows.

- Standard SLA bands that work operationally: 0-5 / 5-15 / 15-60 / 60+ minutes.

- Always separate attempt vs contact. A call logged or an email sent isn't "contact."

- Add contactability metrics next to speed: email bounce rate, phone connect rate, answer rate.

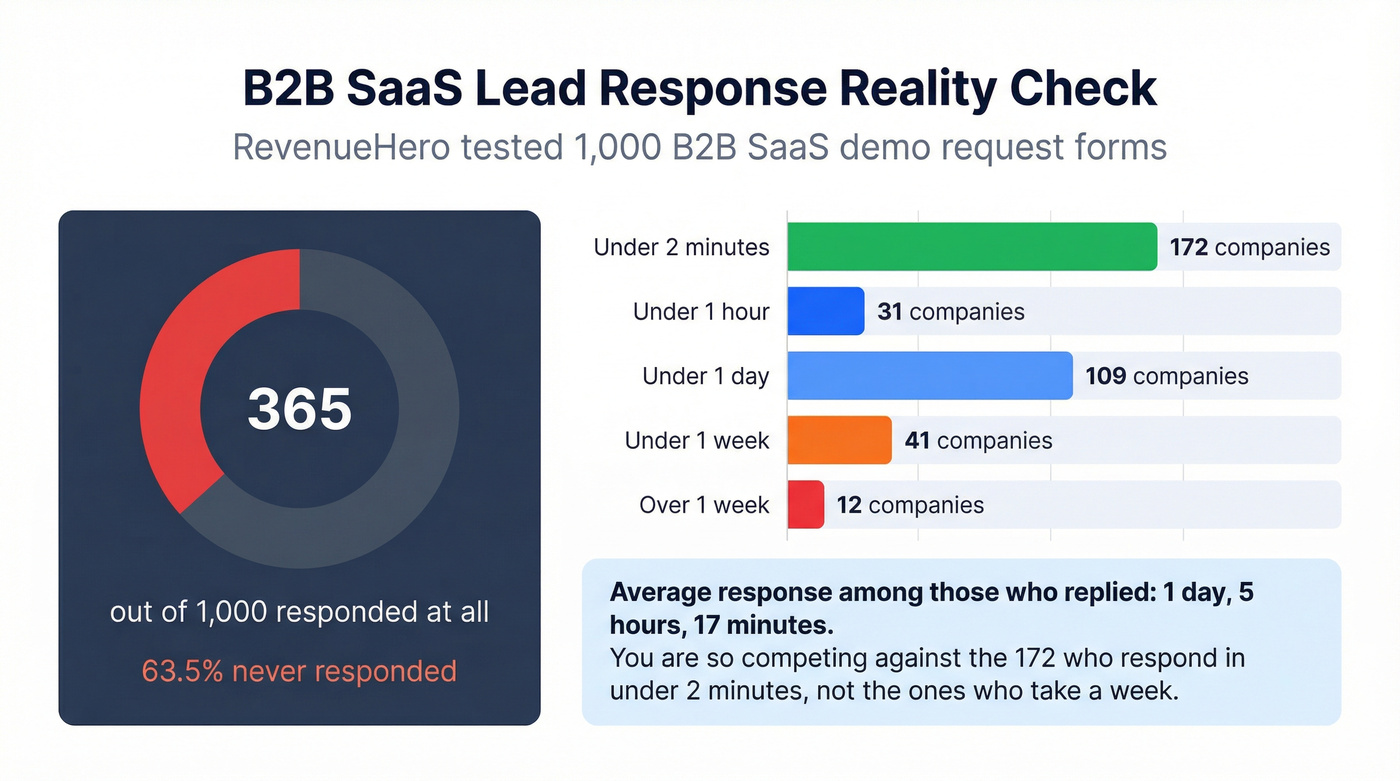

- Benchmark reality check: RevenueHero tested 1,000 B2B SaaS demo forms - only 365/1000 responded, and responders averaged 1d 5h 17m.

- Hot take: if your email bounce rate's above 2%, stop arguing about shaving minutes off TTFA. Fix data quality first.

What "speed-to-lead" actually measures (and what it doesn't)

Speed-to-lead measures the time between a lead raising their hand and your team taking action - ideally making real contact. It's a proxy for "did we treat this like a hot opportunity or like a backlog item?" Done right, it's one of the clearest predictors of conversion because it captures whether high-intent buyers get a timely, human response.

It doesn't measure lead quality, message quality, routing quality, or follow-up quality. Teams get burned when they hit a fast "first touch" and still lose because the touch was a generic email, sent to a bad address, with no second attempt.

The canonical reference everyone quotes is the Harvard Business Review article "The Short Life of Online Sales Leads" (James B. Oldroyd, Kristina McElheran, David Elkington, Harvard Business Review, Vol 89, No. 3, March 2011). It's the origin of the "minutes matter" argument that kicked off modern lead response management.

Here's the thing: speed-to-lead is only meaningful if your start/stop points match the buyer's reality. A lead doesn't care when a Task got created; they care when a human actually engaged them, and they definitely don't care that your CRM says "touched" because an auto-email fired.

Common misconceptions (the ones I see every quarter):

- "We're fast because we send an auto-email instantly." That's not contact.

- "We're slow because reps don't log Tasks." That's instrumentation, not performance.

- "Our average is 12 minutes." Your P95's probably 18 hours.

Speed to lead metrics framework: KPIs, not one stopwatch

The cleanest way to build this is to treat speed-to-lead like an SRE latency problem: define events, compute durations between events, then report percentiles and SLA attainment.

The best event definitions I've found come from LRM's published study definitions (based on the InsideSales.com dataset): 3 years, 6 companies, 15,000+ leads, 100,000+ call attempts. The win isn't the scale; it's the rigor around what counts as a real attempt and a real contact, which is exactly where most teams accidentally cook their numbers.

Two clocks you should report (this ends the "Created vs Assigned" argument)

You need two stopwatches, not one:

- Buyer clock (primary KPI): Created → Attempt/Contact/Qualified. This is the experience the lead lives through, and it's what impacts conversion.

- Ops clock (routing/coverage KPI): Assigned → Attempt/Contact. This isolates what your team controls: routing rules, round-robin, staffing, and alerting.

Recommendation: keep Created-based metrics as your headline numbers, and use Assigned-based metrics to debug ops. If you only report Assigned-based speed, you can "improve" by delaying assignment, which is the dumbest kind of win.

KPI definitions + formulas (use this table as your spec)

| KPI | Start event | Stop event | Report as | Why it matters |
|---|---|---|---|---|
| TTFA | Lead Created | First Attempt | P50/P95 + % SLA | Measures routing + responsiveness |
| TTFC | Lead Created | First Contact | P50/P95 + % SLA | Measures "real engagement" speed |
| TTFQ | Lead Created | First Qualification | P50/P95 | Measures sales readiness velocity |
| SLA Attainment | Lead Created | Attempt/Contact | % in bands | Makes SLAs enforceable |
| Contactability | Attempt | Contact | Rates | Explains "fast but no pipeline" |

Time to First Attempt (TTFA) - start/stop timestamps

- Start: `Lead_Created_At` (web form submit, inbound call start, chat start, etc.)

- Stop: `First_Attempt_At` (first outbound call placed, first SMS sent, first email sent, first chat reply)

Formula:

TTFA_minutes = (First_Attempt_At - Lead_Created_At) in minutes
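A minimal sketch of the duration formulas in Python, assuming each lead's events are available as naive `datetime` values in one time zone (UTC, say). The `lead` dict and its values are hypothetical; the keys mirror the field names in the KPI definitions. A missing stop event returns `None` rather than zero, so leads that were never attempted stay visible instead of quietly improving the numbers.

```python
from datetime import datetime
from typing import Optional


def minutes_between(start: Optional[datetime], stop: Optional[datetime]) -> Optional[float]:
    """Real (wall-clock) minutes from start to stop, or None if either event is missing."""
    if start is None or stop is None:
        return None
    return (stop - start).total_seconds() / 60


# Hypothetical lead record; timestamps assumed naive and in the same time zone.
lead = {
    "Lead_Created_At": datetime(2026, 3, 4, 14, 0),
    "First_Attempt_At": datetime(2026, 3, 4, 14, 3),
    "First_Contact_At": datetime(2026, 3, 4, 14, 18),
    "First_Qualified_At": None,  # not qualified yet
}

ttfa_minutes = minutes_between(lead["Lead_Created_At"], lead["First_Attempt_At"])
ttfc_minutes = minutes_between(lead["Lead_Created_At"], lead["First_Contact_At"])
ttfq_minutes = minutes_between(lead["Lead_Created_At"], lead["First_Qualified_At"])
ttfq_hours = None if ttfq_minutes is None else ttfq_minutes / 60  # TTFQ is reported in hours

print(ttfa_minutes, ttfc_minutes, ttfq_hours)  # 3.0 18.0 None
```

The same helper covers TTFC and TTFQ; only the stop event and the output unit change.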

TTFA's the metric your routing and alerting systems can move quickly.

Skip this if: your CRM can't reliably stamp First_Attempt_At. Stop building dashboards and fix instrumentation first.

Time to First Contact (TTFC) - define "contact" as a successful conversation

- Start: `Lead_Created_At`

- Stop: `First_Contact_At`

Use the LRM definition: contact = successful call resulting in a conversation of a predetermined duration. In practice, teams implement this as:

- call connected + talk time ≥ X seconds, or

- inbound chat with at least one back-and-forth, or

- email reply received from a human

Guardrail (non-negotiable): exclude auto-replies/out-of-office and "wrong person" replies from contact; treat them as a routing/data-quality event.
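As a sketch, the contact rules and the guardrail can be written as one predicate. Everything here is an assumption for illustration: the `Touch` fields stand in for whatever your dialer, chat tool, and inbox actually record, and the 60-second talk threshold is a placeholder for your own "predetermined duration."

```python
from dataclasses import dataclass

MIN_TALK_SECONDS = 60  # placeholder for your own "predetermined duration"


@dataclass
class Touch:
    kind: str                   # "call", "chat", or "email" (hypothetical schema)
    connected: bool = False     # call was picked up
    talk_seconds: int = 0       # talk time reported by the dialer
    agent_msgs: int = 0         # chat messages sent by our side
    lead_msgs: int = 0          # chat messages sent by the lead
    human_reply: bool = False   # email reply written by a person
    auto_reply: bool = False    # out-of-office or autoresponder
    wrong_person: bool = False  # reached or replied from the wrong contact


def is_contact(t: Touch) -> bool:
    """True only when the touch counts as a real conversation."""
    # Guardrail: auto-replies and wrong-person responses are routing/data-quality events.
    if t.auto_reply or t.wrong_person:
        return False
    if t.kind == "call":
        return t.connected and t.talk_seconds >= MIN_TALK_SECONDS
    if t.kind == "chat":
        # At least one back-and-forth: both sides have sent a message.
        return t.agent_msgs >= 1 and t.lead_msgs >= 1
    if t.kind == "email":
        return t.human_reply
    return False


print(is_contact(Touch("call", connected=True, talk_seconds=95)))       # True
print(is_contact(Touch("email", human_reply=True, wrong_person=True)))  # False
```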

Formula:

TTFC_minutes = (First_Contact_At - Lead_Created_At) in minutes

If you only track TTFA, you're optimizing for activity, not outcomes. TTFC is where reality shows up, and it's the metric most directly tied to inbound conversion because it measures the first moment a buyer actually gets a human.

Time to First Qualification (TTFQ) - meeting booked / sales-ready milestone

- Start: `Lead_Created_At`

- Stop: `First_Qualified_At` (meeting booked, SQL created, "sales-ready" stage reached)

Formula:

TTFQ_hours = (First_Qualified_At - Lead_Created_At) in hours

TTFQ is where you'll see the truth about follow-up quality. Speed without qualification is just motion.

SLA Attainment (% within bands) - 0-5 / 5-15 / 15-60 / 60+ minutes

Pick one SLA target per channel/lead type, but always report the distribution:

- 0-5 minutes

- 5-15 minutes

- 15-60 minutes

- 60+ minutes

Then compute:

% within band = leads with TTFA (or TTFC) in band / total leads
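The bands as written share endpoints (5 and 15 each appear in two bands), so pick one convention and document it. This sketch assumes upper-inclusive bands (exactly 5.0 minutes counts as 0-5) and drops leads with no attempt into 60+, which keeps the denominator at total leads. The sample values are made up.

```python
from collections import Counter
from typing import Dict, Optional, Sequence

# (label, inclusive upper bound in minutes). Assumption: upper bounds are inclusive.
BANDS = [("0-5", 5.0), ("5-15", 15.0), ("15-60", 60.0)]
OVERFLOW = "60+"


def band_for(minutes: Optional[float]) -> str:
    """Map a TTFA or TTFC value to its SLA band; no attempt yet (None) counts as a breach."""
    if minutes is None:
        return OVERFLOW
    for label, upper in BANDS:
        if minutes <= upper:
            return label
    return OVERFLOW


def band_shares(values: Sequence[Optional[float]]) -> Dict[str, float]:
    """Percent of all leads per band; the denominator is total leads, not attempted leads."""
    counts = Counter(band_for(v) for v in values)
    total = max(len(values), 1)  # avoid dividing by zero on an empty period
    labels = [label for label, _ in BANDS] + [OVERFLOW]
    return {label: 100 * counts[label] / total for label in labels}


ttfa = [2.0, 4.5, 5.0, 9.0, 30.0, 240.0, None, 1.0]  # hypothetical minutes
print(band_shares(ttfa))
# {'0-5': 50.0, '5-15': 12.5, '15-60': 12.5, '60+': 25.0}
```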

Contactability metrics - bounce rate, connect rate, answer rate (attempt ≠ contact)

This is the missing layer in most dashboards.

- Email bounce rate = bounces / emails sent

- Phone connect rate = connected calls / call attempts

- Answer rate = answered calls / call attempts (or answered / connected - just be consistent)
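A small sketch of the three ratios with a guard for empty denominators. The counts are hypothetical, and the answer rate uses the per-attempt denominator; swap in `connected_calls` if that's your definition.

```python
from typing import Dict


def safe_rate(numerator: int, denominator: int) -> float:
    """numerator/denominator as a percentage; 0.0 when there's nothing to divide by."""
    return 100 * numerator / denominator if denominator else 0.0


def contactability(emails_sent: int, bounces: int, call_attempts: int,
                   connected_calls: int, answered_calls: int) -> Dict[str, float]:
    return {
        "email_bounce_rate": safe_rate(bounces, emails_sent),
        "phone_connect_rate": safe_rate(connected_calls, call_attempts),
        # Per-attempt answer rate; use connected_calls as the denominator if that's your definition.
        "answer_rate": safe_rate(answered_calls, call_attempts),
    }


rates = contactability(emails_sent=500, bounces=15, call_attempts=400,
                       connected_calls=220, answered_calls=160)  # hypothetical counts
print(rates)
# {'email_bounce_rate': 3.0, 'phone_connect_rate': 55.0, 'answer_rate': 40.0}
```

At 3.0% bounce, this hypothetical team is over the 2% line, so data quality comes before shaving TTFA minutes.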

If TTFA's great but TTFC's terrible, it's almost always contactability or channel mismatch. If you need to operationalize this, start with a basic data quality scorecard and a weekly refresh workflow.

How to report speed-to-lead (P50/P95 + SLA bands)

Speed-to-lead is long-tailed. One rep on PTO, one lead routed wrong, one after-hours form fill, and your "average response time" turns into a comedy.

Averages are misleading in long-tail response-time distributions; percentiles show the typical experience and the tail. If you want a clean primer on why this works operationally, Dynatrace has solid percentile guidance, and OneUptime's percentile explainer does a nice job of making it concrete.

Report this way:

- P50 (median): the "typical" lead experience

- P95: the "bad but common" tail (5% of leads are slower than this)

- P99: the "this is broken" tail (optional, great for QA)

Then pair percentiles with SLA attainment:

- TTFA P50 + P95

- TTFC P50 + P95

- % within 5 minutes, % within 15, % within 60
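To see why the mean misleads, here's a sketch using the nearest-rank percentile definition (one of several; many BI tools interpolate instead, so expect small differences). The sample is invented: 18 leads attempted within minutes, one after-hours lead at 4 hours, and one weekend lead at 18 hours. The mean lands at about 69 minutes, a number no lead actually experienced, while P50 = 3 and P95 = 240 describe the typical case and the tail.

```python
import math
from statistics import mean


def percentile_nearest_rank(values, p):
    """Nearest-rank percentile: the smallest value with at least p% of observations at or below it."""
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


# Hypothetical TTFA sample in minutes: 18 quick responses, one 4-hour lead, one 18-hour weekend lead.
ttfa = [2, 3, 3, 4, 2, 5, 3, 4, 6, 2, 3, 4, 3, 5, 2, 4, 3, 7, 240, 1080]

print("mean", mean(ttfa))                        # mean 69.25
print("P50", percentile_nearest_rank(ttfa, 50))  # P50 3
print("P95", percentile_nearest_rank(ttfa, 95))  # P95 240
```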

Mini example (what a good report looks like):

- TTFA: P50 = 3 min, P95 = 22 min, 78% within 5 min

- TTFC: P50 = 18 min, P95 = 6 hours, 41% within 60 min

That tells you what to fix: not "speed," but contactability plus after-hours coverage.

Checklist for honest reporting:

- Use real-hours and business-hours side by side.

- Separate Attempt and Contact metrics.

- Exclude system-generated Tasks from "attempt" unless they represent real outreach.

- Don't let "auto-reply email" count as contact.

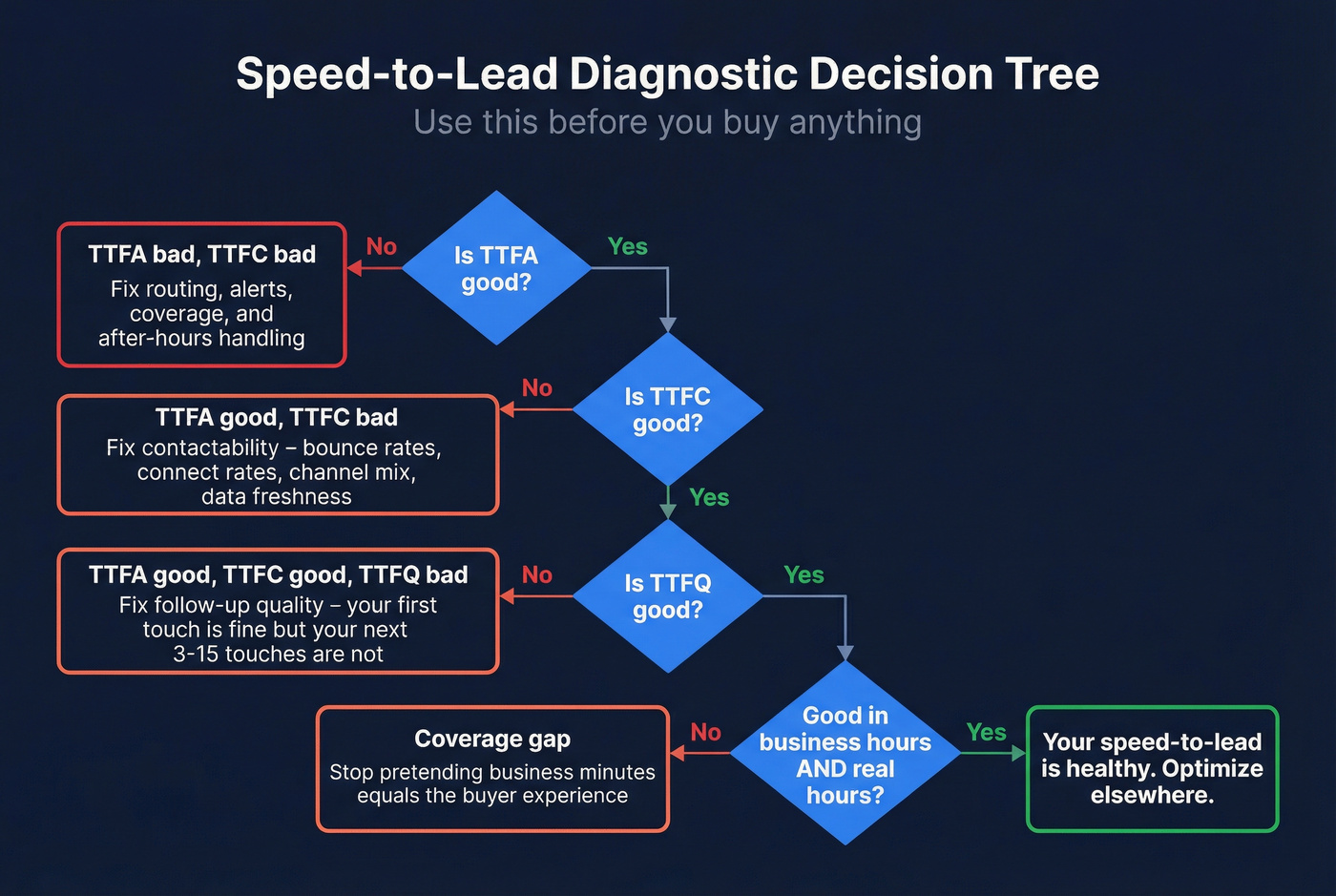

Diagnostic decision tree (use this before you buy anything)

- TTFA is bad, TTFC is bad → routing/alerts/coverage is broken. Fix assignment rules, notifications, and after-hours handling.

- TTFA is good, TTFC is bad → contactability's the bottleneck. Fix bounce/connect rates, channel mix, and data freshness.

- TTFA is good, TTFC is good, TTFQ is bad → follow-up quality's weak. Your first touch is fine; your next 3-15 touches aren't.

- Everything looks good in business-hours, bad in real-hours → you've got a coverage gap. Stop pretending "business minutes" is the buyer experience.
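The decision tree is small enough to encode directly, which helps if you want the dashboard to label the bottleneck automatically. In this sketch the `*_ok` flags are hypothetical inputs you'd derive from your own targets on the business-hours view, and `real_hours_ok` says whether the same metrics also pass on the real-hours clock. The TTFA-bad/TTFC-good branch isn't in the tree above; treating it as an instrumentation problem is an assumption.

```python
def diagnose(ttfa_ok: bool, ttfc_ok: bool, ttfq_ok: bool, real_hours_ok: bool = True) -> str:
    """Map pass/fail flags to the likely bottleneck, following the decision tree.

    ttfa_ok / ttfc_ok / ttfq_ok: hypothetical flags from the business-hours view.
    real_hours_ok: whether the same metrics also pass on the real-hours clock.
    """
    if not ttfa_ok and not ttfc_ok:
        return "routing/alerts/coverage"
    if ttfa_ok and not ttfc_ok:
        return "contactability"
    if not ttfa_ok and ttfc_ok:
        # Not in the tree; assumed to mean attempts aren't being logged reliably.
        return "instrumentation"
    if not ttfq_ok:
        return "follow-up quality"
    if not real_hours_ok:
        return "after-hours coverage gap"
    return "no obvious bottleneck: keep watching P95"


print(diagnose(ttfa_ok=True, ttfc_ok=False, ttfq_ok=False))  # contactability
```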

Your speed-to-lead dashboard says sub-5-minute TTFA. But your TTFC tells a different story - because emails bounce and phones ring out. Prospeo's 98% email accuracy and 30% mobile pickup rate close the gap between attempt and actual contact.

Stop optimizing for activity. Start reaching real buyers.

Benchmarks that matter in 2026 (modern distribution + classic decay)

Most teams still benchmark against folklore ("5 minutes or you lose"). I prefer two benchmark types: modern distribution benchmarks (what's actually happening) and decay benchmarks (why it matters). For extra context, compare this to broader average lead response time data.

Modern reality check (RevenueHero's experiment)

RevenueHero submitted demo requests to 1,000 B2B SaaS companies and only 365/1000 responded at all. Among responders, the average response time was 1d 5h 17m.

Methodology (why this benchmark's believable): they measured timestamp-to-response after submitting demo forms, used a separate work domain to avoid prior relationships, counted automated replies as "responses," and noted whether a scheduler/booking link appeared in the reply flow. That's the right level of rigor for a messy real-world channel.

The distribution's the real punchline:

- 172 responded instantly (<2 minutes)

- 31 more responded within 1 hour

- 109 more responded within 1 day

- 41 more responded within 1 week

- 12 took more than 1 week
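Because the five buckets sum to exactly 365, they're disjoint ranges rather than cumulative counts. This sketch turns them into cumulative shares: about 47% of responders (17.2% of all 1,000 forms) replied in under two minutes, and 63.5% of the companies never replied at all. The range labels are an interpretation of the buckets, not RevenueHero's wording.

```python
# RevenueHero's response buckets (1,000 demo forms submitted). The range labels
# are an interpretation: each bucket starts where the previous one ends.
buckets = [
    ("<2 min", 172),
    ("2 min-1 hour", 31),
    ("1 hour-1 day", 109),
    ("1 day-1 week", 41),
    (">1 week", 12),
]
SUBMITTED = 1000

responders = sum(n for _, n in buckets)
assert responders == 365  # disjoint buckets: together they account for every responder

running = 0
for label, n in buckets:
    running += n
    print(f"{label:>13}: {n:>3} | cumulative {running / responders:6.1%} of responders, "
          f"{running / SUBMITTED:6.1%} of all forms")

print(f"never responded: {(SUBMITTED - responders) / SUBMITTED:.1%}")
```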

You're competing against the companies responding in minutes, not the ones responding next week.

The "60 seconds" narrative (and my opinion)

Look, "60-second response" is real for inbound calls and live chat, and for teams with routing that's basically instant. For demo forms, chasing sub-60 seconds is usually theater: you'll spike TTFA with auto-emails and still miss TTFC, because the buyer didn't get a human and you didn't get a conversation.

My practical target that actually moves revenue:

- Attempt within 5 minutes

- Contact within 60 minutes

- Then obsess over contactability so attempts turn into conversations

Decay benchmarks (why speed matters)

LRM's findings are the business case for SLAs and automation: after 1 hour, likelihood of contact drops about 10x, and likelihood of qualification drops 6x+. That's not a motivation poster; it's an ops requirement, and it's why the tail (P95) matters more than your median once you're "pretty good."

A simple benchmark table you can use internally:

| Metric | Strong | OK | Weak |
|---|---|---|---|
| TTFA P50 | <5 min | 5-15 min | >15 min |
| TTFA P95 | <30 min | 30-120 min | >120 min |
| TTFC % within 60 min | 40%+ | 20-40% | <20% |

LRM timing insights (the part most dashboards ignore)

LRM's timing breakdown is brutally actionable:

- Best days skew to Wednesday/Thursday: Thursday is 49.7% better for contact than the least effective day, and Wednesday is 24.9% better for qualification than the least effective day.

- Best time blocks matter too: 4-6 PM is best for contact (114% better than the least effective block), while 8-9 AM and 4-5 PM are best for qualification (164% better than 1-2 PM).

- After roughly 20 hours, piling on additional attempts can become counterproductive for contact. At that point, shift the lead into nurture instead of hammering calls.

If you want the full modern benchmark methodology, RevenueHero's detailed post is here: RevenueHero's lead response time experiment.

Segmentation rules (so your dashboard isn't nonsense)

If you don't segment, you'll end up "optimizing" the wrong thing and punishing the wrong team.

Use this rule: segment by anything that changes routing, buyer intent, or channel behavior.

Use this segmentation (almost always):

- Lead source: demo form vs content download vs partner vs inbound call

- Lead type: net-new inbound vs recycled/nurture vs expansion

- Region/time zone

- Channel: phone vs email/SMS vs chat

- Business-hours vs after-hours

Skip this segmentation (until you've stabilized basics):

- Micro-sources that create tiny sample sizes

- Rep-by-rep leaderboards before instrumentation is clean (you'll create politics, not improvement)

Business-hours distortion is the classic trap: a lead comes in at 4 PM and gets touched at 10 AM the next day. On a 9-to-5 schedule, that's 2 business hours vs 18 real hours. Friday 4 PM to Monday 10 AM is still 2 business hours, but 66 hours in real time.
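A minimal sketch of both clocks, assuming a 9 AM-5 PM Monday-Friday schedule in a single time zone with no holidays (all assumptions; real calendars usually come from your CRM's business-hours settings). It reproduces the example: 4 PM to 10 AM the next day is 120 business minutes but 1,080 real minutes, and Friday 4 PM to Monday 10 AM is still 120 business minutes but 3,960 real minutes.

```python
from datetime import datetime, time, timedelta

# Assumptions for illustration: 9:00-17:00, Monday-Friday, no holidays,
# naive datetimes that share one time zone.
OPEN_AT, CLOSE_AT = time(9), time(17)


def real_minutes(start: datetime, stop: datetime) -> float:
    """Wall-clock minutes: the buyer's experience."""
    return (stop - start).total_seconds() / 60


def business_minutes(start: datetime, stop: datetime) -> float:
    """Minutes between start and stop that fall inside business hours."""
    total = 0.0
    day = start.date()
    while day <= stop.date():
        if day.weekday() < 5:  # Monday=0 ... Friday=4
            window_start = max(start, datetime.combine(day, OPEN_AT))
            window_end = min(stop, datetime.combine(day, CLOSE_AT))
            if window_end > window_start:
                total += (window_end - window_start).total_seconds() / 60
        day += timedelta(days=1)
    return total


wed_4pm, thu_10am = datetime(2026, 3, 4, 16), datetime(2026, 3, 5, 10)
fri_4pm, mon_10am = datetime(2026, 3, 6, 16), datetime(2026, 3, 9, 10)

print(business_minutes(wed_4pm, thu_10am), real_minutes(wed_4pm, thu_10am))  # 120.0 1080.0
print(business_minutes(fri_4pm, mon_10am), real_minutes(fri_4pm, mon_10am))  # 120.0 3960.0
```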

So report both:

- Real minutes (buyer reality)

- Business minutes (operational fairness)

And be explicit about which one your SLA's based on.

Channel-specific lead response metrics (phone, SMS/email, chat)

Different channels need different stop events. If you use one definition across everything, you'll either over-credit email or under-credit phone.

Here's a practical channel spec, plus phone benchmarks from Invoca's analysis of 60M+ calls.

| Channel | "Attempt" | "Contact" | Key benchmark |
|---|---|---|---|
| Phone | First dial | Answered + talk time | 61% of callers speak to a person |
| SMS/Email | First send | Human reply received | Target bounce rate <2% |
| Chat | First agent message | Two-way exchange | First reply <60 seconds during coverage |

Invoca's benchmark summary explains why phone SLAs are different:

- 61% of callers speak with a person

- Answer rates run 54%-69% depending on industry

- 37% of phone leads convert during the call

That's why call-first workflows win for high-intent inbound. If you want to tune the phone side, start with your answer rate definition and benchmarks.

If you're phone-heavy, you'll also want:

- Time to first answered call (new numbers only)

- Abandon rate (caller hangs up before answer)

- Booked-on-call rate

Instrumentation playbook (Salesforce + generic CRM) - the fields, flows, and pitfalls

If you're in Salesforce, native reporting makes speed-to-lead harder than it should be because the Lead has CreatedDate, but "response" usually lives on Task/Event records (one-to-many). You need a "first outreach" timestamp stamped onto the Lead (or a related response object) to report cleanly.

I've watched teams spend weeks arguing about dashboards when the real issue was simple: the timestamps weren't trustworthy, and the CRM was quietly rewarding people for logging activity instead of reaching buyers.

Salesforce field spec (minimum viable)

Create these fields on Lead:

- `First_Outreach_Date__c` (DateTime) - first real attempt

- `First_Contact_Date__c` (DateTime) - first real contact

- `Response_Time_Business_Minutes__c` (Number) - optional but useful

- `Response_Time_Real_Minutes__c` (Formula/Number)

Baseline minutes formula (subtracting one DateTime from another returns days, so multiply by 1,440 minutes per day):

(First_Outreach_Date__c - CreatedDate) * 1440

Flow/automation logic (what to actually build)

Checklist:

- Trigger on Task creation/update (or Activity logging in your CRM).

- Filter to "real attempts" only:

  - Task Type in {Call, SMS, Email}

  - Status = Completed (or Sent)

  - Exclude system-generated tasks

- If `First_Outreach_Date__c` is blank, stamp it with the Task `ActivityDateTime` (or equivalent).

- For contact, stamp `First_Contact_Date__c` only when:

  - Call outcome = Connected AND talk time ≥ threshold, or

  - Inbound reply captured (human reply), or

  - Chat transcript indicates 2-way exchange
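Here's the stamping logic sketched in plain Python, as a stand-in for a record-triggered Flow rather than Flow syntax. The `Task` and `Lead` classes, their field names, and the 60-second threshold are hypothetical; `first_outreach` and `first_contact` play the roles of `First_Outreach_Date__c` and `First_Contact_Date__c`.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

TALK_THRESHOLD_SECONDS = 60  # hypothetical "meaningful conversation" threshold


@dataclass
class Task:
    task_type: str              # "Call", "SMS", "Email", "Chat", ...
    status: str                 # "Completed", "Sent", "Open", ...
    occurred_at: datetime
    system_generated: bool = False
    outcome: str = ""           # e.g. "Connected", "No Answer"
    talk_seconds: int = 0
    human_reply: bool = False
    two_way_chat: bool = False


@dataclass
class Lead:
    created: datetime
    first_outreach: Optional[datetime] = None  # stands in for First_Outreach_Date__c
    first_contact: Optional[datetime] = None   # stands in for First_Contact_Date__c


def is_real_attempt(t: Task) -> bool:
    return (t.task_type in {"Call", "SMS", "Email"}
            and t.status in {"Completed", "Sent"}
            and not t.system_generated)


def is_real_contact(t: Task) -> bool:
    connected_call = t.outcome == "Connected" and t.talk_seconds >= TALK_THRESHOLD_SECONDS
    return connected_call or t.human_reply or t.two_way_chat


def on_task_saved(lead: Lead, t: Task) -> None:
    """Stamp first attempt and first contact; a stamp is written only while the field is blank."""
    if is_real_attempt(t) and lead.first_outreach is None:
        lead.first_outreach = t.occurred_at
    if is_real_contact(t) and lead.first_contact is None:
        lead.first_contact = t.occurred_at


lead = Lead(created=datetime(2026, 3, 4, 14, 0))
on_task_saved(lead, Task("Email", "Sent", datetime(2026, 3, 4, 14, 1), system_generated=True))  # auto-email: ignored
on_task_saved(lead, Task("Call", "Completed", datetime(2026, 3, 4, 14, 4), outcome="No Answer"))
on_task_saved(lead, Task("Call", "Completed", datetime(2026, 3, 4, 14, 30),
                         outcome="Connected", talk_seconds=240))
print(lead.first_outreach, lead.first_contact)  # 2026-03-04 14:04:00 2026-03-04 14:30:00
```

Because a stamp is only written when the field is blank, a back-dated Task logged later won't correct it. If batch logging is common, compare timestamps and keep the earlier one instead.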

Where talk time comes from (the detail people miss)

If you've got a dialer/CTI, map its call duration/talk time field into the Salesforce Task (or a custom Call object) and use that for your "conversation ≥ X seconds" rule. If you don't have talk time in Salesforce, use a strict fallback: Connected disposition plus a "Reached decision-maker / Meaningful conversation" outcome - it's less precise, but it's still better than pretending an email send is contact.

Pitfalls (the ones that wreck accuracy)

- Tasks vs Lead CreatedDate mismatch: your "response" is on a different object; reporting gets messy fast.

- LastActivityDate is Date, not DateTime: it can't measure minutes. It's useless for speed-to-lead SLAs.

- Batch logging breaks truth: if reps log calls later, your dashboard becomes a lie.

If you want a Salesforce-specific reference, Kixie's breakdown is here: how to accurately track lead response time in Salesforce.

Generic CRM event model (HubSpot, etc.)

Same concept, different objects:

- Pick a canonical "lead created" timestamp

- Stamp first attempt/contact onto the lead/contact record

- Compute durations in minutes

- Report percentiles + SLA attainment

Don't let your CRM vendor convince you "first activity" is enough. It never is.

Execution levers that move the numbers (routing, after-hours, automation, and "first contact" reality)

Speed-to-lead improves when you treat it like an ops system: routing rules, timers, escalation, and a fallback path when the first rep doesn't act.

Use these SLA bands (they're operationally clean and map to real escalation):

- 0-5 minutes: ideal

- 5-15 minutes: acceptable

- 15-60 minutes: at risk

- 60+ minutes: broken

Escalation timers that work in practice:

- At 10 minutes: notify rep + manager channel

- At 20 minutes: re-route to next available rep

- At 60 minutes: mark as breached + trigger "save" sequence
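A sketch of the timers as a lookup a scheduler could evaluate every minute or so. It assumes the clock runs from lead creation until the first real attempt, and that the caller tracks which actions already fired so nobody gets pinged twice; the function and constant names are hypothetical.

```python
from typing import List

# (minutes since creation with no real attempt, action), taken from the escalation timers.
ESCALATIONS = [
    (10, "notify rep + manager channel"),
    (20, "re-route to next available rep"),
    (60, "mark as breached + trigger save sequence"),
]


def due_actions(minutes_waiting: float, attempted: bool) -> List[str]:
    """Every escalation step whose timer has elapsed; an attempted lead stops the clock."""
    if attempted:
        return []
    return [action for threshold, action in ESCALATIONS if minutes_waiting >= threshold]


print(due_actions(25, attempted=False))
# ['notify rep + manager channel', 're-route to next available rep']
print(due_actions(25, attempted=True))  # []
```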

A workflow template I see repeatedly (and it's effective because it's multi-channel):

- Form submit → immediate call

- If no answer → voicemail + SMS + email

- Wait 5 minutes → second SMS

- Notify team channel + update pipeline stage

- If after-hours → automated SMS to capture intent and book (train it on real transcripts before you trust it broadly)

Operator reality (what teams actually struggle with)

Teams always ask if they need 24/7 coverage. Most don't, and small teams shouldn't pretend they do. If your SDRs are already juggling 50-100 active leads, "respond instantly to everything" just creates burnout and sloppy follow-up.

Real scenario: one team we worked with had TTFA looking "fine" on paper, but P95 was ugly every Monday morning because weekend leads sat unassigned until a queue sweep. The fix wasn't yelling at reps; it was adding a simple after-hours router plus a Monday morning catch-up rule that re-assigned stale leads automatically.

Speed's the handshake. The next 3-15 touches are what win the deal. If you need a starting point for the follow-up system, use a documented lead qualification process so teams don't confuse "fast" with "done."

Contactability is the TTFC lever most teams ignore

Here's the equation: TTFC = TTFA + time-to-reach-a-human. If bounce/connect rates are bad, TTFC won't move even if TTFA's world-class.

This is where tools like Prospeo (the B2B data platform built for accuracy) earn their keep in a speed-to-lead system: you can enrich inbound leads before routing so reps aren't burning their first five minutes on bounced emails and dead numbers. Prospeo has 300M+ professional profiles, 143M+ verified emails with 98% accuracy, 125M+ verified mobile numbers, and a 7-day data refresh cycle (industry average: 6 weeks), which is exactly what you want when you're trying to turn "fast attempt" into "fast contact" consistently. If you're evaluating vendors, start with a short list of lead enrichment tools and a deliverability-first email verification list SOP.

The speed-to-lead dashboard spec (widgets + exact formulas)

A good dashboard answers three questions fast:

- How fast are we attempting?

- How fast are we actually contacting?

- Where's the tail, and why?

Build these widgets (and keep them stable for 90 days so trends mean something):

| Widget | Metric | Slice |
|---|---|---|
| TTFA P50/P95 | Minutes | By lead type |
| TTFC P50/P95 | Minutes | By channel |
| SLA attainment | % in bands | Business vs real hours |
| Funnel counts | Attempt/Contact/Qual | By source |
| Contactability | Bounce/connect | By source |
| After-hours | SLA breach % | By hour/day |

Exact formulas (conceptual):

TTFA = First_Attempt_At - Lead_Created_At

TTFC = First_Contact_At - Lead_Created_At

SLA_0_5 = count(TTFA <= 5) / count(all)

Contact_rate = count(Contact) / count(Attempt) (or per lead, depending on model)

Standard reporting set (don't negotiate this):

- P50 & P95 for TTFA and TTFC (TTFQ if you can)

- % within SLA bands

- Segmentation by lead type + business vs real hours

- Funnel counts: attempt vs contact vs qualify

30-day rollout plan (baseline → SLA → automation → review)

This sequence avoids the classic trap: launching an SLA before measurement is trustworthy.

Week 1: Instrumentation audit

- Define events (Created/Assigned/Attempt/Contact/Qualified)

- Add fields (`First_Outreach_Date__c`, etc.)

- Fix logging behaviors that create fake speed (batch logging)

Week 2: Baseline percentiles

- Compute TTFA/TTFC P50/P95 by segment

- Add SLA band attainment (0-5/5-15/15-60/60+)

- Identify top 3 tail drivers (after-hours, routing, data quality)

Week 3: SLA bands + escalation

- Set SLA targets per lead type/channel

- Implement escalation timers (10/20/60 minutes)

- Add after-hours coverage path (on-call, routing pool, or automation)

Week 4: QA + coaching using P95 outliers

- Review breached leads weekly (sample the worst 5%)

- Coach on follow-up sequences, not just first touch

- Keep repeating the mantra: don't optimize averages - optimize P95 + SLA attainment

FAQ

What's the difference between time to first attempt and time to first contact?

Time to first attempt is the minutes until your first outbound action (call/SMS/email), while time to first contact is the minutes until you reach a real human (connected conversation or true two-way exchange). In practice, teams should target TTFA under 5 minutes and track TTFC separately because it's the outcome-aligned metric.

What percentiles should we track for speed-to-lead reporting (P50 vs P95)?

Track P50 and P95 by default: P50 shows the typical experience, and P95 shows the operational tail where most SLA breaches live. As a rule, if P50 looks fine but P95 is over 2 hours, you don't have a "rep problem" - you've got a routing, coverage, or after-hours problem.

Should speed-to-lead be measured in business hours or real hours?

Measure both, but manage to real hours for buyer experience and conversion impact. Business-hours views are still useful for staffing and fairness, especially across time zones. Put them side by side; if real-hours P95 is 18+ hours, your coverage model is the bottleneck.

What are good SLA bands for inbound leads (0-5, 5-15, 15-60, 60+)?

Use 0-5, 5-15, 15-60, and 60+ minutes because they map cleanly to escalation and reporting. For high-intent inbound (demo requests and inbound calls), aim for 0-5 minutes during coverage and review every lead that lands in the 60+ bucket weekly until it shrinks.

How can we improve "first contact" when emails bounce or numbers are wrong?

Fix contactability first: keep email bounce under 2%, enrich missing mobiles, and refresh records weekly so attempts turn into conversations. Prospeo's a strong option here because it delivers 98% email accuracy, 125M+ verified mobile numbers, and a 7-day refresh cycle, which reliably lifts connect rates and pulls TTFC down.

Next step

The article's clear: if your email bounce rate is above 2%, shaving minutes off TTFA is pointless. Prospeo refreshes 300M+ profiles every 7 days - not every 6 weeks - so your reps dial numbers that connect and email addresses that land.

Fix contactability at $0.01 per verified email.

Summary

If you want speed to lead metrics that correlate with pipeline (not just activity), stop treating "first touch" as the finish line. Instrument Created → Attempt → Contact → Qualified, report P50/P95 plus SLA bands, and pair speed with contactability (bounce/connect/answer rates). When TTFA improves but TTFC doesn't, it's almost always data freshness, channel mismatch, or after-hours coverage, not rep effort.