AI Lead Scoring vs Traditional Lead Scoring: The Honest, Data-Backed Guide

Your best customer scored 12 out of 100. They skipped every whitepaper, ignored the nurture sequence, and booked a demo directly from a Google search. Meanwhile, a marketing intern downloading everything in sight scored 95. Your sales team stopped trusting the model within a month - and honestly, you can't blame them.

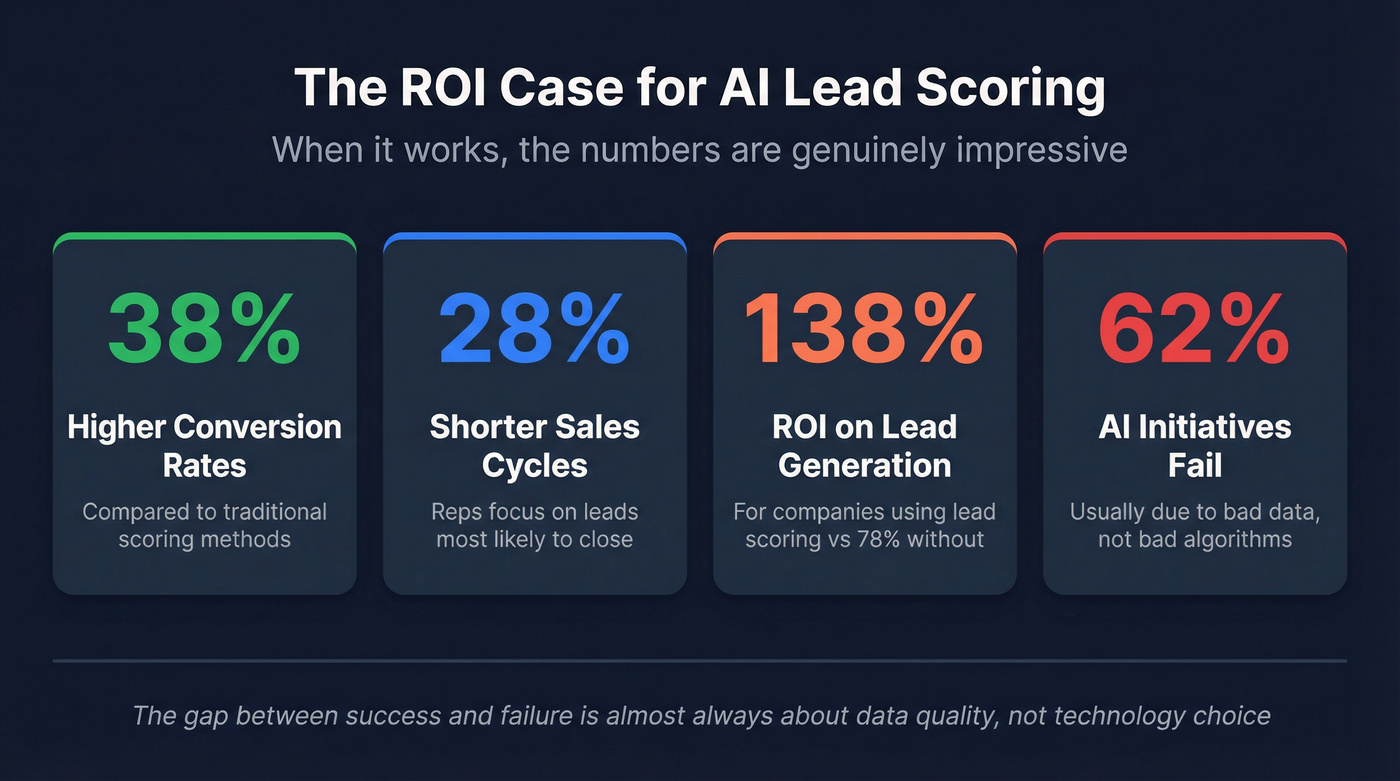

That scenario isn't hypothetical. It's one of the most upvoted threads on r/hubspot, and it captures the core problem with lead scoring as most companies practice it. Only 27% of leads sent to sales are actually qualified, and just 44% of organizations even use lead scoring at all. The ones that do achieve 138% ROI on lead generation versus 78% without it. But 62% of AI initiatives in sales fail outright. So the question isn't "should you score leads?" - it's "which approach won't blow up in your face?"

Most articles treat AI scoring as the obvious winner and traditional scoring as a relic. The reality is messier. I've seen teams waste six figures on predictive scoring tools that performed worse than a spreadsheet because their CRM data was garbage. I've also seen simple rule-based models outperform enterprise AI platforms for years before the company had enough data to justify the switch.

This guide breaks down both approaches with real numbers, real pricing, and a decision framework that doesn't assume you have a data science team on staff.

What You Need (Quick Version)

Before you read 5,000 words, here's the short answer:

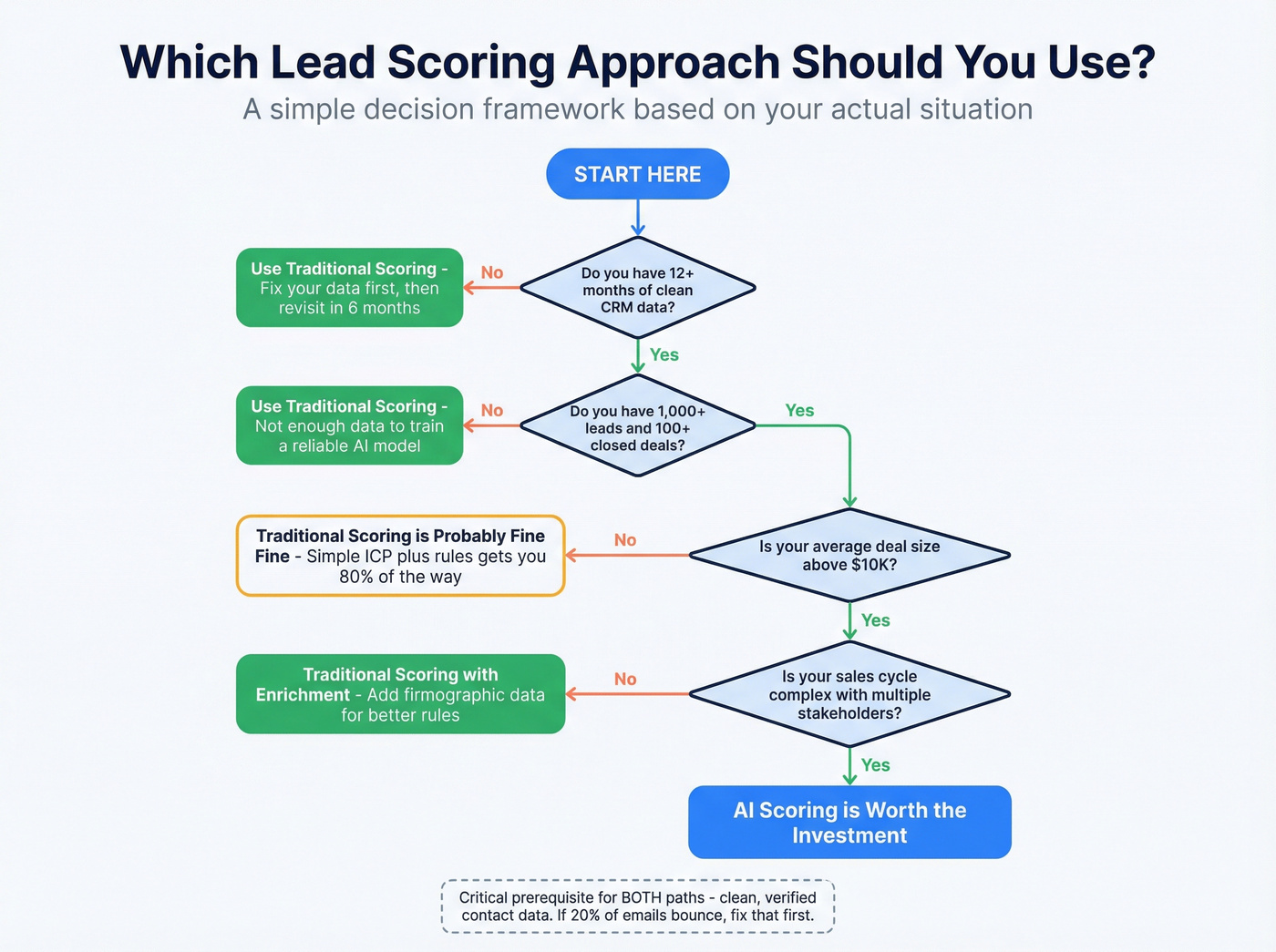

Use traditional scoring if you have fewer than 1,000 leads per year, fewer than 100 closed deals, or a short sales cycle. A well-built rule-based model will outperform a poorly trained AI model every single time.

Use AI scoring if you have 12-24 months of clean CRM data, 100+ conversions to train on, and a sales cycle complex enough to justify the investment. The ROI is real - 38% higher conversion rates, 28% shorter cycles - but only with sufficient data.

Before either approach: fix your data. Scoring models - AI or manual - are only as good as the contact data feeding them. If 20% of your emails bounce and your phone numbers are stale, no algorithm will save you.

Now, the full breakdown.

What Is Traditional Lead Scoring (And Where It Breaks)

Traditional lead scoring is a point system. You assign numerical values to lead attributes and behaviors, add them up, and route leads based on the total. Title is VP or above? +15 points. Company has 50+ employees? +10. Downloaded a whitepaper? +5. Visited the pricing page? +20.

It's simple, transparent, and fast to deploy. Most CRMs - HubSpot, Salesforce, Pipedrive - support it natively. You can have a working model in hours, not months.

The accuracy ceiling is the problem. Traditional scoring typically lands in the 60-70% range for correctly identifying qualified leads. That's not terrible, but it means roughly one in three leads your reps chase will be a dead end. And the model doesn't learn from its mistakes.

The deeper issue is subjectivity. Who decides that a whitepaper download is worth 5 points versus 15? Usually it's a marketing team guessing based on intuition, not data. Those guesses calcify into rules that nobody revisits for years. This is the fundamental limitation: humans set the rules, and humans are bad at weighting dozens of variables simultaneously.

Then there's the student problem. Rule-based models can't distinguish between a VP who visits your pricing page once and a grad student who downloads every piece of content you've ever published. The student scores higher. Every time.
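To make the point system and the student problem concrete, here's a minimal sketch that adds up the example values from earlier in this section: +15 for VP or above, +10 for 50+ employees, +5 per whitepaper, +20 per pricing-page visit. The `Lead` fields are hypothetical, and awarding behavioral points per event rather than once is an assumption for illustration. This isn't how any particular CRM implements scoring.

```python
from dataclasses import dataclass


@dataclass
class Lead:
    name: str
    title_level: str           # e.g. "VP", "C-level", "Manager", "Student"
    employees: int             # company headcount (0 if no company on record)
    whitepaper_downloads: int
    pricing_page_visits: int


def rule_score(lead: Lead) -> int:
    """Sum fixed point values; the rules never learn or expire."""
    score = 0
    if lead.title_level in {"VP", "C-level"}:  # "VP or above"
        score += 15
    if lead.employees >= 50:
        score += 10
    # Assumption: behavioral points accumulate per event.
    score += 5 * lead.whitepaper_downloads
    score += 20 * lead.pricing_page_visits
    return score


vp = Lead("VP, 200-person company", "VP", 200, 0, 1)
student = Lead("Grad student", "Student", 0, 10, 0)

print(rule_score(vp))       # 45
print(rule_score(student))  # 50: the student outscores the buyer
```

The sum doesn't distinguish "right kind of buyer" from "lots of activity", so ten downloads beat one high-intent visit.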

Signs you've outgrown traditional scoring:

- Your best customers routinely score low because they don't engage with marketing content

- You have enough historical data to train a model (1,000+ leads, 100+ conversions)

- Your sales team has already stopped trusting the scores

- You're seeing high false-positive rates - lots of high-scoring leads that never convert

Use traditional scoring if:

- You're processing fewer than 1,000 leads per year

- Your sales cycle is short and straightforward

- You don't have 12+ months of clean CRM data

- You need something running by next week

- You're in a regulated industry where you need to explain exactly why a lead was prioritized

Traditional scoring isn't dead. For early-stage companies and simple sales motions, it's the right call. But it has a ceiling, and most teams hit it faster than they expect.

What Is AI Lead Scoring (And How It Actually Works)

At its core, AI lead scoring is a binary classification problem: will this lead convert (1) or not (0)? Instead of a human assigning point values, a machine learning model analyzes thousands of historical leads, identifies patterns that correlate with conversion, and scores new leads based on how closely they resemble past winners.

That's an important distinction. Predictive scoring isn't magic. It's pattern recognition at scale.
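To illustrate the binary-classification framing, the sketch below fits a logistic regression (the simplest of the prediction models discussed next) with plain batch gradient descent. The three features and eight training rows are made-up toy data. A real setup would use an established ML library, far more history, and a held-out test set. This is just the shape of the idea.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.1, epochs=2000):
    """Batch gradient descent on log-loss; returns (weights, bias)."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # gradient of log-loss w.r.t. the linear term
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def predict_proba(w, b, x) -> float:
    """Estimated probability that a lead with features x converts."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Toy history. Features: [fits_icp, requested_demo, visited_pricing]; label: converted (1) or not (0).
X = [[1, 1, 1], [1, 0, 1], [1, 1, 0],
     [0, 0, 1], [0, 1, 0], [0, 0, 0], [1, 0, 0], [0, 0, 1]]
y = [1, 1, 1, 0, 0, 0, 0, 0]

w, b = train_logistic(X, y)
print(round(predict_proba(w, b, [1, 1, 1]), 3))  # a new lead that resembles past winners
print(round(predict_proba(w, b, [0, 0, 0]), 3))  # a new lead that resembles past losses
```

The model never "knows" who will buy. It only scores how closely a new lead's features match the rows that converted before.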

Three Approaches to AI Scoring

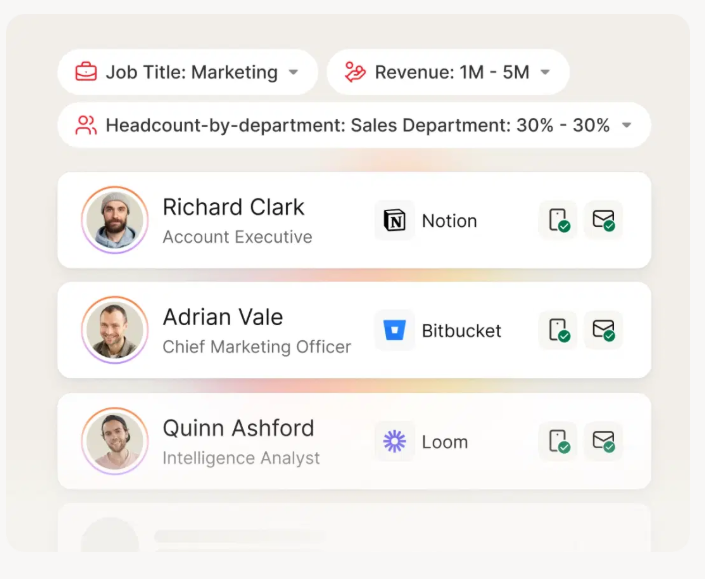

Aggregation models collect and weight signals from multiple data sources - firmographic, behavioral, technographic - and produce a composite score. Think of these as "traditional scoring on steroids." (If you're building around firmographics, clean up your firmographic data first.)

Prediction models use supervised learning algorithms trained on your historical conversion data. These are the workhorses: logistic regression, random forests, gradient boosting. They learn which combinations of features predict conversion and apply those patterns to new leads.

Generation models (the newest category) use large language models to synthesize unstructured data - call transcripts, email threads, social signals - into scoring inputs. Still early, but the trajectory is clear.

Beyond Lead-Level Scoring: MQAs and Decay

One evolution worth knowing: account-level scoring, sometimes called Marketing Qualified Accounts (MQAs). Instead of scoring individual leads, you score entire buying committees. When three people from the same company all visit your pricing page in the same week, that's a stronger signal than any individual lead score. AI models handle this multi-contact, account-level analysis far better than rule-based systems.
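Here's a minimal sketch of the "three people from the same company, same week" signal. It assumes you can export pricing-page visits as `(account_domain, contact, visit_date)` rows. Treating "same week" as a rolling 7-day window and using a three-contact threshold are illustrative choices. An AI model would weigh many more account-level signals than this single rule.

```python
from collections import defaultdict
from datetime import date, timedelta


def engaged_accounts(visits, min_contacts: int = 3, window_days: int = 7) -> set:
    """visits: (account_domain, contact, visit_date) rows for pricing-page hits.

    Flags an account when at least `min_contacts` distinct people visit
    within any rolling `window_days` window.
    """
    by_account = defaultdict(list)
    for account, contact, day in visits:
        by_account[account].append((day, contact))

    flagged = set()
    for account, events in by_account.items():
        events.sort()  # chronological order
        for i, (start, _) in enumerate(events):
            window_end = start + timedelta(days=window_days)
            contacts = {c for d, c in events[i:] if d < window_end}
            if len(contacts) >= min_contacts:
                flagged.add(account)
                break
    return flagged


visits = [
    ("acme.com", "ana", date(2025, 3, 3)),
    ("acme.com", "ben", date(2025, 3, 5)),
    ("acme.com", "cho", date(2025, 3, 7)),
    ("globex.com", "dee", date(2025, 3, 3)),
    ("globex.com", "dee", date(2025, 3, 4)),
    ("globex.com", "eli", date(2025, 3, 20)),
]
print(engaged_accounts(visits))  # {'acme.com'}
```

Globex has three visits but only one person per window, so it doesn't qualify. That difference is invisible when each lead is scored alone.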

AI scoring also enables lead decay modeling - automatically reducing scores over time when engagement drops off. A lead who visited your pricing page six months ago isn't the same as one who visited yesterday. Traditional scoring treats them identically unless someone manually adjusts the rules. AI models degrade scores automatically based on recency patterns.
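To show what decay does to a score, here's a sketch that discounts each activity with an exponential half-life. The 30-day half-life is an arbitrary assumption. The point of a predictive model is to learn from your own conversion history how quickly signals actually go stale, whereas this version hard-codes it.

```python
def decayed_score(events, half_life_days: float = 30.0) -> float:
    """events: iterable of (points, days_ago). Each activity loses half its value every half-life."""
    return sum(points * 0.5 ** (days_ago / half_life_days) for points, days_ago in events)


print(decayed_score([(20, 1)]))    # pricing-page visit yesterday: about 19.5
print(decayed_score([(20, 180)]))  # the same visit six months ago: 0.3125
```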

Algorithm Performance Comparison

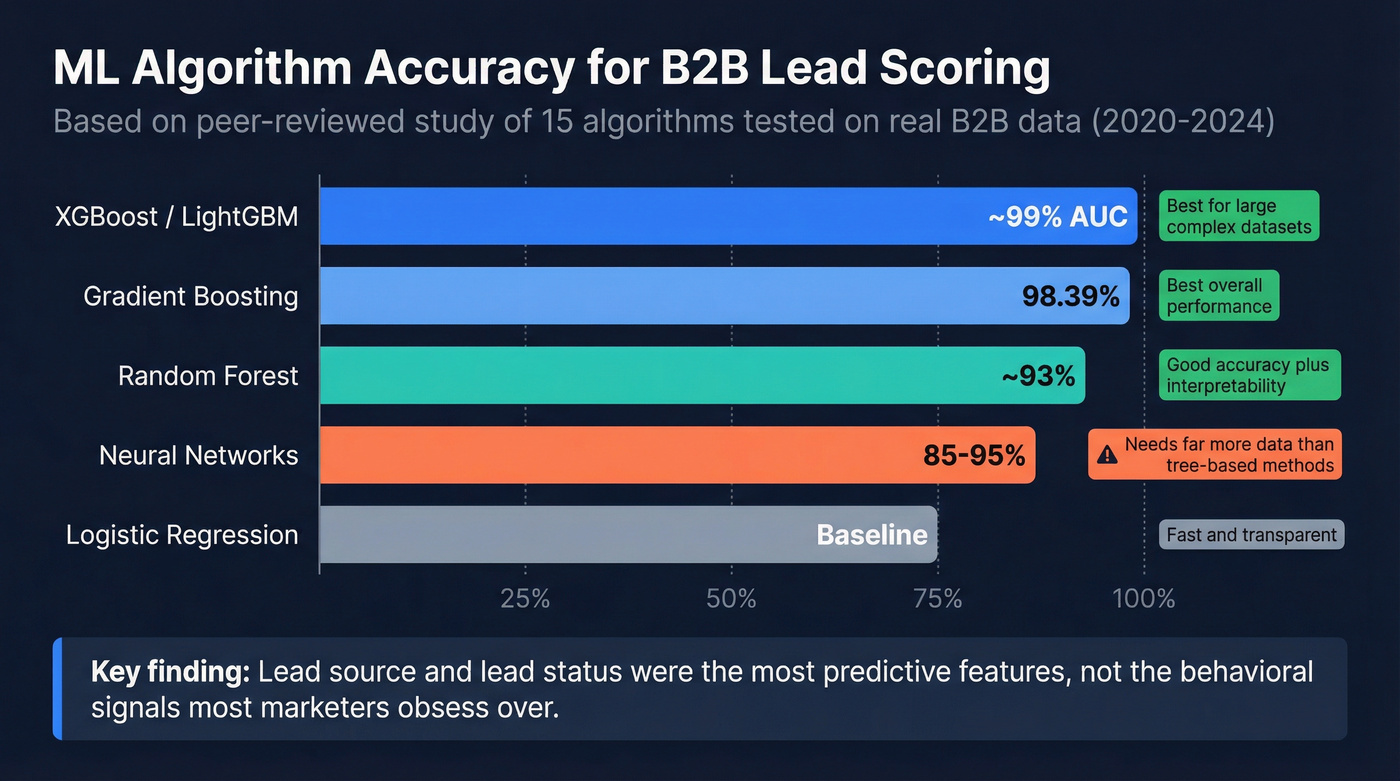

A peer-reviewed study published in early 2025 in Frontiers in Artificial Intelligence tested 15 classification algorithms on real B2B lead data spanning January 2020 to April 2024. Here's how the top performers stacked up:

| Algorithm | Reported performance | Best For |
|---|---|---|
| Gradient Boosting | 98.39% accuracy | Overall performance |
| XGBoost/LightGBM | ~99% AUC | Large, complex datasets |
| Random Forest | High | Accuracy + interpretability |
| Logistic Regression | Baseline | Speed, transparency |
| Neural Networks | 85-95% (needs far more data than tree-based methods) | Unstructured data |

The study found that "source" and "lead status" were the most predictive features - not the behavioral signals most marketers obsess over. That's a finding worth sitting with.
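One caution when reading tables like this: accuracy and AUC are different metrics. With imbalanced data (recall that only about 27% of leads sent to sales are qualified), accuracy alone can flatter a model. This small sketch, using made-up labels, computes both by hand. The AUC function uses the standard pairwise definition: the chance that a random converter outscores a random non-converter.

```python
def accuracy(y_true, scores, threshold: float = 0.5) -> float:
    """Share of leads whose thresholded score matches the actual outcome."""
    correct = sum((s >= threshold) == bool(y) for y, s in zip(y_true, scores))
    return correct / len(y_true)


def roc_auc(y_true, scores) -> float:
    """Chance a random converter outscores a random non-converter (ties count half)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# 1 converter in 10 leads; a lazy "model" gives everyone the same low score.
outcomes = [1] + [0] * 9
lazy_scores = [0.1] * 10

print(accuracy(outcomes, lazy_scores))  # 0.9
print(roc_auc(outcomes, lazy_scores))   # 0.5: no better than random at ranking
```

A model that flags nobody still looks "90% accurate" here, which is why ranking metrics like AUC matter for prioritization.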

Minimum Data Requirements

Here's where most teams get tripped up. AI scoring needs fuel:

- 1,000+ historical leads minimum (more is better)

- 100+ closed deals (both won and lost) to train on

- 12-24 months of CRM data with clear outcomes

- Clean, labeled data - not a CRM full of "Other" in the industry field

Below those thresholds, you're training a model on noise. A simple rule-based system will outperform it.
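Those thresholds are easy to turn into a pre-flight check. Here's a sketch, with a hypothetical `CrmSnapshot` holding counts you'd pull from your CRM. It can only test volume, not whether the data is actually clean and labeled. That part still needs a human look.

```python
from dataclasses import dataclass


@dataclass
class CrmSnapshot:
    historical_leads: int
    closed_won: int
    closed_lost: int
    months_of_history: int


def ai_scoring_gaps(s: CrmSnapshot) -> list[str]:
    """Return unmet minimums; an empty list means the volume thresholds are met."""
    gaps = []
    if s.historical_leads < 1_000:
        gaps.append(f"need 1,000+ historical leads (have {s.historical_leads:,})")
    if s.closed_won + s.closed_lost < 100:
        gaps.append(f"need 100+ closed deals (have {s.closed_won + s.closed_lost})")
    if s.closed_won == 0 or s.closed_lost == 0:
        gaps.append("need both won and lost outcomes to learn from")
    if s.months_of_history < 12:
        gaps.append(f"need 12+ months of history (have {s.months_of_history})")
    return gaps


print(ai_scoring_gaps(CrmSnapshot(600, 20, 30, 8)))
print(ai_scoring_gaps(CrmSnapshot(4_200, 80, 150, 18)))  # []
```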

Every scoring model - AI or traditional - fails when 20% of your emails bounce and your phone numbers are stale. Prospeo's 5-step verification delivers 98% email accuracy and 125M+ verified mobiles, refreshed every 7 days. Clean data in, reliable scores out.

Stop feeding garbage data into your scoring model. Start with verified contacts.

How AI and Traditional Lead Scoring Compare Head-to-Head

Here's the honest side-by-side. I've called a winner in each category, even when it's uncomfortable.

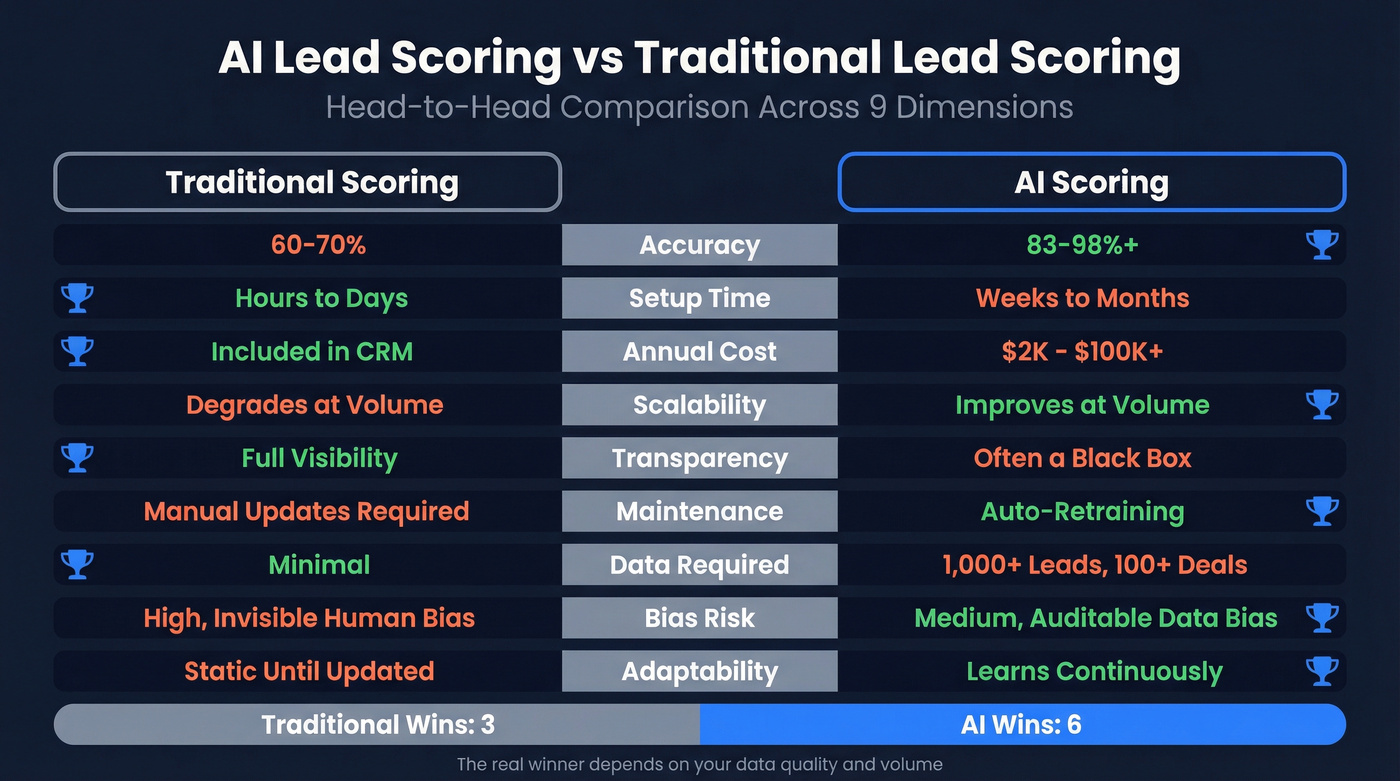

| Dimension | Traditional | AI | Winner |
|---|---|---|---|
| Accuracy | 60-70% | 83-98%+ | AI |
| Setup time | Hours to days | Weeks to months | Traditional |
| Annual cost | Included in CRM license | $2K-$100K+ | Traditional |
| Scalability | Degrades at volume | Improves at volume | AI |
| Transparency | Full visibility | Often a black box | Traditional |
| Maintenance | Manual updates | Auto-retraining | AI |
| Data required | Minimal | 1,000+ leads, 100+ deals | Traditional |
| Bias risk | High (invisible human bias) | Medium (auditable data bias) | AI (auditable) |
| Adaptability | Static until updated | Learns continuously | AI |

The pattern is clear: AI wins on accuracy and scale. Traditional wins on transparency, cost, and speed-to-value.

But here's the nuance most comparison articles miss: the gap between these two approaches is entirely dependent on your data quality and volume. A well-built rule-based model running on clean data will beat a poorly trained AI model running on a messy CRM every single day. I've watched this happen more times than I can count.

The real question isn't "which is better?" It's "do you have the data infrastructure to make AI scoring work?" (If your ops foundation is shaky, start with data quality.)

If the answer is no, traditional scoring isn't a consolation prize - it's the correct choice.

Hot take: If your average deal size sits below $10K, you probably don't need ML-level scoring at all. A tight ICP definition, clean data, and a simple rule-based model will get you 80% of the way there at 5% of the cost. Save the AI budget for when your deal complexity actually justifies it.

AI scoring also introduces a transparency problem that matters more than most vendors admit. When a rep asks "why did this lead score 87?" and the answer is "the model determined it based on 200+ weighted features," that's not an answer that builds trust. HubSpot's predictive model, for instance, doesn't show the specific weight of individual factors. It's a black box. Sales teams tolerate black boxes right up until the model sends them a bad lead - then trust evaporates overnight.

Explainable AI is becoming a selection criterion for exactly this reason. Tools that surface the top 3-5 factors driving each score - even if the underlying model uses hundreds of features - get dramatically better adoption from sales teams. If you're evaluating scoring tools, "can my reps understand why a lead scored high?" should be a top-three question.
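For linear models such as logistic regression, surfacing the top factors is straightforward: each feature's contribution to the raw score is its weight times its value. The sketch below ranks those contributions. The feature names and weights are hypothetical. Tree ensembles like gradient boosting need a per-prediction attribution method to get an equivalent breakdown, and SHAP values are a common choice.

```python
def top_factors(weights: dict, features: dict, k: int = 3):
    """Rank features by |weight x value|, i.e. their pull on a linear model's raw score."""
    contributions = {name: weights.get(name, 0.0) * value for name, value in features.items()}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:k]


weights = {"demo_requested": 2.1, "pricing_visits": 0.8, "icp_industry": 1.5,
           "employees_log10": 0.4, "student_email": -2.5}
lead = {"demo_requested": 1, "pricing_visits": 2, "icp_industry": 1,
        "employees_log10": 2.3, "student_email": 0}

for name, contribution in top_factors(weights, lead):
    print(f"{name}: {contribution:+.2f}")
# demo_requested: +2.10
# pricing_visits: +1.60
# icp_industry: +1.50
```

Three named reasons next to the score give a rep something concrete to agree or disagree with.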

The ROI Case for AI Lead Scoring

When AI scoring works, the numbers are genuinely impressive.

Forrester's "AI in B2B Sales" report found that companies using AI scoring see 38% higher conversion rates from lead to opportunity, 28% shorter sales cycles, 17% higher average deal values, and 35% lower cost-per-acquisition. ML scoring delivers 75% higher conversion rates compared to traditional methods, and McKinsey research shows a 50% surge in leads and appointments for sales teams using AI tools.

Machine learning scoring delivers 300-400% ROI within the first year for successful implementations. The best deployments - typically companies with 10,000+ leads, dedicated data teams, and 18+ months of clean CRM history - see 300-700% ROI.

Real talk: those are the success stories. The failures don't publish case studies.

Case Studies Worth Knowing

Progressive Insurance deployed ML-based lead scoring and generated $2 billion in new premiums in the first year, with 90%+ model accuracy. That's an insurance company - not exactly a Silicon Valley startup with a data science army.

Carson Group, a financial advisory firm, achieved 96% accuracy predicting lead conversions using an AWS-based ML implementation. Financial services, heavy regulation, and they still made it work.

A medium-sized IT service provider reduced SDR workload by 35% while increasing qualified opportunities by 27%. Their top 20% of scored leads generated 73% of all opportunities.

The Productivity Angle

Here's something that doesn't get enough attention: sellers spend only about 25% of their time actually selling to customers. The rest is research, admin, data entry, and chasing leads that go nowhere.

AI scoring doesn't just improve conversion rates - it gives reps time back. Early AI successes show 30%+ improvement in win rates, largely because reps spend more time on leads that actually close. If sellers spend about 75% of their time on non-selling work and AI reclaims even half of it, a 10-person team gains roughly 3.75 full-time equivalents of selling time (10 reps x 75% x 50%) without hiring anyone.

Why AI Scoring Fails (And Why 62% of Projects Do)

Gartner's stat is sobering: 62% of AI initiatives in sales fail due to excessive expectations and inadequate preparation. Almost half of companies abandon their AI initiatives entirely. Understanding why is more valuable than understanding the algorithms.

As Dr. Michael Feindt, founder of Blue Yonder, puts it: "AI cannot tell you which leads will buy - it can only tell you which leads resemble the buyers you have already won." That framing should temper every expectation you bring to an AI scoring project.

Failure Mode 1: Information Leakage

This is the most insidious problem, and GenComm's analysis explains it well. Information leakage happens when you train your model on data that was observed after the scoring decision point.

Here's how it works: your CRM stores the latest state of every field. A lead that eventually converted has 47 email opens, 12 page views, and a "Customer" lifecycle stage. You train your model on this data. The model learns that leads with 47 email opens convert - but those 47 opens happened because the lead was already in a nurture sequence after being qualified. You've trained the model on the outcome, not the inputs.

CRM systems like HubSpot and Salesforce only retain the most recent values for engagement metrics - not historical snapshots. This latest-state design makes sense for 95% of business applications but is fundamentally broken for predictive modeling.

The result: models that look impressively accurate during training but fail catastrophically in production.
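The usual fix is to rebuild features as of the moment the score would have been used, rather than reading the CRM's latest state. This sketch assumes you have a timestamped activity log per lead, for example one exported from your marketing automation platform into a warehouse. The event names and the cutoff date are hypothetical.

```python
from datetime import datetime


def features_as_of(events, cutoff: datetime) -> dict:
    """Count each event type that happened strictly before the scoring cutoff."""
    counts = {}
    for kind, ts in events:
        if ts < cutoff:
            counts[kind] = counts.get(kind, 0) + 1
    return counts


activity = [
    ("email_open", datetime(2024, 1, 5)),
    ("page_view", datetime(2024, 1, 6)),
    # Everything below happened after the lead was routed to sales (nurture, follow-ups).
    ("email_open", datetime(2024, 2, 1)),
    ("email_open", datetime(2024, 2, 3)),
]
scored_at = datetime(2024, 1, 10)  # hypothetical moment the score would have been used

print(features_as_of(activity, scored_at))     # {'email_open': 1, 'page_view': 1}
print(features_as_of(activity, datetime.max))  # latest-state view: {'email_open': 3, 'page_view': 1}
```

Training on the first view teaches the model what a lead looked like before anyone knew the outcome. Training on the second teaches it what happened afterward.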

Failure Mode 2: The Cold Start Problem

The first time a person shows up at your business, you don't know anything about them. First-party activity data is noisy - many random factors affect engagement signals. Models relying solely on first-party data are weaker, less widely applicable, and less stable.

The fix is integrating third-party data (firmographic, technographic, intent signals) alongside behavioral data. But most teams don't do this, and their models suffer.

Failure Mode 3: Automating Mediocre Processes

If your sales process is broken - unclear ICP, no handoff criteria, reps cherry-picking leads anyway - AI scoring won't fix it. It'll just make the dysfunction faster.

90% of predictive lead scoring projects stall at data preparation and feature engineering. Not at algorithm selection. Not at tool procurement. At the boring, unglamorous work of cleaning data and defining what "qualified" actually means.

Failure Mode 4: Black Box Syndrome

On Reddit, users describe enterprise tools like 6sense as "$10K/month black boxes that provide a score but no explanation of WHY." HubSpot's predictive model has the same limitation - it gives you a "Likelihood to close" percentage but won't tell you which factors drove it.

When sales teams can't understand the score, they don't trust it. When they don't trust it, they ignore it. When they ignore it, your $10K/month tool becomes an expensive dashboard nobody opens.

Failure Mode 5: Bad Data

Here's what nobody tells you about AI scoring costs: the license fee is the cheap part. Your scoring model is only as good as the data feeding it. If 20% of your email addresses bounce and your phone numbers are outdated, even Gradient Boosting won't save you. Clean, verified contact data - with regular refresh cycles - is the non-negotiable foundation. Tools like Prospeo verify emails at 98% accuracy on a 7-day refresh cycle, returning 50+ data points per contact. Whatever enrichment tool you use, clean data isn't optional. Without it, you're training your model on ghosts.

When Traditional Scoring Is the Right Call

Not every company needs AI scoring. Not every company is ready for AI scoring.

Skip AI entirely if you process fewer than 1,000 leads per year. There's not enough data to train a reliable model. Period.

Same goes if you have fewer than 100 closed deals (won and lost combined), a short and straightforward sales cycle, or you're in a regulated industry where "the model said so" doesn't fly in compliance reviews. Early-stage companies still figuring out their ICP shouldn't bother either - you can't train a model on patterns you haven't established yet.

The Four Evolution Phases

Most companies should think about scoring as a progression, not a binary choice:

- Intuitive - reps use gut feel. Where most startups begin.

- Rule-based - manual point systems in your CRM. Where you should be by 50 customers.

- Behavior-based - rules that incorporate engagement signals and intent data. The bridge. Aim for this around 500+ leads with clear engagement patterns.

- Predictive - ML models trained on historical data. When you cross 1,000 leads and 100 conversions with clean data.

The Hybrid Approach

The smartest teams I've worked with don't choose one or the other. They run rule-based thresholds as the foundation - hard disqualifiers like wrong industry, wrong company size, wrong geography - and layer AI scoring on top for prioritization within the qualified pool.

In our experience, the hybrid approach outperforms pure AI scoring for the first 6-12 months. The rules handle the obvious disqualifications instantly, while the AI model learns the subtler patterns that separate "good fit" from "ready to buy." You don't have to abandon what works to adopt what's new.
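Here's a sketch of that layering. `p_convert` stands in for whatever probability your AI model outputs (here it's just a stored number), and the disqualifier values are hypothetical.

```python
def hybrid_rank(leads, model_score, industries, min_employees, regions):
    """Hard rule-based disqualifiers first, then order the survivors by model score."""
    qualified = [
        lead for lead in leads
        if lead["industry"] in industries
        and lead["employees"] >= min_employees
        and lead["region"] in regions
    ]
    return sorted(qualified, key=model_score, reverse=True)


leads = [
    {"id": "a", "industry": "saas", "employees": 120, "region": "NA", "p_convert": 0.42},
    {"id": "b", "industry": "retail", "employees": 900, "region": "NA", "p_convert": 0.91},
    {"id": "c", "industry": "saas", "employees": 60, "region": "EU", "p_convert": 0.77},
    {"id": "d", "industry": "saas", "employees": 10, "region": "NA", "p_convert": 0.95},
]

ranked = hybrid_rank(leads, lambda lead: lead["p_convert"], {"saas"}, 50, {"NA", "EU"})
print([lead["id"] for lead in ranked])  # ['c', 'a']: b and d never reach the model's ranking
```

The rules stay fully explainable ("wrong industry", "too small"), and the model only decides order among leads that already fit.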

Pilot AI alongside your existing rules for 3-6 months before switching. Compare the AI's top-scored leads against your rule-based top-scored leads. Track which group converts better. Let the data decide, not the vendor's sales pitch.

What AI Lead Scoring Tools Actually Cost

Here's the part every vendor tries to hide.

| Tool | Plan | Cost | Scoring Type |
|---|---|---|---|
| HubSpot Professional | Sales Hub | $90/user/mo | Manual rules |
| HubSpot Enterprise | Sales Hub | $150/user/mo + $3,500 setup | AI predictive |
| Salesforce Enterprise | Base | $165/user/mo | CRM + rules |
| Salesforce Einstein | Add-on | $50/user/mo (on top of Enterprise) | AI predictive |
| Salesforce Unlimited | Full AI suite | $825/user/mo | AI + agents |
| 6sense | Enterprise | ~$10K-25K/mo | AI + intent |
| Warmly | AI Data Agent | ~$10K/yr | AI + warm leads |
| Warmly | AI Outbound Agent | ~$22K/yr | AI + outbound |
| Apollo | Basic-Organization | $59-149/user/mo | Rules + signals |
| Clay | Pro | $229/mo (3K credits) | Enrichment + scoring |
| Salesflare | Growth | $31.60/user/mo | Basic AI |
| ActiveCampaign | Starter | $19/mo | Rules-based |
| GenComm | Mid-tier | ~$550-2,750/mo | Custom AI models |

The 10-Person Team Scenario

Let's do the math for a 10-person sales team:

HubSpot Enterprise: $150 x 10 = $1,500/mo + $3,500 onboarding = $21,500 first year. You get predictive scoring, but it's a black box optimized for the HubSpot ecosystem.

Salesforce Enterprise + Einstein: $165 base + $50 Einstein add-on = $215/user/mo; x 10 = $2,150/mo = $25,800/year. Add Revenue Intelligence and you're at $385/user/mo, or $46,200/year. Industry reviewers note the cost is "quite high for small businesses," which is an understatement.

6sense: At $10K-25K/month, you're looking at $120K-300K/year. That's enterprise pricing for enterprise companies. If you have to ask, you probably can't afford it - and the frustrating part is they won't tell you the price until you've sat through three demos.

Apollo Professional: $99 x 10 = $990/mo = $11,880/year. Not true AI scoring, but the signal-based prioritization is good enough for many mid-market teams.
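All of the arithmetic above reduces to per-user price x seats x 12 months, plus any one-time fees. Here's a small helper that reproduces those figures from the list prices quoted in this section. It deliberately ignores the hidden costs listed next. 6sense is handled separately because its quoted price is a flat monthly range rather than a per-seat fee.

```python
def first_year_cost(per_user_monthly: float, users: int, one_time: float = 0.0) -> float:
    """List price x seats x 12 months, plus one-time fees (onboarding, setup)."""
    return per_user_monthly * users * 12 + one_time


TEAM = 10
hubspot = first_year_cost(150, TEAM, one_time=3_500)  # 21,500
salesforce = first_year_cost(165 + 50, TEAM)          # 25,800 (Enterprise + Einstein)
salesforce_ri = first_year_cost(385, TEAM)            # 46,200 (with Revenue Intelligence)
apollo = first_year_cost(99, TEAM)                    # 11,880 (Professional)
sixsense = (10_000 * 12, 25_000 * 12)                 # 120,000 to 300,000: flat monthly range, not per seat

for name, cost in [("HubSpot", hubspot), ("Salesforce", salesforce),
                   ("Salesforce + RI", salesforce_ri), ("Apollo", apollo)]:
    print(f"{name}: ${cost:,.0f}")
print(f"6sense: ${sixsense[0]:,} to ${sixsense[1]:,}")
```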

Hidden Costs Nobody Mentions

The license fee is just the start. Budget for:

- Data cleanup: 2-4 weeks of a RevOps person's time before you can train anything

- Onboarding: HubSpot charges $3,500; Salesforce implementations routinely run $10K-50K+

- Integration: connecting your scoring tool to your sequencer, CRM, and enrichment stack

- Ongoing maintenance: someone needs to monitor model drift, retrain quarterly, and explain to sales why the scores changed

Credit-based pricing models are particularly frustrating. One Reddit user burned 1,912 Clay credits - 64% of their monthly budget - on a single workbook. Enriching 85 firms cost ~$57 before they even identified contacts. Credit systems that count exploratory queries the same as production exports are a tax on RevOps teams trying to iterate.

How to Build Your Lead Scoring System

Whether you go traditional, AI, or hybrid, the implementation steps are the same.

Step 1: Audit Your Data

Before you touch a scoring model, answer these questions:

- How many leads did you process in the last 12 months?

- How many closed-won and closed-lost deals do you have with complete data?

- What percentage of your email addresses are verified and current?

- Are your industry, company size, and job title fields consistently filled?

If you can't answer these confidently, start here. Enrich your CRM contacts with verified emails and current firmographic data before building anything.
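If you'd rather measure than guess, a quick script over a CRM export answers the last two questions. This sketch assumes contacts are exported as dictionaries with hypothetical field names (`industry`, `company_size`, `job_title`, `email_verified`). It counts blank or catch-all "Other" values as missing.

```python
def audit(contacts: list[dict], fields=("industry", "company_size", "job_title")) -> dict:
    """Share of contacts with each field usefully filled, plus share with a verified email."""
    if not contacts:
        return {}
    total = len(contacts)
    report = {}
    for field in fields:
        # Blank, missing, and catch-all "Other" values count as unfilled.
        filled = sum(1 for c in contacts if c.get(field) not in (None, "", "Other"))
        report[field] = filled / total
    report["email_verified"] = sum(1 for c in contacts if c.get("email_verified")) / total
    return report


contacts = [
    {"industry": "SaaS", "company_size": "51-200", "job_title": "VP Sales", "email_verified": True},
    {"industry": "Other", "company_size": "", "job_title": "Student", "email_verified": False},
    {"industry": "Fintech", "company_size": "201-500", "job_title": None, "email_verified": True},
    {"industry": None, "company_size": "11-50", "job_title": "RevOps Manager", "email_verified": True},
]
print(audit(contacts))
# {'industry': 0.5, 'company_size': 0.75, 'job_title': 0.75, 'email_verified': 0.75}
```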

Step 2: Separate Fit Scoring from Behavior Scoring

This is the single most important structural decision.

Fit scoring answers "is this the right kind of company and person?" Behavior scoring answers "are they showing buying intent?" Mixing them into one score is how you end up with students outscoring VPs.

Fit criteria: industry, company size, job title, geography, tech stack, funding stage. These are binary or tiered - the lead either fits your ICP or doesn't.

Behavior criteria: pricing page visits, demo requests, free trial signups, multiple contacts from the same account engaging. These are weighted signals that indicate timing and intent.
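Here's a minimal sketch of keeping the two scores separate. Fit produces a tier, behavior produces points, and leads are queued by fit first and behavior second. The ICP criteria, point values, and A/B/C tiers are illustrative assumptions, not a recommended model.

```python
ICP_INDUSTRIES = {"saas", "fintech"}          # hypothetical ICP
SENIOR_TITLES = {"Director", "VP", "C-level"}


def fit_grade(lead: dict) -> str:
    """Tier by how many ICP criteria match: 3 -> A, 2 -> B, otherwise C."""
    matches = sum([
        lead["industry"] in ICP_INDUSTRIES,
        lead["employees"] >= 50,
        lead["seniority"] in SENIOR_TITLES,
    ])
    return {3: "A", 2: "B"}.get(matches, "C")


def behavior_score(lead: dict) -> int:
    """Weighted intent signals (hypothetical weights)."""
    return (30 * lead["demo_requests"]
            + 20 * lead["trial_signups"]
            + 10 * lead["pricing_visits"]
            + 15 * lead["other_contacts_engaged"])


def queue(leads: list[dict]) -> list[dict]:
    # Fit tier first ("A" sorts before "B" and "C"), then highest behavior score.
    return sorted(leads, key=lambda lead: (fit_grade(lead), -behavior_score(lead)))


vp = {"name": "VP", "industry": "saas", "employees": 200, "seniority": "VP",
      "demo_requests": 0, "trial_signups": 0, "pricing_visits": 1, "other_contacts_engaged": 0}
student = {"name": "Student", "industry": "education", "employees": 0, "seniority": "Student",
           "demo_requests": 0, "trial_signups": 1, "pricing_visits": 3, "other_contacts_engaged": 0}

for lead in queue([student, vp]):
    print(lead["name"], fit_grade(lead), behavior_score(lead))
# VP A 10
# Student C 50
```

The student still has the higher behavior score, but it can only reorder leads within the C tier. It never pushes them above a good-fit buyer.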

Step 3: Analyze Your Last 50 Closed-Won and 50 Closed-Lost Deals

Pull the data. Look for patterns. What did your best customers have in common before they became customers? What did the losses share?

You'll likely find that 3-5 attributes explain 80% of the variance. Maybe it's company size + industry + whether they visited the pricing page. Maybe it's job title + tech stack + inbound vs outbound source.

These patterns become your scoring rules. Not guesses - data.
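Here's one way to run that comparison in plain Python. For each yes/no attribute, compute how often it shows up in won versus lost deals, then rank attributes by the gap. The attribute names and the four-deal toy samples are hypothetical. With only 50 deals per side, treat small gaps as noise rather than rules.

```python
def attribute_gaps(won: list[dict], lost: list[dict], attributes: list[str]):
    """Share of won vs lost deals with each yes/no attribute, sorted by the gap."""
    rows = []
    for attr in attributes:
        won_rate = sum(1 for d in won if d.get(attr)) / len(won)
        lost_rate = sum(1 for d in lost if d.get(attr)) / len(lost)
        rows.append((attr, won_rate, lost_rate, won_rate - lost_rate))
    return sorted(rows, key=lambda row: row[3], reverse=True)


won = [
    {"icp_industry": True, "visited_pricing": True, "inbound": True},
    {"icp_industry": True, "visited_pricing": True, "inbound": False},
    {"icp_industry": True, "visited_pricing": False, "inbound": True},
    {"icp_industry": True, "visited_pricing": True, "inbound": False},
]
lost = [
    {"icp_industry": False, "visited_pricing": True, "inbound": True},
    {"icp_industry": True, "visited_pricing": False, "inbound": False},
    {"icp_industry": False, "visited_pricing": False, "inbound": True},
    {"icp_industry": False, "visited_pricing": False, "inbound": False},
]

for attr, w, l, gap in attribute_gaps(won, lost, ["inbound", "visited_pricing", "icp_industry"]):
    print(f"{attr}: won {w:.0%}, lost {l:.0%}, gap {gap:+.0%}")
```

In this toy sample, ICP industry and pricing visits separate wins from losses, while inbound vs outbound source doesn't. That's the kind of pattern that should become a rule.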

Step 4: Start with Simple Rules, Validate with Sales

Build your initial model as simply as possible:

- +20: Demo request

- +10: Pricing page visit

- +5: Opened nurture emails

- -20: Not in ICP (wrong industry, wrong size, wrong geo)

Run it for 2-4 weeks. Then sit down with your top 3 reps and ask: "Are the high-scoring leads actually good?" Their feedback is worth more than any algorithm at this stage.

Step 5: Layer AI When Data Is Sufficient

Once you have 1,000+ leads and 100+ conversions with clean data, you're ready to test AI scoring. Start with a pilot:

- Run AI scoring in parallel with your existing rules for 3-6 months

- Compare conversion rates between AI-prioritized and rule-prioritized leads

- Track false positives (high AI score, didn't convert) and false negatives (low AI score, did convert)

Don't rip out your rule-based system until the AI has proven itself on your data, with your sales team, in your market.
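Here's a minimal sketch of the pilot comparison, assuming each pilot lead carries both scores plus its eventual outcome. The field names, thresholds, and seven toy leads are made up. One caveat: if reps only work one list, outcomes partly reflect who got worked, so compare like-for-like where you can.

```python
def top_n_conversion(leads, score_key: str, n: int) -> float:
    """Conversion rate among the n highest-scored leads under one scoring method."""
    top = sorted(leads, key=lambda lead: lead[score_key], reverse=True)[:n]
    return sum(lead["converted"] for lead in top) / len(top)


def error_counts(leads, score_key: str, threshold: float) -> tuple[int, int]:
    """(false positives, false negatives), treating scores at or above threshold as 'high'."""
    false_pos = sum(1 for lead in leads if lead[score_key] >= threshold and not lead["converted"])
    false_neg = sum(1 for lead in leads if lead[score_key] < threshold and lead["converted"])
    return false_pos, false_neg


pilot = [
    {"rule_score": 80, "ai_score": 0.20, "converted": False},
    {"rule_score": 75, "ai_score": 0.85, "converted": True},
    {"rule_score": 60, "ai_score": 0.90, "converted": True},
    {"rule_score": 55, "ai_score": 0.55, "converted": False},
    {"rule_score": 30, "ai_score": 0.70, "converted": True},
    {"rule_score": 20, "ai_score": 0.35, "converted": True},
    {"rule_score": 10, "ai_score": 0.05, "converted": False},
]

print(f"rules top 3: {top_n_conversion(pilot, 'rule_score', 3):.2f}")  # 0.67
print(f"AI top 3:    {top_n_conversion(pilot, 'ai_score', 3):.2f}")    # 1.00
print("rules FP/FN:", error_counts(pilot, "rule_score", 50))          # (2, 2)
print("AI FP/FN:   ", error_counts(pilot, "ai_score", 0.5))           # (1, 1)
```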

Step 6: Monitor, Retrain, Iterate

AI models drift. Your market changes, your ICP evolves, your product shifts upmarket. A model trained on 2024 data will be wrong by mid-2026.

Set a quarterly review cadence. Check accuracy metrics. Retrain when conversion patterns shift. And keep your sales team in the feedback loop - they're the ground truth your model is trying to approximate.
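A common way to check whether patterns have shifted is the Population Stability Index (PSI), which compares this quarter's score distribution with the one the model was trained on. PSI is a swapped-in technique, not something the sources above prescribe. The usual rule of thumb (under 0.1 stable, 0.1-0.25 worth watching, above 0.25 investigate or retrain) is a heuristic. PSI flags distribution drift only, so you still need to compare conversion rates once outcomes are in. The bin edges and scores below are made up.

```python
import bisect
import math


def bin_shares(values, edges):
    """Fraction of values per bin; bins are [edges[i], edges[i+1]), last bin closed."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        i = min(max(i, 0), len(counts) - 1)  # clamp values on or outside the outer edges
        counts[i] += 1
    return [max(c / len(values), 1e-6) for c in counts]  # floor avoids log(0) on empty bins


def psi(expected, actual, edges) -> float:
    """Population Stability Index: sum of (actual% - expected%) * ln(actual% / expected%)."""
    e, a = bin_shares(expected, edges), bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
training_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
this_quarter = [0.05, 0.1, 0.15, 0.2, 0.3, 0.35, 0.6, 0.9]

print(round(psi(training_scores, this_quarter, edges), 3))  # 0.347
```

Here half of this quarter's leads land in the lowest score bin versus a quarter at training time. That's a large enough shift to warrant a closer look before trusting the scores.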

AI scoring needs 1,000+ leads with clean CRM data to train on. Prospeo's enrichment API fills in 50+ data points per contact at a 92% match rate - giving your predictive models the firmographic, technographic, and intent signals they need to actually work.

Enrich your CRM data so your scoring model has something real to learn from.

What's Next for Lead Scoring

Gartner projected that by 2026, B2B sales organizations using generative AI would reduce prospecting and meeting-prep time by over 50% - and early data suggests they're right. By 2027, AI is expected to initiate 95% of sales research workflows.

The lead scoring market reflects this trajectory: projected to grow from $2-5 billion today to $8.3-35.4 billion by 2032.

The shift isn't just from manual to automated scoring. It's from passive scoring (wait for signals, assign points) to autonomous agents that research accounts, identify buying committees, personalize outreach, and route leads - all before a human touches anything. Some platforms are already shipping "digital workers" that handle the entire top-of-funnel workflow autonomously.

But we're not there yet. Only 21% of commercial leaders have fully enabled enterprise-wide AI adoption in B2B sales. The gap between what's possible and what's deployed is enormous, which means there's still a massive first-mover advantage for teams that get their data and processes right now.

Look, the future is clearly AI-driven. But the teams that win won't be the ones who bought the fanciest tool first. They'll be the ones who built the data foundation that makes any scoring approach actually work.

FAQ

What is the main difference between AI and traditional lead scoring?

Traditional lead scoring uses static, manually assigned point rules that don't update unless a human changes them. AI lead scoring uses machine learning to find patterns in historical conversion data and updates scores as new data comes in. The accuracy gap is significant: traditional methods hit 60-70%, while AI models typically land in the 83-98% range when trained on enough clean data.

How much data do you need for AI lead scoring to work?

At minimum, 1,000 historical leads, 100+ closed deals (both won and lost), and 12-24 months of CRM data with clear outcomes. Below those thresholds, rule-based scoring outperforms most AI models because the algorithm lacks enough examples to learn meaningful patterns.

Is AI lead scoring worth it for small businesses?

Usually not at first. For teams processing fewer than 1,000 leads per year with short sales cycles, a well-built rule-based model in your existing CRM costs nothing extra and deploys in hours. Graduate to AI when your data volume justifies the investment - typically after 12-24 months of consistent CRM hygiene and 100+ closed deals.

Why do most AI lead scoring projects fail?

Gartner attributes the 62% failure rate to excessive expectations and inadequate preparation. In practice, inadequate preparation usually means dirty CRM data and information leakage in model training. Data quality - not algorithm choice - is the top success factor. Verified, regularly refreshed contact data is the foundation that separates the 38% that succeed from the majority that don't.

How do you improve lead scoring accuracy without AI?

Separate fit scoring (company size, job title, industry) from behavior scoring (demo requests, pricing page visits). Analyze your last 50 closed-won and 50 closed-lost deals to identify real patterns. Verify your contact data is current - stale emails and wrong numbers mean scores reflect ghosts. Then iterate based on direct sales feedback every 2-4 weeks.