How to Measure ABX Program Success: Metrics, Frameworks & What Actually Matters in 2026

You've got the ABX program running. Sales and marketing are "aligned." The target account list is live. Six months in, the CMO asks a simple question: "Is this working?"

Nobody in the room can give a straight answer.

That's the reality for most ABX programs. Not because the strategy is wrong, but because measuring ABX program success is broken from day one. The fix starts with tracking the right things - and making sure the data feeding those measurements isn't already rotten.

Why Most ABX Measurement Fails Before It Starts

We've watched teams spend six figures on ABX platforms, build gorgeous account plays, and then try to measure success with MQLs. That's like hiring a personal chef and rating them on how fast they microwave leftovers.

The fundamental problem: most B2B organizations carry over their demand gen measurement playbook into an account-based model. They count leads instead of accounts. They obsess over form fills instead of multi-threaded engagement. They report on marketing-sourced pipeline when the whole point of ABX is that sales and marketing source pipeline together. [Over 60% of B2B organizations](https://www.gartner.com/en/newsroom/press-releases/2020-10-06-gartner-says-60 - of-b2b-sales-organizations-will-tran) say they can't attribute revenue to their ABM/ABX programs. That's not a technology problem - it's a framework problem.

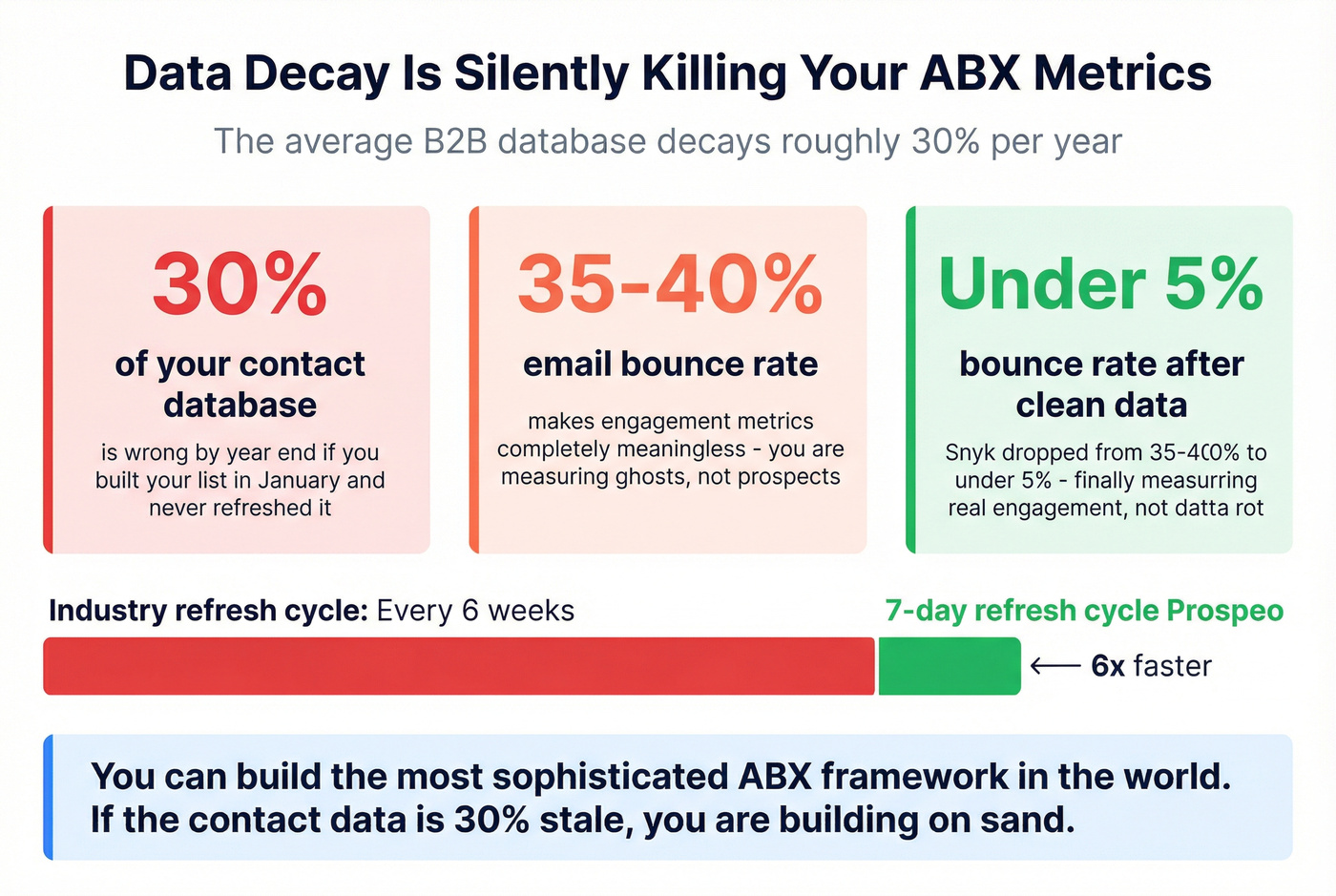

The other silent killer? Data quality. If 30% of your contact database has decayed since you built your target account list, your engagement metrics are fiction. You're measuring bounced emails and wrong-number dials as "low engagement" when the real issue is that you're reaching voicemail boxes of people who left the company eight months ago.

ABX measurement isn't harder than demand gen measurement. It's different. And once you set it up correctly, it gives you clearer signal on what's working.

What You Need to Prove Account-Based Program Success

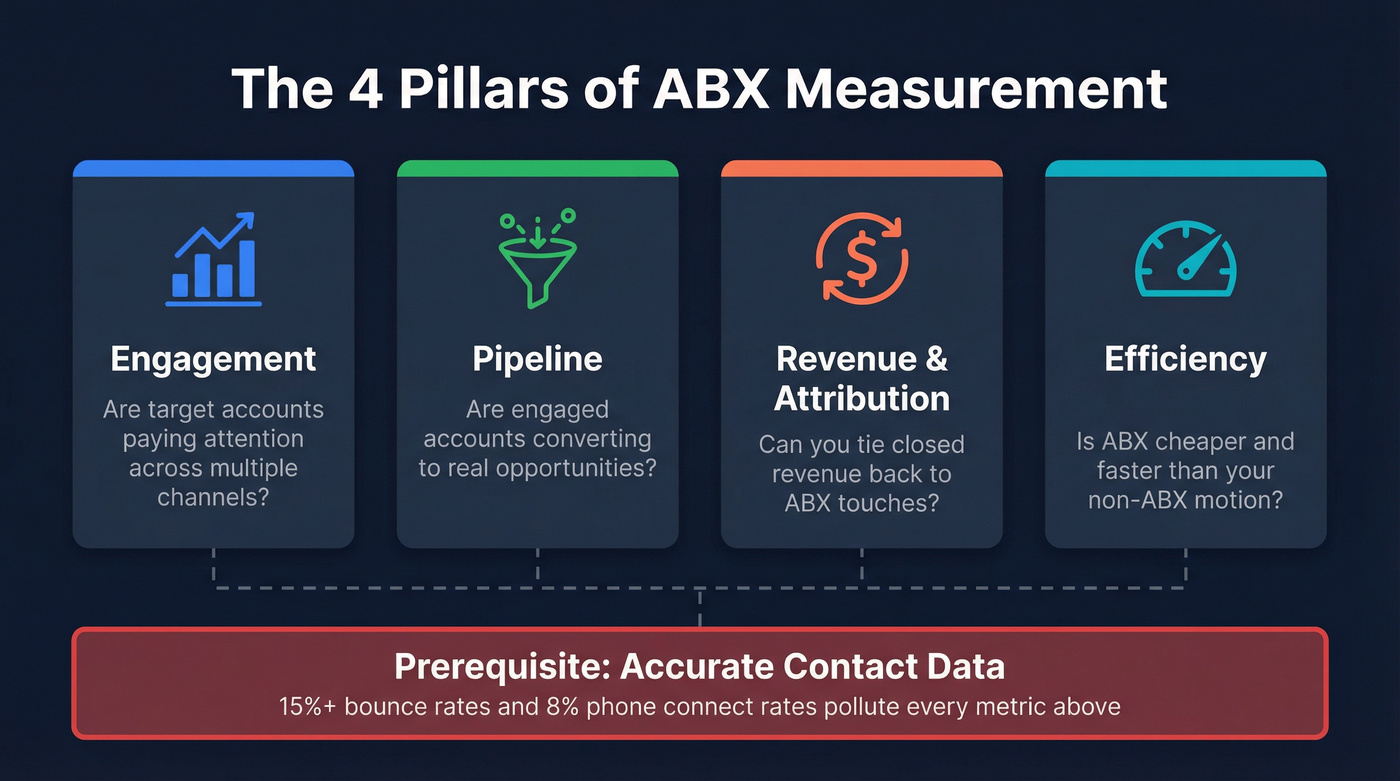

ABX measurement breaks into four pillars. Get these right and you can answer the CMO's question in under 60 seconds:

- Engagement metrics - Are target accounts paying attention? Multi-channel, account-level, not individual lead scores.

- Pipeline metrics - Are engaged accounts converting to real opportunities? How fast? How big?

- Revenue & attribution metrics - Can you tie closed revenue back to ABX touches? Which model do you use?

- Efficiency metrics - Is ABX cheaper and faster than your non-ABX motion? Where's the waste?

The prerequisite nobody lists: accurate contact data. If your emails bounce at 15%+ and your phone numbers connect 8% of the time, every metric downstream is polluted.

Three things kill ABX measurement before it starts: vanity metrics (ad impressions aren't engagement), no pre-launch baseline (you can't measure lift without a "before"), and stale data masquerading as low engagement. Fix those three, and you're ahead of 80% of ABX programs.

You just read that 30% of contact databases decay before teams even measure ABX engagement. Every bounced email and wrong-number dial pollutes your pipeline metrics. Prospeo refreshes all 300M+ profiles every 7 days - not every 6 weeks - with 98% email accuracy and a 30% mobile pickup rate. Your ABX measurement framework is only as honest as the data underneath it.

The ABX Measurement Framework: 4 Pillars

Account Engagement Metrics

Engagement is where ABX measurement diverges most sharply from traditional demand gen. You're not counting individual leads who filled out a form. You're measuring whether an account - multiple people across multiple buying roles - is engaging with your brand across multiple channels.

The core metric is an account-level engagement score. It combines website visits, ad interactions, email opens and replies, content downloads, event attendance, and sales touchpoints into a single score per account. Some teams use a FIT + Intent + Engagement scoring model to weight these signals. The specifics matter less than the principle: score at the account level, not the contact level.
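As a rough illustration of account-level scoring, here is a minimal Python sketch. The signal names mirror the list above, but the weights, the `Signal` type, and the function name are hypothetical placeholders, not values from any platform. Tune them to your own program.

```python
from dataclasses import dataclass

# Hypothetical weights (assumption): heavier for higher-intent signals.
SIGNAL_WEIGHTS = {
    "website_visit": 1.0,
    "ad_interaction": 0.5,
    "email_open": 0.5,
    "email_reply": 3.0,
    "content_download": 2.0,
    "event_attendance": 4.0,
    "sales_touchpoint": 5.0,
}


@dataclass(frozen=True)
class Signal:
    account_id: str
    contact_id: str
    kind: str  # expected to be a key of SIGNAL_WEIGHTS


def account_engagement_scores(signals):
    """Sum weighted signals per account, regardless of which contact produced them."""
    scores = {}
    for s in signals:
        weight = SIGNAL_WEIGHTS.get(s.kind, 0.0)  # unknown signal kinds score zero
        scores[s.account_id] = scores.get(s.account_id, 0.0) + weight
    return scores
```

The point of the design is the grouping key: signals from every contact roll up to `account_id`, so two people at the same company each doing a little still adds up to one engaged account.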

Benchmark: top-performing ABX programs see 3-5x engagement lift on target accounts versus non-target accounts. If you're not seeing at least 2x lift within the first 90 days, either your targeting is off or your plays aren't resonating.
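One way to compute the lift figure in that benchmark is to divide the average engagement score of target accounts by the average for non-target accounts. The sketch below assumes you already have per-account scores; the function name is illustrative.

```python
from statistics import mean


def engagement_lift(target_scores, non_target_scores):
    """Ratio of mean engagement on target accounts to mean on non-target accounts."""
    baseline = mean(non_target_scores)
    if baseline == 0:
        raise ValueError("non-target baseline is zero; lift is undefined")
    return mean(target_scores) / baseline
```

A result of 2.0 or higher would meet the 90-day floor described above, and 3.0 to 5.0 would match the top-performer range.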

Key metrics to track:

- Account engagement score (composite, trended over time)

- Multi-channel touch density - how many distinct channels is each account engaging through?

- Website visit-to-target-account ratio - what percentage of your web traffic comes from accounts you actually care about?

- Buying committee coverage - are you engaging 1 person or 5?

If you're only reaching one contact per target account, you don't have an ABX program. You have a targeted email list.
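Touch density and buying committee coverage can both be derived from a flat log of touches. This is a sketch under assumptions: the `(account_id, contact_id, channel)` tuple shape is hypothetical, and the default of 3 contacts borrows the coverage-gap threshold used in the operational dashboard view later in this article.

```python
from collections import defaultdict


def coverage_by_account(touches, min_contacts=3):
    """touches: iterable of (account_id, contact_id, channel) tuples (assumed shape)."""
    channels = defaultdict(set)
    contacts = defaultdict(set)
    for account_id, contact_id, channel in touches:
        channels[account_id].add(channel)
        contacts[account_id].add(contact_id)
    return {
        account_id: {
            "touch_density": len(channels[account_id]),      # distinct channels
            "contacts_engaged": len(contacts[account_id]),   # distinct people
            "coverage_gap": len(contacts[account_id]) < min_contacts,
        }
        for account_id in channels
    }
```

Any account flagged with `coverage_gap` is a candidate for finding additional contacts before you read too much into its engagement score.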

Pipeline Metrics

This is where ABX earns its budget. Engagement is nice. Pipeline is money.

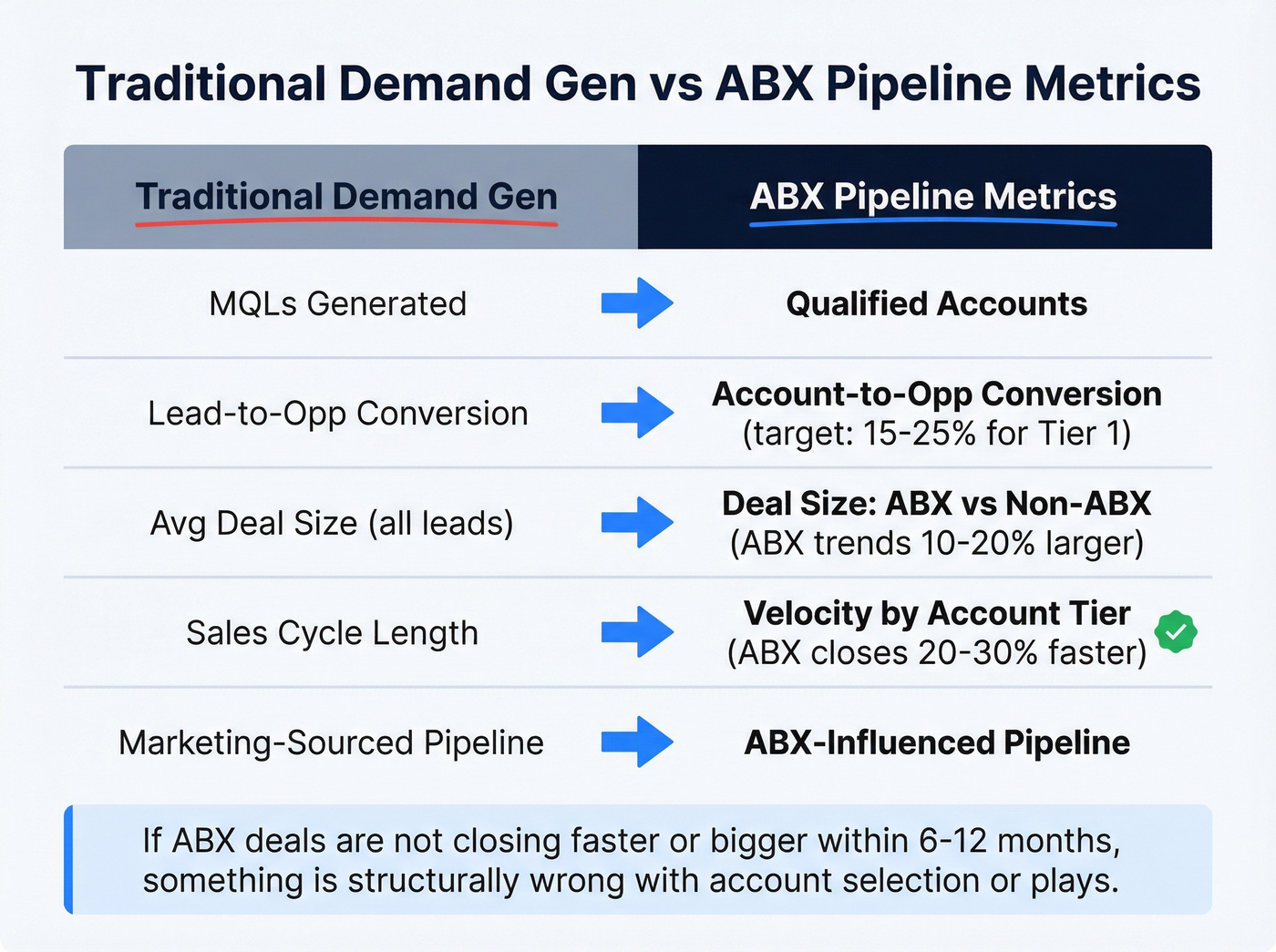

| Traditional Demand Gen | ABX Pipeline Metric |
|---|---|
| MQLs generated | Qualified accounts |
| Lead-to-opp conversion | Account-to-opp conversion |
| Avg deal size (all) | Deal size: ABX vs non-ABX |
| Sales cycle length | Velocity by account tier |
| Marketing-sourced pipe | ABX-influenced pipeline |

ABX-influenced deals consistently close 20-30% faster than non-ABX deals. That directly impacts your revenue forecast and capacity planning.

Track these specifically:

- Account-to-opportunity conversion rate - healthy programs hit 15-25% for Tier 1 accounts.

- Pipeline velocity - days from first engagement to closed-won, segmented by ABX vs non-ABX.

- Average deal size - ABX accounts should trend 10-20% larger because you're multi-threading into the buying committee.

- ABX-influenced pipeline - total pipeline where at least one ABX touch occurred before or during the sales cycle.

If your ABX deals aren't closing faster or bigger than your non-ABX deals within 6-12 months, something's structurally wrong with your account selection or your plays. Full stop.
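The ABX versus non-ABX comparison above can be sketched in a few lines of Python. The `Account` fields here are assumptions about what your CRM export contains, not a real schema.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean
from typing import Optional


@dataclass
class Account:
    is_abx: bool
    first_engaged: date
    opp_created: bool = False
    closed_won: Optional[date] = None  # None means not (yet) closed-won
    deal_size: float = 0.0


def pipeline_summary(accounts, is_abx):
    """Conversion, velocity, and deal size for the ABX or non-ABX cohort."""
    cohort = [a for a in accounts if a.is_abx == is_abx]
    won = [a for a in cohort if a.closed_won is not None]
    return {
        "account_to_opp_rate": (
            sum(a.opp_created for a in cohort) / len(cohort) if cohort else None
        ),
        "avg_days_to_close": (
            mean((a.closed_won - a.first_engaged).days for a in won) if won else None
        ),
        "avg_deal_size": mean(a.deal_size for a in won) if won else None,
    }
```

Calling `pipeline_summary(accounts, True)` and `pipeline_summary(accounts, False)` on the same export gives the two columns of the comparison. For a fair read, restrict both cohorts to the same segment and time period.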

Revenue & Attribution Metrics

Attribution in ABX is structurally hard. Dozens of touches across marketing and sales, spread over months, hitting multiple people at the same account. Last-touch attribution is useless here.

The models that work:

- W-shaped attribution gives credit to first touch, lead creation, and opportunity creation - a reasonable starting point for teams without a data science function.

- Full-path attribution extends this to include the customer close event, but requires clean data across the entire funnel.

- Account-level influence reporting sidesteps the debate entirely: did ABX touches occur on this account before it closed? Report ABX-influenced revenue as a percentage of total.
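To make W-shaped attribution concrete, here is a minimal sketch. It assumes the commonly used 30/30/30/10 split (30% to each of the three milestone touches, the remaining 10% shared across all other touches). That split is a convention, not something this article prescribes, and the data shape and function name are hypothetical.

```python
MILESTONES = ("first_touch", "lead_creation", "opportunity_creation")


def w_shaped_credit(touches, revenue, milestone_share=0.30):
    """touches: list of (touch_id, milestone_or_None). Returns revenue credit per touch."""
    milestone_ids = [tid for tid, m in touches if m in MILESTONES]
    other_ids = [tid for tid, m in touches if m not in MILESTONES]
    found = sorted(m for _, m in touches if m in MILESTONES)
    if found != sorted(MILESTONES):
        raise ValueError("expected exactly one touch per milestone")

    credit = {}
    if other_ids:
        remainder = 1.0 - 3 * milestone_share  # 10% with the default share
        for tid in milestone_ids:
            credit[tid] = revenue * milestone_share
        for tid in other_ids:
            credit[tid] = revenue * remainder / len(other_ids)
    else:
        # No other touches: split everything evenly across the three milestones.
        for tid in milestone_ids:
            credit[tid] = revenue / 3
    return credit
```

The credits always sum to the deal's revenue, which keeps the attributed total reconcilable with closed-won numbers in finance reporting.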

The metrics:

- ABX-influenced revenue as a percentage of total closed-won. Mature programs target 30-50%. One published case study showed a program driving $500K in monthly influenced revenue with 4x pipeline growth - that's the kind of benchmark to aim for.

- Revenue per target account - are you extracting more value from ABX accounts over time?

- Customer lifetime value (CLV) of ABX-sourced accounts vs non-ABX.

Start with influence reporting. Graduate to multi-touch when your data hygiene supports it.
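Influence reporting is simple enough to sketch directly. The version below counts a closed-won deal as ABX-influenced if at least one ABX touch landed on or before its close date. The dictionary keys are assumptions about your export format.

```python
def abx_influenced_share(deals):
    """deals: list of dicts with 'amount', 'closed_on', and 'abx_touch_dates' (assumed keys).

    Returns ABX-influenced revenue as a fraction of total closed-won revenue.
    """
    total = sum(d["amount"] for d in deals)
    influenced = sum(
        d["amount"]
        for d in deals
        if any(touch <= d["closed_on"] for touch in d["abx_touch_dates"])
    )
    return influenced / total if total else 0.0
```

A return value between 0.30 and 0.50 would fall in the range the article describes for mature programs.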

Efficiency & Cost Metrics

This is the section that gets the CFO on your side.

- Cost per target account engaged - total ABX spend divided by accounts hitting your engagement threshold

- Customer acquisition cost (CAC) - ABX vs non-ABX, apples to apples (and sanity-check with your CAC:LTV ratio)

- Sales cycle length - ABX vs non-ABX, by account tier

- Wasted outreach rate - bounced emails, wrong numbers, undeliverable touches

That last metric is the one nobody wants to look at. If 20% of your outbound touches bounce or reach the wrong person, you're burning budget and inflating cost-per-touch numbers. In our experience, teams running on weekly-refreshed, verified data see dramatically lower wasted spend, which directly improves every efficiency KPI on this list.

The benchmark: ABX programs should deliver 15-30% lower CAC than non-ABX motions within 12 months.
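The efficiency metrics above reduce to a few ratios. The helper names and parameters below are illustrative; plug in figures from your own finance and outreach tooling.

```python
def cost_per_engaged_account(total_abx_spend, engaged_accounts):
    """Total ABX spend divided by accounts that crossed your engagement threshold."""
    if engaged_accounts <= 0:
        raise ValueError("no engaged accounts to divide by")
    return total_abx_spend / engaged_accounts


def wasted_outreach_rate(bounced, wrong_numbers, total_touches, undeliverable=0):
    """Share of outbound touches that bounced or reached nobody useful."""
    if total_touches <= 0:
        raise ValueError("total_touches must be positive")
    return (bounced + wrong_numbers + undeliverable) / total_touches


def cac_reduction(abx_cac, non_abx_cac):
    """Fractional CAC improvement of ABX over non-ABX (0.2 means 20% lower)."""
    return (non_abx_cac - abx_cac) / non_abx_cac
```

For example, an ABX CAC of 8,000 against a non-ABX CAC of 10,000 is a 20% reduction, inside the 15-30% benchmark range.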

Building Your ABX Measurement Dashboard

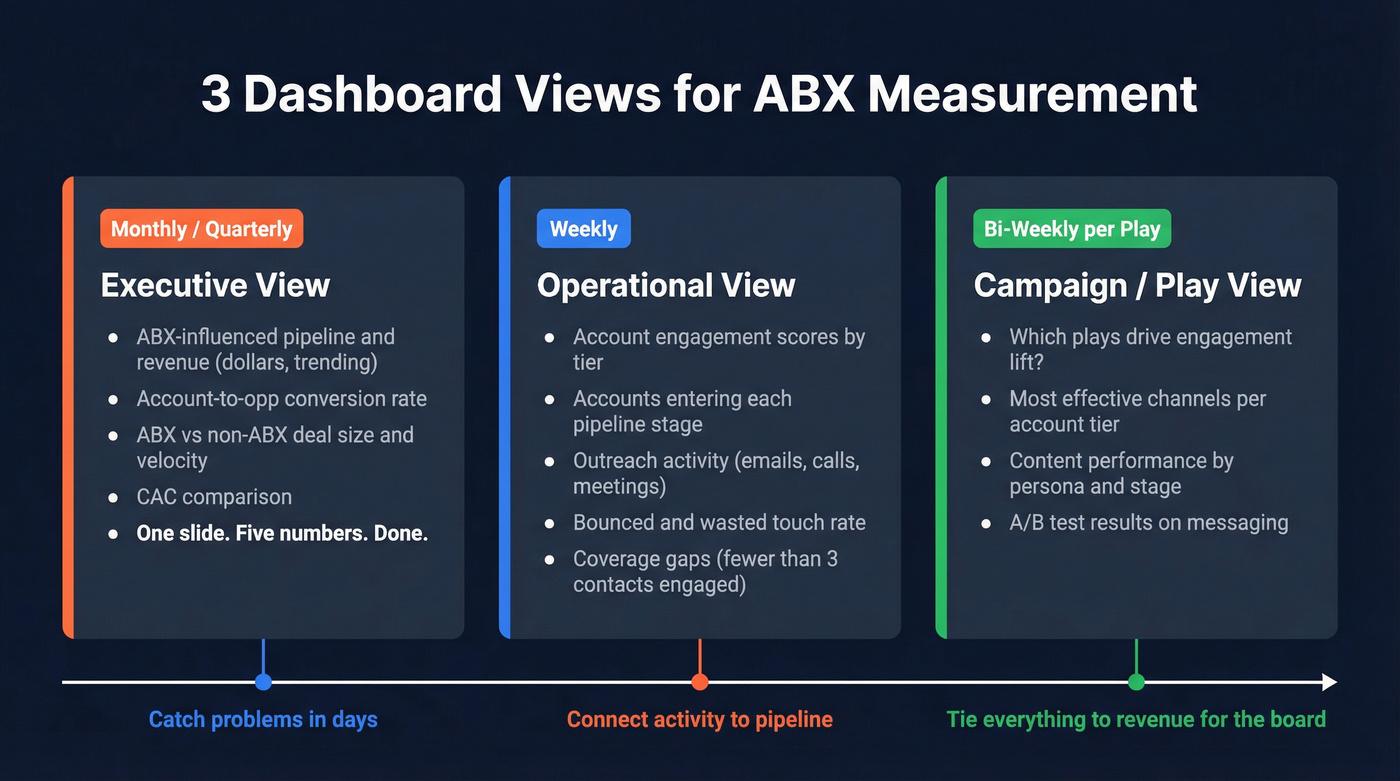

You don't need one dashboard. You need three views of the same data, tailored to three audiences.

Executive view (monthly/quarterly):

- ABX-influenced pipeline and revenue (dollar amounts, trending)

- Account-to-opportunity conversion rate

- ABX vs non-ABX deal size and velocity comparison

- CAC comparison

One slide. Five numbers. That's it.

Operational view (weekly):

- Account engagement scores, trended by tier

- New accounts entering each pipeline stage

- Outreach activity metrics (emails sent, calls made, meetings booked)

- Bounced/wasted touch rate

- Coverage gaps (accounts with fewer than 3 contacts engaged)

Campaign/play view (per play, reviewed every two weeks):

- Which plays are driving engagement lift?

- Which channels are most effective per account tier?

- Content performance by persona and buying stage

- A/B test results on messaging and sequencing

The cadence matters as much as the metrics. Weekly operational reviews catch problems early - if engagement drops on Tier 1 accounts, you want to know in days, not months. Monthly strategic reviews connect activity to pipeline. Quarterly reviews tie everything to revenue and give you the data to demonstrate account-based program success to the board.

The Data Quality Problem Nobody Talks About

Here's the uncomfortable truth: most ABX programs are measuring ghosts.

The average B2B database decays at roughly 30% per year. People change jobs, companies get acquired, email domains change, phone numbers rotate. If you built your target account list in January and haven't refreshed it, close to a third of your contacts are wrong by December. This isn't just a "data hygiene" problem. It's a measurement corruption problem. Every bounced email inflates your cost-per-touch. Every wrong title skews your buyer persona engagement analysis. Every outdated company record misattributes account activity.
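To see how that annual figure plays out month by month, here is a small sketch. It assumes decay compounds at a steady rate through the year, which is a simplification: the article only gives the annual figure, and real decay is lumpier.

```python
def fraction_still_valid(months_since_refresh, annual_decay=0.30):
    """Estimated share of contacts still accurate, assuming steady compounding decay."""
    if months_since_refresh < 0:
        raise ValueError("months_since_refresh cannot be negative")
    return (1 - annual_decay) ** (months_since_refresh / 12)


def stale_fraction(months_since_refresh, annual_decay=0.30):
    """Estimated share of contacts that have gone stale since the last refresh."""
    return 1 - fraction_still_valid(months_since_refresh, annual_decay)
```

By construction, `stale_fraction(12)` comes out at about 0.30. Running it for smaller values shows how much of a list has already drifted a few months after it was built.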

You think your ABX program has low engagement on Tier 1 accounts - but really, you're emailing people who left those companies six months ago.

The industry average data refresh cycle is six weeks. Prospeo runs on a 7-day refresh cycle, 6x faster than that norm. After implementing Prospeo as their data layer, Snyk's sales team of 50 AEs saw bounce rates drop from 35-40% to under 5%. That's not just a deliverability improvement - it's a measurement improvement. When 35% of your emails bounce, your engagement metrics are meaningless. When under 5% bounce, you're finally measuring real engagement.

You can build the most sophisticated ABX measurement framework in the world. If the contact data feeding it is 30% stale, you're building on sand.

Your wasted outreach rate is the ABX metric nobody wants to face. Teams using stale data inflate cost-per-account and kill pipeline velocity. Prospeo's 5-step verification, catch-all handling, and spam-trap removal cut bounce rates to under 4% - so every efficiency KPI in your ABX framework reflects reality, not data rot. At $0.01 per email, cleaning up your target account list costs less than one bad campaign.

Common ABX Measurement Mistakes (And How to Fix Them)

Mistake 1: Measuring leads instead of accounts. The whole point of ABX is account-level orchestration. If your dashboard still shows "MQLs generated," you haven't made the shift. Replace every lead-level metric with its account-level equivalent.

Mistake 2: Ignoring sales feedback loops. Marketing builds the dashboard. Sales ignores it. Nobody asks reps whether the accounts they're working are actually engaged or just "scored high" because of bot traffic. Build a weekly 15-minute sync where sales validates or challenges the engagement data. The qualitative signal from reps is as valuable as the quantitative signal from your platform.

Mistake 3: Attribution model too simplistic. Last-touch attribution in ABX is like giving the goalie credit for winning the soccer match. Start with account-level influence reporting. Graduate to W-shaped or full-path attribution when your data maturity supports it. A proper ABX measurement system should predict revenue, not just report on activity.

Mistake 4: You launched without a baseline. If you didn't document pre-ABX conversion rates, deal sizes, and sales cycle lengths before launch, you're not alone. The fix: use your non-ABX accounts as a control group right now. Compare ABX account performance against non-ABX accounts in the same segment and time period. It's not perfect, but it gives you a credible "before" to measure against.

Mistake 5: Treating all accounts equally. Real talk: your Tier 1 cost-per-account-engaged will be 10-50x higher than Tier 3. That's fine - as long as the revenue follows. Define different engagement thresholds, conversion benchmarks, and efficiency targets per tier. Skip the "one-size-fits-all" benchmarking entirely; it'll mislead you every time.

Measuring ABX Program Success by Maturity Stage

Not every metric matters at every stage. Trying to measure revenue attribution in month two is like checking your marathon time at mile one.

| Metric Category | Early (0-6 months) | Growth (6-18 months) | Mature (18+ months) |
|---|---|---|---|
| Primary focus | Engagement & coverage | Pipeline velocity | Revenue & LTV |
| Key metric | Account engagement score | Acct-to-opp conversion | ABX-influenced revenue % |
| Benchmark | 2-3x engagement lift | 15-25% conversion (Tier 1) | 30-50% of total revenue |
| Efficiency target | Baseline cost-per-touch | 15% lower CAC vs non-ABX | 25-30% lower CAC |
| Attribution model | Influence reporting | W-shaped | Full-path |

Early stage is about proving the concept. Can you engage target accounts at a higher rate than non-target accounts? Focus on coverage and engagement lift - don't obsess over pipeline yet. ABX effectiveness at this point is best measured by whether you're reaching the right people at the right accounts.

Growth stage is where pipeline metrics take center stage. Pipeline velocity and account-to-opportunity conversion are your north stars. Start segmenting by tier - Tier 1 should convert at meaningfully higher rates than Tier 3.

Mature stage is about revenue attribution and long-term value. Measure CLV of ABX accounts, ABX-influenced revenue as a percentage of total, and whether ABX accounts expand faster post-sale. This is when you confidently shift budget from channels that don't convert to those that do.
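If you want your reporting to switch focus automatically as the program ages, the maturity table can be encoded as a simple lookup. This is a sketch: the values are copied from the table above, while the function name and the choice to treat month 6 as Growth and month 18 as Mature are assumptions, since the table's ranges overlap at the boundaries.

```python
MATURITY_STAGES = {
    "Early": {
        "primary_focus": "Engagement & coverage",
        "key_metric": "Account engagement score",
        "attribution_model": "Influence reporting",
    },
    "Growth": {
        "primary_focus": "Pipeline velocity",
        "key_metric": "Acct-to-opp conversion",
        "attribution_model": "W-shaped",
    },
    "Mature": {
        "primary_focus": "Revenue & LTV",
        "key_metric": "ABX-influenced revenue %",
        "attribution_model": "Full-path",
    },
}


def stage_for(months_since_launch):
    """Return the maturity stage and its focus for a program of the given age."""
    if months_since_launch < 0:
        raise ValueError("months_since_launch cannot be negative")
    if months_since_launch < 6:
        name = "Early"
    elif months_since_launch < 18:  # boundary handling is an assumption
        name = "Growth"
    else:
        name = "Mature"
    return {"stage": name, **MATURITY_STAGES[name]}
```

A dashboard could call `stage_for(...)` once per reporting cycle and highlight only that stage's key metric, which helps avoid judging a two-month-old program on revenue attribution.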

FAQ

What's the single most important ABX metric to track?

Account-to-opportunity conversion rate. It bridges engagement and revenue, showing whether your ABX program moves target accounts into real pipeline rather than just generating activity. Track it by tier: Tier 1 accounts should convert at 15-25%, while Tier 3 sits closer to 5-10%.

How long before an ABX program shows measurable results?

Expect 3-6 months for engagement metrics to stabilize and 6-12 months for meaningful pipeline and revenue data to emerge. Set baselines before launch so you can measure lift accurately. Teams that skip the baseline step end up unable to prove ROI even when the program is clearly working.

How does data quality affect ABX measurement?

Stale emails, wrong titles, and outdated firmographics corrupt every metric downstream - inflating cost-per-touch, deflating engagement scores, and misattributing account activity. A 7-day refresh cycle and 98% email accuracy prevent the measurement distortion that plagues programs running on data that's weeks or months old.

Should ABX metrics replace traditional demand gen metrics?

Not entirely - ABX metrics complement demand gen by adding account-level context to your existing reporting. Run both in parallel during the transition, then gradually shift reporting weight toward account-based KPIs as your program matures and proves its pipeline impact.