Negative Lead Scoring in 2026: A Practical Playbook (With Templates)

Positive scoring gets all the attention. Negative lead scoring is what keeps your sales team from drowning in "MQLs" that were never going to buy.

Here's the uncomfortable truth: most scoring models fail because they only reward activity. They don't penalize bad data, low intent, or obvious disqualifiers, so the score inflates until it's meaningless.

I've watched teams celebrate "record MQL volume" on Monday and spend the rest of the week arguing about why SDRs aren't following up. The score wasn't wrong. The score was useless.

What you need (quick version)

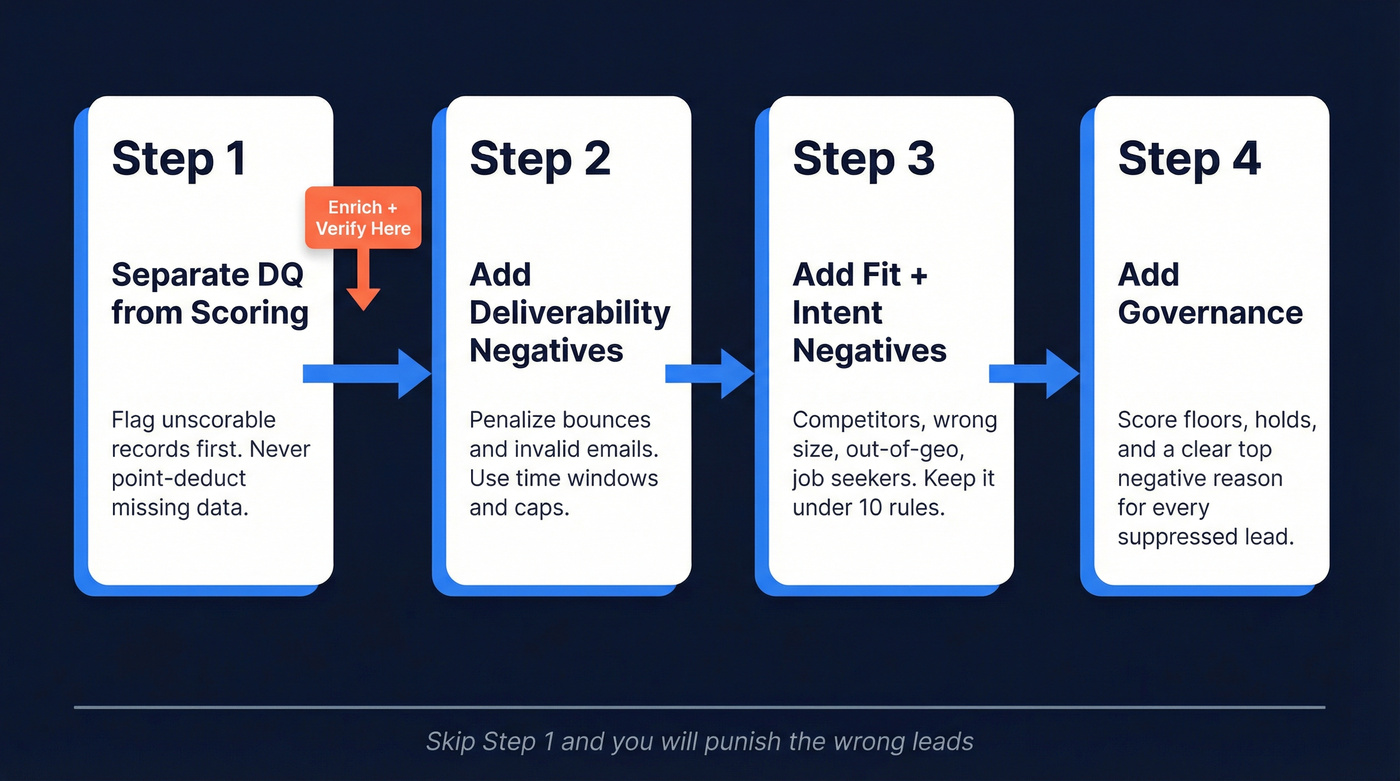

Do these four steps in order. Skip step 1 and you'll punish the wrong leads.

1) Separate DQ from scoring

- Create a DQ flag/queue for missing or unscorable records.

- Don't "point-deduct" your way out of missing data.

2) Add deliverability negatives with caps

- Penalize repeated bounces and invalid mailboxes using time windows.

- Cap the damage so one issue can't dominate the score.

3) Add a small set of fit + intent negatives

- Competitors, wrong size/geo, job-seeker behavior, and repeated low-quality sessions.

- Keep the list short enough to explain to Sales in one minute.

4) Add governance: floors, holds, and reasons

- Clamp scores so leads can recover.

- Store a clear "top negative reason" for every suppressed lead.

Prospeo fits right before step 2: verify and enrich before you penalize. It's self-serve with no contracts, and its enrichment returns contact data for 83% of leads, so your "bounce" rules reflect reality, not stale fields.

Negative lead scoring: what it is (and what it isn't)

Negative lead scoring is the deliberate use of point deductions to reduce a lead's priority when they show signals of low fit, low intent, or poor contactability.

It's not "being harsh." It's making your score behave like a real prioritization system instead of a one-way ratchet.

Use this mental model: small negatives push a lead back a stage (deprioritize), while big negatives remove them from the sales path (disqualify). That's how scoring stays readable.

It also isn't the same as disqualification:

- Disqualification is a state (flag, lifecycle stage, exclusion list).

- Negative scoring is a mechanism (math) that can contribute to that state.

Look, if you're selling a lower-priced product, you probably don't need a "genius" scoring model. You need a clean DQ gate plus 6-10 negative rules that your SDR manager can repeat from memory.

Fit vs engagement vs combined scores (where negatives belong)

Most teams cram everything into one number and then argue about why it's noisy. Split your model into Fit and Engagement, then decide how you want to combine them.

HubSpot supports three score types:

- Engagement score (behavioral)

- Fit score (attributes)

- Combined score (roll-up; it can populate a combined value and can optionally populate separate engagement/fit values)

Practical gotcha: lead scoring is gated to HubSpot Pro/Enterprise tiers. Also, which objects you can score depends on your Hub: Contacts are Marketing Hub, Companies are Marketing or Sales Hub, and Deals are Sales Hub. If you're on a lower tier, you'll end up recreating scoring with workflows and custom properties.

Where to put negative points (quick table)

| Score type | What it measures | Best negatives |
|---|---|---|
| Fit | "Should we sell?" | competitor, wrong size, out-of-geo, personal email (conditional) |
| Engagement | "Are they active?" | unsubscribes, repeated bounces, repeated low-quality sessions, no-reply sequences |
| Combined | "Priority now" | caps, floors, routing thresholds (not hard DQ) |

How to combine scores (formulas that actually work)

Pick one of these and commit. Don't mix patterns.

Option A: Simple sum (most common)

- `Combined = Fit + Engagement`

- Route when `Combined >= 60` and Fit isn't terrible (see thresholds below).

Option B: Fit-gated engagement (my default for B2B)

- Route only if `Fit >= 30` and `Engagement >= 30`.

- This prevents "high activity, wrong fit" from slipping through.

Option C: Weighted (only if you have stable data)

- `Combined = (0.6 * Fit) + (0.4 * Engagement)`

- Use this when Fit data is reliable and you sell to a narrow ICP.
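Here's a minimal Python sketch of the three patterns. It assumes Fit and Engagement are plain numbers, and it reads "Fit isn't terrible" in Option A as the `Fit >= 30` threshold used later. The function names are hypothetical, not a HubSpot or Marketo API.

```python
def option_a_routes(fit: float, engagement: float,
                    combined_min: float = 60, fit_min: float = 30) -> bool:
    """Option A: simple sum, plus a fit floor so activity alone can't route a lead."""
    return fit + engagement >= combined_min and fit >= fit_min


def option_b_routes(fit: float, engagement: float,
                    fit_min: float = 30, engagement_min: float = 30) -> bool:
    """Option B: fit-gated engagement; each half must clear its own bar."""
    return fit >= fit_min and engagement >= engagement_min


def option_c_score(fit: float, engagement: float,
                   fit_weight: float = 0.6, engagement_weight: float = 0.4) -> float:
    """Option C: weighted blend. The result sits on a single-score scale,
    so a sum-based threshold like 60 has to be re-tuned for it."""
    return fit_weight * fit + engagement_weight * engagement


# "High activity, wrong fit": the raw sum is 80, but neither gated option routes it.
print(option_a_routes(fit=10, engagement=70))  # False
print(option_b_routes(fit=10, engagement=70))  # False
print(option_a_routes(fit=35, engagement=30))  # True
```

The weighted option changes the scale of the number you route on. If you switch to it, re-derive your thresholds rather than reusing the sum-based 60.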

Routing object matters too:

- If Sales routes off Contacts, keep deliverability negatives on the contact record.

- If Sales routes off Companies/Accounts, push fit disqualifiers (competitor, geo, size) to the company/account level so they apply to every contact there. Keep contact-level problems (like a bad email) on the contact, so one bad record doesn't poison an otherwise good account.

HubSpot vs Marketo negative scoring mechanics (what's native vs what's a pattern)

| Item | HubSpot | Marketo Engage |
|---|---|---|
| Score field model | Dedicated scoring tool with Fit/Engagement/Combined score types | Any numeric field can be a "score"; you build the logic in Smart Campaigns |
| Native caps | Yes: group limits to control how many points a rule group can add/subtract | No: implement caps via batch normalization/clamping |
| How negatives are applied | Add/subtract points in score rules | Change Score flow action (e.g., -5 decrement, =-5 set exact) |
| Common failure mode | Too many rules + no DQ gate -> "mystery scores" Sales ignores | Trigger campaigns firing on "score changed" without guarding directionality |
| Best-practice mitigation | DQ flag + group limits + store "top negative reason" | Separate DQ field + clamp scores nightly + gate MQL with a boolean |

Half your negative scoring rules exist because your data is stale. Prospeo refreshes every 7 days and returns verified contact data for 83% of leads - so you penalize real disqualifiers, not outdated fields. 98% email accuracy means fewer false bounces inflating your negatives.

Clean data in, accurate scores out. Start enriching for $0.01 per email.

The business case: why this prevents junk MQLs

Sales doesn't hate marketing leads. Sales hates wasting time.

Negative lead scoring forces your system to say "not now" (or "not us") out loud, instead of quietly shoving everyone into the same MQL bucket and pretending the SDR team will sort it out.

One widely cited case study found lead scoring cut leads sent to Sales by 52% while increasing revenue by 41%. That's the trade you want: fewer handoffs, better handoffs.

And yes, if your MQL volume drops after you add negatives, that's not a bug. That's your model finally telling the truth.

Negative lead scoring rules: 10 signals that hold up in production

Keep the list short. Every extra rule is another way to punish the wrong people.

Below are 10 negative signals that hold up in production. Each includes starter points, a cap/window, and how to track it (where the data comes from). These are baseline signals you can defend in a pipeline review because they're observable and repeatable.

Guardrail: if you add a new negative, remove one. Keep the model at 10 or fewer.

Deliverability & contactability (use these first)

- Repeated soft bounces (not the first one)
  - Points: -10 per soft bounce after the first
  - Cap/window: cap at -30 in 30 days
  - Track it: ESP bounce events -> HubSpot email events / Marketo "Email Bounced Soft" activity; map "soft bounce count last 30d" into a property/field.

- Hard bounce / invalid mailbox
  - Points: -25 immediately
  - Cap/window: one-time, then hold for 90 days
  - Track it: ESP "hard bounce" event; Marketo bounce category; HubSpot email hard bounce property. Also set a DQ flag like `Email invalid = true`.

- Unsubscribe
  - Points: -25
  - Cap/window: one-time, then hold for 180 days
  - Track it: ESP unsubscribe event; HubSpot subscription status; Marketo "Unsubscribed" activity. Treat as "don't email," not "bad fit."

This is the one place I'll be blunt: don't penalize bounces until you've verified the address. If the email is wrong, your scoring model is grading your database, not the buyer.

Here's a workflow we've used that stays sane even as volume grows: trigger on "new contact created," run verification and enrichment immediately, write back an Email status field (Verified/Risky/Invalid), and only let bounce-based penalties fire when status is Verified and the lead still bounces after that. It sounds picky, but it stops you from burying real buyers because your form captured "john@gmail.com" and your CRM guessed the rest.
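Here's one way to express that gate in Python. It assumes a hypothetical lead record with three pieces: an `email_status` of Verified/Risky/Invalid (written back by whatever verification tool you use), the time verification ran, and a list of bounce timestamps. Only bounces that happen after a successful verification feed the penalty.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional


@dataclass
class Lead:
    email_status: str                  # hypothetical field: "Verified", "Risky", or "Invalid"
    verified_at: Optional[datetime]    # when verification last ran (None = never)
    bounce_times: List[datetime] = field(default_factory=list)


def scorable_bounces(lead: Lead) -> List[datetime]:
    """Bounces that may trigger bounce-based penalties."""
    if lead.email_status != "Verified" or lead.verified_at is None:
        # Risky, Invalid, or never verified: route to the DQ/enrichment queue instead of scoring.
        return []
    # Only bounces that happened after the address verified count against the lead.
    return [t for t in lead.bounce_times if t > lead.verified_at]


lead = Lead("Verified", datetime(2026, 1, 10),
            [datetime(2026, 1, 5), datetime(2026, 1, 15)])
print(len(scorable_bounces(lead)))  # 1 (the Jan 5 bounce predates verification)
```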

Fit disqualifiers (high signal, but separate from "DQ state")

- Competitor employee
  - Points: -50
  - Cap/window: static, 365 days
  - Track it: email domain match against a maintained competitor list + company domain enrichment. Implementation hook: "if domain in competitor list -> set `Competitor = true` and subtract."

- Wrong company size
  - Points: -20
  - Cap/window: static, cap at -20
  - Track it: firmographic enrichment (employee range / revenue band) -> CRM company fields. If size is unknown, don't score it; route to DQ/enrichment.

- Personal email (for a B2B motion)
  - Points: -15
  - Cap/window: static, 180 days
  - Track it: regex/domain list (gmail/yahoo/etc.) at form submit + CRM field validation. Make it conditional if you sell to very small businesses.

- Out-of-geo / unsupported region
  - Points: -50 (go -100 if you truly can't sell there)
  - Cap/window: static, 365 days
  - Track it: country/state from form + IP-to-geo + enrichment. Unknown geo is DQ, not a negative.
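A minimal Python sketch of those four fit rules, assuming the email, country and employee count are already on the record. The domain lists, supported countries and ICP size band below are placeholders, not real data. Unknown country or size is sent to DQ rather than scored, and the total is held to the -100 Fit cap from the template.

```python
from typing import List, Optional, Tuple

COMPETITOR_DOMAINS = {"rival-a.example", "rival-b.example"}   # hypothetical competitor list
PERSONAL_DOMAINS = {"gmail.com", "yahoo.com", "hotmail.com", "outlook.com"}
SUPPORTED_COUNTRIES = {"US", "CA", "GB"}                      # hypothetical sales territories
ICP_EMPLOYEES = (50, 5000)                                    # hypothetical ICP size band
FIT_CAP = -100                                                # Fit group cap from the rubric


def fit_negatives(email: str, country: Optional[str], employees: Optional[int],
                  allow_personal: bool = False,
                  strict_geo: bool = False) -> Tuple[int, List[str], List[str]]:
    """Return (capped fit points, score reasons, DQ reasons).
    Unknown country or size goes to the DQ/enrichment queue instead of costing points."""
    domain = email.rsplit("@", 1)[-1].strip().lower()
    points, reasons, dq = 0, [], []
    if domain in COMPETITOR_DOMAINS:
        points -= 50
        reasons.append("Competitor")
        dq.append("Competitor")            # also set the DQ flag so routing is blocked
    if domain in PERSONAL_DOMAINS and not allow_personal:
        points -= 15
        reasons.append("Personal email")
    if not country:
        dq.append("No country/region")
    elif country.upper() not in SUPPORTED_COUNTRIES:
        points -= 100 if strict_geo else 50
        reasons.append("Out of geo")
    if employees is None:
        dq.append("Size unknown")
    elif not ICP_EMPLOYEES[0] <= employees <= ICP_EMPLOYEES[1]:
        points -= 20
        reasons.append("Wrong size")
    return max(points, FIT_CAP), reasons, dq


print(fit_negatives("ann@rival-a.example", "FR", 10))
# (-100, ['Competitor', 'Out of geo', 'Wrong size'], ['Competitor'])
```

`allow_personal` is the "conditional by segment" switch. Turn it on for very-small-business segments where personal addresses are normal.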

Low-intent behaviors (use sparingly)

- Repeated "single-page bounce" sessions
  - Points: -10 per session
  - Cap/window: cap at -20 in 14 days
  - Track it: GA4 engaged sessions (or lack of engagement) + GTM events; HubSpot behavioral events; Segment/Heap. Hook: "2+ sessions with <10s engagement and no key events."

- Careers/job-seeker intent
  - Points: -50
  - Cap/window: 90 days
  - Track it: URL contains `/careers` plus an event like `apply_click` in GTM; or repeated visits to job pages + form submit on the application flow. Don't penalize a single careers page view. Buyers do diligence.

- Ignoring outreach attempts (sequence non-engagement)
  - Points: -5 after 6-8 touches with zero replies
  - Cap/window: cap at -15 in 30 days
  - Track it: Salesloft/Outreach activity counts + reply flag. Use "no reply" as the primary signal; opens/clicks are too noisy.
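Here's a rough Python version of the three low-intent hooks. It assumes you can export sessions (start time, engaged seconds, key-event count), page paths plus event names, and 30-day touch/reply counts. The `Session` type and the event name `apply_click` are illustrative, not a GA4 or Outreach schema. "-5 after 6-8 touches" is read here as -5 per 8 unanswered touches.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List


@dataclass
class Session:
    start: datetime
    engaged_seconds: float
    key_events: int


def low_quality_session_points(sessions: List[Session], now: datetime,
                               window_days: int = 14, per_session: int = -10,
                               cap: int = -20) -> int:
    """-10 per low-quality session in the window, only once there are 2+, capped at -20."""
    low = [s for s in sessions
           if now - s.start <= timedelta(days=window_days)
           and s.engaged_seconds < 10 and s.key_events == 0]
    if len(low) < 2:
        return 0
    return max(per_session * len(low), cap)


def careers_points(page_paths: Iterable[str], events: Iterable[str]) -> int:
    """-50 only when a careers page view comes with an apply event; a lone view is free."""
    viewed = any("/careers" in path for path in page_paths)
    return -50 if viewed and "apply_click" in set(events) else 0


def no_reply_points(touches_30d: int, replies_30d: int,
                    touches_per_step: int = 8, per_step: int = -5, cap: int = -15) -> int:
    """-5 for every 8 unanswered touches in 30 days, capped at -15; any reply means 0."""
    if replies_30d > 0:
        return 0
    return max(per_step * (touches_30d // touches_per_step), cap)


print(careers_points(["/careers"], []))                 # 0
print(careers_points(["/careers/sdr"], ["apply_click"]))  # -50
```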

Negative scoring template: points, caps, DQ, time windows

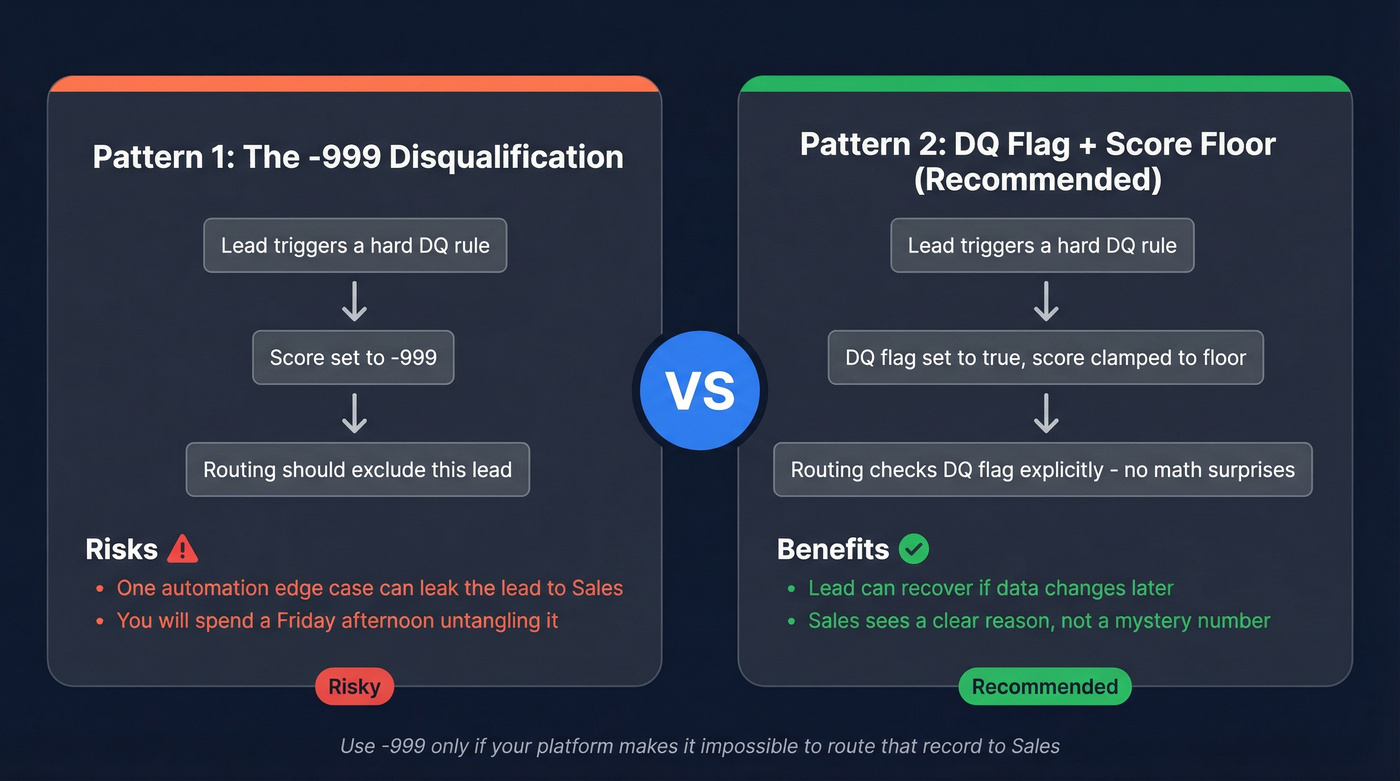

Two patterns you'll see in the wild:

- The "-999 equivalent" disqualification (simple, but risky if routing leaks).

- The safer approach: DQ flag + score floor, so math can't do something surprising.

I'm opinionated: use -999 only if your platform makes it impossible to route that record to Sales. Otherwise, one automation edge case will eventually ship a "disqualified" lead to an SDR, and you'll spend a Friday afternoon untangling it.

Also: don't over-engineer decay. If your platform can't do clean decay, a 90-day reset is easier to operate and easier to explain, and it keeps your reporting from turning into a forensic exercise.

Table A: Negative scoring rubric (starter)

| Signal | Points | Cap group | Window | Notes |
|---|---|---|---|---|
| Soft bounce | -10 | Deliverability | 30d | cap -30 |
| Hard bounce | -25 | Deliverability | 90d | set DQ flag |
| Unsubscribe | -25 | Deliverability | 180d | suppress email |
| Personal email | -15 | Fit | 180d | conditional by segment |
| Wrong size | -20 | Fit | 180d | static attr |
| Competitor | -50 | Fit | 365d | also DQ flag |
| Out-of-geo | -50 | Fit | 365d | if strict |
| Repeat bounce sessions | -10 | Low intent | 14d | cap -20 |
| Careers "apply" | -50 | Low intent | 90d | require apply event |
| No reply (8 touches) | -5 | Low intent | 30d | cap -15 |

Caps to copy: Deliverability cap -60, Fit cap -100, Low-intent cap -40. Floor to copy: Combined score floor at -100 (or clamp to 0 if your reporting breaks on negatives).
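To see how windows, per-rule caps, group caps and the floor interact, here's a table-driven Python sketch of Table A. It assumes each negative signal arrives as a (signal name, timestamp) pair; the signal keys are made up for the example. It applies the rows literally: every in-window event counts, so it doesn't skip the first soft bounce or wait for 2+ sessions. Note that with these starter numbers, the -40 Low-intent cap limits a careers "apply" (-50) to -40.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

# signal: (points per event, cap group, window in days, per-rule cap), copied from Table A
RUBRIC: Dict[str, Tuple[int, str, int, int]] = {
    "soft_bounce":         (-10, "Deliverability", 30, -30),
    "hard_bounce":         (-25, "Deliverability", 90, -25),
    "unsubscribe":         (-25, "Deliverability", 180, -25),
    "personal_email":      (-15, "Fit", 180, -15),
    "wrong_size":          (-20, "Fit", 180, -20),
    "competitor":          (-50, "Fit", 365, -50),
    "out_of_geo":          (-50, "Fit", 365, -50),
    "low_quality_session": (-10, "Low intent", 14, -20),
    "careers_apply":       (-50, "Low intent", 90, -50),
    "no_reply":            (-5, "Low intent", 30, -15),
}
GROUP_CAPS = {"Deliverability": -60, "Fit": -100, "Low intent": -40}
SCORE_FLOOR = -100


def negative_points(events: List[Tuple[str, datetime]], now: datetime) -> Dict[str, int]:
    """Sum in-window events per rule, apply per-rule caps, then group caps."""
    per_rule: Dict[str, int] = {}
    for signal, ts in events:
        points, _group, window_days, _rule_cap = RUBRIC[signal]
        if now - ts <= timedelta(days=window_days):
            per_rule[signal] = per_rule.get(signal, 0) + points
    per_group = {group: 0 for group in GROUP_CAPS}
    for signal, total in per_rule.items():
        _points, group, _window, rule_cap = RUBRIC[signal]
        per_group[group] += max(total, rule_cap)
    return {group: max(total, GROUP_CAPS[group]) for group, total in per_group.items()}


def combined_score(positive_points: int, events: List[Tuple[str, datetime]],
                   now: datetime) -> int:
    """Positive points plus capped negatives, clamped at the -100 floor."""
    negatives = sum(negative_points(events, now).values())
    return max(positive_points + negatives, SCORE_FLOOR)
```

Keeping the rubric as data rather than branching logic makes the quarterly review a diff of one table. That's much easier to version and roll back.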

Table B: DQ rules (don't score these, route them)

| Condition | Action | Safeguard |
|---|---|---|
| Missing company | Set DQ property | don't score |
| No country/region | Set DQ property | enrich first |
| Email invalid | Exclude list | verify first |
| Competitor domain | Set lifecycle "DQ" | block routing |
| Student/job seeker | Set "Not ICP" | manual review |
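Table B works well as a list of predicates that run before any scoring. Below is a minimal Python sketch, assuming a hypothetical `LeadRecord` whose boolean fields (`competitor_domain`, `student_or_job_seeker`) are already populated by your enrichment or form logic.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class LeadRecord:                  # hypothetical CRM fields
    company: Optional[str]
    country: Optional[str]
    email_status: str              # "Verified", "Risky", or "Invalid"
    competitor_domain: bool
    student_or_job_seeker: bool


# (condition, check, action, safeguard), one entry per row of Table B
DQ_RULES: List[Tuple[str, Callable[[LeadRecord], bool], str, str]] = [
    ("Missing company", lambda r: not r.company, "Set DQ property", "don't score"),
    ("No country/region", lambda r: not r.country, "Set DQ property", "enrich first"),
    ("Email invalid", lambda r: r.email_status == "Invalid", "Exclude list", "verify first"),
    ("Competitor domain", lambda r: r.competitor_domain, 'Set lifecycle "DQ"', "block routing"),
    ("Student/job seeker", lambda r: r.student_or_job_seeker, 'Set "Not ICP"', "manual review"),
]


def dq_actions(lead: LeadRecord) -> List[Tuple[str, str, str]]:
    """Every Table B condition the lead matches, with its action and safeguard."""
    return [(name, action, guard) for name, check, action, guard in DQ_RULES if check(lead)]


def is_dq(lead: LeadRecord) -> bool:
    return bool(dq_actions(lead))


lead = LeadRecord(company=None, country="US", email_status="Invalid",
                  competitor_domain=False, student_or_job_seeker=False)
print([name for name, _, _ in dq_actions(lead)])  # ['Missing company', 'Email invalid']
```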

Thresholds + lifecycle mapping (defaults you can ship this week)

Start with thresholds that create a manageable MQL lane. A practical heuristic: set MQL so only the top ~20% of new leads qualify, then tune from there.
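If you want a starting number for that "top ~20%" heuristic, you can read it off a sample of recent scores. This Python sketch assumes you've exported recent combined (or fit) scores; the sample list below is invented for illustration.

```python
import math
from typing import List


def top_share_threshold(scores: List[float], share: float = 0.20) -> float:
    """Score cut-off so roughly the top `share` of recent leads qualify.
    Ties at the cut-off can push the qualifying share slightly above target."""
    if not scores:
        raise ValueError("need at least one score")
    ranked = sorted(scores, reverse=True)
    k = max(1, math.ceil(len(ranked) * share))
    return ranked[k - 1]


recent = [12, 45, 67, 30, 80, 55, 20, 90, 38, 61]
print(top_share_threshold(recent))  # 80
```

Treat the result as a first guess to tune against Sales capacity, not a permanent setting.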

A clean default:

- Fit MQL threshold: `Fit >= 30`

- Engagement MQL threshold: `Engagement >= 30`

- Combined MQL threshold: `Combined >= 60`

- Auto-DQ override: any hard DQ flag blocks MQL regardless of score

Lifecycle mapping example:

- DQ queue: DQ flag = true (missing key fields, invalid email, competitor)

- Nurture: not DQ, but below thresholds

- MQL: meets thresholds and not in recycle hold

- Recycle: Sales returns lead; apply hold + reduce engagement score

Routing example (copy/paste logic)

Use this exact order:

- If `DQ = true` -> Lifecycle = `DQ queue` (no routing)

- Else if `Recycle hold = true` -> Lifecycle = `Recycle` (no routing)

- Else if `Combined >= 60` AND `Fit >= 30` AND `Engagement >= 30` -> Lifecycle = `MQL` (route)

- Else -> Lifecycle = `Nurture`
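The same order as a small Python function. It assumes Combined is computed upstream and passed in, since it won't equal Fit + Engagement if you use a weighted formula. The function name is illustrative only.

```python
from typing import Tuple


def route(dq: bool, recycle_hold: bool, fit: int, engagement: int,
          combined: int) -> Tuple[str, bool]:
    """Return (lifecycle, send_to_sales), checking conditions in a fixed order."""
    if dq:
        return "DQ queue", False          # hard DQ always wins, whatever the score
    if recycle_hold:
        return "Recycle", False           # scoring may update, routing stays blocked
    if combined >= 60 and fit >= 30 and engagement >= 30:
        return "MQL", True
    return "Nurture", False


print(route(dq=False, recycle_hold=False, fit=35, engagement=40, combined=75))  # ('MQL', True)
print(route(dq=True, recycle_hold=False, fit=80, engagement=80, combined=160))  # ('DQ queue', False)
```

Checking DQ first is what makes the "-999 equivalent" unnecessary. A disqualified lead never reaches the score comparison, no matter how its points add up.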

Decay/reset defaults that don't cause chaos

- Default A (decay): reduce score by 25% monthly if there's no new activity.

- Default B (reset): if no meaningful engagement for 90 days, reset engagement score to 0 and move lifecycle back to nurture.

If you're unsure, pick the 90-day reset.
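Both defaults are short enough to sketch in Python. This assumes you track a whole number of inactive months for decay and a last-meaningful-engagement date for the reset; the function names are hypothetical.

```python
from datetime import date
from typing import Tuple


def decay(score: float, inactive_months: int, monthly_rate: float = 0.25) -> float:
    """Default A: compound a 25% cut for each full month without new activity.
    Negative scores shrink toward zero too, which is what lets leads recover."""
    return score * (1 - monthly_rate) ** inactive_months


def reset_if_stale(engagement: int, lifecycle: str, last_meaningful: date,
                   today: date, days: int = 90) -> Tuple[int, str]:
    """Default B: after 90 days without meaningful engagement, zero Engagement
    and move the lead back to nurture."""
    if (today - last_meaningful).days >= days:
        return 0, "Nurture"
    return engagement, lifecycle


print(decay(80, 2))  # 45.0
print(reset_if_stale(55, "MQL", date(2026, 1, 1), date(2026, 4, 1)))  # (0, 'Nurture')
```

The reset is a single date comparison, which is why it's easier to explain in a pipeline review than a compounding curve.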

Governance that keeps Sales trust

If Sales doesn't trust the score, they'll ignore it. Governance is how you keep the model from turning into a black box.

1) Caps and floors are non-negotiable

- Caps prevent one behavior (like repeated bounces) from dominating the score.

- Floors prevent "negative infinity" leads that never recover.

- In HubSpot, group limits are the cleanest way to enforce caps without extra workflows, and overall score limits keep totals bounded.

2) Every suppressed lead needs a plain-English reason (and an audit trail)

Create three fields/properties and make them mandatory outputs of your scoring logic:

- Top negative reason (single-select): `Invalid email`, `Unsubscribed`, `Competitor`, `Out of geo`, `Job seeker`, etc.

- Last negative event (text): the specific rule that fired (e.g., `Soft bounce x3 in 30d`)

- Last negative timestamp (date/time): when it happened

This solves the #1 Sales question: "Why is this lead suppressed?" It also makes debugging fast when someone escalates a "good lead" that got buried.
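Here's a minimal Python sketch of filling those three properties from the rules that fired. It assumes "top" means the single largest deduction, which is a judgment call you may want to make differently. The dictionary keys are hypothetical property names.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List


@dataclass
class NegativeHit:
    reason: str     # single-select value, e.g. "Invalid email"
    rule: str       # the rule that fired, e.g. "Soft bounce x3 in 30d"
    points: int     # the deduction, e.g. -30
    at: datetime


def audit_fields(hits: List[NegativeHit]) -> Dict[str, str]:
    """Fill the three audit properties from the negative rules that fired."""
    if not hits:
        return {}
    top = min(hits, key=lambda h: h.points)   # largest deduction wins (assumption)
    last = max(hits, key=lambda h: h.at)      # most recent rule that fired
    return {
        "top_negative_reason": top.reason,
        "last_negative_event": last.rule,
        "last_negative_timestamp": last.at.isoformat(),
    }


hits = [NegativeHit("Invalid email", "Hard bounce", -25, datetime(2026, 2, 1, 9, 0)),
        NegativeHit("Out of geo", "Out-of-geo (strict)", -50, datetime(2026, 1, 15))]
print(audit_fields(hits)["top_negative_reason"])  # Out of geo
```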

3) Holds + recycling (stop the MQL ping-pong)

Recycled leads need friction or they'll bounce MQL -> recycle -> MQL in the same quarter.

- Apply a 60-day recycle hold after Sales returns a lead.

- During hold: allow scoring to update, but block routing.

- Exit hold only on a strong reactivation event (demo request, reply, high-intent form) or after the time window.
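The hold check itself is one function. This Python sketch assumes you store the date Sales returned the lead and the set of event names seen since then. The reactivation event names are placeholders for your own demo-request, reply and high-intent-form events.

```python
from datetime import date
from typing import Set

REACTIVATION_EVENTS = {"demo_request", "reply", "high_intent_form"}  # hypothetical event names


def in_recycle_hold(returned_on: date, today: date, events_since_return: Set[str],
                    hold_days: int = 60) -> bool:
    """True while routing should stay blocked; scoring keeps updating regardless."""
    if events_since_return & REACTIVATION_EVENTS:
        return False  # a strong reactivation event ends the hold early
    return (today - returned_on).days < hold_days


print(in_recycle_hold(date(2026, 1, 1), date(2026, 2, 1), {"email_open"}))  # True
print(in_recycle_hold(date(2026, 1, 1), date(2026, 2, 1), {"reply"}))       # False
```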

4) Separate "don't email" from "don't sell"

Don't collapse everything into "negative score."

- Unsubscribe -> suppress email sends (compliance + deliverability)

- Competitor -> suppress routing (sales efficiency)

- Invalid email -> DQ/enrichment queue (data hygiene)

5) Change management (the part everyone skips, then regrets)

Treat scoring like production logic, not a one-off project:

- Version your model (v1, v1.1, v2) and log what changed.

- Quarterly review with Sales + Marketing Ops: keep, remove, adjust thresholds.

- One owner (RevOps) who approves changes and can roll back.

6) False-negative review queue (keep yourself honest)

Every week, sample 20 suppressed leads:

- 10 from "deliverability suppressed"

- 10 from "fit/intent suppressed"

Check: were they truly bad, or did you have missing fields, wrong enrichment, or a misfiring event? This is how you prevent quiet revenue loss.

Skip this if you can't commit to the weekly sample. You'll end up with a model that looks tidy in a spreadsheet and quietly hurts pipeline.
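If you want the weekly sample to be repeatable (same seed, same leads), a few lines of Python will do it. This assumes you can export suppressed leads as (lead_id, bucket) pairs; the bucket names `deliverability` and `fit_intent` are assumptions.

```python
import random
from typing import Dict, List, Optional, Tuple


def weekly_review_sample(suppressed: List[Tuple[str, str]], per_bucket: int = 10,
                         seed: Optional[int] = None) -> Dict[str, List[str]]:
    """Pick up to `per_bucket` suppressed leads from each bucket for manual review."""
    rng = random.Random(seed)
    sample = {}
    for bucket in ("deliverability", "fit_intent"):
        pool = [lead_id for lead_id, b in suppressed if b == bucket]
        # If a bucket has fewer leads than requested, review all of them.
        sample[bucket] = rng.sample(pool, min(per_bucket, len(pool)))
    return sample
```

Logging the seed with each week's review makes it easy to re-pull the exact sample when someone disputes a finding.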

How to implement negative scoring in HubSpot (2026 mechanics)

HubSpot's scoring builder is strong enough now to run real negative scoring without a maze of workflows.

Setup steps (the way we'd do it)

Pick your score type

- Build Fit and Engagement first.

- Add Combined only when you're ready to route.

Set the overall score limit

- For most teams, -100 to 100 or -200 to 200 is plenty.

- Bigger ranges make it harder to explain why someone's "a 742."

Create negative rule groups

- Deliverability negatives

- Fit disqualifiers

- Low-intent behaviors

Apply group limits (caps)

- Example: Deliverability min -60, Fit min -100, Low-intent min -40.

Route on score + flags

- Use Combined thresholds plus DQ flags and recycle holds.

If you're enriching into HubSpot, use native integrations where possible so your scoring properties stay current.

How to implement negative scoring in Marketo (Change Score + pitfalls)

Marketo's mechanic is simple: the Change Score flow action supports negative operations.

- `-5` decrements the score by 5.

- `=-5` sets the score to exactly -5.
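To make the difference concrete, here's a tiny Python model of those two forms. It's an illustration of the semantics described above, not Marketo code or an API.

```python
def apply_change_score(current: int, change: str) -> int:
    """Model of the Change Score forms above: "=N" sets, a signed number adjusts."""
    change = change.strip()
    if change.startswith("="):
        return int(change[1:])     # "=-5" -> exactly -5, whatever the old score was
    return current + int(change)   # "-5" -> subtract 5 from the current score


print(apply_change_score(20, "-5"))   # 15
print(apply_change_score(20, "=-5"))  # -5
```

Mixing the two up is an easy way to wipe out a lead's positive history with a single rule.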

Marketo Engage is typically enterprise-priced and contract-driven, often $2,000-$10,000+/month depending on database size and modules, so plan for formal ops ownership and change control.

A clean Marketo pattern

- Keep your main score in a bounded range (0-100 is common).

- Apply negative scoring via Change Score for deliverability and low intent.

- Use a separate field/flag for hard disqualification (competitor, invalid email, job seeker).

- Clamp scores with a scheduled batch (nightly/weekly) because Marketo doesn't have native caps.

Avoid these failure modes

"Score is Changed" triggers firing on negative changes

- If your trigger is Score is Changed and your filters only check current score > threshold, a negative change can still fire the campaign if the score remains above the threshold after the decrease.

- Safer pattern: trigger on specific behaviors (form fill, key page event, reply) and gate MQL creation behind a separate "MQL ready" boolean that only flips on qualifying increases.
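The guard behind that "MQL ready" boolean is a directionality check. Below is a Python sketch of the decision, assuming you know the trigger that fired plus the old and new score. The trigger names are placeholders; in Marketo you'd express this with specific triggers and filters rather than code.

```python
QUALIFYING_TRIGGERS = {"form_fill", "key_page_event", "reply"}  # hypothetical trigger names


def should_set_mql_ready(trigger: str, old_score: int, new_score: int,
                         threshold: int = 60) -> bool:
    """Flip the MQL-ready boolean only when a qualifying behavior raised the score
    to or past the threshold. Decreases never flip it, even above the threshold."""
    return (trigger in QUALIFYING_TRIGGERS
            and new_score > old_score
            and new_score >= threshold)


# The failure mode above: a -5 change on a lead at 80 still leaves it above 60.
print(should_set_mql_ready("score_changed", 80, 75))  # False
print(should_set_mql_ready("form_fill", 55, 65))      # True
```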

No caps = runaway negatives

- Fix: scheduled normalization that clamps the score into your intended range (e.g., 0-100), plus caps implemented in the logic.

Negative events not suppressing downstream flows

- Fix: add people to an exclusion list (or set a suppression flag) when certain negatives occur, and ensure routing campaigns always exclude them.

Marketo can do almost anything. That's exactly why you need guardrails.

Measuring success (and not breaking reporting)

Measure it like a filtering system: it should reduce noise and improve downstream conversion.

Metrics checklist (what should move)

- MQL -> SQL rate (up)

- SQL -> Opp rate (up or flat)

- Opp -> Closed-won rate (flat or up)

- Sales touches per SQL (down)

- Bounce rate / unsubscribe rate (down)

- Recycle reactivation rate (healthy, not zero)

Before/after dashboard checklist (simple and effective)

Build a one-page view with:

- MQL volume (weekly)

- MQL -> SQL conversion (weekly)

- % of MQLs blocked by DQ flags (weekly)

- Top 5 negative reasons (weekly)

- Median time-to-first-touch for SQLs (weekly)

If "Top negative reasons" is empty, your model isn't governable. Fix that before you tune points.
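Two of those dashboard tiles are simple enough to compute from a weekly export. This Python sketch assumes you have MQL/SQL counts and the "top negative reason" value for each lead suppressed that week; blank values are skipped, and those blanks are exactly the ones to chase down.

```python
from collections import Counter
from typing import List, Tuple


def mql_to_sql_rate(mql_count: int, sql_count: int) -> float:
    """Weekly MQL -> SQL conversion; 0.0 when there were no MQLs."""
    return sql_count / mql_count if mql_count else 0.0


def top_negative_reasons(reasons: List[str], n: int = 5) -> List[Tuple[str, int]]:
    """Top-N 'top negative reason' values for the week; blanks are ignored."""
    return Counter(r for r in reasons if r).most_common(n)


week = ["Competitor", "Invalid email", "Competitor", "", "Out of geo"]
print(mql_to_sql_rate(40, 10))     # 0.25
print(top_negative_reasons(week))  # [('Competitor', 2), ('Invalid email', 1), ('Out of geo', 1)]
```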

SLA guardrails (so you don't torch dashboards)

- Run in parallel for 2-4 weeks. Keep the old MQL definition for reporting while you validate the new one.

- Stage activation: apply the new model to new leads first, then backfill in controlled batches.

- Add a temporary routing gate: for the first week, route MQLs to a review queue if they qualify solely due to historical activity.

Your goal's simple: fewer leads to Sales, more revenue per lead.

Your "hard bounce" rule just flagged a VP who changed jobs. Prospeo tracks job changes across 300M+ profiles and verifies emails in real time - so your scoring model deducts points for actual disqualifiers, not data decay from a 6-week-old record.

Enrich before you penalize. 100 free credits, no contract required.

FAQ

What's the difference between negative lead scoring and disqualification?

Negative lead scoring subtracts points to deprioritize leads based on low fit, low intent, or deliverability issues, while disqualification is a hard state (flag/stage/list) that blocks routing entirely. Use negative points for "not now," and a DQ flag for "never route," backed by a short, explicit list of DQ conditions (typically 5-10).

How many negative rules should a model have?

Most teams should run 6-10 negative rules max, because Sales stops trusting a score it can't explain in a minute. If you want to add an 11th rule, remove one and keep caps by category (for example: Deliverability -60, Fit -100, Low intent -40).

What's a safe negative scoring range (caps/floors) for most teams?

A safe default is -100 to 100 (or -200 to 200 for longer sales cycles), with category caps like Deliverability min -60, Fit min -100, and Low-intent min -40. Add a floor so leads can recover after enrichment or a strong reactivation event, instead of getting stuck forever.

What's a good free tool to verify emails before scoring bounces?

Prospeo's free tier includes 75 email credits/month plus 100 Chrome extension credits/month, which is enough to verify new inbound leads before bounce-based penalties kick in.

Should I verify emails before penalizing bounces/unsubscribes?

Yes. Verify first so you don't punish leads for bad data, then apply bounce penalties only when the email status is Verified and the lead still bounces. In practice, this cuts false negatives immediately and makes deliverability-based rules meaningful.

Summary

Negative lead scoring works when it's boring: a short list of high-signal penalties, hard DQ flags for "never route," and governance (caps, floors, and clear reasons) so Sales can trust what they're seeing. Verify and enrich first, then ship your template with thresholds you can defend, and you'll trade noisy MQL volume for cleaner handoffs and better conversion.