Sales Development Rep Enablement: The 2026 Operating System for Faster Ramp

Most SDR teams don't lose because they "need better messaging." They lose because deliverability quietly collapses, standards drift, and coaching turns into vibes.

Sales development rep enablement is the fix, but only if you treat it like an operating system, not a folder of docs.

And yeah, this is the part reps complain about the loudest: enablement that's "just docs," run by people who've never carried a number, pushing trainings nobody uses. If that stings, good. Fixing it is how you get meetings back.

What sales development rep enablement actually is (and what it isn't)

Sales development rep enablement is a behavior-change system: standards -> practice -> coaching -> measurement, enforced weekly.

It's not onboarding checklists. It's not a folder of scripts. And it's definitely not "we did a training last month."

Here's the POV I wish more teams internalized: if your enablement is mostly docs and trainings, you don't have enablement. You've got a content library.

Myths vs reality (SDR edition)

Myth: Enablement is "training" and happens in week one. Reality: Enablement is a weekly operating rhythm that doesn't stop.

Myth: Ramp is a time period (30/60/90) you wait out. Reality: Ramp is a set of gates you certify.

Myth: More activity fixes everything. Reality: Activity without list quality and deliverability is just faster failure.

Myth: Managers can coach from gut feel. Reality: Coaching without scorecards is just vibes.

Hot take: if your average deal size is small, you don't need a "bigger" enablement program. You need stricter gates and cleaner data, because most teams try to buy their way out of discipline and it never works for long.

What you need (quick version): the 3 levers that move meetings

If you're rebuilding enablement from scratch, don't start with a new LMS. Start with these levers, in this order.

Week-1 checklist (ranked by impact)

1) Lock a 30/60/90 plan + certification gates (not "time served")

- Define what "ready" means for: ICP, messaging, tools, CRM hygiene, and meeting quality.

- Add pass/fail gates (roleplay score, CRM test, deliverability rules, first-call QA).

- Make gates visible: one page, one owner, one weekly review.

2) Run weekly call review + scorecard calibration

- Pick 5 calls per rep per week (mix of wins + losses).

- Score them with the same rubric across managers.

- Calibrate as a team so "good" doesn't change depending on who's listening.

3) Treat data refresh + verification as enablement (bounce rate stays under 2%)

- Set a hard rule: bounce rate stays under 2% or outbound pauses.

- Refresh contact data every 30-90 days (job changes will wreck you).

- Use a verification workflow (Prospeo: 98% email accuracy, 7-day refresh) so reps only send to verified contacts and inbox placement stays healthy.

Most enablement guides ignore deliverability. That's why their "just do more activity" advice faceplants in 2026.

SDR enablement benchmarks: ramp, meetings, conversion

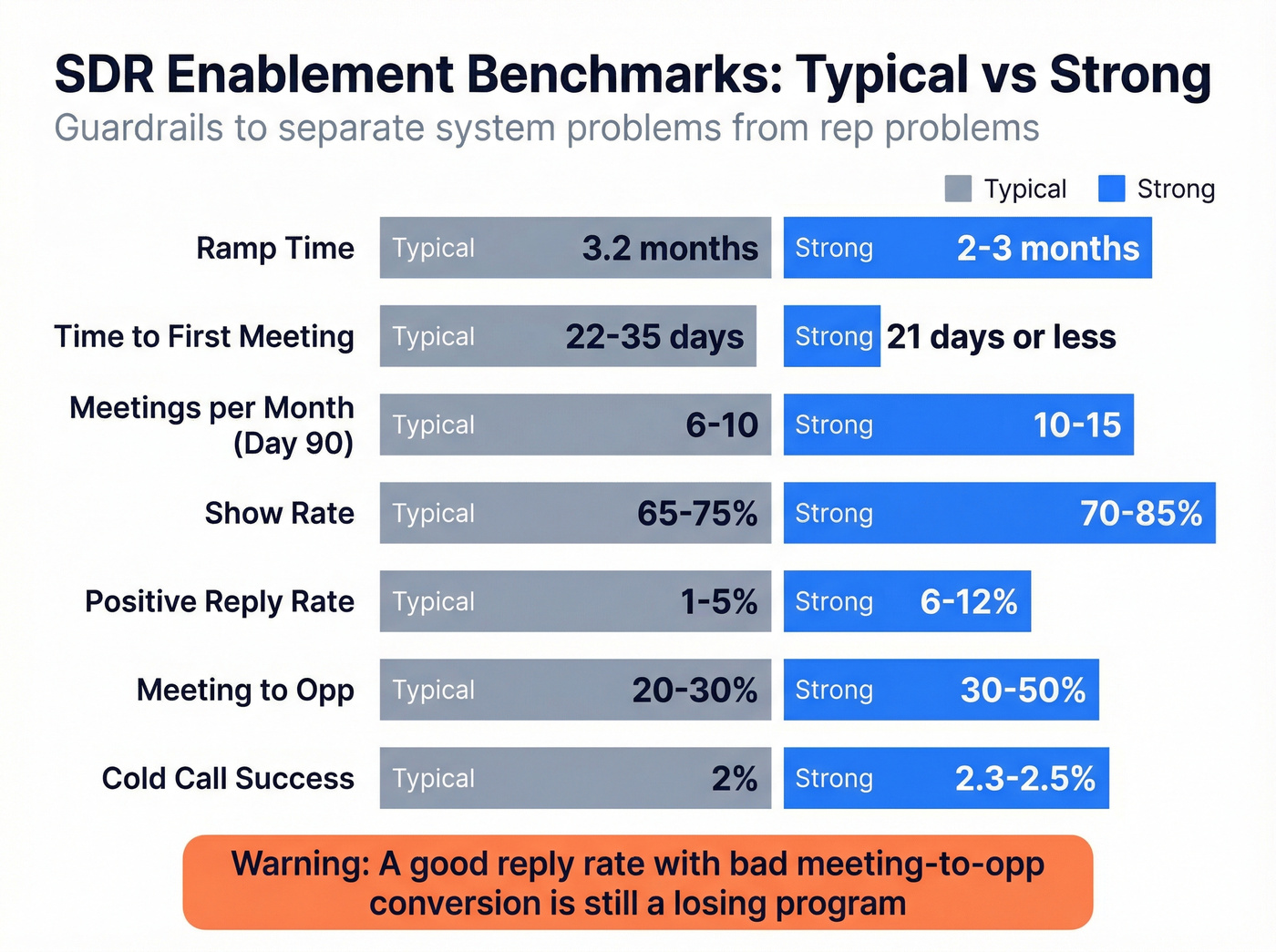

Benchmarks aren't goals. They're guardrails, so you know when you've got a system problem vs a rep problem.

A widely cited ramp anchor is still The Bridge Group's 2024 benchmark: SDR ramp averages about 3.2 months. In 2026, treat that as a baseline, then manage the program against two live metrics: time-to-first-meeting and meeting-to-opportunity conversion.

Practical targets table (directional, not promises)

| Metric | Typical | Strong | Notes |
|---|---|---|---|
| Ramp time | ~3.2 months | ~2-3 months | Bridge Group anchor |
| Time to 1st meeting | 22-35 days | <=21 days | Fastest "system health" signal |
| Meetings/month @ day 90 | 6-10 (SMB/MM) | 10-15 (SMB/MM) | Enterprise often 5-8 |
| Show rate | 65-75% | 70-85% | Over 85%: confirm tracking |
| Positive reply rate (email) | 1-5% | 6-12% | 1-5% is common; 6-12% is strong |
| Meeting -> opp | 20-30% | 30-50% | Quality spec decides this |
| Cold call success | ~2% | 2.3-2.5% | ~1 meeting / 40-45 dials |

How to use these without lying to yourself

- Time to first meeting tells you if onboarding's active or passive. If it's 45+ days, your gates are weak or your list is stale.

- Meeting -> opp is where teams fake progress. If you're booking 20 meetings and converting 10% to opps, you're creating calendar noise.

- Reply rate is the most misleading metric in outbound. A "good" reply rate with bad meeting-to-opp is still a losing program.
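If you want those checks to run on a dashboard export instead of in someone's head, here's a minimal sketch. The function name `diagnose` and its inputs are hypothetical; the 45-day and 20% cutoffs come from the bullets and table above, and nothing here is tied to a specific CRM.

```python
def diagnose(days_to_first_meeting, meetings, opps_created):
    """Flag the failure patterns described above (thresholds from this guide)."""
    warnings = []
    if days_to_first_meeting >= 45:
        warnings.append("slow first meeting: check gates and list freshness")
    if meetings:
        conversion = opps_created / meetings
        if conversion < 0.20:  # below the 20-30% "typical" band
            warnings.append(f"meeting->opp at {conversion:.0%}: likely calendar noise")
    return warnings


# The example from the text: 20 meetings, 10% converting to opps.
print(diagnose(days_to_first_meeting=50, meetings=20, opps_created=2))
```

Reply rate is left out on purpose: per the point above, it shouldn't trigger or clear a warning on its own.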

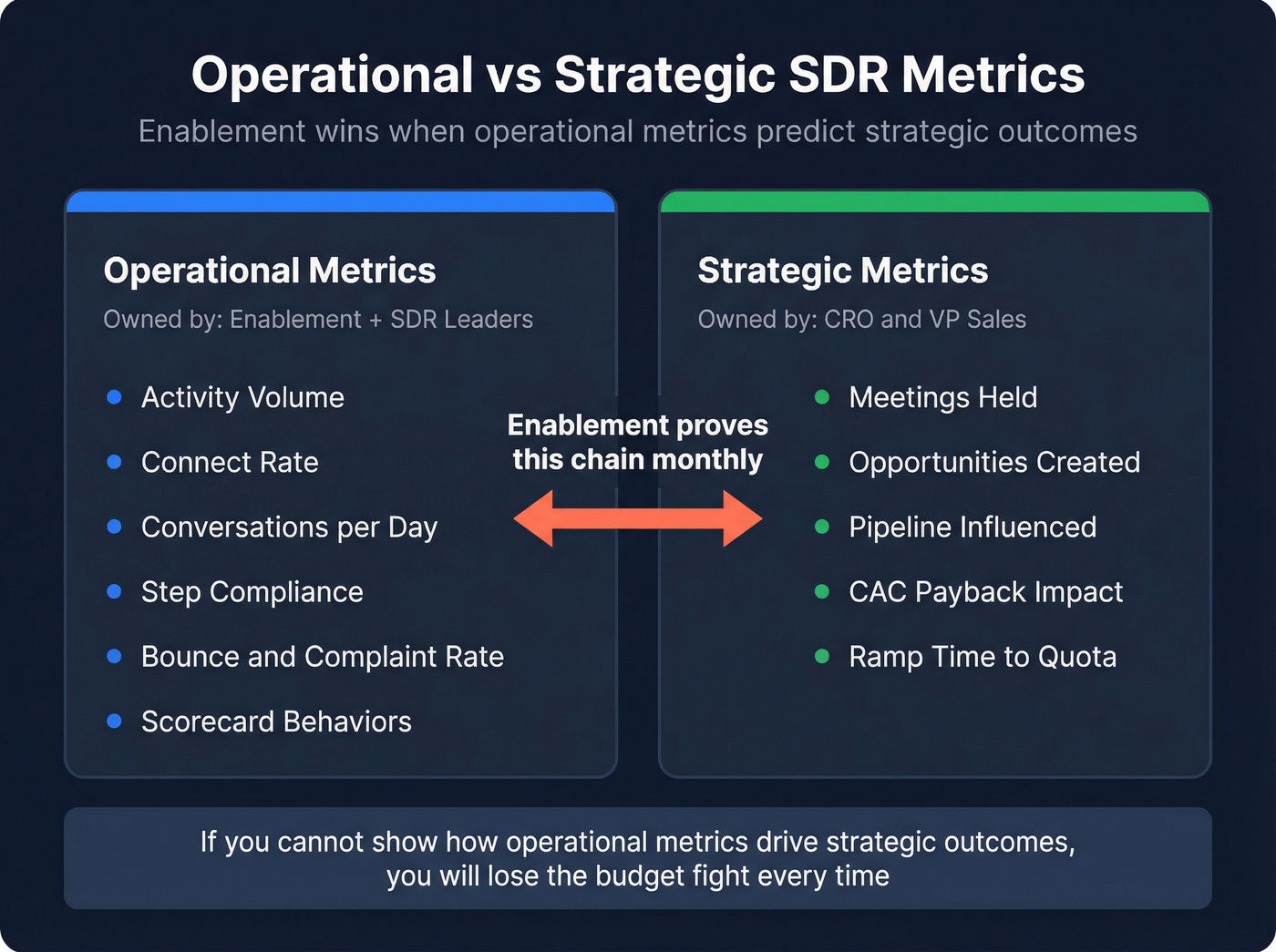

Operational vs strategic SDR metrics (what enablement owns vs what leadership cares about)

One clean way to stop KPI arguments is to separate the two buckets:

- Operational metrics (enablement + SDR leaders own): activity volume, connect rate, conversations, step compliance, bounce/complaint rate, scorecard behaviors.

- Strategic metrics (CRO/VP Sales cares about): meetings held, opportunities created, pipeline influenced, CAC payback impact, ramp time to quota.

Enablement wins when operational metrics predict strategic outcomes, and you can show that chain every month without hand-waving.

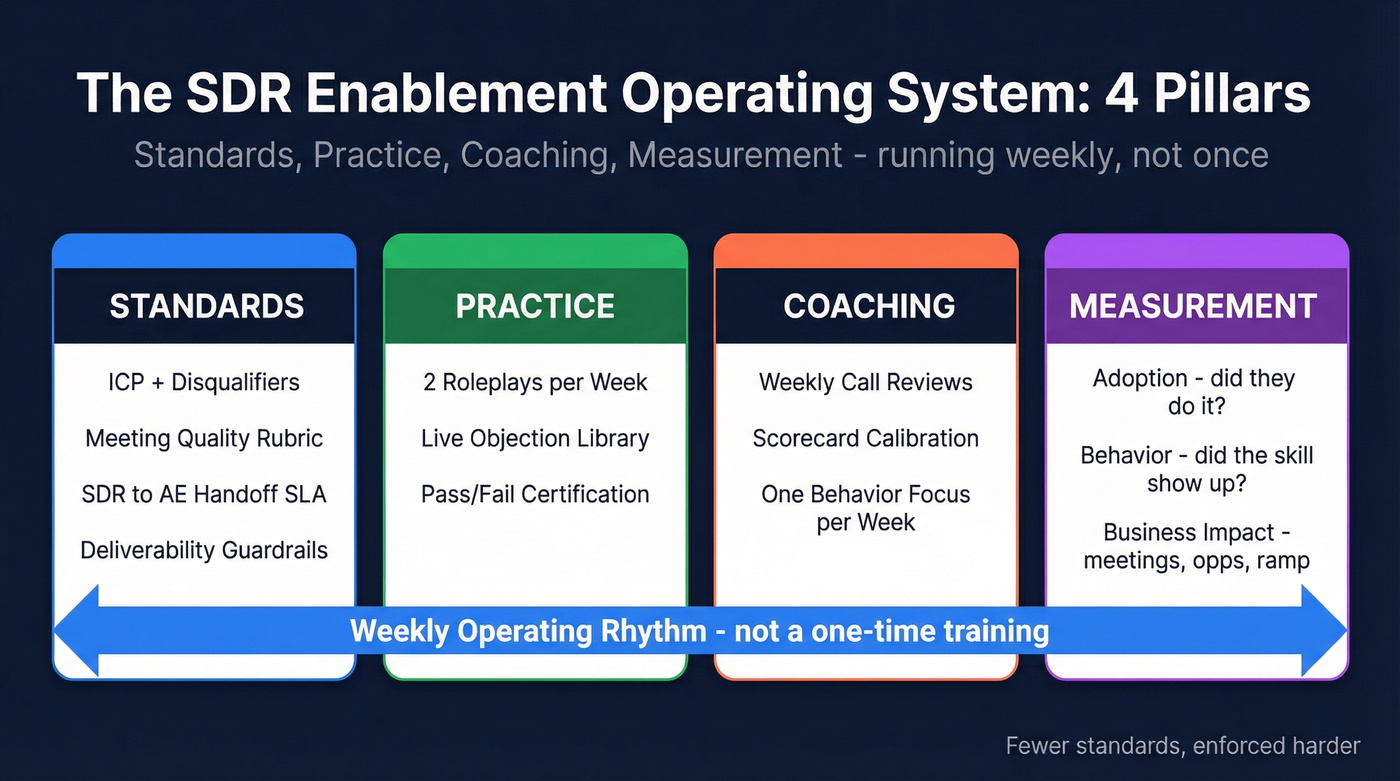

Sales development rep enablement OS: 4 pillars that create behavior change

Most enablement programs fail because they're built like a course. SDR enablement has to run like an operating system.

We've tested a bunch of versions of this with teams that were stuck in "train more" mode, and the simplest model that actually holds up week after week is a 4-pillar OS: standards, practice, coaching, measurement. It maps cleanly to how reps learn, how managers coach, and how leadership funds the work, and it gives you a place to put the unsexy stuff (like deliverability rules) so it doesn't get ignored until the domain's on fire.

Pillar 1: Standards (clarity + consistency)

Use this if: reps interpret "qualified" differently, AEs complain about meeting quality, or messaging changes every two weeks. Skip this if: you enjoy arguing about definitions in every pipeline review.

Standardize:

- ICP + disqualifiers

- Meeting-quality rubric (what must be true to book)

- SDR -> AE SLA (handoff timing, notes, fields)

- Channel rules + deliverability guardrails

Pillar 2: Practice (transfer, not theater)

Use this if: reps know the script but freeze on real objections. Skip this if: you want roleplays to feel like kindergarten (and produce zero transfer to real calls).

Practice that works:

- 2 roleplays/week per rep (one discovery, one objection)

- "Live objection library" updated weekly from real calls

- Certification roleplays with a score threshold (pass/fail)

Pillar 3: Coaching (observable behaviors)

Use this if: performance varies wildly by manager. Skip this if: you're fine with coaching being "try harder."

Coaching that sticks:

- Weekly call reviews with scorecards

- Calibration sessions so scoring's consistent

- One behavior focus per week (not 12)

Pillar 4: Measurement (budget-proofing)

Use this if: enablement gets dismissed as "training." Skip this if: you like losing budget fights.

Measure:

- Adoption (did they do the thing?)

- Behavior (did the skill show up on calls/emails?)

- Business impact (meetings held, opps created, ramp time)

Here's the thing: you don't need more content. You need fewer standards, enforced harder.

The 30/60/90 SDR enablement plan (with targets + certification gates)

This is a template you can copy into Notion/Confluence and run tomorrow. The exact numbers will vary by market and channel mix, but the gates don't change.

Ramp targets (phased quota expectation)

A simple forcing function:

- Days 1-30: 0-20%

- Days 31-60: 60-80%

- Days 61-90: 90-110%

Treat these as outcomes reps earn by passing gates, not time served.
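A rough way to encode the phased targets, assuming you track attainment as a share of full quota. `RAMP_PHASES`, `expected_quota_band`, and `behind_pace` are illustrative names, and holding the final band after day 90 is an assumption, not something this plan specifies.

```python
# (last day of phase, (min share of quota, max share of quota))
RAMP_PHASES = [
    (30, (0.00, 0.20)),
    (60, (0.60, 0.80)),
    (90, (0.90, 1.10)),
]


def expected_quota_band(day):
    """Return the (low, high) quota share expected on a given ramp day."""
    if day < 1:
        raise ValueError("ramp days start at 1")
    for last_day, band in RAMP_PHASES:
        if day <= last_day:
            return band
    return RAMP_PHASES[-1][1]  # assumption: hold the final band after day 90


def behind_pace(day, attainment):
    """True if attainment (0.75 means 75% of quota) is under the band's floor."""
    low, _ = expected_quota_band(day)
    return attainment < low


print(expected_quota_band(45), behind_pace(45, 0.50))
```

Being behind pace is a prompt to check which gate is unpassed, not a verdict on the rep.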

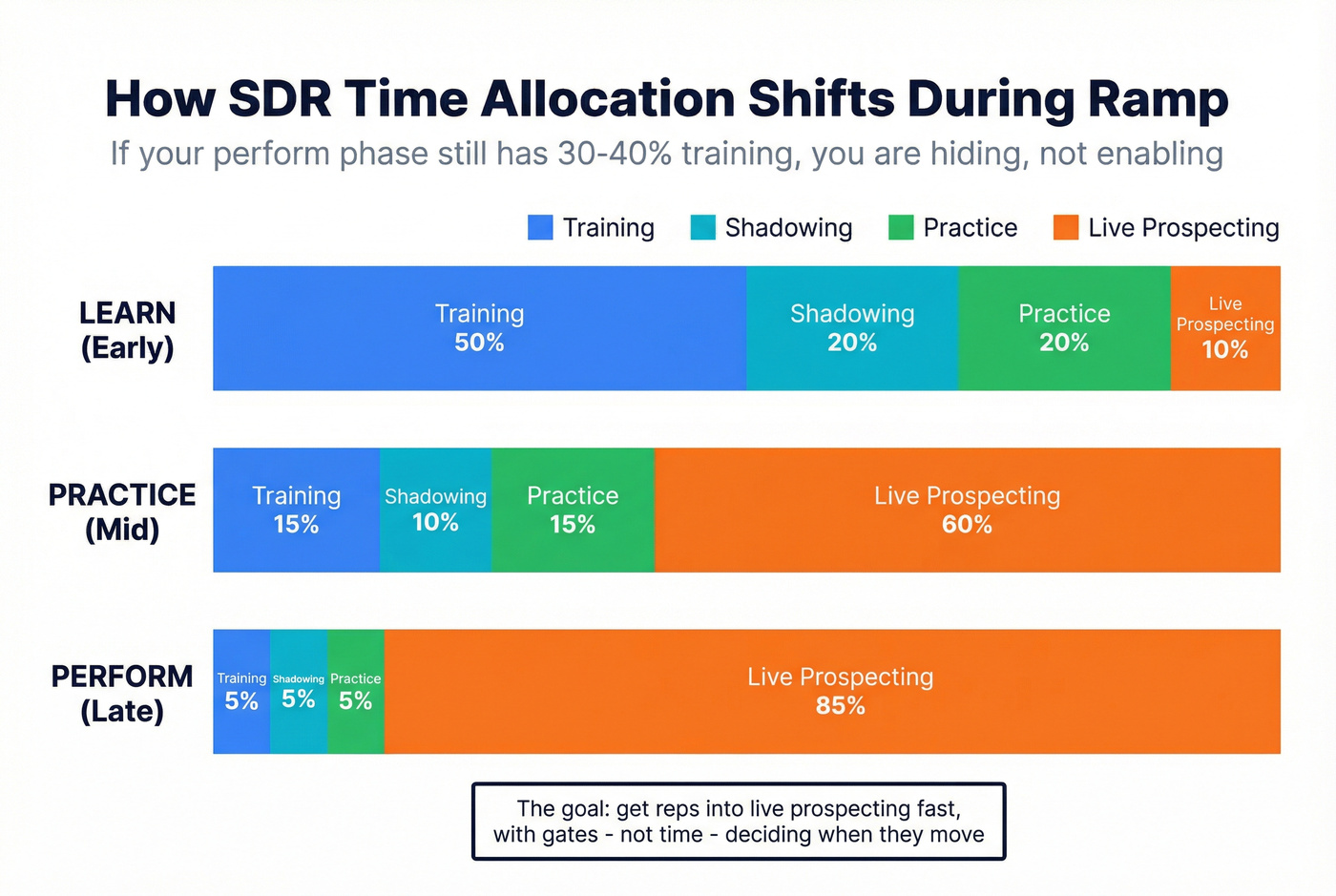

Weekly time allocation model (how ramp actually shifts)

| Phase | Training | Shadowing | Practice | Live prospecting |
|---|---|---|---|---|
| Learn (early) | 50% | 20% | 20% | 10% |
| Practice (mid) | 15% | 10% | 15% | 60% |
| Perform (late) | 5% | 5% | 5% | 85% |

If your "perform" phase still has 30-40% training, you're not enabling. You're hiding.
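To make the percentages concrete, here's a small sketch that turns the table into hours. The 40-hour week is an assumption for illustration; swap in your own number.

```python
ALLOCATION = {
    "learn": {"training": 0.50, "shadowing": 0.20, "practice": 0.20, "live_prospecting": 0.10},
    "practice": {"training": 0.15, "shadowing": 0.10, "practice": 0.15, "live_prospecting": 0.60},
    "perform": {"training": 0.05, "shadowing": 0.05, "practice": 0.05, "live_prospecting": 0.85},
}


def weekly_hours(phase, hours_per_week=40):
    """Convert a phase's percentage split into hours (40h week is assumed)."""
    shares = ALLOCATION[phase]
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError(f"{phase} shares must sum to 100%")
    return {name: round(share * hours_per_week, 1) for name, share in shares.items()}


for phase in ALLOCATION:
    print(phase, weekly_hours(phase))
```

On that assumption, a rep in the perform phase should be spending about 34 hours a week on live prospecting and 2 on training.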

Certification gates (tight checklists, not walls of text)

Gate A - ICP + messaging

- Pass ICP quiz (industries, titles, triggers, disqualifiers)

- Deliver a 60-second pitch + 2 persona variants

- Write 3 outbound angles (pain, trigger, proof)

Gate B - Tools + hygiene

- CRM required fields + activity logging test

- Sequencer rules (steps, opt-outs, personalization minimum)

- Calendar/routing: accept, reassign, document

Gate C - Call skills

- Roleplay score above threshold (scorecard below)

- Handle 5 core objections (2 responses each)

- Close with a clear next step + calendar lock

Gate D - Meeting quality

- Notes include pain hypothesis + trigger + stakeholders + next step

- Meets SDR -> AE SLA timing and completeness

- AE-ready agenda sent before handoff

Gate E - Deliverability + data

- Bounce/complaint thresholds memorized + enforced

- List build rules (verification required; refresh windows)

- "Stop send" conditions understood (pause triggers)

Optional (add later, not day one): persona-specific talk tracks, competitive traps, multi-threading plays.

Week-by-week plan (sample operating plan)

Weeks 0-1 (preboarding + week one): remove friction

Goal: week two is real prospecting, not admin.

| Week | Outcomes | Activities | Certification |
|---|---|---|---|
| Preboard | Access + context | Accounts, tools, territories | N/A |
| Week 1 | ICP + pitch v1 | Shadow 5 calls, write 5 emails | Gate A |

Weeks 2-4 (Days 1-30): produce signals

Goal: first meetings, not perfect meetings.

| Week | Outcomes | Sample activity targets | Certification |
|---|---|---|---|
| Week 2 | First connects | 40 calls/day (assisted) | Gate B |
| Week 3 | First meeting attempts | 40 calls/day + emails | Gate C |
| Week 4 | 1-2 meetings/week | 40 calls/day | Gate E |

Directional targets:

- Contact rate: 15-20%

- Conversations: 6-8/day

- Meetings: 1-2/week by weeks 3-4

If week 4 has zero meetings, don't "motivate." Diagnose: list quality, talk track, or activity consistency.
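The directional targets hang together arithmetically: 40 calls/day at a 15-20% contact rate is where the 6-8 conversations/day comes from. A quick sketch of that math, with a hypothetical helper name, so you can plug in your own dial volume:

```python
def conversations_per_day(calls_per_day, contact_rate):
    """Expected daily conversations = dials x contact rate."""
    return round(calls_per_day * contact_rate, 1)


low = conversations_per_day(40, 0.15)
high = conversations_per_day(40, 0.20)
print(f"{low}-{high} conversations/day")
```

If a rep is dialing 40/day and logging 2 conversations, the gap is contact rate (list quality, call timing), not effort.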

Weeks 5-8 (Days 31-60): consistency under volume

Goal: repeatable meeting production without quality collapse.

| Week | Outcomes | Sample activity targets | Certification |
|---|---|---|---|
| Week 5 | 2-3 meetings/week | 500 calls/week | Gate D |
| Week 6 | 6-8 meetings/week | 500 calls/week | Re-cert Gate C |
| Week 7 | Stable show rate | 80+ touches/day | QA on 5 calls |
| Week 8 | 60-80% quota | 80-100 touches/day | SLA compliance |

This is where most teams break: they push volume and stop enforcing standards.

I've seen this exact failure mode play out: week 6 looks "busy," dashboards look great, and then AEs start rejecting meetings because the notes are thin, the persona's wrong, and nobody can explain why the prospect agreed to show up. The SDRs get blamed, the manager says "we need better messaging," and the real culprit is that Gate D never got enforced once volume ramped.
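One cheap guard against that failure mode is a weekly check for gates that should have been passed by now. A minimal sketch, assuming the gate-to-week mapping from the sample plan in this guide; `GATE_DUE_WEEK` and `overdue_gates` are illustrative names.

```python
# Week by which each gate is certified in the sample plan in this guide.
GATE_DUE_WEEK = {"A": 1, "B": 2, "C": 3, "E": 4, "D": 5}


def overdue_gates(current_week, passed):
    """Return gates that were due by current_week but are not yet passed."""
    return sorted(
        gate
        for gate, due in GATE_DUE_WEEK.items()
        if due <= current_week and gate not in passed
    )


# A week-6 rep who never certified on meeting quality:
print(overdue_gates(6, passed={"A", "B", "C", "E"}))  # ['D']
```

Run it in the weekly gate review; an overdue Gate D at week 6 is exactly the case described above.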

Weeks 9-13 (Days 61-90): full production + optimization

Goal: full output with quality control.

| Week | Outcomes | Sample activity targets | Certification |
|---|---|---|---|
| Week 9 | 90%+ quota pace | 100 calls/day | Monthly cert |
| Week 10 | 12-15 meetings/week | 100 calls/day | QA + coaching |
| Week 11 | 70-85% show rate | Mix channels | SLA audit |
| Week 12-13 | 90-110% quota | Optimize blocks | Final sign-off |

Real talk: if you're pushing 12-15 meetings/week, your data hygiene has to be elite, or you'll train reps to spray and pray.

Coaching that sticks: scorecards, calibration, and weekly rituals

The fastest way to improve SDR output is to stop coaching "effort" and start coaching observable behaviors.

Weekly coaching rituals (simple, repeatable)

- Monday: activity plan + target list review (15 minutes per rep)

- Midweek: 1:1 call review (30 minutes)

- Friday: team calibration (30-45 minutes, 5 calls total)

- Weekly roleplay block: 30 minutes, one scenario, scored

Calibration's the move. It forces managers to agree on what "good" sounds like, which stops coaching whiplash.
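If you want calibration to produce an agenda instead of a debate, compare how different managers scored the same call and surface only the lines where they disagree. A rough sketch: the data shape and the `max_spread=1` cutoff are assumptions, chosen so that on a 0-2 scale only a 0-vs-2 split gets flagged.

```python
def calibration_gaps(scores_by_manager, max_spread=1):
    """Find rubric lines where managers' scores for ONE call differ too much.

    scores_by_manager: {manager_name: {rubric_line: score}}
    """
    lines = set().union(*(s.keys() for s in scores_by_manager.values()))
    gaps = {}
    for line in sorted(lines):
        values = [s[line] for s in scores_by_manager.values() if line in s]
        spread = max(values) - min(values)
        if spread > max_spread:
            gaps[line] = spread
    return gaps


scores = {
    "manager_1": {"opener": 2, "problem": 1, "close": 1},
    "manager_2": {"opener": 0, "problem": 2, "close": 1},
}
print(calibration_gaps(scores))  # {'opener': 2}
```

Spend the Friday session on the flagged lines only; the rest is already calibrated.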

Cold call scorecard structure (use this breakdown)

- Opener (first 5 seconds)

- Problem statement (next 30 seconds)

- Conversation (1-8 minutes)

- Close (final 30-60 seconds)

Example rubric (0-2 scale per line)

Opener (5s)

- Pattern interrupt + permission-based ask

- Clear reason for call (not a biography)

Problem (30s)

- Specific pain hypothesis (not generic)

- Proof point or trigger (why now)

Conversation (1-8m)

- Asks 2-3 good questions

- Shows listening (mirrors/labels)

- Handles 1 objection without spiraling

- Keeps control (no pitch-dump)

Close (30-60s)

- Clear next step + timebox

- Confirms stakeholders + agenda

- Sends invite while on the phone
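Here's one way to turn that rubric into a number, as a sketch rather than a finished scoring tool. The line keys are shorthand invented for this example, and how you set the pass threshold is up to you.

```python
RUBRIC = {
    "opener": ["pattern_interrupt", "clear_reason"],
    "problem": ["pain_hypothesis", "proof_or_trigger"],
    "conversation": ["questions", "listening", "objection", "control"],
    "close": ["next_step", "stakeholders_agenda", "invite_sent"],
}
MAX_POINTS = 2  # each line is scored 0, 1, or 2


def score_call(marks):
    """marks maps rubric line -> 0/1/2; unscored lines count as 0."""
    result = {}
    earned_total = possible_total = 0
    for section, lines in RUBRIC.items():
        earned = 0
        for line in lines:
            mark = marks.get(line, 0)
            if mark not in (0, 1, 2):
                raise ValueError(f"{line}: score must be 0, 1, or 2")
            earned += mark
        possible = len(lines) * MAX_POINTS
        result[section] = earned / possible
        earned_total += earned
        possible_total += possible
    result["overall"] = earned_total / possible_total
    return result


perfect = {line: 2 for lines in RUBRIC.values() for line in lines}
print(score_call(perfect)["overall"])  # 1.0
```

Per-section shares matter more than the overall number: they tell you which single behavior to coach this week.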

The call-length reality check (a useful heuristic)

A heuristic that circulates in outbound coaching circles, usually attributed to Gong-style call analysis: calls under a minute don't convert, calls around five minutes convert far better, and calls of 10+ minutes convert best. Don't celebrate "more dials." Celebrate staying in the conversation long enough to earn a next step.

"Meeting quality" standard (mini spec you can enforce)

A meeting counts only if:

- Persona matches ICP (or documented exception)

- Pain hypothesis is explicit in notes

- Trigger is captured (event, change, initiative)

- Next step is agreed (not "learn more")

- AE prep notes are complete within SLA

If AEs complain about calendar spam, this spec fixes it fast.
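The spec is simple enough to check mechanically before a meeting counts toward quota. A minimal sketch, assuming a meeting record is a plain dict; every field name here is hypothetical and would map to whatever your CRM calls them.

```python
VAGUE_NEXT_STEPS = {"", "learn more"}


def quality_failures(meeting):
    """Return the parts of the meeting-quality spec this record fails."""
    failures = []
    if not (meeting.get("persona_matches_icp") or meeting.get("icp_exception_note")):
        failures.append("persona")
    if not meeting.get("pain_hypothesis", "").strip():
        failures.append("pain hypothesis")
    if not meeting.get("trigger", "").strip():
        failures.append("trigger")
    if meeting.get("next_step", "").strip().lower() in VAGUE_NEXT_STEPS:
        failures.append("next step")
    if not meeting.get("ae_notes_within_sla"):
        failures.append("AE prep notes")
    return failures


thin = {"persona_matches_icp": True, "pain_hypothesis": " ", "next_step": "Learn more"}
print(quality_failures(thin))
```

A meeting counts only when the list comes back empty.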

Data + deliverability enablement (non-negotiable in 2026)

In 2026, deliverability is enablement. If inbox placement drops, SDRs don't need "better messaging." They need you to stop poisoning the domain.

The benchmarks you should run your program against

- Global inbox placement: 84% (meaning 1 in 6 emails doesn't hit inbox)

- Microsoft inbox placement baseline: 75.6% (and it's the most fragile)

- Gmail complaint sensitivity: 0.3% (cross it and you'll feel it fast)

- Bounce thresholds: <2% healthy / >5% danger

- Data decay: ~30% of workers change jobs annually -> refresh every 30-90 days (see contact data decay)
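To see why the refresh window is 30-90 days and not "annually," here's a back-of-envelope sketch. It assumes job changes happen at a constant rate through the year, which is a simplification, and it estimates stale contacts, not bounces (not every job change kills the mailbox right away).

```python
ANNUAL_JOB_CHANGE_RATE = 0.30  # the ~30% figure from the benchmark above


def stale_fraction(days_since_refresh, annual_rate=ANNUAL_JOB_CHANGE_RATE):
    """Share of a list expected to be stale, assuming constant-rate decay."""
    return 1 - (1 - annual_rate) ** (days_since_refresh / 365)


for days in (30, 90, 180):
    print(f"{days} days: {stale_fraction(days):.1%} stale")
```

Under that assumption, a list is roughly 3% stale at 30 days and 8% at 90, which is why an unrefreshed list eats through a 2% bounce budget so quickly.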

Authentication minimums (don't skip this)

If your outbound domains aren't authenticated, you're donating money to spam filters.

Minimums:

- SPF configured correctly (one record, no permerror)

- DKIM enabled and aligned

- DMARC live with enforcement: start at p=quarantine, move to p=reject once stable (use this SPF DKIM & DMARC guide)

New domains also face stricter scrutiny. Warm up gradually, keep early copy conservative, and don't spike volume.
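A quick way to audit the DMARC part is to read the TXT record published at `_dmarc.<your domain>` and confirm the policy is actually enforcing. The sketch below only parses a record string you've already fetched; the sample records use the placeholder domain `example.com`, and it doesn't validate SPF or DKIM alignment.

```python
def parse_dmarc(txt_record):
    """Split a DMARC TXT record into a {tag: value} dict."""
    tags = {}
    for part in txt_record.split(";"):
        if "=" in part:
            key, value = part.split("=", 1)
            tags[key.strip().lower()] = value.strip()
    return tags


def is_enforcing(txt_record):
    """True if the record is DMARC1 with p=quarantine or p=reject."""
    tags = parse_dmarc(txt_record)
    return tags.get("v") == "DMARC1" and tags.get("p", "").lower() in {"quarantine", "reject"}


print(is_enforcing("v=DMARC1; p=none; rua=mailto:dmarc@example.com"))        # False
print(is_enforcing("v=DMARC1; p=quarantine; rua=mailto:dmarc@example.com"))  # True
```

A record with `p=none` is monitoring only, so it doesn't meet the enforcement minimum above.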

Guardrails (what SDRs are allowed to do)

List rules

- No sending to unverified emails.

- No list reuse past 90 days without refresh.

- No importing contacts without dedupe + required fields.

Sending rules

- Ramp volume slowly on new domains.

- Keep complaint rate well under 0.3% operationally; aim for 0.1% or lower.

- Pause sequences if bounces spike above 2% on any segment.

Quality rules

- Personalization isn't optional when you're targeting small lists.

- Sequence norms: 4-7 emails over 14-21 days (don't stretch to 45 days and call it "nurture") - see SDR cadence best practices
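The list rules are the easiest guardrails to enforce in code, before a contact ever reaches the sequencer. A minimal sketch, assuming each contact carries a verification status and the date it was last verified; the field names and sample contacts are hypothetical.

```python
from datetime import date

MAX_AGE_DAYS = 90  # no list reuse past 90 days without refresh


def sendable(contacts, today):
    """Keep contacts that are verified, fresh, and not duplicates."""
    seen = set()
    keep = []
    for contact in contacts:
        email = contact["email"].strip().lower()
        if email in seen:
            continue  # dedupe on normalized email; first occurrence wins
        seen.add(email)
        fresh = (today - contact["verified_on"]).days <= MAX_AGE_DAYS
        if contact["status"] == "verified" and fresh:
            keep.append(contact)
    return keep


contacts = [
    {"email": "a@example.com", "status": "verified", "verified_on": date(2026, 2, 1)},
    {"email": "A@example.com", "status": "verified", "verified_on": date(2026, 2, 1)},
    {"email": "b@example.com", "status": "catch-all", "verified_on": date(2026, 2, 1)},
    {"email": "c@example.com", "status": "verified", "verified_on": date(2025, 10, 1)},
]
print([c["email"] for c in sendable(contacts, today=date(2026, 3, 1))])
```

Catch-all addresses are excluded here to be conservative; whether you send to them is a policy call this guide treats as a risk to remove.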

If this, then that (deliverability incident playbook)

- If bounce rate hits 2-5%: stop new sends to that segment -> re-verify -> remove catch-all risk -> refresh contacts.

- If bounce rate goes >5%: pause outbound from that domain -> audit list source + enrichment -> only resume with verified contacts.

- If Microsoft replies drop suddenly: assume inboxing fell -> reduce volume -> tighten targeting -> clean lists.

- If complaint rate approaches 0.3%: rewrite copy (less hype, fewer links) -> tighten ICP -> reduce volume.
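The bounce and complaint branches of that playbook reduce to a few comparisons. Here's a sketch with rates expressed as fractions; the 0.25% complaint warning level is an assumption standing in for "approaches 0.3%," and the Microsoft reply-drop rule is left out because it needs per-provider reply data.

```python
def incident_actions(bounce_rate, complaint_rate, complaint_warn=0.0025):
    """Map bounce/complaint rates (as fractions) to playbook actions."""
    actions = []
    if bounce_rate > 0.05:
        actions.append("pause outbound from domain")
    elif bounce_rate >= 0.02:
        actions.append("stop new sends to segment and re-verify")
    if complaint_rate >= complaint_warn:  # assumed warning level below 0.3%
        actions.append("rewrite copy, tighten ICP, reduce volume")
    return actions


print(incident_actions(bounce_rate=0.03, complaint_rate=0.001))
print(incident_actions(bounce_rate=0.06, complaint_rate=0.003))
```

Run it per segment and per sending domain, since the playbook treats a 2-5% bounce rate as a segment problem and anything over 5% as a domain problem.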

The practical fix: verified data + fast refresh

This is the unsexy lever that wins. Clean data keeps bounce under 2%, protects inbox placement, and makes every other enablement investment actually work.

Prospeo ("The B2B data platform built for accuracy") is a strong fit for teams that want self-serve verified contacts without contracts: 300M+ professional profiles, 143M+ verified emails, 125M+ verified mobile numbers, 98% email accuracy, and a 7-day refresh cycle. It also makes verification outcomes explicit (verified/invalid/catch-all), which matters because your "verified before send" rule can't be enforced if reps can't see the status. If you're comparing vendors, start with an email verifier website shortlist.

One more opinionated note: if you're buying a giant suite but your bounce rate's 6% and nobody's enforcing a stop-send rule, you're not "investing in data." You're paying for a nicer way to ignore the basics.

Governance: who owns SDR enablement (Enablement vs Ops vs SDR leadership)

Enablement fails when ownership's fuzzy. The clean split is simple: enablement equips people (skills, coaching, content); ops builds the systems (process, tooling, reporting) that make execution repeatable; SDR leadership enforces both daily.

Research from Mindtickle has pointed out that most orgs invest in enablement, and Salesforce has also made the case that sales ops is critical to growth. Translation: you need both, and they can't step on each other.

Ownership comparison (what each team should actually own)

| Area | Enablement | RevOps/Sales Ops | SDR Leadership |
|---|---|---|---|
| Skills + coaching | Owns | Supports | Enforces |
| Playbooks/scripts | Owns | Informs | Enforces |
| Tool training | Owns | Configures | Enforces |
| CRM fields/SLA | Informs | Owns | Enforces |
| Reporting/KPIs | Uses | Owns | Uses |

Minimum viable SDR enablement stack in 2026 (required vs optional)

If your stack's bloated, reps stop trusting it. Here's the minimum that actually moves meetings.

Required

- CRM (system of record): Salesforce/HubSpot are common; budget varies widely, but plan ~$25-$150/user/month depending on edition.

- Engagement/sequencing: Outreach/Salesloft are enterprise; SMB teams often run lighter tools. Budget ~$75-$200/user/month for mainstream options (see cold email outreach tools).

- Data + verification (non-negotiable): budget around ~$0.01/email for verified contacts and enforce bounce rules.

- Conversation intelligence (call recording + coaching): plan ~$80-$160/user/month depending on vendor and bundles.

- Knowledge base / LMS (where gates live): Notion/Confluence can work; formal LMS only when you've got scale. Budget ~$8-$40/user/month for KB tools; ~$20-$60/user/month for basic LMS tiers.
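For a quick budget sanity check, you can add up the per-seat ranges above. This sketch uses the knowledge-base tier rather than an LMS, and the rep count and emails-per-rep volume are made-up inputs; treat the output as a directional range, not a quote.

```python
# (low, high) USD per user per month, from the "Required" list above.
PER_SEAT = {
    "crm": (25, 150),
    "sequencing": (75, 200),
    "conversation_intelligence": (80, 160),
    "knowledge_base": (8, 40),
}
COST_PER_VERIFIED_EMAIL = 0.01


def monthly_stack_cost(reps, emails_per_rep):
    """Return (low, high) monthly cost for the required stack."""
    data = reps * emails_per_rep * COST_PER_VERIFIED_EMAIL
    low = reps * sum(lo for lo, _ in PER_SEAT.values()) + data
    high = reps * sum(hi for _, hi in PER_SEAT.values()) + data
    return round(low, 2), round(high, 2)


print(monthly_stack_cost(reps=10, emails_per_rep=1000))  # (1980.0, 5600.0)
```

Notice how small the data line is next to the seat licenses: verification is the cheapest item here and the one that protects everything else.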

Optional (buy only after you've enforced gates)

- Sales Navigator for targeted prospecting: $99/month (Core plan anchor).

- Close (if you're a small team that wants CRM + calling in one): from $49/month (entry anchor).

- Kondo (LinkedIn inbox organization for sellers): $28/month (anchor).

Don't-buy guidance: if you can't enforce a meeting-quality spec and a bounce threshold, buying "AI coaching" is just paying to automate chaos.

Proving ROI (so enablement doesn't get dismissed as "training")

If you can't prove ROI, enablement becomes the first budget line cut.

The clean model is: adoption -> behavior -> business impact. It works because it forces you to connect learning to outcomes, and it matches the reality that a lot of enablement teams still struggle to measure value in a way leadership trusts.

Level 1: Adoption (leading indicators)

- Onboarding completion

- Certification pass rates

- Call review participation

- Playbook usage (scripts, battlecards)

Level 2: Behavior (the real leading indicators)

- Scorecard improvements (opener, problem, close)

- % meetings meeting the quality spec

- SLA compliance rate (handoff notes on time)

- Deliverability compliance (bounce under 2%)

Level 3: Business impact (lagging indicators)

- Meetings held (not just booked)

- Show rate

- Opportunities created

- Ramp time (days to first meeting, days to quota)

- Meeting -> opp conversion (target 30-50%)

2026 reality: AI + consolidation (use it to enforce standards)

Enablement teams are consolidating tools and using AI more, but the only AI that matters is the kind that reinforces your cadence: call reviews, certifications, QA, SLA checks. If you're rolling AI into outbound, use a rubric-first approach like this AI in sales cadences playbook.

My rule: if it doesn't make standards easier to enforce, it's a distraction with a budget line.

What to do next (next 7 days, no excuses)

- Write your gates on one page (A-E) and schedule a weekly gate review. If it isn't visible, it isn't real.

- Run one calibration meeting with five calls and a single scorecard. You'll fix more in 45 minutes than in a month of trainings.

- Stand up a deliverability dashboard: bounce %, complaint %, and a simple "pause conditions" checklist. Then enforce it, because inbox placement is revenue. If you need a deeper SOP, start with an email verification list workflow.

FAQ

What's the difference between SDR enablement and sales enablement?

SDR enablement is the outbound-specific part of sales enablement: prospecting standards, talk tracks, sequencing rules, meeting quality, and SDR -> AE handoffs. Sales enablement is broader (AEs, renewals, partners). For SDRs, weekly coaching, strict certification gates, and deliverability guardrails matter most because outbound breaks fast.

What SDR ramp time should we plan for in 2026?

Plan around ~3.2 months as a common ramp anchor, but manage to two numbers: time to first meeting (target <=21 days) and meeting-to-opp conversion (target 30-50%). If reps "finish onboarding" but don't book early meetings, your gates are too loose, your list is stale, or coaching isn't happening weekly.

What deliverability metrics should SDR teams monitor weekly?

Monitor bounce rate, complaint rate, and inbox-risk signals weekly because they predict outbound performance faster than reply rate. Keep bounce under 2% and treat anything above 5% as a stop-send event. Keep complaints well under 0.3%, ideally at 0.1% or lower; Gmail gets sensitive around 0.3%, and Microsoft inboxing is typically the most fragile.

What's a good free tool to keep SDR contact data verified?

Prospeo includes a free tier (75 email credits plus 100 Chrome extension credits per month) and shows verification status clearly (verified/invalid/catch-all), which makes it easier to enforce "verified before send" without turning it into a manager policing exercise.

Summary

Sales development rep enablement works when it's run like an operating system: clear standards, weekly practice, scorecarded coaching, and measurement tied to meetings held and opp creation.

If you do nothing else in 2026, enforce certification gates and treat deliverability + verified data as part of the program, because clean lists and consistent coaching beat "more activity" every time.