What Is Contextual Data? A Practical Definition + How to Use It

Finding "the number" is easy. Figuring out what that number means right now - for this customer, in this situation - is where decisions get made. Contextual data is the layer that turns a dashboard into an action you can defend.

Here's the thing: context isn't decoration.

It's the difference between "interesting" and "do this next."

What is contextual data (and why it changes decisions)

Contextual data is the surrounding information that changes how you interpret a data point and what you should do next.

A widely shared definition (often attributed to Gartner via secondary write-ups) describes contextual data as "any relevant facts from the environment." That's broad on purpose. "Environment" can mean the customer's last interaction, a supply chain delay, a policy change, a competitor launch, or a macro shift that changes demand.

Practical definition (2 sentences): Contextual data is decision-relevant information that sits around an event or entity and changes its meaning. It only counts as "context" if it's tied to a specific moment and can be joined back to the thing you're deciding about.

Non-negotiable rule: if it isn't timestamped and joinable, don't call it contextual data.

Context decays. A "high intent" signal from six weeks ago doesn't just get weaker; it pushes you into confident, wrong actions. I've seen teams build gorgeous scoring models that quietly rot because they treat context like a static attribute instead of a time-bound signal, and nobody notices until pipeline quality drops and everyone starts blaming the algorithm.

Freshness isn't a nice-to-have; it's part of the definition. Late context isn't contextual decisioning - it's historical analysis wearing a real-time costume.

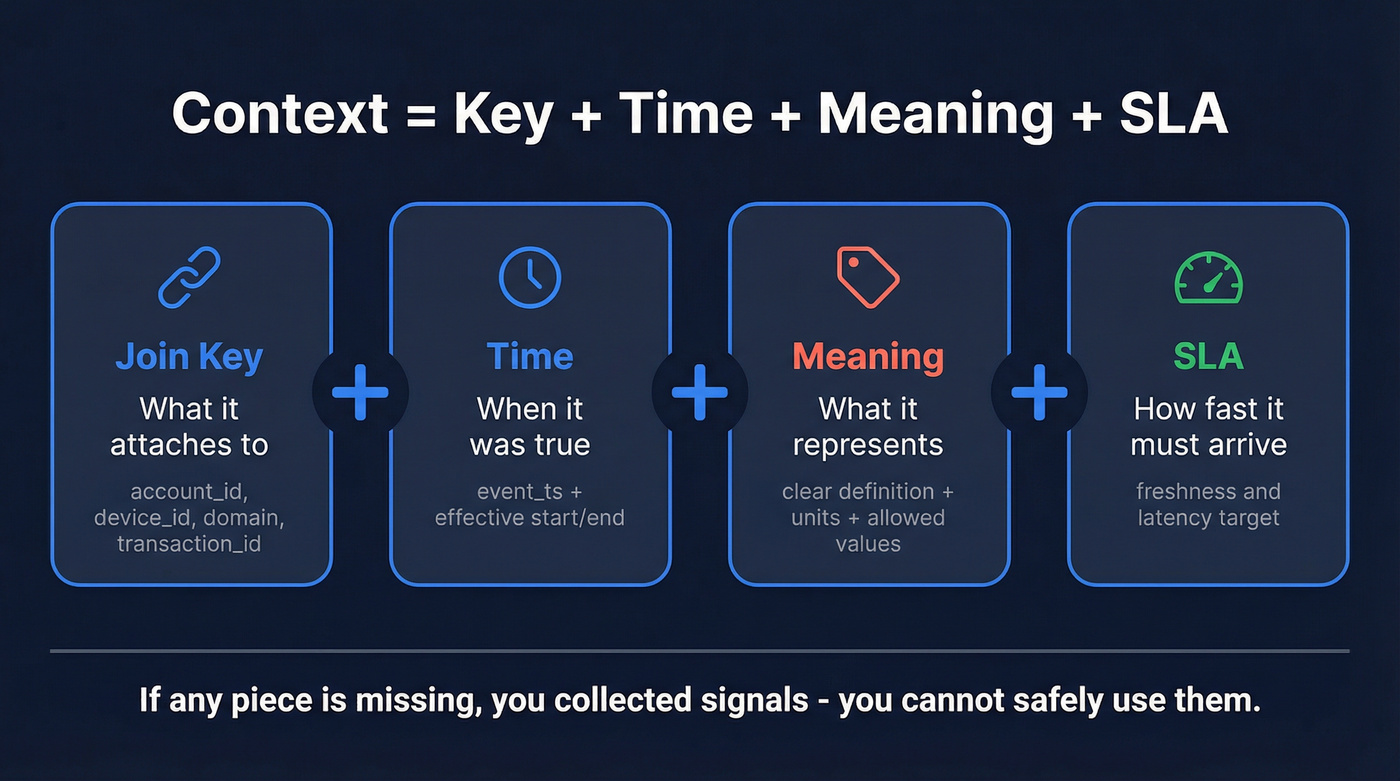

The 4-part framework: Context = Key + Time + Meaning + SLA

If you remember one thing, remember this:

- Join key: what it attaches to (account_id, device_id, domain, transaction_id)

- Time: when it was true (event_ts + effective start/end)

- Meaning: what it represents (clear definition + units + allowed values)

- SLA: how fast it must arrive (freshness/latency target)
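Here is a minimal Python sketch that puts all four parts into one record. It assumes a single numeric signal per row. ContextSignal, its field names, and is_usable are hypothetical names for illustration, not a standard API. The check rejects a signal that:

- has no key
- arrived after the decision
- missed its SLA
- wasn't in effect at decision time

"Meaning" is carried as signal_type plus unit; the code doesn't validate it.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class ContextSignal:
    # Key: what the signal attaches to
    entity_type: str                   # e.g. "account", "device"
    entity_id: str                     # e.g. an account_id
    # Time: when it was observed and when it was true
    event_ts: datetime
    effective_start: datetime
    effective_end: Optional[datetime]  # None means "still true"
    # Meaning: what the value represents
    signal_type: str                   # e.g. "intent_score"
    value: float
    unit: str                          # e.g. "score_0_100"
    # SLA: maximum allowed delay between event_ts and ingestion
    max_lag: timedelta


def is_usable(signal: ContextSignal, ingested_at: datetime, decision_ts: datetime) -> bool:
    """True only if the signal is keyed, arrived on time, and is in effect at decision_ts."""
    has_key = bool(signal.entity_id)
    arrived_before_decision = ingested_at <= decision_ts
    within_sla = ingested_at - signal.event_ts <= signal.max_lag
    in_effect = signal.effective_start <= decision_ts and (
        signal.effective_end is None or decision_ts < signal.effective_end
    )
    return has_key and arrived_before_decision and within_sla and in_effect


t0 = datetime(2026, 1, 5, 12, 0, tzinfo=timezone.utc)
sig = ContextSignal("account", "acct_42", t0, t0, t0 + timedelta(days=14),
                    "intent_score", 81.0, "score_0_100", timedelta(hours=6))
print(is_usable(sig, ingested_at=t0 + timedelta(hours=2), decision_ts=t0 + timedelta(days=1)))   # True
print(is_usable(sig, ingested_at=t0 + timedelta(hours=2), decision_ts=t0 + timedelta(days=30)))  # False (expired)
print(is_usable(sig, ingested_at=t0 + timedelta(hours=9), decision_ts=t0 + timedelta(days=1)))   # False (SLA missed)
```

The same signal can be usable on day 1 and unusable on day 30. That's the whole point of treating time and SLA as part of the definition.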

You'll see this show up again in modeling and quality. It's the difference between "we collected signals" and "we can safely use them."

What you need (quick version)

If you do only three things this week, do these:

- Write a taxonomy you can enforce. Decide what counts as contextual data (signals around events) vs metadata (about data) vs master data (core entities) vs reference data (controlled values). If you can't name it, you can't govern it.

- Define join keys + time rules (point-in-time correctness). Every contextual signal needs: (1) an entity key, (2) an event timestamp, and (3) an "as-of" join rule (what was true at the time of the event). No timestamp = no context.

- Install quality gates for timeliness, interpretability, reliability. Timeliness prevents stale context. Interpretability prevents mystery features. Reliability prevents one flaky source from hijacking decisions.

Most "contextual data projects" fail because teams collect signals first and ask governance questions later. Flip that order and you move faster.

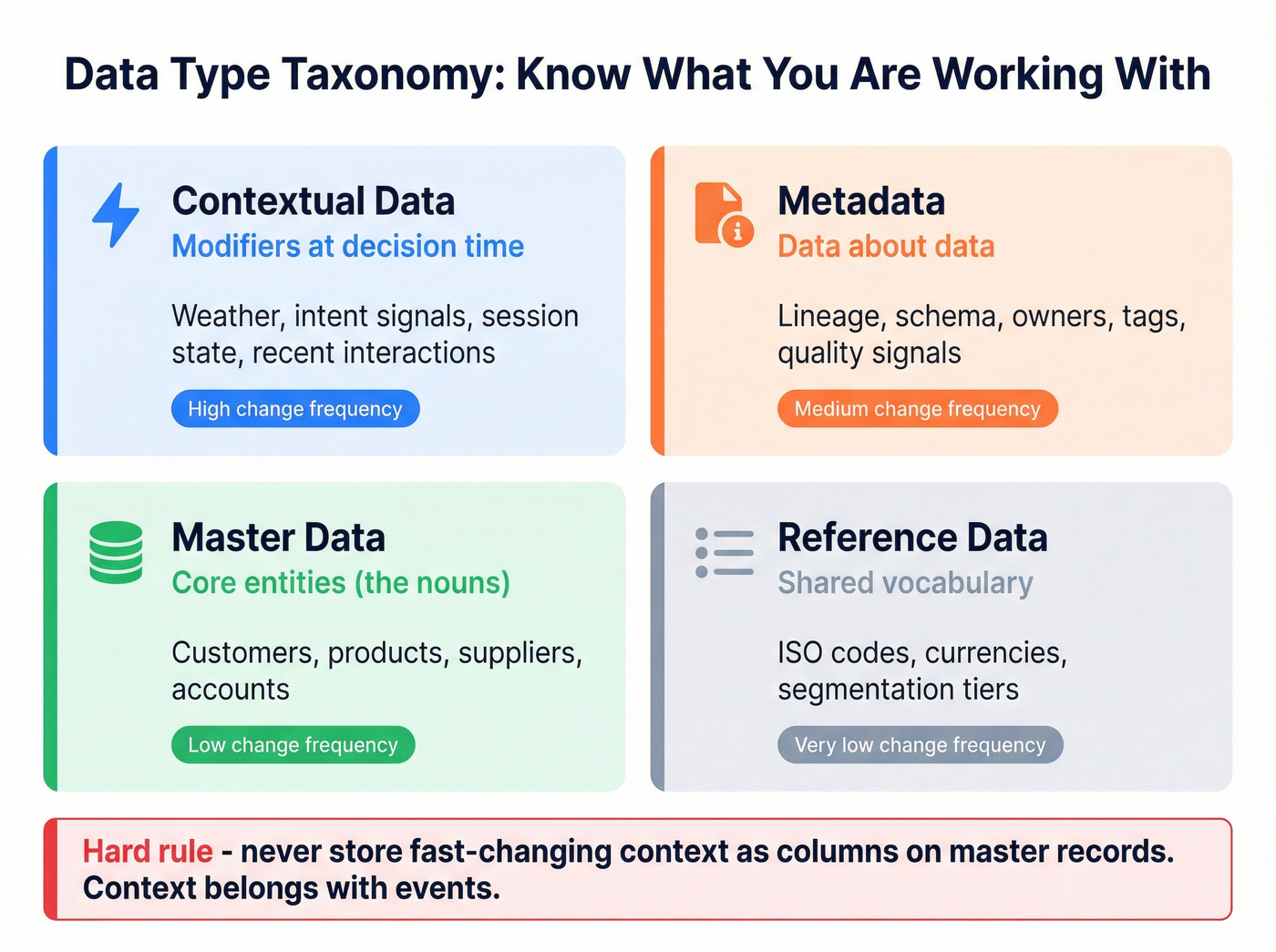

Contextual data vs metadata vs master data vs reference data

People mix these up, then wonder why joins break and metrics don't reconcile. Use this table as your internal language.

| Data type | What it is | Examples | Change frequency |
|---|---|---|---|
| Contextual data | Facts that change decisions | weather, intent, session state | High (time-bound) |
| Metadata | Data about data | lineage, owner, schema, tags | Medium |
| Master data | Core entities | customers, products, suppliers | Low |
| Reference data | Controlled values | ISO codes, currency codes | Very low |

How to interpret the taxonomy (without turning it into a philosophy debate)

- Master data = nouns. Stable entities you transact on: Account, Contact, Product, Vendor, Asset. Keep it clean and slow-changing.

- Reference data = shared vocabulary. Country codes, currencies, segmentation tiers, transaction codes. This is where standardization pays for itself; "US / U.S. / United States" shouldn't become three different countries in your warehouse.

- Metadata = trust layer. Definitions, owners, lineage, and quality signals. Metadata is what keeps contextual data from becoming folklore.

- Contextual data = modifiers at decision time. It's the "adjectives and adverbs" that change meaning now: a $100,000 payment is just a number; a $100,000 payment to a new counterparty from a high-risk country right after a password reset is a different story.

One hard stance: never store fast-changing context as columns on master records. Context belongs with events (or time-bounded facts), or you'll lose point-in-time correctness and spend months reconciling "why the model worked last quarter."
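Here's what that stance looks like in code. This is a minimal sketch that assumes one risk tier per account and changes that arrive in order. RiskTierFact, record_change, and tier_as_of are hypothetical names. Instead of overwriting a "current risk tier" column, each change closes the previous interval and appends a new fact. That way you can still ask what was true on any past date.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class RiskTierFact:
    account_id: str
    risk_tier: str
    effective_start: datetime
    effective_end: Optional[datetime] = None  # None means "currently in effect"


def record_change(history: List[RiskTierFact], account_id: str,
                  new_tier: str, changed_at: datetime) -> List[RiskTierFact]:
    """Close the account's open fact and append a new one instead of overwriting a column."""
    updated: List[RiskTierFact] = []
    for fact in history:
        if fact.account_id == account_id and fact.effective_end is None:
            fact = replace(fact, effective_end=changed_at)  # end the previous interval
        updated.append(fact)
    updated.append(RiskTierFact(account_id, new_tier, changed_at))
    return updated


def tier_as_of(history: List[RiskTierFact], account_id: str, ts: datetime) -> Optional[str]:
    """Return the tier that was true at ts, or None if nothing was in effect."""
    for fact in history:
        if (fact.account_id == account_id and fact.effective_start <= ts
                and (fact.effective_end is None or ts < fact.effective_end)):
            return fact.risk_tier
    return None


history = record_change([], "acct_42", "low", datetime(2026, 1, 1, tzinfo=timezone.utc))
history = record_change(history, "acct_42", "high", datetime(2026, 3, 1, tzinfo=timezone.utc))
print(tier_as_of(history, "acct_42", datetime(2026, 2, 1, tzinfo=timezone.utc)))  # low
print(tier_as_of(history, "acct_42", datetime(2026, 4, 1, tzinfo=timezone.utc)))  # high
```

Out-of-order or backdated changes need extra handling, such as splitting an existing interval. This sketch deliberately skips that case.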

You just learned that contextual data without freshness is "historical analysis wearing a real-time costume." Prospeo's 7-day data refresh cycle means your prospect signals - job changes, intent surges, company growth - stay time-bound and actionable, not stale labels. 98% email accuracy, 300M+ profiles, all refreshed weekly.

Stop deciding on six-week-old context. Start with data refreshed every 7 days.

What contextual data isn't: two common confusions

Don't confuse contextual data with contextual targeting (adtech)

Use this: contextual data = relevant facts from the environment that change a decision. Skip this confusion: "contextual" in advertising often means contextual targeting - placing ads based on the content being consumed (page/topic), not the user's identity.

A clean memory hook from adtech: contextual targeting aligns to content; behavioral targeting uses identifiers and profiles (cookies, MAIDs, IP addresses) to infer who someone is.

Real talk: contextual targeting gets oversold.

A common complaint in performance marketing circles is paying premium CPMs for "contextual inventory" that doesn't beat cheaper placements once you do the math on conversion rate and CAC. Context is powerful, but unit economics still win.

Don't confuse "context" with "a segment that never expires"

Use this: context is time-bound and decays. Skip this: treating a segment label as permanent truth.

Recency is the trap: segments keep firing after the moment has passed (the classic example is travel ads following someone for weeks after they already booked). In B2B and product analytics, "in-market" flags without an expiry are just sticky labels pretending to be reality.

If you can't answer "as of when?", you don't have context - you have a label.
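One way to force the "as of when?" question is to make expiry part of the label itself. This is a minimal sketch that assumes a fixed time-to-live per flag. InMarketFlag, active_accounts, and the 14-day TTL are illustrative assumptions, not recommendations.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class InMarketFlag:
    account_id: str
    observed_at: datetime  # answers "as of when?"
    ttl: timedelta         # how long the flag may drive actions

    def is_active(self, now: datetime) -> bool:
        return self.observed_at <= now < self.observed_at + self.ttl


def active_accounts(flags: List[InMarketFlag], now: datetime) -> List[str]:
    """Only unexpired flags count; expired ones stay in history but stop firing."""
    return [f.account_id for f in flags if f.is_active(now)]


flags = [
    InMarketFlag("acct_1", datetime(2026, 1, 1, tzinfo=timezone.utc), timedelta(days=14)),
    InMarketFlag("acct_2", datetime(2026, 2, 1, tzinfo=timezone.utc), timedelta(days=14)),
]
print(active_accounts(flags, datetime(2026, 2, 10, tzinfo=timezone.utc)))  # ['acct_2']
```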

Sources of contextual data (the 3 buckets) + collection methods

A practical source taxonomy maps to how pipelines actually get built:

| Bucket | What it includes | Typical collection |
|---|---|---|
| Third-party orgs | news, weather, macro, events | APIs, feeds, scraping |
| Customers | interactions, prefs, behavior | product events, CRM, surveys |
| Things / IoT | sensors, GPS, kiosks | streaming, device telemetry |

Collection methods that actually ship

Start with what you can join and refresh reliably:

- Customer-generated context you already own: product events, support tickets, sales activities, renewals, usage-limit hits, onboarding milestones. This is high-signal and already keyed to your entities.

- CRM "operational context": meetings booked, stage changes, call outcomes, notes tags, opportunity push/pull, renewal risk flags. Treat these as events with timestamps, not as overwritten fields.

- Marketing automation triggers: email opens/clicks, form submissions, webinar attendance, nurture progression, ad-to-site handoffs. Capture the trigger and the timestamp.

- Progressive profiling: collect context over time instead of asking 20 questions on day one. Ask one new field when it's relevant (role, use case, team size, timeline), store each answer as an event, and stop re-asking what you already know. This keeps forms short and data fresh.
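Here is a minimal sketch of progressive profiling. It assumes answers arrive as events and follow a fixed ask order. ProfileAnswer, QUESTION_ORDER, latest_answers, and next_question are hypothetical names. "Ask when it's relevant" is reduced here to a static priority list; a real form would replace that with its own trigger logic.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, List, Optional

# Hypothetical ask order; in practice you'd pick the field that matters for the next decision.
QUESTION_ORDER = ["role", "use_case", "team_size", "timeline"]


@dataclass(frozen=True)
class ProfileAnswer:
    contact_id: str
    field: str
    value: str
    answered_at: datetime


def latest_answers(events: List[ProfileAnswer], contact_id: str) -> Dict[str, ProfileAnswer]:
    """Latest answer per field; older answers stay in the event log instead of being overwritten."""
    latest: Dict[str, ProfileAnswer] = {}
    for e in events:
        if e.contact_id == contact_id:
            prev = latest.get(e.field)
            if prev is None or e.answered_at > prev.answered_at:
                latest[e.field] = e
    return latest


def next_question(events: List[ProfileAnswer], contact_id: str) -> Optional[str]:
    """Ask one new field at a time and never re-ask one that's already answered."""
    known = latest_answers(events, contact_id)
    return next((f for f in QUESTION_ORDER if f not in known), None)


t = datetime(2026, 1, 5, tzinfo=timezone.utc)
events = [ProfileAnswer("c1", "role", "VP Sales", t)]
print(next_question(events, "c1"))  # use_case
```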

- Third-party macro context when it changes decisions: macro indicators are legitimate context for forecasting and risk. In Jan 2026, CPI rose 0.2% and unemployment was 4.3% - directly relevant if you model demand, churn risk, or pricing sensitivity (Bureau of Labor Statistics: https://www.bls.gov/).

- Social and news monitoring: brand mentions, executive changes, product launches, regulatory actions. Use it when it changes prioritization, not because it's "nice to have." (If you want a sales-ready system, see competitive intelligence.)

- Location and environment context: region, timezone, device type, app version, network type. This is the backbone of debugging and personalization.

Architecture opinion (and yes, I'm opinionated about this): if a contextual signal affects real-time decisions (fraud, routing, personalization), treat it like a streaming problem first and a BI problem second. Batch-first designs create stale context by default, and then teams act surprised when "real-time" features are 12 hours late.

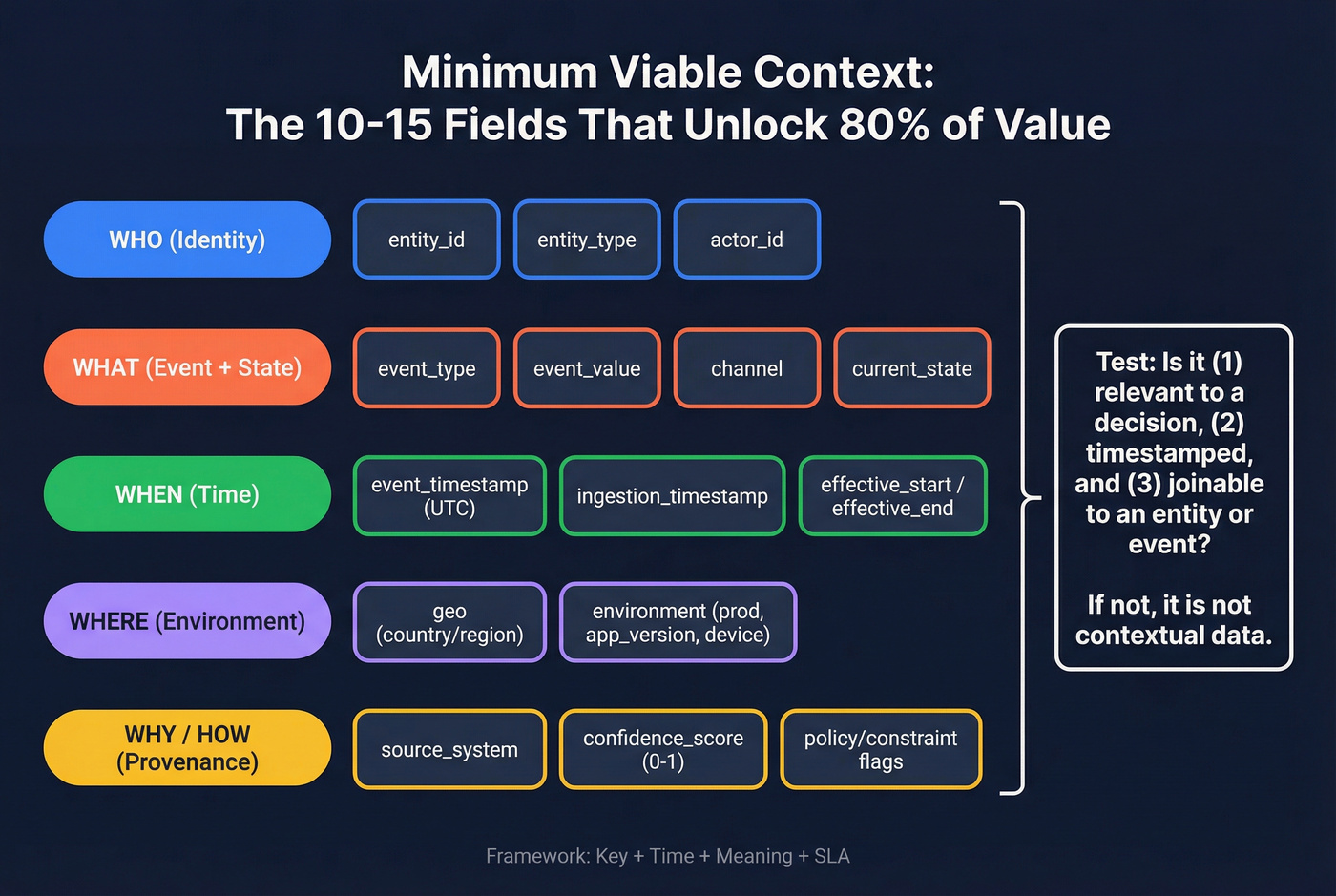

Minimum viable context (the 10-15 fields that unlock 80% of value)

You don't need 200 fields. You need the right 10-15, consistently captured.

Is this actually contextual? Test: it must be (1) relevant to a decision, (2) timestamped, and (3) joinable to an entity/event.

Who (identity)

- entity_id (customer_id / account_id / device_id)

- entity_type (customer, account, device, transaction)

- actor_id (user_id, agent_id) when applicable

What (event + state)

- event_type (purchase, login, ticket_opened)

- event_value (amount, plan, severity)

- channel (web, mobile, phone, email)

- current_state (trial, active, delinquent)

When (time)

- event_timestamp (UTC)

- ingestion_timestamp (when you received it)

- effective_start / effective_end (for time-bounded context)

Where (environment)

- geo (country/region)

- environment (prod/sandbox, app_version, device_type)

Why / How (provenance)

- source_system (CRM, app, vendor feed)

- confidence_score (0-1) or quality flag

- policy/constraint flags (GDPR region, risk tier)
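The checklist can double as a typed record that rejects rows failing the Key + Time test at write time. This is a minimal sketch using Python dataclasses. ContextRecord and its field names are hypothetical and follow the list above. Required fields come first because dataclasses need non-default fields before defaulted ones. Decision relevance can't be checked in code, so only the joinable and timestamped parts are enforced.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional, Tuple


@dataclass(frozen=True)
class ContextRecord:
    # Who: identity (the join key)
    entity_id: str
    entity_type: str
    # What: event + state
    event_type: str
    # When: time, always UTC
    event_timestamp: datetime
    ingestion_timestamp: datetime
    # Why / How: provenance
    source_system: str
    # Optional fields from the same checklist
    actor_id: Optional[str] = None
    event_value: Optional[str] = None
    channel: Optional[str] = None
    current_state: Optional[str] = None
    effective_start: Optional[datetime] = None
    effective_end: Optional[datetime] = None
    geo: Optional[str] = None
    environment: Optional[str] = None
    confidence_score: Optional[float] = None
    policy_flags: Tuple[str, ...] = ()

    def __post_init__(self) -> None:
        # Joinable: a record without a key can't be attached to anything.
        if not self.entity_id:
            raise ValueError("entity_id is required: a record without a key is not joinable")
        # Timestamped: naive datetimes return None from utcoffset() and are rejected.
        for name in ("event_timestamp", "ingestion_timestamp"):
            if getattr(self, name).utcoffset() != timedelta(0):
                raise ValueError(f"{name} must be timezone-aware UTC")
        if self.effective_start and self.effective_end and self.effective_end <= self.effective_start:
            raise ValueError("effective_end must be after effective_start")
        if self.confidence_score is not None and not 0.0 <= self.confidence_score <= 1.0:
            raise ValueError("confidence_score must be in [0, 1]")


now = datetime(2026, 1, 5, 12, 0, tzinfo=timezone.utc)
rec = ContextRecord("acct_42", "account", "login", now, now + timedelta(seconds=3),
                    "app", channel="web", confidence_score=0.9)
try:
    ContextRecord("acct_42", "account", "login", datetime(2026, 1, 5), now, "app")
except ValueError as err:
    print(err)  # event_timestamp must be timezone-aware UTC
```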

Bring the framework back: Key + Time + Meaning + SLA. The field that saves the most pain later is effective time (start/end) plus a clear "as-of" join rule. Without it, you rebuild the same pipeline twice: once for analytics, once for production.

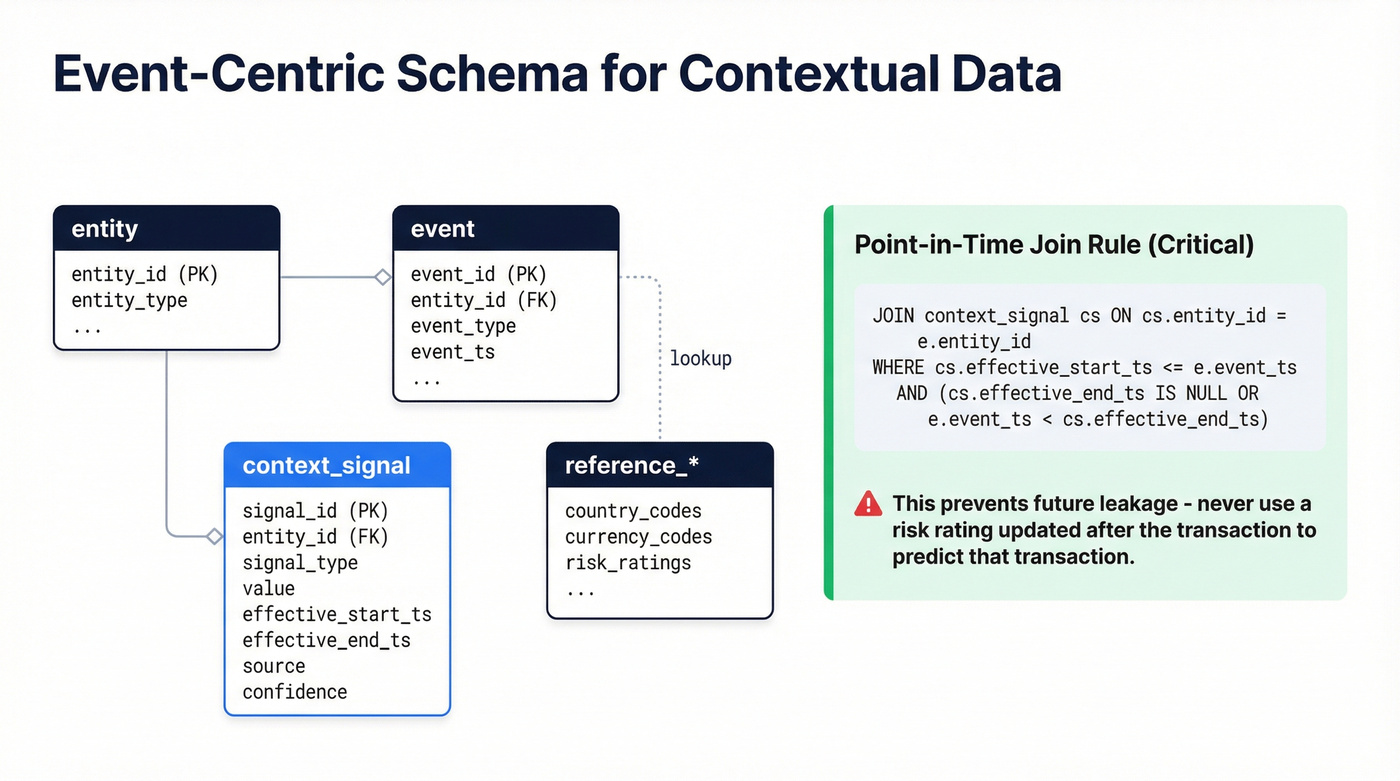

How to model contextual data so it's joinable (relational + graph starter schema)

Most contextual data problems are modeling problems wearing a data-collection costume.

Relational starter schema (event-centric)

A simple pattern that scales:

- entity(entity_id, entity_type, ...)

- event(event_id, entity_id, event_type, event_ts, ...)

- context_signal(signal_id, entity_id, signal_type, value, effective_start_ts, effective_end_ts, source, confidence)

- reference_*(country_codes, currency_codes, risk_ratings, ...)

Point-in-time join rule (critical): when analyzing an event at event_ts, join only context where:

effective_start_ts <= event_ts AND (effective_end_ts IS NULL OR event_ts < effective_end_ts)

That prevents future leakage - like using a risk rating updated after the transaction to "predict" the transaction's risk.
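The rule is easy to test end to end with Python's built-in sqlite3 module. This sketch uses trimmed-down versions of the event and context_signal tables above. It assumes timestamps are stored as ISO-8601 UTC strings in one fixed format, so string comparison matches chronological order. The sample rows are made up to show a rating that changed after a payment.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE event (
    event_id   TEXT PRIMARY KEY,
    entity_id  TEXT NOT NULL,
    event_type TEXT NOT NULL,
    event_ts   TEXT NOT NULL               -- ISO-8601 UTC, one fixed format
);
CREATE TABLE context_signal (
    signal_id          TEXT PRIMARY KEY,
    entity_id          TEXT NOT NULL,
    signal_type        TEXT NOT NULL,
    value              TEXT,
    effective_start_ts TEXT NOT NULL,
    effective_end_ts   TEXT,               -- NULL = still in effect
    source             TEXT,
    confidence         REAL
);
""")
conn.executemany("INSERT INTO event VALUES (?, ?, ?, ?)", [
    ("e1", "acct_42", "payment", "2026-02-10T09:00:00Z"),
    ("e2", "acct_42", "payment", "2026-02-20T09:00:00Z"),
])
conn.executemany("INSERT INTO context_signal VALUES (?, ?, ?, ?, ?, ?, ?, ?)", [
    ("s1", "acct_42", "risk_rating", "low", "2026-01-01T00:00:00Z", "2026-02-15T00:00:00Z", "risk_feed", 0.9),
    # Re-rated after e1: must not leak into e1's features.
    ("s2", "acct_42", "risk_rating", "high", "2026-02-15T00:00:00Z", None, "risk_feed", 0.9),
])

# Point-in-time (as-of) join: only context that was in effect at event_ts.
AS_OF_JOIN = """
SELECT e.event_id, c.signal_type, c.value
FROM event AS e
JOIN context_signal AS c
  ON c.entity_id = e.entity_id
 AND c.effective_start_ts <= e.event_ts
 AND (c.effective_end_ts IS NULL OR e.event_ts < c.effective_end_ts)
ORDER BY e.event_ts
"""
rows = conn.execute(AS_OF_JOIN).fetchall()
print(rows)  # [('e1', 'risk_rating', 'low'), ('e2', 'risk_rating', 'high')]
```

The February 10 payment picks up "low", even though the account was re-rated "high" five days later. That later rating only reaches the February 20 payment.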

Do / Don't (the modeling rules that prevent rework)

Do

- Store context as time-bounded facts (effective start/end).

- Keep raw events immutable; add corrections as new events.

- Track ingestion lag (p50/p95) per source so you know what's safe to use.

Don't

- Don't overwrite context in place ("current risk tier") and expect audits to work.

- Don't build features in one code path and serve them in another. That's how offline/online skew happens, and production performance degrades in ways that are brutal to debug because the numbers look "close enough" until they don't.

Graph starter schema (when relationships are the context)

Graphs shine when the context is the network: counterparties, shared devices, shared domains, shared addresses, shared vendors.

A clean ontology starter:

- Nodes: Account, Person, Transaction, Country, Device

- Edges: MADE, SENT_TO, LOCATED_IN, USED_DEVICE, EMPLOYED_BY

- Rule: every Transaction must have exactly one SENT_TO counterparty

Traversal query pattern: "Find transactions where the sender is connected within 2 hops to a watchlisted entity."

The win here is path evidence: context you can show to humans, not just feed to a model.
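Here is a minimal sketch of the 2-hop traversal. It assumes a small in-memory graph with undirected edges; a real graph store would keep direction and run this as a query. add_edge, path_to_watchlist, and the node names are hypothetical. Breadth-first search returns the shortest path, and that path is the human-readable evidence.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

Graph = Dict[str, List[Tuple[str, str]]]  # node -> [(edge_label, neighbor)]


def add_edge(graph: Graph, a: str, label: str, b: str) -> None:
    # Store both directions so the traversal can walk edges either way.
    graph.setdefault(a, []).append((label, b))
    graph.setdefault(b, []).append((label, a))


def path_to_watchlist(graph: Graph, start: str, watchlist: Set[str],
                      max_hops: int = 2) -> Optional[List[str]]:
    """Breadth-first search; return the shortest evidence path to a watchlisted node within max_hops."""
    queue = deque([(start, [start])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node != start and node in watchlist:
            return path  # alternates node, edge label, node, ...
        hops = (len(path) - 1) // 2
        if hops == max_hops:
            continue
        for label, neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, path + [label, neighbor]))
    return None


g: Graph = {}
add_edge(g, "acct_A", "MADE", "tx_1")
add_edge(g, "tx_1", "SENT_TO", "acct_B")
add_edge(g, "acct_A", "USED_DEVICE", "dev_9")
add_edge(g, "acct_W", "USED_DEVICE", "dev_9")

sender = "acct_A"  # the account that MADE tx_1
print(path_to_watchlist(g, sender, watchlist={"acct_W"}))
# ['acct_A', 'USED_DEVICE', 'dev_9', 'USED_DEVICE', 'acct_W']
```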

Quality checklist for contextual data (what to measure and enforce)

The Government of Canada's nine dimensions are a strong, non-vendor checklist: access, accuracy, coherence, completeness, consistency, interpretability, relevance, reliability, timeliness.

For contextual data, two failure modes dominate:

- Timeliness: context arrives too late to influence the decision, so you optimize for the past.

- Interpretability: nobody can explain what a signal means, so it becomes a silent model input that no one can challenge.

The minimum metrics to track (start here)

If you measure nothing else, measure these:

- Lag distribution (p50/p95) from event time to ingestion time, per source

- Missing rate by entity + source

- Definition stability (did meaning/units/enums change?)

- Uptime/error rate for the pipeline feeding decisions
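The first two metrics can be computed from a handful of columns per record. This is a minimal sketch that assumes each record carries:

- source
- event timestamp
- ingestion timestamp
- a value that's None when the signal is missing

quality_report and the nearest-rank percentile method are illustrative choices. The sketch groups by source only; add the entity to the grouping key for the per-entity view.

```python
import math
from collections import defaultdict
from datetime import datetime, timedelta, timezone
from typing import Dict, Iterable, List, Optional, Tuple

# (source, event_ts, ingestion_ts, value); value is None when the signal is missing
Record = Tuple[str, datetime, datetime, Optional[float]]


def percentile(sorted_values: List[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100 on an already-sorted, non-empty list."""
    rank = math.ceil(p / 100 * len(sorted_values))
    return sorted_values[max(rank, 1) - 1]


def quality_report(records: Iterable[Record]) -> Dict[str, Dict[str, float]]:
    lags: Dict[str, List[float]] = defaultdict(list)
    missing: Dict[str, int] = defaultdict(int)
    for source, event_ts, ingestion_ts, value in records:
        lags[source].append((ingestion_ts - event_ts).total_seconds())
        if value is None:
            missing[source] += 1
    report = {}
    for source, values in lags.items():
        values.sort()
        report[source] = {
            "p50_lag_s": percentile(values, 50),
            "p95_lag_s": percentile(values, 95),
            "missing_rate": missing[source] / len(values),
        }
    return report


base = datetime(2026, 1, 1, tzinfo=timezone.utc)
records = [("crm", base, base + timedelta(seconds=s), v)
           for s, v in [(60, 1.0), (120, 1.0), (180, None), (240, 1.0), (3600, 1.0)]]
report = quality_report(records)
print(report["crm"])  # {'p50_lag_s': 180.0, 'p95_lag_s': 3600.0, 'missing_rate': 0.2}

# If p95 lag exceeds the decision window, don't use the signal for that decision.
decision_window = timedelta(minutes=30)
print(report["crm"]["p95_lag_s"] <= decision_window.total_seconds())  # False
```

One slow record is enough to push p95 past a 30-minute window here, even though the median looks healthy. That's why p95 belongs next to p50.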

Quality gates (what to enforce)

- Schema gate: reject unknown fields / invalid enums (reference data makes this easy)

- Freshness gate: drop or downweight signals older than X

- Lineage gate: every record must have source + ingestion_ts

- Confidence gate: require confidence_score for probabilistic signals
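The four gates fit in one function. It returns reasons rather than a bare pass/fail, which keeps rejections explainable. This is a minimal sketch that assumes records arrive as dicts. ALLOWED_SIGNALS, PROBABILISTIC_SIGNALS, run_gates, and the 7-day MAX_AGE (standing in for "X") are hypothetical. This version drops stale signals; to downweight them instead, replace the freshness failure with a weight.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional, Set

# Hypothetical reference data: allowed signal types and enum values (None = free numeric value).
ALLOWED_SIGNALS: Dict[str, Optional[Set[str]]] = {
    "risk_tier": {"low", "medium", "high"},
    "intent_score": None,
}
PROBABILISTIC_SIGNALS = {"intent_score"}
MAX_AGE = timedelta(days=7)  # stands in for "older than X"; tune per decision


def run_gates(record: dict, now: datetime) -> List[str]:
    """Return gate failures; an empty list means the record may feed decisions."""
    failures: List[str] = []
    signal_type = record.get("signal_type")

    # Schema gate: unknown types and invalid enum values are rejected.
    if signal_type not in ALLOWED_SIGNALS:
        failures.append("schema: unknown signal_type")
    else:
        allowed = ALLOWED_SIGNALS[signal_type]
        if allowed is not None and record.get("value") not in allowed:
            failures.append("schema: invalid enum value")

    # Freshness gate: untimestamped or too-old signals are dropped.
    event_ts = record.get("event_ts")
    if event_ts is None or now - event_ts > MAX_AGE:
        failures.append("freshness: missing or stale event_ts")

    # Lineage gate: every record needs a source and an ingestion timestamp.
    if not record.get("source") or record.get("ingestion_ts") is None:
        failures.append("lineage: missing source or ingestion_ts")

    # Confidence gate: probabilistic signals must say how sure they are.
    if signal_type in PROBABILISTIC_SIGNALS and record.get("confidence_score") is None:
        failures.append("confidence: missing confidence_score")

    return failures


now = datetime(2026, 1, 10, tzinfo=timezone.utc)
good = {"signal_type": "risk_tier", "value": "high", "event_ts": now - timedelta(days=1),
        "source": "risk_feed", "ingestion_ts": now}
bad = {"signal_type": "intent_score", "value": 72.0, "event_ts": now - timedelta(days=30),
       "source": ""}
print(run_gates(good, now))  # []
print(run_gates(bad, now))
# ['freshness: missing or stale event_ts', 'lineage: missing source or ingestion_ts',
#  'confidence: missing confidence_score']
```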

Hard stance: if a signal can't be explained, it can't ship to production. Mystery features are how you end up with models nobody trusts - or worse, models everyone trusts blindly.

Contextual data in action (one scenario across industries)

A single pattern shows up everywhere: a high-stakes event happens, and context determines whether it's normal or alarming.

A crisp AML-style example:

- Event: a $100,000 payment

- Context layer 1: it originated in a high-risk country

- Context layer 2: the recipient is on a watchlist

Without context, it's just a big payment. With context, it's an escalation with a reason.
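Here is the same logic as a rule that returns its reasons. This is a minimal sketch that assumes the country and watchlist lookups were already done as of the payment time. The "XX" country code and the watchlist contents are placeholders, not a real risk list, and assess is a hypothetical name.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple

# Hypothetical context, assumed to be looked up as of the payment time.
HIGH_RISK_COUNTRIES: Set[str] = {"XX"}   # placeholder code, not a real risk list
WATCHLIST: Set[str] = {"acct_W"}
LARGE_PAYMENT = 100_000


@dataclass(frozen=True)
class Payment:
    payment_id: str
    amount: float
    origin_country: str
    recipient_id: str


def assess(p: Payment) -> Tuple[bool, List[str]]:
    """Escalate only when a large payment also carries context; return the reasons either way."""
    reasons: List[str] = []
    if p.origin_country in HIGH_RISK_COUNTRIES:
        reasons.append(f"originated in high-risk country {p.origin_country}")
    if p.recipient_id in WATCHLIST:
        reasons.append(f"recipient {p.recipient_id} is on a watchlist")
    escalate = p.amount >= LARGE_PAYMENT and bool(reasons)
    return escalate, reasons


print(assess(Payment("p1", 100_000, "US", "acct_1")))  # (False, [])
print(assess(Payment("p2", 100_000, "XX", "acct_W")))
# (True, ['originated in high-risk country XX', 'recipient acct_W is on a watchlist'])
```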

Now map the same pattern across industries:

- Ecommerce fraud: a $500 order is fine; a $500 order + new device + shipping/billing mismatch + velocity spike is fraud-shaped.

- Healthcare triage: a lab value is a number; that value + medication change + recent symptoms changes urgency.

- Logistics/ops: a delivery delay is annoying; a delay + weather alert + driver hours constraint changes routing decisions immediately.

- B2B sales: a demo request is good; a demo request + hiring spree in the target department + active category intent changes who you call first and what you say.

What separates "context" from "extra columns" is operational discipline:

- Context changes meaning, not just score.

- Context must be point-in-time correct or you'll "explain" the past with the future.

- Context must meet an SLA or it becomes a confident mistake generator.

I've watched teams chase model tweaks for months, only to discover the model learned ingestion latency patterns, not customer behavior. Fixing freshness improved outcomes faster than swapping algorithms.

Context for AI and RAG (why "contextual" is overloaded)

"Contextual" is overloaded because AI teams use it to mean three different things: semantic relationships, operational metadata, and retrieval-time augmentation.

A useful distinction:

- A knowledge graph focuses on what things are: entities, taxonomies, ontologies.

- A context graph adds operational reality: lineage, policies, provenance, time, decision traces, and confidence.

That matters because AI systems don't just need facts; they need to know whether a fact is allowed, current, and trustworthy.

On the RAG side, graph-aware reasoning follows a clean pattern: extract a k-hop subgraph around an entity/event, serialize it into structured text, then let the LLM answer with a justification. The justification is the product because it turns "model said so" into "here's the evidence path," which is what stakeholders ask for the first time something goes wrong.
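Here is a minimal sketch of the extract-and-serialize steps. It assumes the graph is available as (subject, predicate, object) triples. k_hop_subgraph, serialize, and the prompt wording are hypothetical. The LLM call itself is left out because it depends on whichever client you use. Numbering the evidence lines lets the answer cite a path instead of just asserting a conclusion.

```python
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)


def k_hop_subgraph(triples: List[Triple], seed: str, k: int) -> List[Triple]:
    """Return every triple reachable within k hops of seed, treating edges as undirected."""
    touching: Dict[str, List[Triple]] = {}
    for t in triples:
        touching.setdefault(t[0], []).append(t)
        touching.setdefault(t[2], []).append(t)
    frontier, seen, kept = {seed}, {seed}, set()
    for _ in range(k):
        next_frontier: Set[str] = set()
        for node in sorted(frontier):
            for t in touching.get(node, []):
                kept.add(t)
                for n in (t[0], t[2]):
                    if n not in seen:
                        seen.add(n)
                        next_frontier.add(n)
        frontier = next_frontier
    return sorted(kept)  # deterministic order for the prompt


def serialize(subgraph: List[Triple]) -> str:
    """One numbered line per edge, so an answer can cite specific evidence lines."""
    return "\n".join(f"[{i}] ({s}) -[{p}]-> ({o})" for i, (s, p, o) in enumerate(subgraph, 1))


triples = [
    ("acct_A", "MADE", "tx_1"),
    ("tx_1", "SENT_TO", "acct_B"),
    ("acct_B", "LOCATED_IN", "country_XX"),
    ("acct_C", "USED_DEVICE", "dev_9"),  # unrelated: outside the 2-hop neighborhood
]
evidence = serialize(k_hop_subgraph(triples, "tx_1", k=2))
prompt = ("Answer using only the evidence below and cite the line numbers you relied on.\n\n"
          f"{evidence}\n\nQuestion: should tx_1 be escalated, and why?")
print(evidence)
```

Logging the evidence string alongside the model's answer gives you the "what did we retrieve?" record when something goes wrong.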

My hot take: routing + retrieval fixes more RAG failures than "contextual embeddings" do. Get the right evidence into the prompt, log what you retrieved, and you've solved the reliability problem that actually hurts teams.

How to get contextual signals in B2B (practical example)

If you do outbound or account prioritization, context is the difference between "spray and pray" and "call the right account this week."

One scenario we've seen over and over: a RevOps team exports a "hot accounts" list, runs sequences for two weeks, then can't explain why results fell off a cliff in week three. The issue isn't messaging. It's that the "hot" label never expired, the join keys were inconsistent across tools, and nobody tracked whether the intent timestamp was still inside the team's decision window.

Skip this if you're not willing to store snapshots as events. You'll end up with a one-off export that can't be audited, can't be reproduced, and can't be tied back to revenue cleanly.

Mini-workflow (fast and joinable):

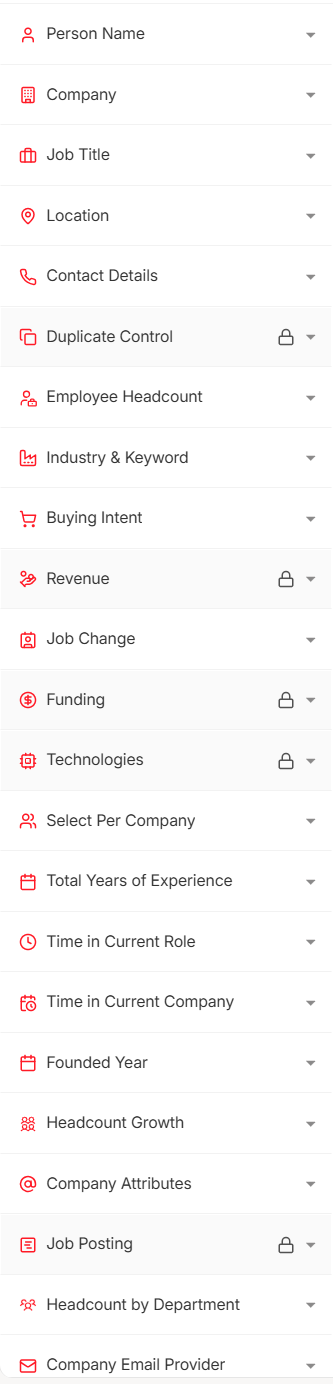

- Build a filtered list (ICP + context): role/seniority + industry + technographics + intent topic + recent job changes (Prospeo supports 30+ search filters).

- Export with stable keys: company domain, company name, person name/title, verified email, mobile (when needed), plus the context fields you filtered on.

- Store it as evented context: create a "ProspectingSnapshot" event with snapshot_ts, then join downstream to sequences, meetings, pipeline, and revenue.

What to store (so it becomes real contextual data, not a one-off export):

- account_domain (join key)

- person_email (join key)

- intent_topic, intent_score, intent_ts

- tech_stack + tech_detected_ts

- job_change_flag + job_change_ts

- headcount_growth_90d + measured_ts

- data_refresh_cycle_days = 7 (so analysts understand freshness)
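Here is a minimal sketch of the snapshot-as-event idea. It uses a subset of the fields above and a made-up downstream meetings table. The following are all assumptions for illustration, not Prospeo's export format or API:

- ProspectingSnapshot, Meeting, and attribute_meetings
- the www-stripping normalize_domain rule
- the 30-day attribution window

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple


def normalize_domain(domain: str) -> str:
    # Inconsistent join keys break attribution; normalize before joining.
    d = domain.strip().lower()
    return d[4:] if d.startswith("www.") else d


@dataclass(frozen=True)
class ProspectingSnapshot:
    snapshot_ts: datetime
    account_domain: str              # join key
    person_email: str                # join key
    intent_topic: Optional[str]
    intent_score: Optional[float]
    intent_ts: Optional[datetime]
    job_change_flag: bool
    job_change_ts: Optional[datetime]
    data_refresh_cycle_days: int = 7


@dataclass(frozen=True)
class Meeting:
    account_domain: str
    meeting_ts: datetime


def attribute_meetings(snapshots: List[ProspectingSnapshot], meetings: List[Meeting],
                       window: timedelta) -> List[Tuple[ProspectingSnapshot, Meeting]]:
    """Attach each meeting to the latest earlier snapshot of the same domain within window."""
    pairs = []
    for m in meetings:
        key = normalize_domain(m.account_domain)
        candidates = [s for s in snapshots
                      if normalize_domain(s.account_domain) == key
                      and s.snapshot_ts <= m.meeting_ts < s.snapshot_ts + window]
        if candidates:
            pairs.append((max(candidates, key=lambda s: s.snapshot_ts), m))
    return pairs


snap = ProspectingSnapshot(
    snapshot_ts=datetime(2026, 1, 5, tzinfo=timezone.utc),
    account_domain="acme.com", person_email="jane@acme.com",
    intent_topic="data quality", intent_score=78.0,
    intent_ts=datetime(2026, 1, 2, tzinfo=timezone.utc),
    job_change_flag=False, job_change_ts=None,
)
meetings = [Meeting("www.Acme.com", datetime(2026, 1, 12, tzinfo=timezone.utc)),
            Meeting("acme.com", datetime(2026, 3, 1, tzinfo=timezone.utc))]
print(len(attribute_meetings([snap], meetings, window=timedelta(days=30))))  # 1
```

The March meeting falls outside the window and stays unattributed. That is exactly the "hot label never expired" failure the snapshot is meant to expose.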

Pricing is credit-based and self-serve (no contracts): https://prospeo.io/pricing. As a rough unit cost, that's ~$0.01 per email and 10 credits per mobile number. The free tier includes 75 emails + 100 Chrome extension credits/month.

FAQ

What's the difference between contextual data and metadata?

Contextual data changes how you interpret an event or entity at decision time, and it's timestamped and joinable to that event/entity. Metadata describes the data itself - definitions, lineage, owners, and quality - so teams can trust and govern it. A good rule: context drives actions; metadata drives trust.

How is contextual targeting different from behavioral targeting?

Contextual targeting places ads based on the content being consumed (like page topic), while behavioral targeting uses identifiers and profiles to infer who someone is and what they might buy. In practice, behavioral targeting is more sensitive to privacy rules and recency decay, while contextual targeting is content-driven and simpler to govern.

How do you store contextual data so it's correct "as of" the event time?

Store context as time-bounded facts with effective_start_ts and effective_end_ts, then join to events using point-in-time rules (start <= event_ts < end). This prevents future leakage and makes analytics and model features reproducible. If you can't effective-date a signal, fall back to a strict time-window join: use only signals whose signal_ts falls within a fixed window before event_ts.

How fresh does contextual data need to be?

For operational decisions (fraud checks, routing, personalization), set a latency SLA and track p95 lag; if p95 lag exceeds the decision window, the signal shouldn't be used. For planning decisions (weekly forecasts, quarterly reviews), hours or days are fine - but you still need a cutoff plus a freshness gate.

Where can I get B2B contextual signals like intent or technographics?

You can pull B2B signals from product analytics, CRM activity, and third-party data platforms that provide intent and technographics. Prospeo is a strong option because it combines 15,000 intent topics (powered by Bombora) with technographics, job-change and headcount growth signals, plus verified contact data on a 7-day refresh cycle.

Key takeaways

So, what is contextual data in practice? It's decision-relevant information that's joinable, time-bound, and fresh enough to influence the moment you're acting.

- Key + Time: every signal must be joinable and timestamped (effective start/end beats "current value").

- Point-in-time joins: prevent future leakage by joining only what's true at the event time.

- Freshness gates: measure lag (p50/p95) and block stale signals before they poison decisions.

Do those three and "context" stops being a buzzword and starts being decision infrastructure.

Contextual data needs join keys, timestamps, and freshness SLAs. Prospeo delivers all three: intent data across 15,000 Bombora topics, job change tracking with real-time alerts, and 50+ enrichment data points per contact - all keyed to your accounts and refreshed weekly.

Layer real buyer context onto every record in your CRM for $0.01 per email.