Competitive Intelligence for B2B Sales (2026): A Practical Operating System

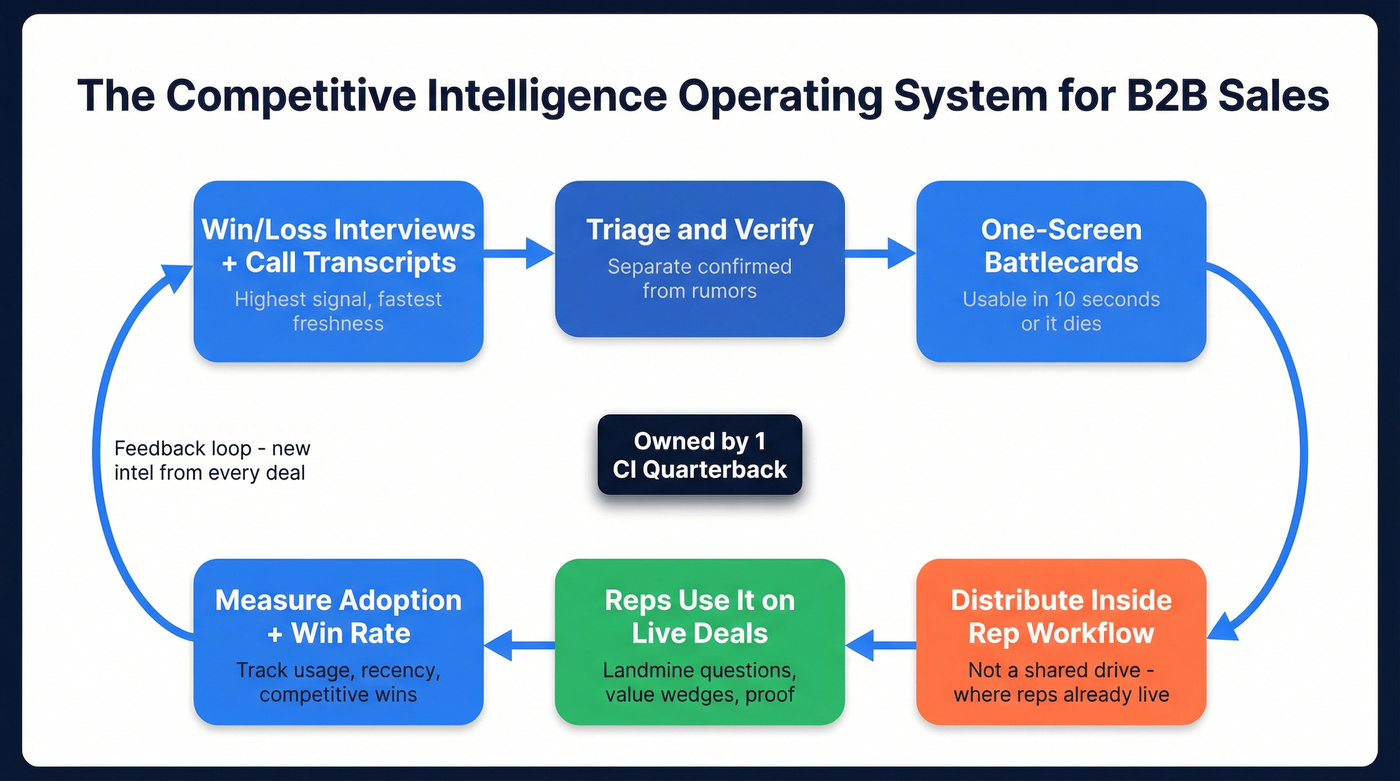

Competitive intelligence for B2B sales usually breaks the same way: someone builds a "competitor wiki," reps ignore it, and the same objections keep showing up on calls like it's Groundhog Day. The fix isn't more content. It's an operating system that turns live deal intel into talk tracks, then into actions that move pipeline.

Most teams don't have a dedicated CI department. So this playbook assumes a 1-2 person reality and shows you how to get real adoption anyway.

You'll know it's working when reps stop asking, "Do we have anything on Competitor X?" and start saying, "I used the landmine question and it changed the whole eval."

What you need (quick version)

- Pick a sales-first charter: improve competitive win rate, shorten cycles, and eliminate late-stage surprises.

- Assume you're understaffed: design for a 1-2 person team, not an "intel newsroom."

- Name one accountable owner: a single CI "quarterback" owns prioritization, publishing, and adoption.

- Run two inputs on repeat: win/loss interviews (highest signal) + call transcripts (fastest freshness).

- Ship one-screen battlecards: if a rep can't use it in 10 seconds, it won't get used. Period.

- Separate "verified" from "to be verified": field intel matters, but rumors don't belong in talk tracks.

- Instrument adoption + outcomes: track usage, recency, search-to-answer time, and competitive win rate by competitor.

- Add an execution layer: once you have talk tracks, turn them into stakeholder lists and outreach so plays actually reach decision-makers.

What "competitive intelligence" means for B2B sales (not marketing)

Sales CI is decision support for live deals. It's the minimum set of truths a rep needs to (1) control the narrative, (2) surface landmines early, and (3) win the evaluation on the buyer's scoring criteria - not yours.

News aggregation isn't CI.

A stream of competitor blog posts, funding announcements, and feature releases feels productive, but it rarely changes what a rep says on a call tomorrow. I've watched teams spend months building "competitor newsletters" that get polite thumbs-ups and zero behavior change.

Here's the thing: CI for sales has to be opinionated. It needs to tell reps what to do and what to avoid, not just what happened in the market.

If your deal sizes are small and your cycles are measured in weeks (not quarters), you probably don't need a full CI program. You need a tight objection doc, two battlecards, and ruthless follow-up.

Use this if:

- You're losing late-stage deals to a small set of competitors.

- Reps keep getting surprised by the same objections (security, implementation, pricing model, "we already use X").

- Product marketing's buried in launches and can't keep up with field intel.

Skip this (or keep it tiny) if:

- You're pre-PMF and your "competitor" is mostly spreadsheets and internal builds.

- Your motion's inbound-only and buyers rarely evaluate alternatives.

One more strong opinion: if your battlecards live in a shared drive, you've already lost.

What practitioners complain about (and they're right):

- "Battlecards sit in a shared drive and nobody opens them."

- "Monitoring tools create noise unless someone curates it."

- "We're paying $50k-$150k and still getting generic insights."

The fix is boring: one-screen standards + distribution inside the rep workflow + a curator queue with SLAs.

The competitive intelligence operating system for B2B sales (ownership, intake, cadence)

You need a single accountable "quarterback." Without it, CI becomes a shared responsibility, which means it becomes nobody's job.

CI operating checklist (what to set up)

- Owner: 1 CI lead (often PMM, enablement, or RevOps-adjacent) who owns prioritization + publishing; backed by a sales sponsor.

- Intake sources: win/loss interviews, transcript mining, SE notes, procurement feedback, competitor trials, product gaps.

- Cadence: monthly competitive kit for top competitors + quarterly exec readout with actions.

- Governance: verified vs to-be-verified, versioning + timestamps, and one distribution home inside seller workflow.

- Budget heuristic: if you want CI to matter, fund tools + interviews + enablement time; underfunded CI becomes a content hobby.

Mini-RACI (keep it boring and clear)

| Workstream | Owner | Contributors | Approver |
|---|---|---|---|
| Prioritize competitors | CI QB | Sales, PMM | Sales VP |
| Battlecards | CI QB | PMM, SE | PMM lead |
| Win/loss interviews | CI QB | CS, Sales | Rev leader |
| Transcript triage | Enablement | CI QB | CI QB |
| Distribution | Enablement | RevOps | Sales VP |
| Quarterly readout | CI QB | PMM, RevOps | Exec sponsor |

We've run bake-offs where the "best" CI content lost because nobody owned distribution. The cards were good. They just weren't where reps lived.

This is the unsexy part that wins.

The battlecard format reps actually use (the 10-second, one-screen standard)

The battlecard's the unit of value in sales CI. Not the newsletter. Not the Notion page. Not the 40-slide "competitive deck."

If it's not actionable in 10 seconds, it won't get used. That's why one-screen beats "big doc" every time.

Also: stop writing battlecards like you're trying to win an argument on the internet. Your rep needs a calm, credible path to reframe the buyer without sounding defensive, and they need it while they're juggling discovery, notes, and the awkward silence after "So... what's your pricing?"

One-screen battlecard template (copy/paste)

Competitor: [Name]

Last verified: [Date]

Where they win: [2 bullets]

Where we win: [2 bullets]

Red flags (don't say this): [1-2 bullets]

Quick Dismiss (10 seconds)

- "Totally fair - [Competitor] is a common shortlist vendor. The real question is what you need for [buyer outcome]. Can I ask one thing?"

Landmine Question (forces the weakness to surface)

- "How are you handling [hard thing competitor struggles with] today?"

- "What happens when [edge case] shows up - who owns it and how fast can you fix it?"

- "What's your plan if [implementation/security/data] becomes the bottleneck?"

Value Wedge (one differentiated capability, not a feature dump)

- "Where we're different is [wedge]. It matters because [impact on their KPI]."

Proof (make it believable fast)

- "A team like yours used us to [result]. The reason it worked was [mechanism]."

- Link: [case study / security doc / ROI model]

Objection counters (2 lines each)

- "But [Competitor] is cheaper." -> "On paper, yes. In practice, the cost shows up in [hidden cost]."

- "But they have [feature]." -> "If that's critical, we should test it. The tradeoff is [tradeoff]."

Trapdoor exit (if you're losing the thread)

- "If [Competitor] is the direction, I can help you de-risk it. Want a checklist of what to validate before you sign?"

Annotated battlecard example (fictional, steal this)

Scenario: You sell "AcmeCRM." You're competing against "RivalCRM" in mid-market SaaS.

Goal: Give an AE something they can say today without sounding insecure.

Competitor: RivalCRM

Last verified: 2026-02-01

Where they win:

- Strong brand recognition in SMB

- Fast initial setup for simple pipelines

Where we win:

- Cleaner multi-team workflows (Sales + CS + RevOps)

- Better reporting governance (fewer "spreadsheet exports")

Red flags (don't say this):

- "They're terrible / nobody likes them." (You'll lose trust instantly.)

Quick Dismiss (why it works: de-escalates and re-centers criteria)

- "Totally fair - RivalCRM is a common shortlist vendor. The real question is whether you're optimizing for speed-to-live or for clean reporting at scale. Can I ask one thing?"

Annotation: You validate the choice, then force a tradeoff. Buyers love tradeoffs because they make the decision feel rational.

Landmine Question (why it works: surfaces the hidden cost)

- "When your pipeline definitions change next quarter, who's responsible for updating reports and permissions - and how long does that take today?"

Annotation: This doesn't attack RivalCRM. It exposes operational pain (governance) that shows up after the honeymoon.

Value Wedge (why it works: ties to a KPI, not a feature)

- "Where we're different is governance: RevOps can change stages, fields, and permissions without breaking dashboards. That matters because it keeps forecast and conversion reporting stable while you scale."

Annotation: "Governance" is a wedge only if you connect it to forecast accuracy, conversion rates, and exec trust.

Proof (what counts as fast proof)

- "Here's our reporting governance checklist and a 2-minute screen recording of a RevOps admin making a change without breaking dashboards."

Annotation: In competitive moments, proof beats persuasion. Use assets that travel fast: security docs, short recordings, one-page checklists, ROI models, reference calls.

Objection counter (keep it calm, keep it testable)

- "But RivalCRM is cheaper." -> "They often are at first. The cost shows up when you add teams and need consistent reporting. Let's map your next 12 months of users and workflows and price both fairly."

Annotation: You don't argue price. You expand the frame to total cost over the buyer's real horizon.

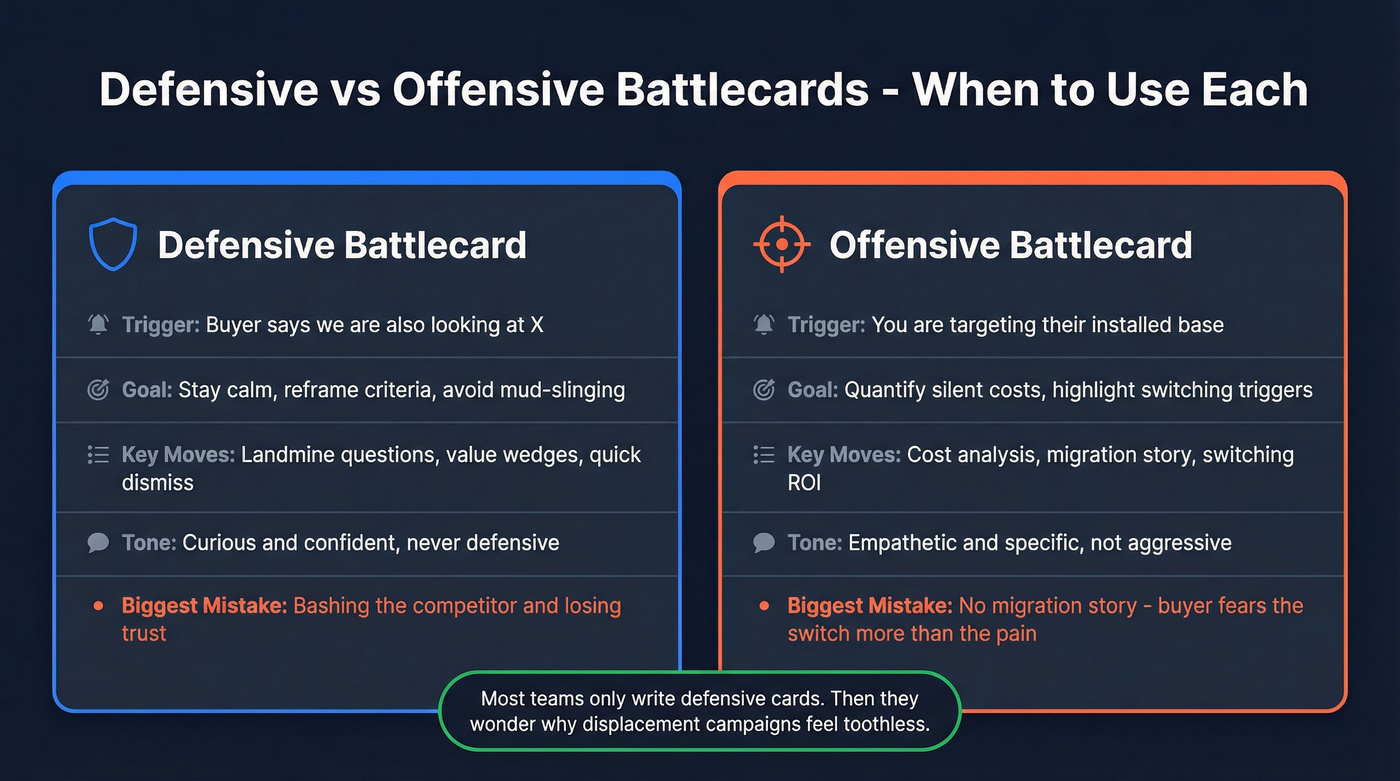
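If you keep battlecards as structured data instead of free-form docs, the one-screen standard becomes something you can check. Here's a minimal Python sketch under a few assumptions: the `Battlecard` record and the `one_screen_problems` helper are hypothetical names (not from any CI tool), the bullet counts mirror the template above, and the 90-day staleness cutoff is purely illustrative.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List


@dataclass
class Battlecard:
    competitor: str
    last_verified: date
    where_they_win: List[str]
    where_we_win: List[str]
    red_flags: List[str]
    quick_dismiss: str
    landmine_questions: List[str]
    value_wedge: str
    proof: List[str]
    objection_counters: Dict[str, str] = field(default_factory=dict)


def one_screen_problems(card: Battlecard, today: date, max_age_days: int = 90) -> List[str]:
    """Return reasons the card breaks the one-screen template; empty means OK."""
    problems = []
    if len(card.where_they_win) != 2:
        problems.append("where_they_win should have 2 bullets")
    if len(card.where_we_win) != 2:
        problems.append("where_we_win should have 2 bullets")
    if not 1 <= len(card.red_flags) <= 2:
        problems.append("red_flags should have 1-2 bullets")
    if not card.landmine_questions:
        problems.append("at least one landmine question is required")
    if not card.proof:
        problems.append("at least one proof item is required")
    # Assumption: 90 days is an illustrative cutoff, not a rule from the playbook.
    if (today - card.last_verified).days > max_age_days:
        problems.append("last_verified is stale")
    return problems


# The fictional RivalCRM card, abbreviated.
card = Battlecard(
    competitor="RivalCRM",
    last_verified=date(2026, 2, 1),
    where_they_win=["Strong brand recognition in SMB",
                    "Fast initial setup for simple pipelines"],
    where_we_win=["Cleaner multi-team workflows",
                  "Better reporting governance"],
    red_flags=["They're terrible / nobody likes them."],
    quick_dismiss="Totally fair - RivalCRM is a common shortlist vendor.",
    landmine_questions=["When pipeline definitions change, who updates reports?"],
    value_wedge="Governance: change stages and fields without breaking dashboards.",
    proof=["Reporting governance checklist"],
)
print(one_screen_problems(card, today=date(2026, 3, 1)))
```

A check like this won't tell you whether the talk track is any good. It only catches cards that drift away from the format or go stale.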

Defensive vs offensive battlecards (when to use each)

You need two versions per competitor because the context changes the psychology.

Defensive battlecard (competitor mentioned first)

- Use when the buyer says: "We're also looking at X."

- Goal: stay calm, reframe criteria, ask landmine questions, avoid mud-slinging.

Offensive battlecard (rip/replace)

- Use when you're targeting installed base or the buyer's unhappy.

- Goal: quantify silent costs, highlight switching triggers, give a safe migration story.

Real talk: most teams only write defensive cards. Then they wonder why their displacement campaigns feel toothless.

Battlecard fields checklist (what must be on the screen)

A simple "don't forget anything important" list:

- Features (only the ones buyers score)

- Pricing model + common gotchas

- Positioning claims (what they say they are)

- GTM strategy (who they sell to, how they package, how they discount)

- Strengths/weaknesses (in buyer language, not yours)

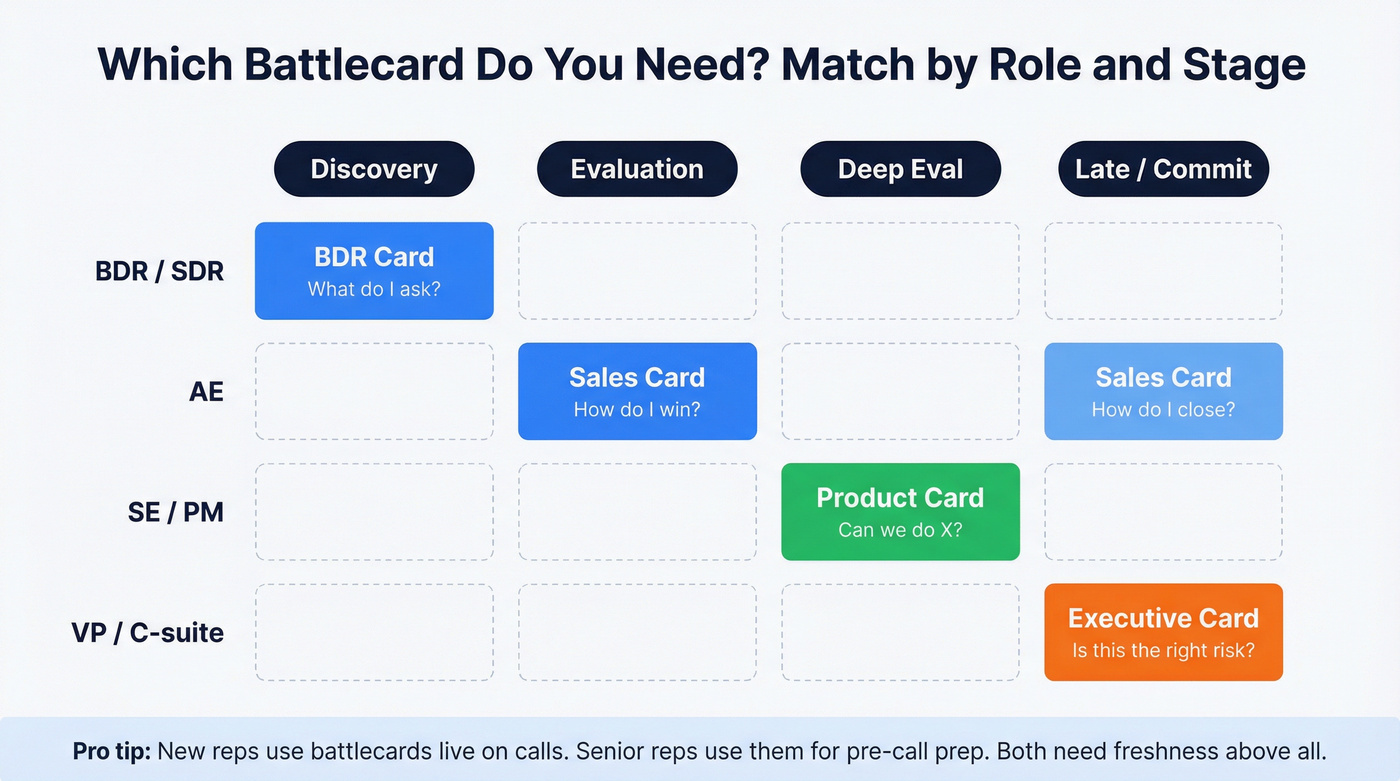

Which battlecard do you need? (types by role + sales stage)

One battlecard won't serve everyone. Different roles need different depth, and different stages need different timing.

Also, usage is predictable: new reps use battlecards live, and senior reps use them pre-call. Senior reps care most about up-to-date intel, not pretty formatting.

| Battlecard type | Primary user | Stage | What it answers |
|---|---|---|---|
| BDR | BDR/SDR | Discovery | "What do I ask?" |
| Sales | AE | Eval/late | "How do I win?" |
| Product | SE/PM | Deep eval | "Can we do X?" |
| Executive | VP/C-suite | Late/commit | "Is this risky?" |
| Why Win/Why Lose | Leaders | QBR/plan | "What's the pattern?" |

If you don't have a Why Win/Why Lose card, you don't have CI. You've got content.

Win/loss analysis: your highest-signal CI source (and why CRM is wrong)

CRM loss reasons are comforting fiction. In practice, reps are wrong about why they win and lose deals around 60% of the time.

Not because reps are lazy. Because they're busy, they're emotionally invested, and they don't get the full buyer conversation after the decision.

Win/loss SOP in 60 seconds (do this, not a "research project")

- Pick a narrow slice: 1-2 competitors + 1 segment (vertical, region, deal size).

- Interview 10 deals/quarter: 5 wins + 5 losses is enough to see patterns.

- Keep it 20-30 minutes: aim for 5-8 datapoints; buyer talks 90% of the time.

- Publish actions, not transcripts: every cycle updates battlecards, proof assets, and one enablement drill.

Set learning objectives first (3 questions to ask internally)

This prevents the most common failure mode: you do interviews, collect a pile of quotes, and nobody knows what decisions to make.

Ask these internally (verbatim):

- "What is most important to you right now?"

- "What are you looking to learn from win-loss analysis?"

- "If you had this information today, what would you do with it?"

Then pick 1-2 competitors and 1-2 deal segments to focus on. Narrow beats "cover everything."

Interview workflow (20-30 minutes) + question buckets

20-30 minutes is the sweet spot. You want 5-8 datapoints, not 50 questions.

SOP:

- Select deals: start with 5 wins + 5 losses per quarter per key competitor.

- Pick interviewer: neutral internal interviewer trained to probe; use external if you need maximum candor.

- Schedule fast: within 2-3 weeks of decision.

- Open with story: "Walk me through how you made the decision."

- Probe with buckets: trigger, criteria, shortlist, evaluation, risk, decision, pricing/procurement, aftertaste.

The biggest "aha" moments often come from procurement questions, because that's where packaging and discounting reality shows up. It's also where your reps tend to have the least visibility.

Synthesis hygiene (Five Whys, tagging, segmentation)

If you don't synthesize well, interviews become anecdote soup.

Use consistent tagging and segmentation by region/vertical/competitor so you don't average away the truth, and so you can say something specific like "We lose to Competitor A in healthcare because of security review time, but we lose to Competitor B in SaaS because of pricing packaging confusion."

Process:

- Run Five Whys on the top 3 loss themes.

- Tag every insight with: competitor, segment, stage, theme, confidence (verified vs unverified).

- Push outputs into rep systems:

  - CRM fields (competitor, primary wedge, landmine question used)

  - Enablement battlecards (talk track + proof)

  - Product feedback loop (top gaps with deal impact)
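To make the tagging step concrete, here's a rough Python sketch of how tagged insights can be grouped by competitor and segment, so the top theme is reported per slice instead of averaged across everything. The `Insight` record, the `top_theme_per_slice` helper, and the sample rows are all hypothetical and made up for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class Insight:
    competitor: str
    segment: str
    stage: str
    theme: str
    verified: bool  # confidence tag: verified vs unverified


def top_theme_per_slice(insights: Iterable[Insight]) -> Dict[Tuple[str, str], str]:
    """Most frequent verified theme for each (competitor, segment) pair."""
    counts = Counter(
        (i.competitor, i.segment, i.theme) for i in insights if i.verified
    )
    best: Dict[Tuple[str, str], Tuple[str, int]] = {}
    for (competitor, segment, theme), n in counts.items():
        key = (competitor, segment)
        # Ties keep the theme that was seen first.
        if key not in best or n > best[key][1]:
            best[key] = (theme, n)
    return {key: theme for key, (theme, _) in best.items()}


# Made-up sample data, for illustration only.
insights = [
    Insight("Competitor A", "healthcare", "late", "security review time", True),
    Insight("Competitor A", "healthcare", "late", "security review time", True),
    Insight("Competitor A", "healthcare", "eval", "pricing", True),
    Insight("Competitor B", "SaaS", "eval", "packaging confusion", True),
    Insight("Competitor B", "SaaS", "eval", "missing integration", False),
]
themes = top_theme_per_slice(insights)
print(themes)
```

Unverified insights are left out of the count on purpose, which matches the rule that rumors don't belong in talk tracks.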

In-call CI: keep intel current with transcript-driven updates

Win/loss is high signal but slower. In-call CI is how you stay current week to week.

The playbook's simple: auto-capture competitor mentions, route them to a curator queue, verify what's real, then publish updates back to battlecards so your competitive loop doesn't depend on someone remembering to "tell PMM later."

Workflow:

- Transcripts capture competitor mentions + surrounding context.

- Mentions flow into a curator queue (enablement/CI owner).

- Curator labels items: "new + verified," "new, to be verified," or "known, reinforce."

- Verified items update wedges, landmines, proof, and objection counters.

- Best examples become call snippets ("what good sounds like").

Curator queue rubric (what counts as "verified")

This is where most teams get sloppy. Don't.

Mark intel Verified only if it's backed by at least one of these:

- A public artifact (pricing page, docs, release notes, security page)

- A recorded buyer quote with context (who said it, when, why it mattered)

- A repeat pattern across multiple deals in the same segment

- A first-hand internal test (trial, sandbox, SE validation)

Everything else stays To be verified with a next step ("confirm in next 3 calls," "ask procurement," "run a trial").

Recommended SLA: review new competitor intel within 5 business days. That keeps battlecards alive.
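The rubric and SLA are simple enough to encode. The sketch below is one possible Python version: the `Evidence` enum, `label`, and `review_due` are hypothetical names, and the business-day math assumes a Monday-Friday week and ignores holidays.

```python
from datetime import date, timedelta
from enum import Enum
from typing import Set


class Evidence(Enum):
    PUBLIC_ARTIFACT = "public artifact"
    RECORDED_BUYER_QUOTE = "recorded buyer quote with context"
    REPEAT_PATTERN = "repeat pattern across deals in a segment"
    FIRST_HAND_TEST = "first-hand internal test"


def label(evidence: Set[Evidence]) -> str:
    """Verified needs at least one accepted evidence type; otherwise it waits."""
    return "verified" if evidence else "to be verified"


def review_due(captured: date, business_days: int = 5) -> date:
    """Date by which new intel should be reviewed (assumes Mon-Fri, no holidays)."""
    due = captured
    remaining = business_days
    while remaining > 0:
        due += timedelta(days=1)
        if due.weekday() < 5:  # 0-4 are Monday through Friday
            remaining -= 1
    return due


print(label({Evidence.PUBLIC_ARTIFACT}))  # verified
print(label(set()))                       # to be verified
print(review_due(date(2026, 2, 2)))       # captured on a Monday
```

Anything labeled "to be verified" still needs a next step attached; the code only decides which bucket an item lands in.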

I've watched teams try to do this manually in Slack threads. It works for two weeks, then collapses under volume. The curator queue is the difference between "program" and "chaos."

Distribution + measurement: prove CI changes deals (not just activity)

Most CI programs measure outputs because outcomes feel messy. That's a mistake. If you can't prove CI changes deals, you'll get treated like a content team.

A unified enablement platform helps because it puts CI inside the seller workflow instead of in a side folder, and it also gives you the instrumentation to connect usage to outcomes without turning your QBR into a debate about vibes.

Adoption metrics (usage, search-to-answer time, recency)

Track:

- Battlecard views per rep per week (by segment)

- Searches with no click (means "couldn't find it")

- Search-to-answer time (target: under 30 seconds)

- % of opportunities with a competitor field populated

- Recency: % of battlecards updated in last 30/60/90 days
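Two of these metrics are plain ratios, so a short Python sketch may help pin down the definitions. It assumes you can export a last-updated date per battlecard and a click count per search; `recency_share` and `no_click_rate` are hypothetical helper names.

```python
from datetime import date, timedelta
from typing import Dict, List, Sequence


def recency_share(last_updated: List[date], today: date,
                  windows: Sequence[int] = (30, 60, 90)) -> Dict[int, float]:
    """Share of battlecards updated within each window (in days)."""
    if not last_updated:
        return {w: 0.0 for w in windows}
    ages = [(today - d).days for d in last_updated]
    return {w: sum(age <= w for age in ages) / len(ages) for w in windows}


def no_click_rate(clicks_per_search: List[int]) -> float:
    """Share of searches that ended with zero clicks ("couldn't find it")."""
    if not clicks_per_search:
        return 0.0
    return sum(c == 0 for c in clicks_per_search) / len(clicks_per_search)


today = date(2026, 3, 31)
# Made-up card ages in days, for illustration only.
updated = [today - timedelta(days=n) for n in (10, 45, 80, 200)]
print(recency_share(updated, today))
print(no_click_rate([0, 1, 2, 0]))
```

The windows are cumulative: a card updated 10 days ago counts toward the 30-, 60-, and 90-day shares.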

Outcome metrics (competitive win rate, cycle time, CI-influenced wins)

Track:

- Competitive win rate by competitor (quarterly trend)

- Late-stage loss rate (stage 4+ losses)

- Sales cycle time for competitive vs non-competitive deals

- CI-influenced wins: deals where a battlecard was accessed + competitor present + win

CI-influenced wins is a proxy metric. Use it to track trends and to spot segments where CI's working; don't use it to "credit" a single win.
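Here's a minimal Python sketch of both calculations, assuming opportunity rows exported from your CRM. The `Opportunity` record, the helper names, and "OtherCRM" are hypothetical, and leaving "no decision" deals out of the win-rate denominator is an assumption you may want to change.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Opportunity:
    primary_competitor: Optional[str]
    outcome: str  # "won", "lost", or "no decision"
    battlecard_viewed: bool


def win_rate_by_competitor(opps: List[Opportunity]) -> Dict[str, float]:
    """Wins / (wins + losses) per competitor; "no decision" is excluded (assumption)."""
    won: Dict[str, int] = defaultdict(int)
    closed: Dict[str, int] = defaultdict(int)
    for o in opps:
        if o.primary_competitor is None or o.outcome not in ("won", "lost"):
            continue
        closed[o.primary_competitor] += 1
        if o.outcome == "won":
            won[o.primary_competitor] += 1
    return {c: won[c] / closed[c] for c in closed}


def ci_influenced_wins(opps: List[Opportunity]) -> int:
    """Battlecard accessed + competitor present + win."""
    return sum(
        1 for o in opps
        if o.primary_competitor and o.battlecard_viewed and o.outcome == "won"
    )


# Made-up sample data, for illustration only.
opps = [
    Opportunity("RivalCRM", "won", True),
    Opportunity("RivalCRM", "lost", False),
    Opportunity("RivalCRM", "no decision", True),
    Opportunity("OtherCRM", "won", False),
    Opportunity(None, "won", True),
]
print(win_rate_by_competitor(opps))
print(ci_influenced_wins(opps))
```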

Freshness metrics (time-to-update, verified vs unverified intel)

Track:

- Median time from "new intel captured" -> "published update"

- Verified vs to-be-verified ratio

- Stale rate: % of cards with outdated pricing/packaging notes
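The first two freshness numbers can be computed from the curator queue itself. A short Python sketch, assuming each published item carries a captured date and a published date; the helper names are hypothetical, and treating the "ratio" as the verified share of all items is one interpretation, not the only one.

```python
from datetime import date
from statistics import median
from typing import List, Tuple


def median_days_to_publish(items: List[Tuple[date, date]]) -> float:
    """Median days from "new intel captured" to "published update".

    Raises statistics.StatisticsError if items is empty.
    """
    return median((published - captured).days for captured, published in items)


def verified_share(verified: int, to_be_verified: int) -> float:
    """Verified items as a share of all items (assumed reading of the ratio)."""
    total = verified + to_be_verified
    return verified / total if total else 0.0


# Made-up (captured, published) pairs, for illustration only.
items = [
    (date(2026, 3, 2), date(2026, 3, 4)),
    (date(2026, 3, 2), date(2026, 3, 9)),
    (date(2026, 3, 3), date(2026, 3, 6)),
]
print(median_days_to_publish(items))
print(verified_share(3, 1))
```

The median is in calendar days, so compare it to the 5-business-day review SLA with that difference in mind.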

Instrumentation specifics (what to add to CRM + enablement)

If you want clean reporting, standardize fields.

In CRM (opportunity-level fields):

- Primary competitor (picklist)

- Secondary competitor(s) (multi-select)

- Competitive stage entered (date)

- Primary wedge used (picklist: governance, security, ROI, implementation, etc.)

- Landmine question used (short text or picklist)

- Proof asset sent (picklist + link)

- Competitive outcome (won/lost/no decision)

In enablement (content metadata):

- Competitor tag

- Segment tag (vertical/deal band)

- Persona tag (CFO, IT, RevOps, end user)

- "Last verified" date (required)

- Owner (required)

Dashboard layout (one page, no fluff):

- Competitive win rate by competitor (trend line)

- Late-stage losses by competitor (bar)

- Battlecard usage vs win rate (scatter)

- Stale battlecards (table: competitor, last verified, owner)

- Top 5 loss themes (tag counts, segmented)

Revenue-leader workflows (drop-in agendas that make CI stick)

Most CI dies because it's "extra." Make it part of the cadence leaders already run.

Weekly pipeline review: 3 CI questions to ask

- "Where are we seeing competitors this week, and in which segment?" (forces pattern recognition)

- "What landmine question did we ask, and what did we learn?" (forces behavior, not slides)

- "What proof did we send, and did it move the deal?" (forces evidence)

If your pipeline review never asks these, CI stays theoretical.

Monthly enablement: 15-minute "compete drill" agenda

- 3 min: one verified update (pricing/packaging change, new objection pattern)

- 7 min: role-play (Quick Dismiss -> Landmine -> Wedge -> Proof)

- 3 min: "what to stop saying" (one red-flag line to retire)

- 2 min: assign one action (update proof link, add snippet, fix a stale card)

SKO workshop outline (60 minutes)

- 10 min: top 2 competitors + what changed in the last quarter

- 15 min: live objection handling drills (small groups)

- 15 min: proof library sprint (each team contributes 1 asset + where it lives)

- 10 min: "rip/replace" plays (switching triggers + safe migration story)

- 10 min: commitments (what gets updated, by whom, by when)

Exec/board: one-slide template (copy/paste)

Competitive Intelligence Snapshot (Qx 2026)

- Top competitors in pipeline: A, B, C (share of opps)

- Win rate vs each: A __%, B __%, C __%

- Top win drivers (3 bullets):

- Top loss drivers (3 bullets):

- Pricing pressure / discounting pattern:

- Biggest landmines (2):

- Actions this quarter (3): enablement, product, packaging, proof

Tool stack for competitive intelligence for B2B sales (by job-to-be-done)

Tools don't fix CI. Workflows do. But the right stack cuts friction and keeps intel current.

Pricing note: ranges below reflect typical market pricing based on public pricing pages where available, plus common market positioning for seat-based and enterprise CI tools. Quotes vary by seats, modules, and contract terms.

| Job-to-be-done | Best-fit tools | Typical pricing | Notes |
|---|---|---|---|
| Act on CI (lists/outreach) | Prospeo | Free -> ~$39+/mo | Self-serve |
| Battlecards + workflows | Klue | ~$16k-$90k/yr | Strong adoption |
| Monitoring + alerts | Crayon | ~$30k-$150k/yr | Needs curation |
| Mid-market CI tracking | Kompyte | ~$10k-$60k/yr | Caps matter |
| Digital signals | Similarweb, Semrush | ~$150-$500+/mo | Timing intel |
| Data suite (optional) | ZoomInfo | ~$15k-$45k/yr | If already bought in |

Starting points:

- Klue: https://klue.com/

- Crayon: https://www.crayon.co/

- Kompyte: https://www.kompyte.com/

- Similarweb: https://www.similarweb.com/

- Semrush pricing: https://www.semrush.com/pricing/

- ZoomInfo: https://www.zoominfo.com/

Optional add-ons by signal type (useful, not mandatory)

- Funding + org changes: Crunchbase (~$30-$100+/seat/month)

- Firmographics + risk: Dun & Bradstreet (~$5k-$50k+/year depending on scope)

- Market stats for exec decks: Statista (~$50-$200+/month for light use; enterprise higher)

- Social listening (brand + competitor chatter): Mention / Brandwatch (~$50-$500+/month; Brandwatch enterprise higher)

- Pricing monitoring: Price2Spy / Prisync (~$50-$300+/month)

Prospeo (execution layer for competitive plays)

Prospeo ("The B2B data platform built for accuracy") is the cleanest way to turn competitive talk tracks into actual pipeline without getting stuck in list-building purgatory.

In practice, that means: build a displacement list for a competitor's installed base, pull the 3-5 stakeholders you need to multi-thread (economic buyer, champion, IT/security, ops/admin, end user), and push verified contact data straight into your sequencing or CRM enrichment workflow. Prospeo gives you 300M+ professional profiles, 143M+ verified emails at 98% accuracy, and 125M+ verified mobile numbers with a 30% pickup rate, all refreshed every 7 days; you also get 30+ search filters and intent data across 15,000 topics powered by Bombora, so your "rip/replace" play hits the right accounts at the right time.

Pricing's self-serve and credit-based: free tier (75 emails + 100 Chrome extension credits/month), then roughly $0.01/email, with no contracts and cancel-anytime terms.

Links: https://prospeo.io/pricing, https://prospeo.io/b2b-leads-database, https://prospeo.io/b2b-data-enrichment, https://prospeo.io/intent-data

Klue (battlecards + win/loss workflows)

Klue's a clean "battlecards + workflow" product built for the one-screen reality and the governance you need (owners, updates, distribution). It's a strong default when you care about adoption more than novelty.

Typical pricing lands around $16k-$90k/year depending on users and scope. If you want CI to show up in calls, not in a folder, Klue's a safe bet.

Crayon (monitoring + alerts at enterprise scale)

Crayon's strength is breadth: it monitors a lot across a lot of competitors and keeps the stream flowing. Typical enterprise pricing is $30k-$150k/year.

The tradeoff is noise. Treat it like a sensor network, not the brain. If you don't staff curation, you'll pay a lot to feel busy.

Kompyte (structured tracking where limits matter)

Kompyte works well when you want structured tracking without going full enterprise. The practical gotcha is tier limits (companies tracked, users), which can quietly kneecap adoption.

Expect $10k-$60k/year depending on tier and seats.

Similarweb / Semrush (digital signals)

These aren't CI platforms, but they're excellent for "what changed?" signals: traffic shifts, keyword moves, channel bets, and campaign spikes. Usable plans typically run $150-$500+/month and can climb quickly.

Use them to inform timing and positioning. Don't use them to write talk tracks.

ZoomInfo (data, if you're already bought in)

ZoomInfo supports CI indirectly: account coverage, org charts, and contact discovery for multi-threading. Typical pricing is $15k-$45k/year depending on seats and modules.

Don't buy ZoomInfo just for CI. If it's already in your stack, it's a solid multiplier - just watch data decay and refresh rules.

90-day rollout plan (built for a 1-2 person CI team)

You don't need perfection. You need a loop that ships, gets used, and improves.

Days 1-30: Build the minimum viable CI loop

- Pick your "big 2" competitors (the ones showing up in late-stage deals).

- Create two one-screen battlecards using the template above.

- Stand up intake:

  - Win/loss: schedule 6-10 interviews (mix of wins/losses).

  - Transcripts: create a curator queue and a "to be verified" tag.

- Decide distribution: one place reps already live (enablement panel, CRM sidebar, or a pinned channel).

- Run one compete drill: 15 minutes, one competitor, one landmine question.

Deliverable at day 30: 2 battlecards, 1 proof library folder, 1 cadence on the calendar.

Days 31-60: Turn insights into behavior change

- Publish Why Win/Why Lose for the top competitor (one page).

- Add call snippets: 3 per competitor (best dismiss, best landmine, best proof).

- Instrument CRM fields (competitor, wedge, proof sent) and enforce them in stage progression.

- Fix one recurring loss theme with an asset that targets whatever blocks deals: security FAQ, implementation plan, ROI model, or migration checklist.

Deliverable at day 60: battlecards that sound like your best reps, not like marketing.

Days 61-90: Prove impact and scale to competitor #3-#4

- Launch competitor #3-#4 battlecards (same one-screen standard).

- Run a quarterly exec readout using the one-slide template.

- Report the scoreboard:

  - win rate vs top competitors

  - late-stage loss rate

  - battlecard usage + recency

  - top 3 actions for next quarter

Deliverable at day 90: a CI program leadership trusts because it's tied to pipeline reality.

Ethics & compliance: a CI code of conduct (simple, strict)

Ethical CI keeps you credible and keeps you out of trouble.

Do:

- Use public information (websites, docs, job posts, press releases).

- Run win/loss interviews with consent and clear purpose.

- Aggregate patterns from your own sales process (calls, notes, procurement feedback).

- Keep a visible "to be verified" layer until confirmed.

Don't:

- Ask prospects for confidential competitor documents.

- Misrepresent who you are to access gated info.

- Encourage anyone to violate NDAs or procurement rules.

- Publish "intel" without a timestamp and owner.

Rule of thumb: if you'd be angry seeing it on a competitor's battlecard about you, don't do it.

FAQ: competitive intelligence for B2B sales

What's the difference between competitive intelligence and competitor analysis for sales?

Competitive intelligence for B2B sales is a repeatable loop that turns deal signals (calls, win/loss interviews, objections) into talk tracks and plays that change win rates. Competitor analysis is usually static research; it can inform strategy, but it won't stay current unless you attach it to a cadence and an owner.

What should a sales battlecard include to be usable live on a call?

A live-call battlecard should fit on one screen and be usable in 10 seconds: a Quick Dismiss, one Landmine Question, one Value Wedge, and one Proof link. Add a last-verified date plus two-line objection counters so reps can respond without rambling.

How often should battlecards be updated in a fast-moving market?

For most teams, do a monthly refresh for the top 2-4 competitors plus a weekly "hotfix" loop from transcripts and field notes. A practical target is publishing verified updates within 5 business days of a new pattern showing up in calls.

What's a good tool for turning competitive plays into outbound lists?

For building verified stakeholder lists fast, Prospeo is a top pick: 300M+ professional profiles, 143M+ verified emails at 98% accuracy, 125M+ verified mobile numbers, and a 7-day refresh cycle. The free tier includes 75 emails + 100 Chrome extension credits/month; after that, pricing is self-serve at about $0.01/email.

How do I run a competitor displacement campaign once we have the intel?

Pick one wedge and one switching trigger, then map 3-5 stakeholders (economic buyer, champion, IT/security, ops/admin, end user) and sequence proof to each role. A simple goal is 20-30 target accounts per competitor per month, with one proof asset per persona, not one mega-deck.

Summary: make CI a loop, not a library

Competitive intelligence for B2B sales works when it's a living loop: win/loss + transcripts feed a curator queue, verified updates ship to one-screen battlecards, and leaders drill it in the cadence they already run. Tie it to adoption and competitive win rate, then add an execution layer (lists + outreach) so your best talk tracks actually reach decision-makers.