Intent Prediction in 2026: What It Means, How It Works, How to Measure It

Intent prediction sounds crisp until you try to ship it.

In a chatbot, intent is a label ("reset password"). In recommendations, it's a short-lived session state ("replenishing" vs "browsing"). In robotics, it's a safety-critical guess about a human goal.

That mismatch is why teams ship the wrong model, log the wrong data, and then stare at "95% accuracy" while the product still breaks.

What you need (quick version)

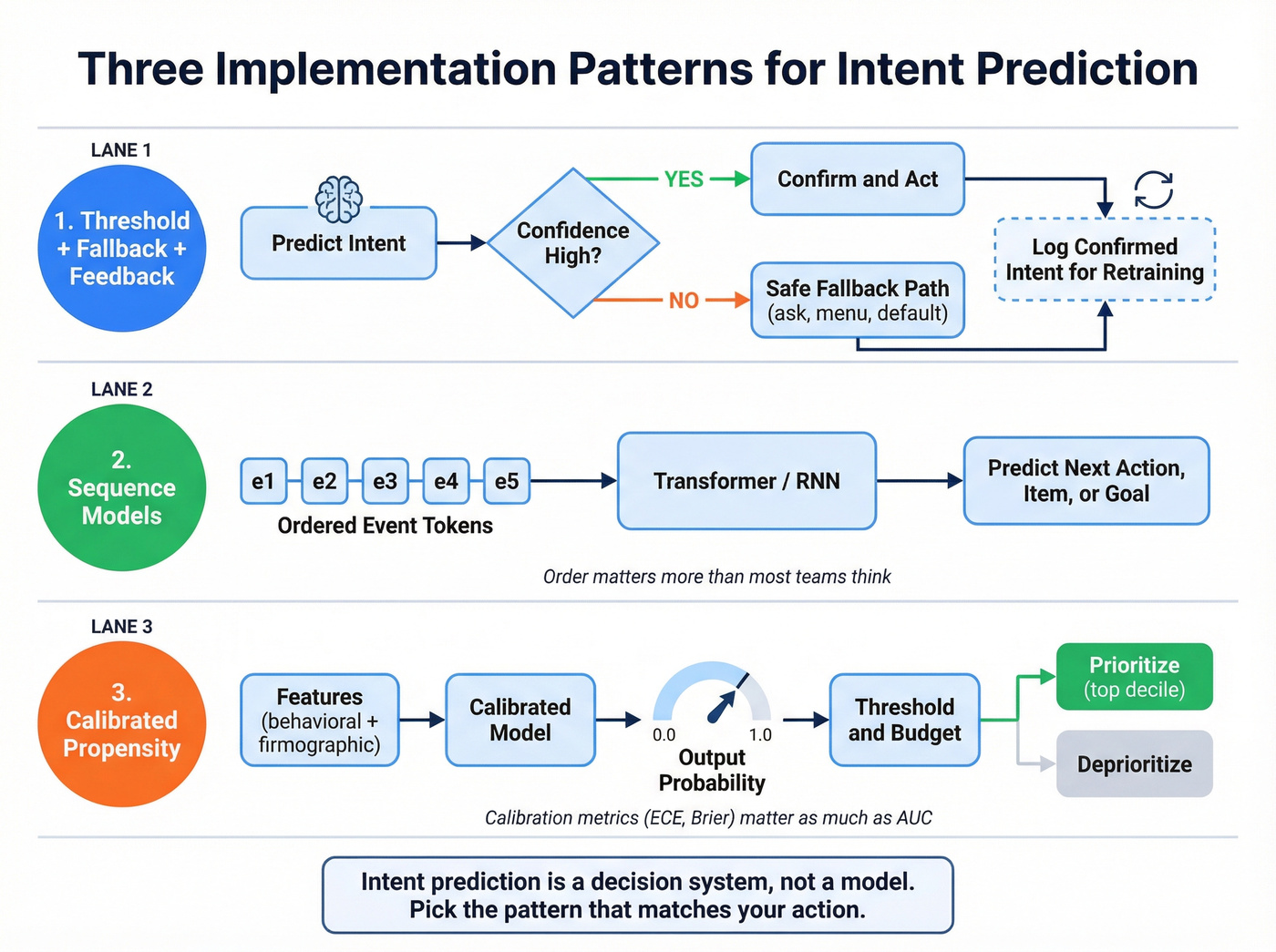

If you only remember one thing: intent prediction is a decision system, not a model. You're turning signals into an action, with a confidence level you can live with.

Quick checklist (before you touch a model):

- Define the action tied to intent (route, recommend, trigger, slow down, prioritize).

- Define the unit of intent (utterance, session, account-week, trajectory).

- Decide what "low confidence" does (abstain, ask a clarifying question, safe default).

- Instrument feedback (confirmations, corrections, downstream outcomes).

3 implementation patterns to start with:

- Threshold + fallback + feedback loop: predict intent, confirm if confidence is high, otherwise fall back to a safe capture path, then log the confirmed intent for retraining.

- Sequence models: treat events as ordered tokens; predict next action/item/goal from the sequence (order matters more than most teams think).

- Calibrated propensity: output a probability you can threshold and budget against (calibration metrics like ECE/Brier matter as much as AUC).
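
The first pattern can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the `decide` function, the `FeedbackLog` class, the 0.85 threshold, and the example utterance are all assumptions made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackLog:
    """Collects confirmed intents so they can be used for retraining (hypothetical helper)."""
    rows: list = field(default_factory=list)

    def record(self, text: str, predicted: str, confirmed: str) -> None:
        self.rows.append({"text": text, "predicted": predicted, "confirmed": confirmed})


def decide(predicted: str, confidence: float, threshold: float = 0.85) -> str:
    """Return the next step: confirm the prediction, or fall back to a safe capture path."""
    if confidence >= threshold:
        return f"confirm:{predicted}"
    return "fallback:menu"


log = FeedbackLog()
step = decide("billing", 0.91)
if step.startswith("confirm:"):
    # Assume the user answered "yes"; the confirmed label becomes training data.
    log.record("my invoice is wrong", predicted="billing", confirmed="billing")
```

The point of the sketch is the shape: every prediction ends in either a confirmation or a fallback, and both paths can produce a labeled row.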

What is intent prediction (and why the SERP is confused)

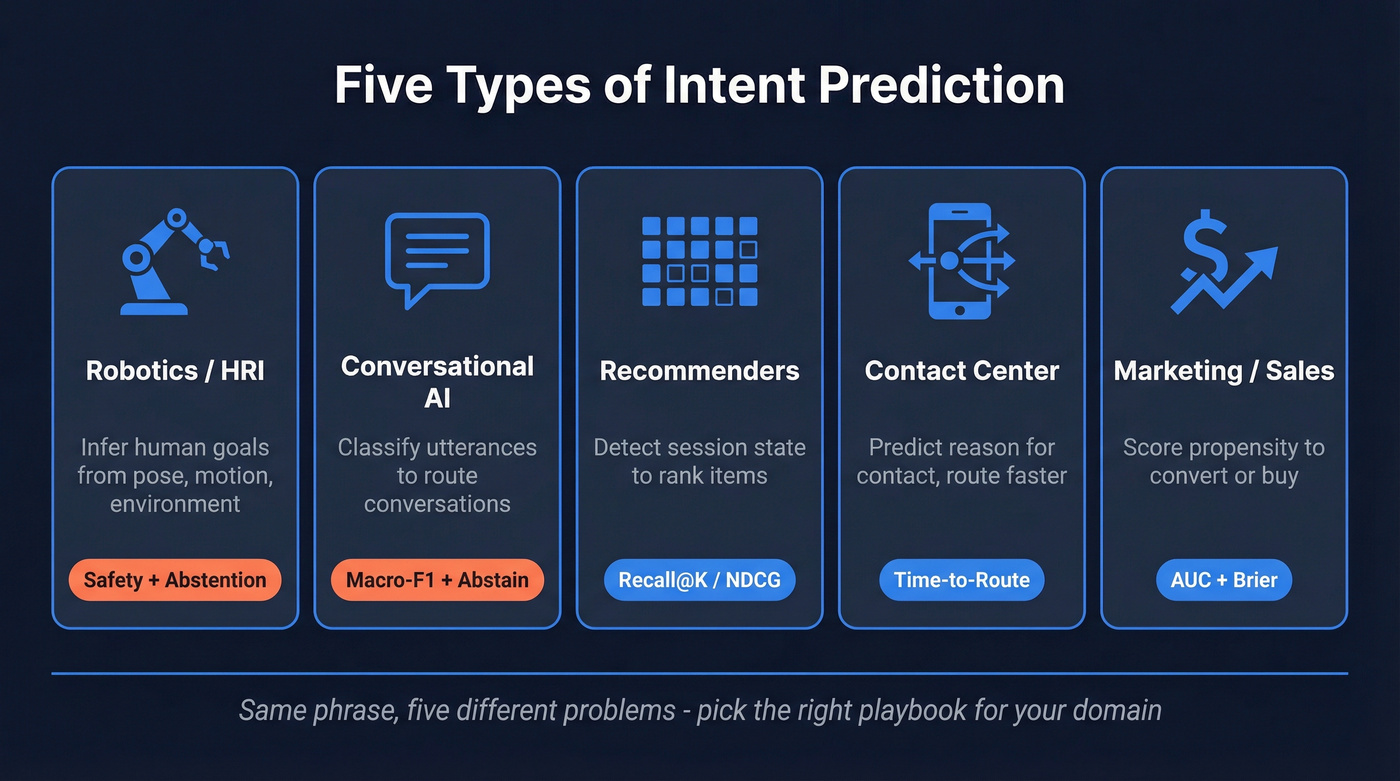

Intent prediction is inferring a goal (or next action) from observable signals, then using that inference to choose an action. The messy part is that different domains use the same phrase for different problems, so people argue past each other and copy the wrong playbook.

Here are the competing meanings you're seeing:

- Apple App Intents / iOS "IntentPrediction": Apple uses "intents" as an app-action abstraction for automation and app actions; it's a separate developer concept from ML intent modeling (not covering Apple's API details here).

- Conversational AI: "intent classification" for routing ("reset password," "cancel subscription," "talk to agent").

- Recommender systems: session intent as a latent state used to rank items ("browsing," "replenishing," "discovering").

- Contact centers: predict the reason for contact early, route faster, and reduce "hunting time" in IVR/menus.

- Marketing/sales: propensity or "buying intent" scores used to prioritize accounts/leads.

- Robotics / HRI (human-robot interaction): infer human behavioral intentions in real-world context so robots can act safely and helpfully.

Here's the thing: in half the real systems I've worked on, "intent" isn't a true label. It's a proxy you define because it's measurable (clicks, next item, confirmed route, conversion).

That's not cheating. That's how intent prediction becomes operational.

A unifying taxonomy: five types of "intent" (with examples)

Intent is overloaded, so the clean way to reason about it is to ask: what's the "intent object," what signals define it, and what action consumes it?

| Domain | "Intent" means | Signals | Typical model | Output/action | Primary metric |
|---|---|---|---|---|---|
| Robotics/HRI | human goal in context | pose, motion, environment | KG+LLM + sequence | safe assist | safety + abstention |
| Conversational AI | utterance label | text + dialogue state | classifier | route / ask | macro-F1 + abstain |
| Recommenders | session state | clicks, views, recency | transformer | rank items | Recall@K / NDCG |
| Contact center | reason for contact | history + transcript + audio | classifier + rules | route flow | time-to-route + transfers |
| Marketing/sales | propensity | firmographic + behavioral | calibrated model | prioritize | AUC + Brier |

Two ideas make this taxonomy work across domains:

Context dependence: a 2026 Nature Scientific Reports paper on HRI intention prediction splits context into user context vs physical context and explains why the same behavior can imply different goals in complex, unstructured environments. That's why many modern systems lean on interpretable hybrids (knowledge graphs plus LLM reasoning): "why did you think that?" matters when safety is on the line, and you often need a chain of evidence that a human can sanity-check.

Intent is often a proxy label: you can't observe "true intent," so you define measurable intent facets (clicks, next item, confirmed route, conversion) and use them to improve downstream decisions.

Robotics/HRI intention prediction

In robotics, intent prediction means inferring a human's goal early enough to provide timely, appropriate service in messy environments. The practical constraint is that the same motion can mean different things depending on user context (needs, history, location) and physical context (objects, layout, time). A concrete example: a person stepping toward a doorway could be leaving, making room, or approaching an object, and the robot's action ranges from yielding to assisting.

The most dangerous failure mode isn't low accuracy. It's false confidence.

A robot that's confidently wrong behaves unsafely, so robotics teams obsess over uncertainty handling, interpretability, and conservative policies (slow down, yield, ask for confirmation) when confidence drops.

Conversational AI intent classification

This is the classic chatbot problem: map an utterance to a predefined label and route it. The pipeline is preprocessing -> model inference -> post-processing/routing, with confidence thresholds and an "unknown" fallback.

A concrete "signals -> model -> action" example: user text + last two turns -> intent classifier -> route to "billing," then trigger a billing workflow or handoff to an agent. The most common failure mode is taxonomy overlap: if "cancel subscription" and "refund" share language and your business rules aren't crisp, the model looks bad even when it's doing exactly what you asked.

Intent sets are business-defined, so your model quality is capped by your taxonomy quality. Shadecoder's intent classification guide is a solid primer: https://www.shadecoder.com/topics/intent-classification-a-comprehensive-guide-for-2026

Session/recommender intent

In recommenders, "intent" is a latent session state: what the user is trying to do right now. You rarely get ground truth, so you use proxy labels (next item, dwell time, add-to-cart) and multi-task learning.

A concrete example: last 30 clicks + recency + device -> transformer -> rank top-20 items; the "intent" is the hidden state that makes the ranking coherent. The failure mode to watch is proxy leakage: if your label is "clicked item" but your features sneak in post-click signals (or you train/evaluate with leakage across sessions), you'll ship a model that looks amazing offline and collapses online.

Research edge (and a caveat): SessionIntentBench is a benchmark designed to evaluate session intent understanding at scale across multiple datasets and tasks. It's a strong sign the field is converging on "session intent" as a first-class evaluation target, but you still need to validate on your own event schema and business outcomes.

Contact-center routing intent

Here intent means "reason for contact," predicted early enough to route the caller without forcing them through menus. A concrete example: caller history + first 10-20 seconds of transcript (and optionally audio features) -> intent model -> route to the right queue; if confidence is high, confirm; if not, fall back to a menu or bot.

The failure mode shows up in ops metrics: misroutes spike transfers, and transfers spike handle time and customer frustration. This is where calibration stops being academic. If you route wrong, you don't just lose accuracy - you burn minutes and agent capacity.

Marketing/sales propensity ("buying intent")

In B2B, intent prediction usually means propensity: probability an account or lead will convert, buy, renew, or respond. A concrete example: firmographics + recent product usage + web behavior + email engagement -> calibrated model -> prioritize the top decile for SDR outreach and allocate spend.

The operational question is simple: can you predict buyer intent early enough to change what sales and marketing do this week?

The failure mode is decile instability. Teams optimize AUC, then treat raw probabilities as trustworthy. The result: the "top 10%" changes wildly week to week, reps stop believing the score, and the whole program dies. If you're going to threshold and tier accounts, calibration (ECE/Brier) is what turns a score into something you can actually run the business on.
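
One way to make decile instability visible is to measure how much the top bucket overlaps from one week to the next. The sketch below is an assumption-heavy illustration: the function names, the Jaccard overlap measure, and the toy account scores are all hypothetical, and a real check would run on your own scored accounts.

```python
def top_decile(scores: dict) -> set:
    """Return the account IDs in the top 10% by score (at least one account)."""
    n = max(1, len(scores) // 10)
    ranked = sorted(scores, key=scores.get, reverse=True)
    return set(ranked[:n])


def decile_overlap(last_week: dict, this_week: dict) -> float:
    """Jaccard overlap of the two top deciles: 1.0 is identical, 0.0 is fully churned."""
    a, b = top_decile(last_week), top_decile(this_week)
    return len(a & b) / len(a | b)


# Toy data: 20 hypothetical accounts with scores between 0 and 1.
week_1 = {f"acct{i}": i / 20 for i in range(20)}
week_2_stable = dict(week_1)
week_2_churned = {f"acct{i}": (19 - i) / 20 for i in range(20)}

stable = decile_overlap(week_1, week_2_stable)
churned = decile_overlap(week_1, week_2_churned)
```

A low overlap week after week is a signal to look at calibration and feature recency before reps lose trust in the score.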

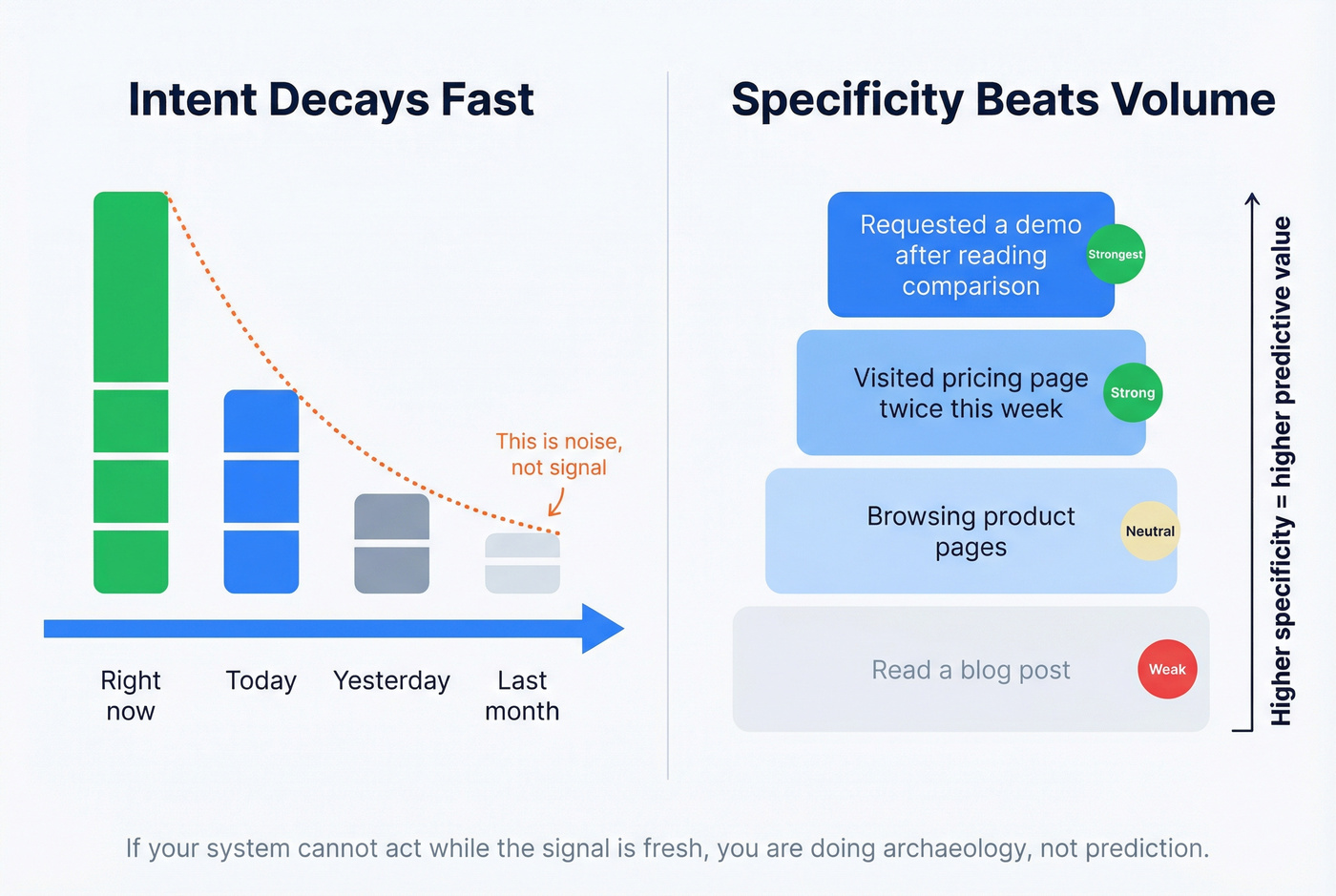

Context-first intent prediction: why identity matters less than recency

Most projects over-invest in identity and under-invest in context and decay. Intent isn't a personality trait; it's a short-lived state.

Two rules that travel across domains:

- Intent decays fast. Treat signals as having a half-life: yesterday's behavior is weaker than today's, and last month's is often noise.

- Specificity beats volume. "Reading about EVs" is vague; "best hybrid SUVs under 40k" is intent-rich. In B2B, "visited pricing page" is stronger than "read a blog post."
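
Both rules can be expressed as a recency-weighted score. This is a minimal sketch under stated assumptions: the 7-day half-life and the signal strengths (3.0 for a pricing-page visit, 1.0 for a blog read) are illustrative values, not recommendations.

```python
def decay_weight(age_days: float, half_life_days: float = 7.0) -> float:
    """Exponential decay: a signal loses half its weight every half_life_days."""
    return 0.5 ** (age_days / half_life_days)


def recency_score(events, half_life_days: float = 7.0) -> float:
    """Sum of signal strengths, each discounted by its age.

    events: iterable of (age_days, strength) pairs.
    """
    return sum(strength * decay_weight(age, half_life_days) for age, strength in events)


# One specific, fresh signal (pricing page today) vs. five vague, stale ones
# (blog reads from a month ago). Strengths are hypothetical.
fresh_specific = recency_score([(0, 3.0)])
stale_volume = recency_score([(30, 1.0)] * 5)
```

With these assumed values, a single fresh pricing-page visit outweighs five month-old blog reads, which is the behavior the two rules describe.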

If your system can't act while the signal is fresh, you're not predicting intent. You're doing archaeology.


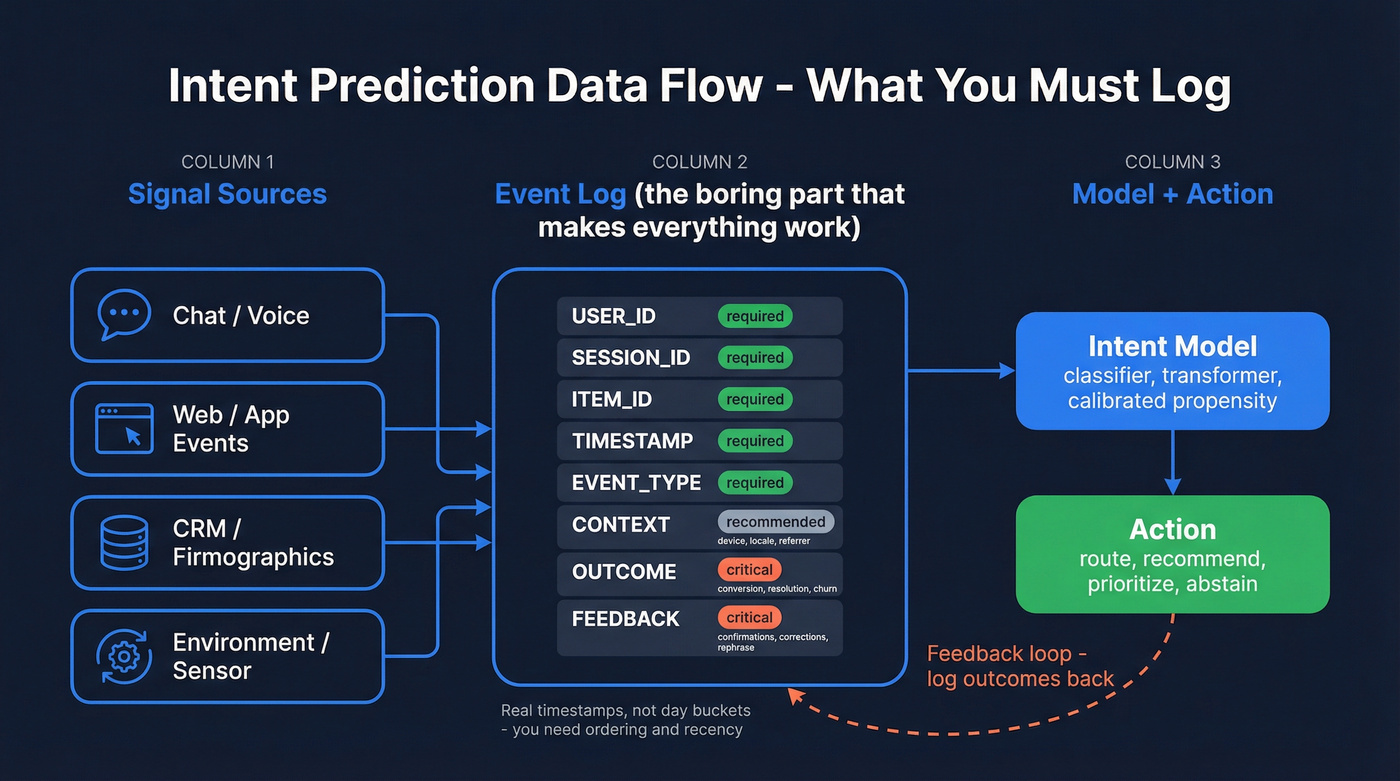

Signals & data for intent prediction: what you must log

Models don't fail because "AI is hard." They fail because teams don't log the right events with the right keys, so the model can't learn sequences, context, or feedback.

In our experience, most "intent" projects fail at instrumentation, not modeling.

Minimum viable logging checklist:

- Stable IDs: user/account ID, session ID, channel ID (chat/call/web/app).

- Event stream: every meaningful interaction (view, click, add-to-cart, utterance, transfer, agent tag).

- Timestamps: real timestamps, not day buckets. You'll need ordering and recency.

- Context fields: device, locale, entry point, referrer, environment state (robotics), queue/agent availability (contact center).

- Outcome fields: conversion, resolution, handle time, refund, churn, meeting booked.

- Feedback fields: confirmations/corrections ("Yes, that's why I called"), agent disposition, user rephrase after fallback.

Contact-center add-on: voice features (prosody)

If you've got consent and a clear purpose, audio can add signal that text misses:

- speaking rate (fast/slow), interruptions/overlap, silence duration

- energy/arousal and sentiment proxies

- talk ratio (customer vs agent) and escalation patterns

These features are powerful - and sensitive. Treat them like profiling data: minimize retention, document purpose, and don't reuse them for unrelated targeting.

Mini schema (the boring part that makes everything else possible):

- USER_ID (required)

- ITEM_ID (required)

- TIMESTAMP (required)

- Optional but common: SESSION_ID, EVENT_TYPE, CONTEXT_JSON

This mirrors the Amazon Personalize interaction schema used in contact-center intent prediction: USER_ID, ITEM_ID, TIMESTAMP, where ITEM_ID can literally be the intent label (encoded as a token). In the AWS Connect reference architecture, Lambda calls Personalize, and Lex/menu is the fallback when confidence is low.

One more thing: don't aggregate away the raw event stream too early. You'll want ordered sequences later.
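
To make the schema concrete, here is a small sketch of an interaction record and a helper that rebuilds ordered sequences from the raw stream. The `Interaction` class and `sequences_by_session` function are hypothetical names for illustration; this is not Amazon Personalize's API, only a record shaped like the fields listed above.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Interaction:
    user_id: str
    item_id: str          # can be a product ID or an intent label encoded as a token
    timestamp: float      # real timestamp (Unix seconds), not a day bucket
    session_id: Optional[str] = None
    event_type: Optional[str] = None


def sequences_by_session(events):
    """Group raw events by session (falling back to user) and order them by time."""
    grouped = defaultdict(list)
    for event in events:
        grouped[event.session_id or event.user_id].append(event)
    return {
        key: [e.item_id for e in sorted(group, key=lambda e: e.timestamp)]
        for key, group in grouped.items()
    }


raw = [
    Interaction("u1", "pricing", 30.0, session_id="s1"),
    Interaction("u1", "home", 10.0, session_id="s1"),
    Interaction("u2", "billing", 5.0),
]
ordered = sequences_by_session(raw)
```

Because the raw events keep their timestamps, the ordering can be rebuilt at any time; a pre-aggregated daily count could not be turned back into a sequence.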

Modeling patterns for intent prediction that actually work

Most teams jump straight to "fine-tune a model." That's backwards. The right question is: what's the cheapest pattern that's safe, maintainable, and improves outcomes?

Multi-class classification + "unknown" intent

Use this if: you have a fixed set of intents (5-50), you need deterministic routing, and you can tolerate abstention.

Skip this if: your "intent" is really a continuous state (shopping session, browsing mood) or your label set changes weekly.

Key design choices:

- Add an unknown / fallback class and treat it as a first-class outcome.

- Pair with slot filling (entity extraction) when the action needs parameters ("change plan to X," "cancel order #123").

- Optimize for macro-F1 and abstention quality, not just accuracy.

I've seen teams hit 95% accuracy and still melt down because the remaining 5% clusters around high-stakes intents (billing, cancellations). That's not a model issue. It's taxonomy plus thresholding.
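
A toy example shows why accuracy hides this. The labels ("faq", "cancel") and the 95/5 split below are made up for illustration; the `macro_f1` helper is a plain-Python sketch of the standard per-class F1 averaged with equal weight per class.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1, so rare intents count as much as common ones."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# 95 low-stakes chats and 5 high-stakes cancellations; the model never predicts "cancel".
y_true = ["faq"] * 95 + ["cancel"] * 5
y_pred = ["faq"] * 100

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
macro = macro_f1(y_true, y_pred)
```

Here accuracy is 0.95 while macro-F1 is roughly 0.49, because the model gets every cancellation wrong.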

Sequence models for session intent

Use this if: intent is expressed through ordered behavior (clickstreams, carts, content sessions).

Skip this if: you only have one event per user or your logging can't preserve order.

Instacart's approach is the modern playbook: treat a session as a sequence of product IDs and train a BERT-like transformer with masked language modeling to predict missing tokens / next item. The key result: randomizing order makes Recall@K 10-40% worse depending on K. That's not a rounding error.

My opinion: if your team can't guarantee correct ordering and timestamps, don't build a transformer recommender yet. Fix the data first.

Hierarchical / multi-task learning

Use this if: you can define multiple intent facets that are individually learnable and jointly useful (genre + action type + freshness preference).

Skip this if: you can't define proxy labels cleanly, or you don't have enough data per facet.

Netflix's hierarchical multi-task setup is a great example: predict session intent facets first, then use them as features for next-item prediction. It's not "one model to rule them all." It's a structured pipeline where intent predictions become intermediate representations.

Multi-task only helps when tasks are aligned. Throw in every label you can think of and you'll get negative transfer and a debugging nightmare.

GenAI categorization vs brittle phrase lists

Use this if: you're doing intent detection/categorization at scale in messy language (contact center transcripts, long chats), and your current system is a graveyard of phrase rules and synonyms.

Skip this if: you need strict determinism, ultra-low latency on-device, or you can't tolerate occasional made-up rationales.

A pattern that's working in contact centers: replace phrase matching with LLM-assisted categorization driven by natural-language category definitions. AWS Contact Lens' generative categorization is a good example of the shift. It doesn't replace predictive routing models; it makes your labels and training data less painful, which makes everything downstream better.

Mini case studies: Netflix, Instacart, and contact-center routing

| Case | What they predicted (intent definition) | What changed (metric + impact) |
|---|---|---|
| Netflix | session intent facets (proxy labels like "discovering" vs "continue watching") | +7.4% offline next-item prediction accuracy vs best baseline |
| Instacart | next product as session intent (sequence-as-language) | Recall@K improved; +30% lift in user cart additions for cart recommendations |
| Contact center | reason for contact early (route intent) | "Hunting time" -54% (5.15 -> 2.37 minutes) |

Netflix (recommenders): Netflix predicts session intent facets and uses them to improve next-item prediction. They reported a 7.4% improvement in offline next-item prediction accuracy versus their best baseline. The lesson isn't "copy their architecture." It's: define intent in a measurable way that improves the downstream decision.

Instacart (e-commerce sessions): Instacart frames intent as "what product is the user likely to want next," using a transformer trained on ordered sequences of product IDs. Two takeaways matter in production: (1) order matters (randomization hurts Recall@K by 10-40%), and (2) they tied it to business outcomes: 30% lift in user cart additions for cart recommendations.

Contact centers (routing): The AWS reference architecture is a clean starter pattern: predict intent; if confidence clears a threshold, ask the caller to confirm; otherwise fall back to Lex or a menu; then feed the confirmed intent back into training. A 2026 Natterbox benchmarks report (58.2M calls + 178 leaders) found "hunting time" dropping 54% (5.15 -> 2.37 minutes) and highlights how common human-in-the-loop workflows are in practice.

If you want the underlying posts: Netflix's write-up is on the Netflix Tech Blog (https://netflixtechblog.com/fm-intent-predicting-user-session-intent-with-hierarchical-multi-task-learning-94c75e18f4b8) and Instacart's is on the Instacart Tech Blog (https://tech.instacart.com/sequence-models-for-contextual-recommendations-at-instacart-93414a28e70c).

Evaluating intent prediction: accuracy isn't enough (calibration, ranking, lift)

Accuracy is a trap metric because many systems don't care about "the right label." They care about ranking, confidence, and decision quality.

| Use case | What to measure | Why it matters |
|---|---|---|
| Chat routing | macro-F1 + abstain rate | long-tail safety |
| Ranking/recs | Recall@K / NDCG | top-K utility |
| Propensity | AUC + precision@decile | prioritization |
| Thresholding | ECE + Brier | confidence trust |
| Business | lift + cost | real outcome |
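
For the ranking row, the two metrics are short enough to write out. This is a sketch with binary relevance (an item is either relevant or not); the function names and the toy item IDs are assumptions for illustration.

```python
import math


def recall_at_k(ranked, relevant, k):
    """Fraction of relevant items that appear in the top k of the ranking."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    return len(set(ranked[:k]) & relevant) / len(relevant)


def ndcg_at_k(ranked, relevant, k):
    """Binary-relevance NDCG: rewards placing relevant items near the top."""
    relevant = set(relevant)
    dcg = sum(1.0 / math.log2(i + 2) for i, item in enumerate(ranked[:k]) if item in relevant)
    ideal = sum(1.0 / math.log2(i + 2) for i in range(min(k, len(relevant))))
    return dcg / ideal if ideal else 0.0


ranked = ["a", "b", "c", "d"]   # model's ranking, best first
relevant = {"b", "d"}           # items the user actually interacted with

r2 = recall_at_k(ranked, relevant, k=2)
r4 = recall_at_k(ranked, relevant, k=4)
n4 = ndcg_at_k(ranked, relevant, k=4)
```

Recall@K only asks whether the relevant items made the cut; NDCG also penalizes a ranking that buries them lower in the list.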

A concrete threshold-sweep example (how teams actually ship this)

Suppose you're routing 1,000 daily chats across 9 intents. You set up three bands:

- Auto-route if confidence >= T_high

- Clarify if T_low <= confidence < T_high

- Fallback if confidence < T_low (menu or agent)

Now do a sweep:

- At T_high = 0.90, you might auto-route 55% of chats with 97% correctness, clarify 30%, fallback 15%.

- At T_high = 0.75, you might auto-route 80% of chats but correctness drops to 92%, and transfers spike.

Pick the threshold that minimizes total cost, not the one that maximizes accuracy. In contact centers, a wrong route costs minutes and transfers; in commerce, a wrong recommendation costs engagement; in robotics, a wrong action can be unsafe. The best threshold is different per intent, and high-cost intents deserve stricter thresholds.
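
A cost-based sweep can be sketched as follows. To keep it short, the example collapses the three bands into two (auto-route above the threshold, clarify below), and the costs (5 minutes for a wrong auto-route, 1 minute for a clarifying question) and the six sample records are hypothetical.

```python
def total_cost(records, t_high, cost_wrong, cost_clarify):
    """Cost of a threshold: wrong auto-routes are expensive, clarifications are cheap."""
    cost = 0.0
    for confidence, is_correct in records:
        if confidence >= t_high:
            if not is_correct:
                cost += cost_wrong      # auto-routed to the wrong place
        else:
            cost += cost_clarify        # asked a clarifying question instead
    return cost


def best_threshold(records, thresholds, cost_wrong=5.0, cost_clarify=1.0):
    """Return (threshold, cost) for the candidate with the lowest total cost."""
    return min(
        ((t, total_cost(records, t, cost_wrong, cost_clarify)) for t in thresholds),
        key=lambda pair: pair[1],
    )


# (confidence, was the predicted intent correct?) from a labeled validation set.
records = [(0.95, True), (0.92, True), (0.88, True), (0.80, False), (0.78, True), (0.60, False)]
choice = best_threshold(records, thresholds=[0.75, 0.90])
```

With these assumed costs the stricter threshold wins even though it auto-routes fewer chats; change `cost_wrong` per intent and the best threshold moves with it.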

Calibration: the difference between a score and a decision tool

If probabilities drive actions, calibration matters as much as AUC.

- Calibration definition: when the model outputs confidence c, it should be correct c of the time.

- ECE (Expected Calibration Error): bin predictions by confidence, then compute the weighted average of |accuracy - confidence| across bins.

- Brier score: mean squared error of probabilistic predictions; perfect is 0 and worst is 1 (binary case).
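
Both metrics fit in a few lines of plain Python. This is a minimal sketch assuming equal-width confidence bins and binary outcomes; the function names and the toy inputs are illustrative.

```python
def brier_score(probs, outcomes):
    """Mean squared error between predicted probabilities and 0/1 outcomes."""
    return sum((p - y) ** 2 for p, y in zip(probs, outcomes)) / len(probs)


def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average of |accuracy - confidence| over equal-width confidence bins."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)   # conf == 1.0 goes in the last bin
        bins[index].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(conf for conf, _ in members) / len(members)
        accuracy = sum(ok for _, ok in members) / len(members)
        ece += (len(members) / total) * abs(accuracy - avg_conf)
    return ece


# Ten predictions at 0.9 confidence: calibrated if 9 are right, overconfident if only 5 are.
calibrated = expected_calibration_error([0.9] * 10, [1] * 9 + [0])
overconfident = expected_calibration_error([0.9] * 10, [1] * 5 + [0] * 5)
```

In the overconfident case the model says 0.9 but is right half the time, so the ECE is about 0.4; that gap is what breaks thresholds.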

A clean calibration explainer is on the ICLR blog: https://iclr-blogposts.github.io/2026/blog/calibration/ For Brier, Neptune's guide is practical: https://neptune.ai/blog/brier-score-and-model-calibration

What "good" looks like in propensity (real numbers): on 62,859 leads (Jul 2024-Jul 2025), a calibrated lead scoring ensemble achieved AUC 0.841, Brier 0.146, and +19% top-decile precision versus a logistic regression baseline. That's the practical win: the top bucket gets meaningfully better, and the probabilities are stable enough to tier leads into operational segments.

If you're doing "buying intent," hold yourself to this standard: your top decile is measurably better, and your probabilities are calibrated enough to threshold without chaos.

Intent prediction in production: latency, long-tail, abstention, and feedback loops

A model that looks great in a notebook can still be a production liability. The failure mode is always the same: long-tail phrasing, edge cases, and the last chunk of uncertainty you didn't design for.

One practitioner thread I bookmarked described a familiar setup: ~100ms latency, 9 intents, 25k samples, ~95% accuracy, and a stubborn long-tail 5% that won't go away. Even with a fine-tuned small model and conversation history, rare phrasings keep slipping through.

Look, chasing the last 5% with a bigger model is how teams light money on fire.

I've watched a team burn a quarter doing exactly that, only to realize the real issue was product design: they didn't build a safe abstention path, so every uncertain prediction still forced a brittle action.

Mitigation checklist (what actually works):

- Abstain + fallback: if confidence < threshold, ask a clarifying question or route to a safe default.

- Confirm high-confidence predictions: "Sounds like you're calling about billing - is that right?" That turns predictions into labeled data.

- Weekly iteration loop: review misroutes, add training examples, update taxonomy, redeploy.

- Long-tail harvesting: log rephrases after fallback; those are gold training data.

- Guardrails by intent: stricter thresholds for high-cost intents (cancellations, fraud, safety).

- Drift monitors: track intent distribution shifts and confidence histograms, not just accuracy.
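
A drift monitor on the intent distribution can start very simply. The sketch below uses total variation distance between last period's predicted intents and this period's; the distance measure, function names, and toy labels are assumptions chosen for illustration, and other measures would work as well.

```python
from collections import Counter


def intent_shares(labels):
    """Share of traffic per predicted intent."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {intent: count / total for intent, count in counts.items()}


def total_variation(baseline, current):
    """0.0 means identical intent mix; 1.0 means completely different."""
    p, q = intent_shares(baseline), intent_shares(current)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in set(p) | set(q))


last_week = ["billing"] * 8 + ["cancel"] * 2
this_week = ["billing"] * 5 + ["cancel"] * 5   # cancellations jumped from 20% to 50%

drift = total_variation(last_week, this_week)
```

A jump like this one (about 0.3) does not say the model is wrong, only that the traffic changed enough to warrant reviewing misroutes and thresholds.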

If you don't have a feedback loop, you don't have this capability. You have a demo.

Privacy & compliance constraints (2026 reality check)

This is profiling. In 2026, that means privacy and compliance shape what signals you can use and how you can act on them, especially if intent scores change eligibility, pricing, routing priority, or outreach intensity.

Two trends drive risk:

- Enforcement on secondary use + consent: collecting data for one purpose and quietly reusing it for another creates real exposure.

- Fingerprinting / probabilistic identifiers scrutiny: probabilistic IDs and fingerprinting techniques are under heavier scrutiny, especially for targeted advertising or cross-context tracking.

US state law is also getting sharper teeth around profiling:

- Connecticut amendments effective July 1, 2026 add rights to contest profiling decisions and access inferences derived from personal data.

- Minors restrictions are tightening (Colorado amendments effective Oct 1, 2025 restrict targeted advertising to minors and add heightened obligations).

Do / don't (practical version):

- Do minimize data: log what you need for the decision, not everything you can collect.

- Do separate product analytics from marketing targeting where possible (purpose limitation).

- Do support access/deletion and be ready to explain "inferences" if your system generates them.

- Don't rely on fingerprinting-like identifiers as a workaround when consent is missing.

- Don't treat "intent" as harmless because it's "just a score." Scores still count as profiling when they drive decisions.

Operationalizing buying intent in B2B (when intent prediction meets outreach)

B2B intent prediction usually dies in the handoff. Marketing has an in-market account list. Sales has a sequencer. RevOps has a CRM full of duplicates. And nobody turns "intent" into reachable people fast enough to matter.

Here's the workflow that actually holds up: detect intent -> map to ICP accounts -> find the right roles -> verify contactability -> launch outreach while signals are fresh. Once you've got a calibrated score, the bottleneck becomes contactability and speed, not modeling.

This is where tools like Prospeo show up naturally in the stack: not as "the model," but as the activation layer that turns an account-level intent signal into verified contacts you can reach today, with data that doesn't go stale before your SDR even opens the queue. If you’re building this motion, start with intent signals, then operationalize it with account scoring and a clean lead qualification process.

One CTA (and only one)

The article's core insight: intent prediction is a decision system, not just a model. Prospeo closes that loop - intent signals identify in-market accounts, then 143M+ verified emails and 125M+ mobile numbers let you act on that signal instantly.

Skip the model-to-action gap. Reach buyers already showing intent.

FAQ about intent prediction

Is intent prediction the same as intent classification?

Intent classification is a common approach where the output is a discrete label (like "billing" or "cancel"), while intent prediction also covers ranked outputs (next-item) and calibrated probabilities (propensity) that drive thresholds, budgets, and fallbacks. In practice, classification is usually one of a few building blocks in a production decision system.

What metrics should I use: accuracy, AUC, Recall@K, or calibration?

Use metrics that match the decision: macro-F1 for multi-class routing, Recall@K/NDCG for ranking, AUC plus precision@decile for prioritization, and ECE/Brier when you act on probabilities. Rule of thumb: if you're thresholding at 0.8-0.9 confidence, track ECE and a reliability diagram.

How do confidence thresholds work safely in production?

A safe setup uses three zones: auto-act above a high threshold (often with a confirmation), clarify in the middle band, and fall back below a low threshold to a menu/agent/safe default. Teams usually start with T_high around 0.85-0.95 and tune per intent based on transfer cost, handle time, and user frustration, not just offline accuracy.

Why does the "last 5%" of intent errors matter so much?

That last 5% concentrates in rare phrasing and high-cost edge cases, so it causes disproportionate damage: wrong transfers, broken automations, safety risks, or lost deals. The best fix is usually operational: abstention paths, per-intent thresholds, and weekly error harvesting, because chasing it with a bigger model often increases latency without eliminating the weird cases.

What's a good free way to turn buyer intent into verified contacts?

Start with a tool that pairs intent signals with verification so outreach doesn't burn your domain. Prospeo's free tier includes 75 emails + 100 Chrome extension credits/month, with 98% email accuracy and a 7-day refresh cycle, which is enough to run a simple "top-decile only" rule so reps focus on the highest-propensity accounts each week.

Summary: how to ship intent prediction without fooling yourself

Treat intent prediction as a decision system: define the action, define the unit (utterance/session/account-week), design abstention and fallbacks, and log feedback so the system learns. Then pick the simplest modeling pattern that fits your domain, and judge it with calibration and business lift, not a vanity accuracy number. For GTM teams, that “decision system” framing maps cleanly to signal-based selling, in-market account intent data, and website visitor scoring when you need recency-weighted prioritization.