Personalized Drip Campaigns in 2026: A Practical Playbook (Rules + Templates)

Unsubscribes spike early in a sequence, and everyone blames the copy.

Most of the time, it isn't the copy. It's the personalization inputs. Wrong role, stale company info, or a "first name" that's actually "info@" will make even good messaging feel tone-deaf.

Here's the thing: "personalization at scale" is mostly fake when the data's wrong. If your CRM says a CFO is a "Marketing Manager," your clever dynamic block just becomes a faster way to annoy the wrong person. The fix is boring (clean fields + simple rules), but it's the only fix that survives real sending volume, handoffs between teams, and the inevitable "we imported a list from somewhere" moment.

Personalized drip campaigns work when they're consistent: clean data, simple logic, and stop conditions that prevent you from hammering people who already converted.

What you need (quick version)

Implement first (the trio)

- One IF/THEN rule + a fallback: IF industry = SaaS THEN show SaaS proof; ELSE show generic proof. (Fallback is mandatory.)

- A 3-email sequence + stop conditions: Stop on purchase, booked meeting, reply, unsubscribe, hard bounce, or "converted" event.

- SPF/DKIM/DMARC + one-click unsubscribe (for bulk sending): Skip this and your "personalization" lands in spam.

The mini-framework

Data -> Rules -> Templates -> Deliverability -> Measurement

What "personalized drip campaigns" means (and how it differs from nurture)

A personalized drip campaign is a scheduled, automated series of messages that adapts based on what you know about someone (or what they do). Think: "Day 0, Day 2, Day 4" with a couple of dynamic blocks that change by role, stage, or interest.

A nurture sequence is broader and more signal-driven. It's often triggered by behavior over time (content consumption, product usage, sales stage movement) and can branch more aggressively.

Practical difference:

- Drip campaigns win when you need consistency: onboarding, trial conversion, post-demo follow-up, win-back.

- Nurture wins when you need patience: longer buying cycles, multi-stakeholder deals, education before a sales motion.

Also: opens aren't a clean signal anymore. Apple's Mail Privacy Protection (MPP) inflates opens, so treat open rate as directional. Clicks, replies, and downstream events (demo booked, trial activated, order placed) are the real scoreboard.

Hot take: if your deal size is small and your product's self-serve, you don't need a 14-branch "journey." You need one tight drip that stops on conversion and doesn't embarrass you when it's forwarded.

Personalization inputs: the data plan (what to collect + where it comes from)

Personalization doesn't start in your ESP. It starts in your data model.

A useful way to think about inputs is Litmus's taxonomy:

- Zero-party data: someone tells you directly (preferences, goals).

- First-party data: you observe on your own properties (site behavior, product events, CRM activity).

- Second-party data: a partner's first-party data (co-marketing, integrations).

- Third-party data: external sources (firmographics, technographics, contact data).

The guardrail that matters: clean data + fallbacks. If your dynamic block depends on a field that's blank 30% of the time, you're building a drip that randomly breaks.

The minimum viable personalization dataset (7 fields)

| Field | Why it matters | Example values | Fallback |
|---|---|---|---|
| Role | Message angle | VP Sales, RevOps | "team" |
| Company | Social proof fit | Acme Inc. | "your org" |
| Industry | Use cases | SaaS, FinServ | "your industry" |
| Lifecycle stage | CTA + urgency | lead, trial, customer | "exploring" |
| Last action | Context | requested pricing | "checked us out" |
| Product interest | Relevance | API, reporting | "core features" |
| Region | Compliance + tone | US, DACH | "your region" |
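One way to make the fallback column enforceable is to keep it as data and resolve every contact through it before rendering. This is a minimal sketch, not a prescribed implementation: the field keys (`lifecycle_stage`, `last_action`, and so on) and the `resolve_fields` helper are hypothetical names, and it assumes contacts arrive as plain dictionaries.

```python
# Hypothetical fallback map mirroring the table above; the keys are assumed names.
FALLBACKS = {
    "role": "team",
    "company": "your org",
    "industry": "your industry",
    "lifecycle_stage": "exploring",
    "last_action": "checked us out",
    "product_interest": "core features",
    "region": "your region",
}


def resolve_fields(contact: dict) -> dict:
    """Return all seven fields, using the fallback for blank or missing values."""
    resolved = {}
    for field, fallback in FALLBACKS.items():
        value = contact.get(field)
        # None, empty strings, and whitespace-only strings all count as missing.
        if isinstance(value, str) and value.strip():
            resolved[field] = value.strip()
        else:
            resolved[field] = fallback
    return resolved


# Example: company is whitespace and industry is missing, so both fall back.
contact = {"role": "RevOps", "company": "  ", "industry": None}
fields = resolve_fields(contact)
```

Because every key in the map always resolves to something, a template that reads from `fields` can't render an empty token.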

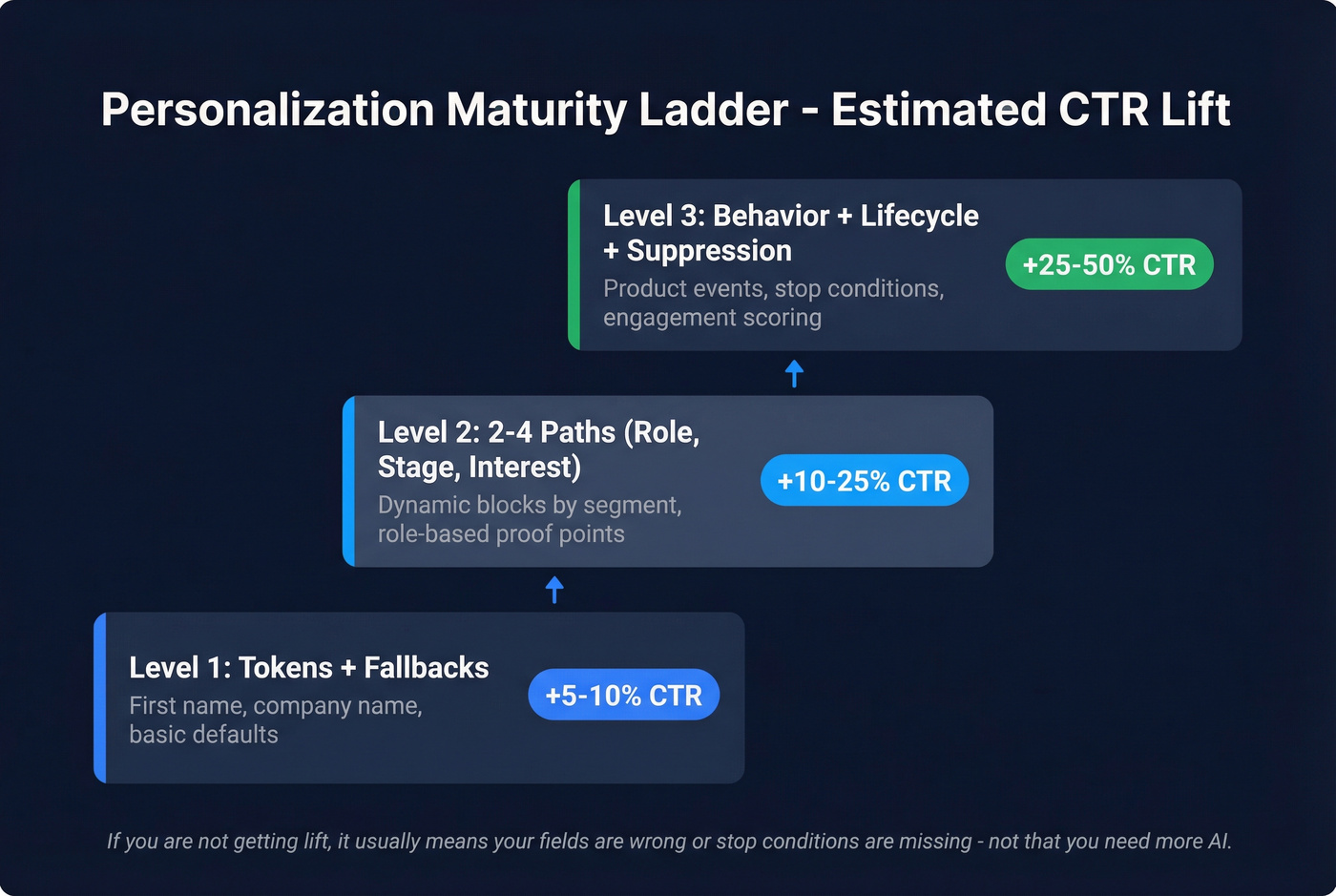

Personalization maturity ladder (CTR lift estimates)

These are estimates based on typical program lifts I've seen when teams move from "token swaps" to real segmentation:

- Level 1: tokens + fallbacks -> +5-10% CTR (estimate)

- Level 2: 2-4 paths (role/stage/interest) -> +10-25% CTR (estimate)

- Level 3: behavior + lifecycle + suppression/pauses -> +25-50% CTR (estimate)

If you're not getting lift, it almost never means you need "more AI." It means your fields are wrong or your stop conditions are missing.

Where the data comes from (and what's risky)

Low-risk (best):

- Forms: role, industry, use case, region.

- CRM: stage, owner, last touch, meeting booked.

- Product events: activated feature X, hit limit, invited teammate.

- Email engagement: clicked onboarding link, replied.

Medium-risk (needs hygiene):

- Website behavior: "visited pricing" is useful, but don't cite it explicitly in copy.

- Enrichment: great for firmographics and role, as long as it's fresh.

High-risk (where personalization breaks):

- Purchased lists and stale exports. Wrong role equals wrong message. And wrong message feels like spam even if the email is "personalized." (If you are buying, read Buying Business Leads first.)

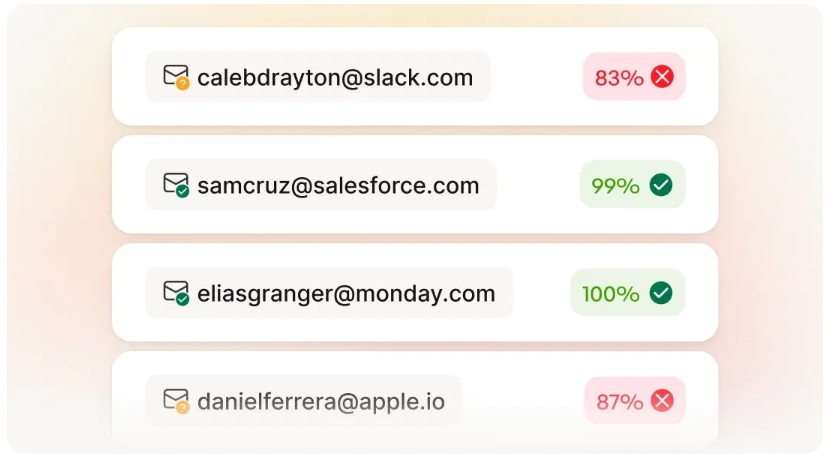

Data quality checkpoint (before you write copy)

Before you write a single subject line:

- Verify emails (hard bounces are a deliverability tax) - use a simple email verification list SOP

- Dedupe contacts

- Normalize fields (VP Sales vs V.P. Sales vs Head of Sales) - treat it like data quality, not “ops busywork”

- Staleness rules (if last_updated > 90 days, treat as unknown) - this is the core of B2B contact data decay

- Fallback coverage (every dynamic field has a default)
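The dedupe, normalize, and staleness steps above could be sketched roughly like this. Everything here is an assumption for illustration: the alias map, the record shape (`email`, `role`, `last_updated`), and the choice to treat "Head of Sales" as equivalent to "VP Sales". Email verification is deliberately left out, since that needs an external service.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical alias map; extend it with the variants you actually see in your CRM.
TITLE_ALIASES = {
    "vp sales": "VP Sales",
    "v.p. sales": "VP Sales",
    "head of sales": "VP Sales",
}
STALE_AFTER = timedelta(days=90)


def normalize_title(raw):
    """Map known title variants to one canonical value; unknown titles pass through."""
    if not raw or not raw.strip():
        return None
    cleaned = " ".join(raw.split())
    return TITLE_ALIASES.get(cleaned.lower(), cleaned)


def is_stale(last_updated, now):
    """True when the record is older than the 90-day staleness window."""
    return now - last_updated > STALE_AFTER


def dedupe(contacts):
    """Keep the most recently updated record per lowercased email address."""
    newest = {}
    for c in contacts:
        key = c["email"].strip().lower()
        if key not in newest or c["last_updated"] > newest[key]["last_updated"]:
            newest[key] = c
    return list(newest.values())


def clean(contacts, now):
    cleaned = []
    for c in dedupe(contacts):
        role = normalize_title(c.get("role"))
        if is_stale(c["last_updated"], now):
            role = None  # stale role is treated as unknown, so fallback copy is used
        cleaned.append({**c, "role": role})
    return cleaned


now = datetime(2026, 1, 15, tzinfo=timezone.utc)
contacts = [
    {"email": "Ann@Example.com", "role": "V.P. Sales", "last_updated": now - timedelta(days=10)},
    {"email": "ann@example.com", "role": "Head of Sales", "last_updated": now - timedelta(days=200)},
    {"email": "bob@example.com", "role": "CFO", "last_updated": now - timedelta(days=120)},
]
result = clean(contacts, now)
```

In this example the two Ann records collapse into the fresher one with a canonical title, and Bob's 120-day-old role is blanked so the sequence falls back to generic copy instead of guessing.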

I've watched teams spend two weeks rewriting a sequence while their bounce rate quietly climbed past 6%. The fix wasn't "better copy." It was removing bad addresses, re-enriching roles, and stopping sends to records that hadn't been updated since last quarter.

Prospeo - "The B2B data platform built for accuracy" - is the cleanest way we've used to do that at scale: 300M+ professional profiles, 143M+ verified emails, 125M+ verified mobile numbers, and 98% email accuracy on a 7-day refresh cycle (the industry average is 6 weeks). If your drip logic depends on role, industry, and company size being right, that freshness matters more than any fancy dynamic block.

How to build personalized drip campaigns with IF/THEN rules (without a logic maze)

If you can't explain the rule in one sentence, you're guessing.

Dynamic content is conditional blocks:

- IF tag = paid -> show premium block

- IF location = Canada -> show CAD pricing

- IF clicked promo -> show related recommendations

Aim for 2-4 meaningful paths. That's enough to feel relevant and still be QA-able, and it keeps you from shipping a sequence where half the variants never got reviewed because nobody could remember which segment triggered what. (If you want a few proven branching patterns, see conditional sequences.)

Also: don't do creepy personalization. Don't write "I saw you visited our pricing page at 11:03pm." Write "If you're comparing options right now..."

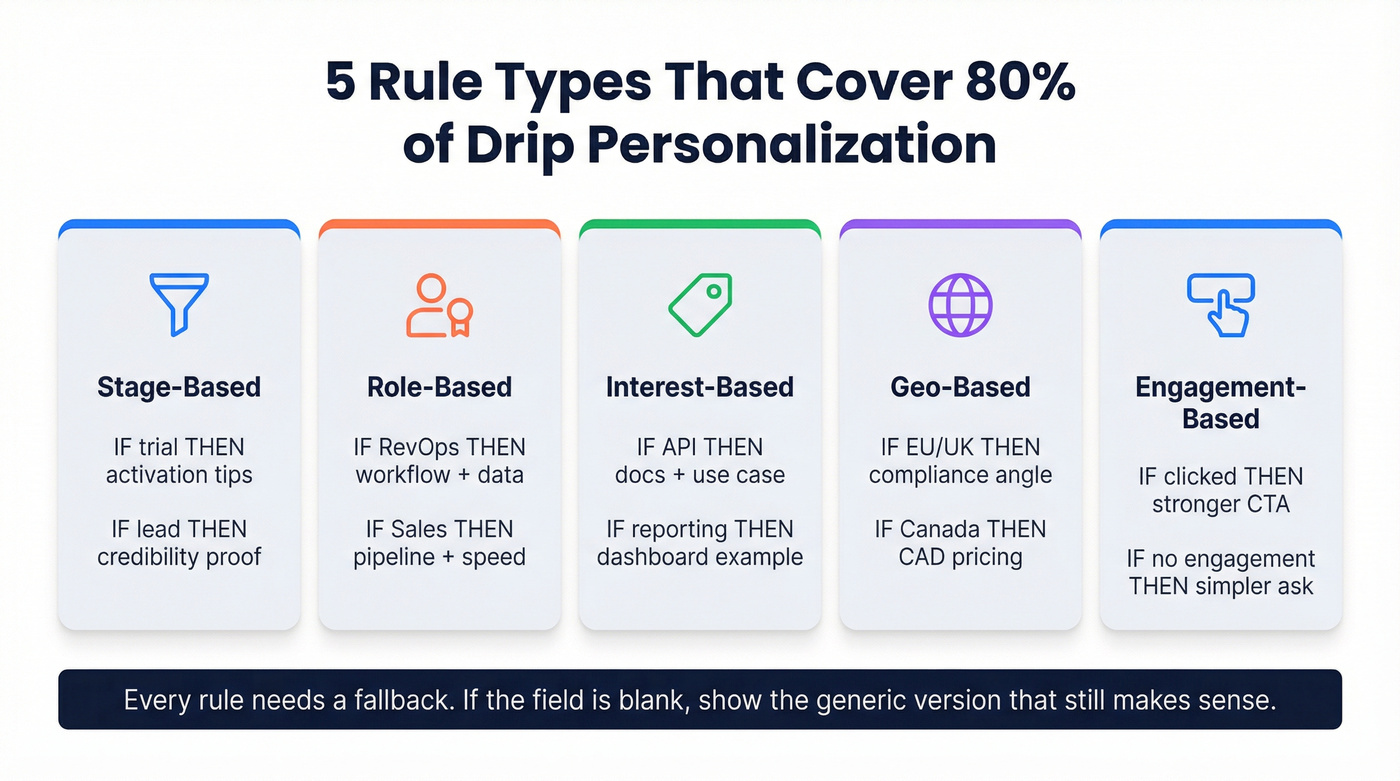

The 5 rule types that cover 80% of personalization

- Stage-based: trial -> activation; lead -> credibility

- Role-based: RevOps -> workflow + data; Sales -> pipeline + speed

- Interest-based: API -> docs + use case; reporting -> dashboard example

- Geo-based: EU/UK -> compliance; Canada -> CAD pricing

- Engagement-based: clicked -> stronger CTA; no engagement -> simpler ask
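To keep rules explainable in one sentence, it can help to store them as an ordered list where the first match wins and a default always exists. The sketch below is an assumption-heavy illustration: the block names, field names, and the three rules chosen are hypothetical, picked from the rule types above to stay inside the 2-4 path budget.

```python
# Ordered (rule name, predicate, content block) tuples; the first match wins.
# Three paths plus a default keeps QA manageable.
RULES = [
    ("stage", lambda c: c.get("lifecycle_stage") == "trial", "activation_block"),
    ("role", lambda c: c.get("role") == "RevOps", "workflow_and_data_block"),
    ("geo", lambda c: c.get("region") in {"EU", "UK"}, "compliance_block"),
]
DEFAULT_BLOCK = "generic_block"


def pick_block(contact: dict) -> str:
    """Return the first matching block, or the generic block if nothing matches."""
    for _name, matches, block in RULES:
        if matches(contact):
            return block
    return DEFAULT_BLOCK


# A trial user who is also in RevOps gets the stage block, because stage is listed first.
chosen = pick_block({"lifecycle_stage": "trial", "role": "RevOps"})
```

Putting the priority in the list order means there is exactly one answer to "which variant did this person get," which is most of what QA needs.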

Fallback block template (copy + logic)

Logic

- IF field exists -> show personalized block

- ELSE -> show generic block that still makes sense

Copy

- Personalized: "Teams like {{company}} usually start with {{product_interest}} because it removes friction for {{role}}."

- Fallback: "Most teams start with one quick win that removes friction for the team running the process."
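Here is a small sketch of that all-or-nothing block logic. It assumes Python's `str.format` placeholders stand in for the `{{token}}` syntax your ESP uses, and `render_block` is a hypothetical helper name; the copy is taken from the template above.

```python
PERSONALIZED = (
    "Teams like {company} usually start with {product_interest} "
    "because it removes friction for {role}."
)
FALLBACK = (
    "Most teams start with one quick win that removes friction "
    "for the team running the process."
)
REQUIRED = ("company", "product_interest", "role")


def render_block(contact: dict) -> str:
    """Use the personalized sentence only when every token it needs is present."""
    values = {key: (contact.get(key) or "").strip() for key in REQUIRED}
    if all(values.values()):
        return PERSONALIZED.format(**values)
    # One missing token is enough to switch to the generic sentence.
    return FALLBACK


full = render_block({"company": "Acme Inc.", "product_interest": "reporting", "role": "RevOps"})
partial = render_block({"company": "Acme Inc."})
```

Switching the whole sentence, rather than patching individual tokens, avoids half-personalized lines like "Teams like Acme Inc. usually start with because it removes friction for ."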

The "standalone email" rule (steals wins from the best programs)

Forwarding happens. Skipping happens. People open Email 2 first.

Standalone test: if Email 2 is forwarded without Email 1, does it still make sense and have one clear CTA?

Quick checklist:

- Context line: "You're getting this because..." (one sentence)

- Why now: deadline, stage, or next step

- Single CTA: one action, not three links (tighten this with a reply-first sales CTA approach)

- Opt-out visible: unsubscribe link + one-click headers already set

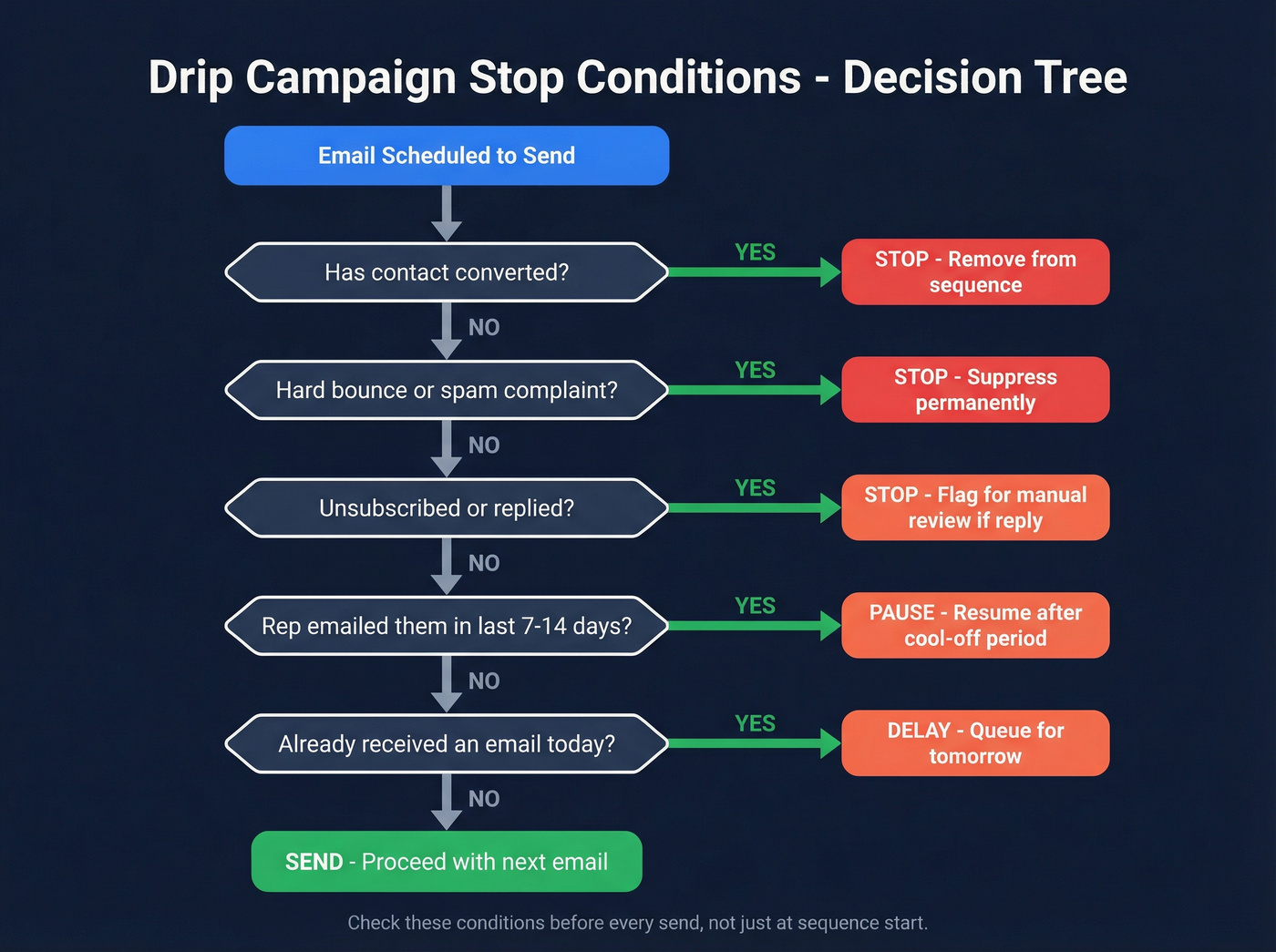

Frequency caps + stop conditions (your safety rails)

Defaults that keep you out of trouble:

- Frequency cap: max 1 email/day for opt-in marketing; slower for B2B.

- Stop on conversion: purchase, booked meeting, trial activated, replied.

- Stop on negative signals: unsubscribe, spam complaint, hard bounce.

- Manual override pause: if a rep emails them, pause the drip for 7-14 days.

One of the ugliest scenarios I've seen: a prospect replies "Yes, let's talk next week," books a meeting, and still gets two automated follow-ups because the calendar event didn't flip the right field. That isn't "automation." It's self-inflicted damage. Fix your stop conditions before you scale volume.
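A pre-send gate is one way to make those safety rails hard to skip. This is a sketch under stated assumptions: the event names, the `last_rep_email_at` and `last_sent_at` fields, and the choice of 7 days (the low end of the 7-14 day pause) are all hypothetical.

```python
from datetime import datetime, timedelta

# Assumed event names covering the conversion and negative signals listed above.
STOP_EVENTS = {
    "purchase", "meeting_booked", "trial_activated", "replied",
    "unsubscribe", "spam_complaint", "hard_bounce",
}
FREQUENCY_CAP = timedelta(days=1)  # max 1 email/day
REP_PAUSE = timedelta(days=7)      # low end of the 7-14 day manual override pause


def should_send(contact: dict, now: datetime):
    """Return (allowed, reason). Checks run from most to least severe."""
    if STOP_EVENTS & set(contact.get("events", [])):
        return False, "stop condition"
    last_rep = contact.get("last_rep_email_at")
    if last_rep is not None and now - last_rep < REP_PAUSE:
        return False, "rep pause"
    last_sent = contact.get("last_sent_at")
    if last_sent is not None and now - last_sent < FREQUENCY_CAP:
        return False, "frequency cap"
    return True, "ok"


now = datetime(2026, 1, 15, 9, 0)
booked = should_send({"events": ["meeting_booked"]}, now)
```

Running this check at send time, not at enrollment time, is what catches the "booked a meeting an hour ago" case.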

Copy-ready drip templates (with timing + stop conditions)

Format note: each sequence has a compact setup line, then short emails you can paste and adapt.

Welcome / onboarding (3 emails, 4 days)

Setup: Trigger = signup | Timing = now / +2d / +4d | Tokens = {{first_name}}, {{role}}, {{product_interest}} | Stops = activation, reply, unsubscribe

Email 1 (Now) - Subject: "Quick welcome + one question"

- "Hey {{first_name}}, welcome."

- "What are you trying to do right now: A) {{interest_option_1}} B) {{interest_option_2}} C) something else?"

- "Reply A/B/C and I'll send the right 2-minute resource."

IF/THEN (role block)

- Sales: "I'll include a week-one pipeline playbook."

- Else: "I'll include a setup checklist."

Email 2 (+2d) - Subject: "The 10-minute setup most people skip"

- "Do this first: {{setup_step_1}} -> {{setup_step_2}}."

- "If you want, reply with your goal and I'll sanity-check the setup."

Email 3 (+4d) - Subject: "Pick the next step (fast)"

- "Best next step for {{product_interest}}:"

- "Option 1: {{next_step_1}} / Option 2: {{next_step_2}}."

- "Reply 1 or 2."

Why it works

- It earns a reply early (best signal you can get).

- It keeps the CTA simple: one choice, not a menu.

Product launch (4 emails: -7 / -3 / 0 / +1)

Setup: Trigger = launch list signup | Timing = -7d / -3d / launch day / +1d | Tokens = {{first_name}}, {{primary_benefit}} | Stops = purchased, requested demo, unsubscribed

Email 1 (-7) - Subject: "A heads-up: we're shipping {{primary_benefit}}"

- "{{first_name}}, quick teaser: next week we're launching {{primary_benefit}}."

- "If you want, reply with what you're trying to improve - I'll send the most relevant use case."

Email 2 (-3) - Subject: "Early look (2-minute preview)"

- "Here's the preview: {{preview_link}}."

- "If you're evaluating tools this month, this is the part that saves time: {{one_line_value}}."

IF/THEN (engaged vs not engaged)

- IF clicked preview: "Want the setup checklist so you can use it on day one?"

- ELSE: "If you're busy, here's the one-sentence summary: {{one_sentence_summary}}."

Email 3 (0) - Subject: "It's live: {{primary_benefit}}"

- "It's live. Here's what changed: {{bullet_1}}, {{bullet_2}}."

- "CTA: {{launch_CTA}}."

Email 4 (+1) - Subject: "Last call for launch pricing / bonus"

- "Quick reminder: {{deadline}}."

- "CTA: {{launch_CTA}}."

Why it works

- It builds intent before the ask.

- It uses engagement to avoid sending hype to people who didn't care.

Trial conversion / evaluation (4 emails)

Setup: Trigger = trial started or requested pricing | Timing = 0 / +2d / +5d / +7d | Tokens = {{first_name}}, {{company}}, {{trial_end_date}} | Stops = upgraded, booked demo, replied

Email 1 (0) - Subject: "Your evaluation plan (2 steps)"

- "If you only do two things: {{key_action_1}} and {{key_action_2}}."

- "Reply with your goal and I'll tell you the fastest path."

Email 2 (+2d) - Subject: "Example from a team like {{company}}"

- "Before/after: {{metric_before}} -> {{metric_after}}."

- "Want the checklist? Reply 'checklist'."

Email 3 (+5d) - Subject: "The gotcha that kills most trials"

- "Gotcha: {{common_failure}}."

- "Fix: {{simple_fix}}."

- "If you want, send a screenshot and I'll point out one change."

Email 4 (+7d) - Subject: "Trial ends {{trial_end_date}} - keep momentum?"

- "If it's working, lock in the workflow so it doesn't drift."

- "CTA: Upgrade or book 15 minutes."

Why it works

- It gives a plan (people hate open-ended trials).

- It uses proof without turning into a case-study essay.

Re-engagement / win-back (3 emails)

Setup: Trigger = inactive 60-90 days | Timing = 0 / +4d / +10d | Tokens = {{first_name}}, {{product_interest}} | Stops = click, reply, unsubscribe

Email 1 (0) - Subject: "Still working on {{outcome}}?"

- "If you're still trying to get {{outcome}}, here's a 3-step checklist."

- "Want it?"

Email 2 (+4d) - Subject: "Pick your path (A or B)"

- "A) I'm trying to {{goal_A}}"

- "B) I'm trying to {{goal_B}}"

- "Reply A or B."

Email 3 (+10d) - Subject: "Should I close your file?"

- "Want me to stop sending these?"

- "Reply 'stop' and I'll take you off this sequence."

Why it works

- It gives control back (reduces complaints).

- It turns inactive into a simple choice.

Post-demo follow-up (B2B-safe, not annoying)

Setup: Trigger = meeting held | Timing = +2h / +3d / +10d | Tokens = {{first_name}}, {{use_case}}, {{next_step_date}} | Stops = next meeting booked, opp created, replied, manual override pause

Email 1 (+2h) - Subject: "Notes + next step"

- "Good talking today - goal: {{use_case}}."

- "Next step: {{next_step}} on {{next_step_date}}."

- "If I missed anything, reply and I'll fix it."

Email 2 (+3d) - Subject: "One example for {{use_case}}"

- "Here's a quick example of how teams handle {{use_case}}."

- "Want a version tailored to your workflow?"

Email 3 (+10d) - Subject: "Still a priority this month?"

- "Is {{use_case}} still a priority this month?"

- "Reply yes/no."

Why it works

- Every email stands alone (context + one CTA).

- It respects sales activity with a manual pause.

Pattern library you can steal in 10 minutes

If you want more sequences without building a logic maze, use these proven shapes:

- Cart abandonment: Subject "Still want your cart?" -> remind + one objection answer + checkout link

- Upsell: Subject "One add-on that makes {{product}} better" -> benefit + social proof + upgrade CTA

- Educational post-sale: Subject "Day 3: the shortcut" -> one lesson + one action + help CTA

- Post-purchase kickoff: Subject "Your first win in 15 minutes" -> setup steps + success metric + support link

- Launch reminder: Subject "Last day for {{bonus}}" -> deadline + one benefit + CTA

Use these as patterns, then plug in your tokens and stop conditions.

Skip this if you're still arguing internally about whether you should send 3 emails or 7.

Get the first three right, measure, then add.

Cadence rules by scenario (B2B vs ecommerce vs cold outreach)

Cadence is where good programs break. Too fast feels spammy. Too slow loses context.

| Scenario | Typical spacing | Typical length | Notes |
|---|---|---|---|
| Ecommerce flows | 1+ day | 3-6 emails | Fast feedback loop |
| B2B nurture | 4-7 days | 4-6 weeks | Matches longer cycles |
| Cold outreach (rules of thumb) | 3 days, then 6-7 days | 3 emails | Then pause 2-3 months |

B2B nurture: 4-6 weeks with 4-7 days between touches is the sweet spot for most teams.

Ecommerce / opt-in marketing: at least a day between emails after the immediate welcome message. If you're sending daily for a week, you need a strong preference center and a strong reason.

Cold outreach (rules of thumb):

- Keep volume <= 100/day per mailbox to protect reputation. (If you're running outbound, match this to email pacing and sending limits.)

- Keep it to 3 emails total.

- Spacing: 3 days after the first email, then 6-7 days after the follow-up.

- Then stop and pause 2-3 months before you try again.
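The cold outreach rules of thumb above translate into simple date and volume math. The sketch below assumes a 7-day second gap (the upper end of 6-7) and approximates the low end of the 2-3 month pause as 60 days; the function names are hypothetical.

```python
import math
from datetime import date, timedelta

MAX_PER_MAILBOX_PER_DAY = 100
GAPS_DAYS = [3, 7]  # gap after email 1, then gap after email 2
PAUSE_DAYS = 60     # assumed approximation of the 2-month minimum pause


def touch_dates(first_send: date):
    """Send dates for the 3-email sequence."""
    dates = [first_send]
    for gap in GAPS_DAYS:
        dates.append(dates[-1] + timedelta(days=gap))
    return dates


def earliest_retry(first_send: date) -> date:
    """Earliest date to contact the same person again after the last touch."""
    return touch_dates(first_send)[-1] + timedelta(days=PAUSE_DAYS)


def mailboxes_needed(daily_sends: int) -> int:
    """Mailboxes required to stay at or under the per-mailbox daily cap."""
    return math.ceil(daily_sends / MAX_PER_MAILBOX_PER_DAY)


schedule = touch_dates(date(2026, 1, 5))
```

Starting on January 5, 2026, the touches land on January 5, 8, and 15, and the same contact isn't eligible again until mid-March.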

Real talk: cold "drip campaigns" aren't the same as opt-in drips. You're borrowing the structure, but the deliverability and compliance bar's higher because recipients didn't ask for it.

Deliverability checklist for personalized drip campaigns (2026)

If deliverability's shaky, personalization just means you're sending more variants to spam.

Litmus found 70% of emails have at least one spam-related issue. That's an everyone problem.

The checklist (do this before scaling)

Authentication (required)

- SPF + DKIM + DMARC for bulk sending. (If you want the technical setup, see SPF DKIM & DMARC.)

- Yahoo's bar is clear: bulk senders need SPF and DKIM and must publish DMARC (at least p=none). DMARC must pass.

Unsubscribe (required)

- Support one-click unsubscribe via headers.

- Include a visible unsubscribe link in the email body.

- Honor opt-outs within 2 days (Yahoo bulk-sender requirement).

Complaint rate thresholds

- Keep spam complaints < 0.3% (Yahoo requirement).

- Gmail's recommended target is < 0.10%. Run your program against that if you want stability. (More limits + remediation steps: spam rate threshold.)

Bulk sender definition

- If you send roughly 5,000 or more messages a day to Gmail recipients, Google treats you as a bulk sender. Yahoo doesn't publish a hard number, so assume the same bar applies. Act like it.

Header implementation

- Add List-Unsubscribe (mailto and/or URL).

- Add List-Unsubscribe-Post: List-Unsubscribe=One-Click for RFC 8058 POST-based one-click unsubscribe.
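If you build messages yourself rather than letting an ESP add these headers, the two lines above could look like this with Python's standard `email` library. This is a sketch: the addresses, the unsubscribe URL, and the `add_unsubscribe_headers` helper are placeholders, and most ESPs set these headers for you. Note that RFC 8058 one-click expects an HTTPS URL in List-Unsubscribe, so a mailto on its own won't qualify.

```python
from email.message import EmailMessage


def add_unsubscribe_headers(msg: EmailMessage, mailto: str, https_url: str) -> EmailMessage:
    # List-Unsubscribe takes angle-bracketed URIs, comma-separated.
    msg["List-Unsubscribe"] = f"<mailto:{mailto}>, <{https_url}>"
    # This header tells the mailbox provider it may POST to the HTTPS URL (RFC 8058).
    msg["List-Unsubscribe-Post"] = "List-Unsubscribe=One-Click"
    return msg


msg = EmailMessage()
msg["From"] = "news@example.com"
msg["To"] = "reader@example.org"
msg["Subject"] = "Quick welcome + one question"
msg.set_content("Hey there, welcome.\n\nUnsubscribe: https://example.com/unsubscribe")

# The token in the URL is a placeholder for an opaque per-recipient identifier.
add_unsubscribe_headers(
    msg,
    mailto="unsubscribe@example.com",
    https_url="https://example.com/unsubscribe?token=OPAQUE_TOKEN",
)
```

The endpoint behind that URL has to accept a POST and unsubscribe the recipient without a login or confirmation page, otherwise the header is promising something the server doesn't deliver.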

Practical implementation guide: Postmark's List-Unsubscribe header guide (https://postmarkapp.com/blog/list-unsubscribe-header). Mailbox-provider rules: Yahoo sender best practices (https://senders.yahooinc.com/best-practices/).

Hygiene rules that keep you out of trouble

- Sunset policy: if someone hasn't opened/clicked in 6 months, stop mailing them (or move them to low-frequency).

- Remove hard bounces immediately.

- Don't keep retrying "unknown" addresses just because your ESP lets you.

- Prefer double opt-in for high-volume lists if complaints creep up.
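The sunset and bounce rules above are easy to encode as a status check that runs before each send. This sketch approximates "6 months" as 180 days and assumes hypothetical fields (`hard_bounced`, `last_engaged_at`, `subscribed_at`); new subscribers with no engagement yet are measured from their signup date so they aren't sunset on day one.

```python
from datetime import datetime, timedelta

SUNSET_AFTER = timedelta(days=180)  # "6 months", approximated


def mailing_status(contact: dict, now: datetime) -> str:
    """Return 'remove', 'sunset', or 'active' for a contact."""
    if contact.get("hard_bounced"):
        return "remove"  # hard bounces come off the list immediately
    # Fall back to signup date when there is no open or click on record.
    reference = contact.get("last_engaged_at") or contact.get("subscribed_at")
    if reference is None or now - reference > SUNSET_AFTER:
        return "sunset"  # stop mailing, or move to a low-frequency segment
    return "active"


now = datetime(2026, 6, 1)
status = mailing_status({"last_engaged_at": datetime(2025, 10, 1)}, now)
```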

Fastest deliverability win: cut your audience before you improve your copy. Smaller, cleaner lists beat big, stale lists almost every time.

KPI targets, benchmarks, and what to test first

Benchmarks are guardrails, not grades. List quality and offer matter more than industry averages. And opens are directional because of MPP.

Benchmark table (campaigns vs automated flows)

| Metric | Klaviyo automated flows | Klaviyo top 10% flows | MailerLite campaign medians (2026) |
|---|---|---|---|
| Open rate | 48.57% | 65.74% | 43.46% |
| Click rate | 4.67% | 12.22% | 2.09% |
| CTOR | - | - | 6.81% |
| Order rate | 1.42% | 4.93% | - |
| Unsub rate | 0.81% | 0.04% | 0.22% |

These come from different datasets (Klaviyo flows vs MailerLite campaigns). Use them as directional guardrails, not direct comparisons.

KPI definitions (with quick math)

- CTR (click-through rate) = clicks / delivered

- CTOR (click-to-open rate) = clicks / opens (deeper breakdown: email click through rate)

- Conversion rate = conversions / delivered (or / clicks, if you're measuring post-click)

- Complaint rate = spam complaints / delivered

Example: 10,000 delivered, 400 clicks, 40 conversions, 12 complaints

- CTR = 400/10,000 = 4%

- Conversion rate (delivered) = 40/10,000 = 0.4%

- Complaint rate = 12/10,000 = 0.12% (under Yahoo's 0.3% limit, but above Gmail's recommended 0.10% target)
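The same arithmetic as a small helper, in case you want to run it against your own exports. The function and key names are hypothetical; the two thresholds are the 0.3% limit and the 0.10% Gmail target from the deliverability checklist.

```python
YAHOO_COMPLAINT_LIMIT = 0.003   # 0.3%
GMAIL_COMPLAINT_TARGET = 0.001  # 0.10%


def rate(numerator: float, denominator: float) -> float:
    """Safe division: returns 0.0 when nothing was delivered, clicked, or opened."""
    return 0.0 if denominator == 0 else numerator / denominator


def kpis(delivered, clicks, conversions, complaints, opens=None):
    result = {
        "ctr": rate(clicks, delivered),
        "conversion_rate": rate(conversions, delivered),
        "post_click_conversion_rate": rate(conversions, clicks),
        "complaint_rate": rate(complaints, delivered),
    }
    if opens is not None:
        # CTOR is only as trustworthy as the open count behind it.
        result["ctor"] = rate(clicks, opens)
    result["under_yahoo_limit"] = result["complaint_rate"] < YAHOO_COMPLAINT_LIMIT
    result["under_gmail_target"] = result["complaint_rate"] < GMAIL_COMPLAINT_TARGET
    return result


# The worked example: 10,000 delivered, 400 clicks, 40 conversions, 12 complaints.
example = kpis(delivered=10_000, clicks=400, conversions=40, complaints=12)
```

For the worked example this gives a 4% CTR, a 0.4% conversion rate on delivered, and a 0.12% complaint rate, which clears the 0.3% limit but not the 0.10% target.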

The personalization testing order (highest impact first)

- Segment definition (who gets it) (build this like a real system: how to segment email list)

- Offer + CTA (what you want them to do)

- Timing + cadence (when you ask)

- Subject line (how you earn the open)

Opinion: if you're rewriting subject lines before you've fixed segmentation, you're polishing the wrong part of the machine.

Common mistakes (and how to fix them fast)

Fake personalization (the "this is BS" problem). Fix: stop guessing. Clean the fields, keep rules simple, and use fallbacks so the email never breaks.

Over-personalization is a conversion killer. Fix: personalize the angle, not the stalker details. "For RevOps teams..." beats "I saw you clicked pricing."

No fallback = broken emails. Fix: default every field. IF industry blank -> "teams like yours."

No stop conditions = automated nagging. Fix: stop on conversion events and replies. Exit immediately on meeting_booked = true.

Too many variants = impossible QA. Fix: cap at 2-4 paths. If you need 12, your positioning's unclear.

Post-call drips that annoy everyone. Fix: run the standalone test, keep one CTA, and pause automation when sales touches the account.

FAQ

What's the difference between a drip campaign and a nurture sequence?

A drip campaign is a scheduled automated series (like Day 0, Day 2, Day 4) designed to move someone through a specific step. A nurture sequence is broader and more signal-driven, branching based on intent and behavior over time. Drips are easier to QA; nurture is better for longer buying cycles.

What personalization should I do if I only have name + email?

Personalize the context and choice, not the profile: reference the signup source, ask a one-question "pick your path," and use engagement-based rules (clicked vs didn't click). Always include a fallback greeting (like "Hi there") when first name's missing or messy.

What deliverability setup is required for automated drips in 2026?

You need SPF, DKIM, and DMARC for bulk sending, plus one-click unsubscribe via List-Unsubscribe headers and a visible unsubscribe link in the email body. Keep spam complaints under 0.3% (and aim under 0.10% for Gmail), and process opt-outs within 2 days.

What's a good free tool to verify emails before I run a sequence?

For most teams, start with Prospeo's free tier (75 emails + 100 extension credits/month) because it verifies in real time with 98% email accuracy and supports enrichment workflows. If you only need very light checks, some ESPs include basic validation, but it won't fix stale roles/companies that break targeting.

What's the difference between CTR and CTOR - and which should I track?

CTR measures clicks against delivered emails, while CTOR measures clicks against opens. Because Apple MPP inflates opens, CTOR's denominator is unreliable: the ratio can drop or drift even when nothing about the email changed. Track CTR plus downstream conversions/replies as your primary score, and use opens only to spot big changes.

Summary: the boring stuff that makes personalized drip campaigns work

If you want personalized drip campaigns that convert (and don't torch your sender reputation), keep it simple: collect a small set of reliable fields, write rules you can explain in one sentence, add fallbacks everywhere, and enforce stop conditions. Then measure clicks, replies, and conversions, not just opens, and scale only after hygiene and deliverability are solid.