Email Click Through Rate in 2026: Definitions, Benchmarks, and Fixes

Your dashboard says "CTR is up 40%." Revenue says "no, it isn't."

In 2026, email click through rate is still one of the best email KPIs you can track. But only if everyone on your team means the same thing by "CTR" and you stop letting security scanners and privacy features bully your reporting.

Here's the hook: you can clean this up in a week, and you'll stop "optimizing" ghosts.

What you need (quick version)

- Definition: Unique CTR = unique clickers ÷ delivered (the version you can compare week to week)

- Weekly KPIs: Delivered, Unique CTR, Conversion rate

- Benchmarks: 2-5% CTR is a solid "good" range for many programs

- Sanity check: treat clicks that land within 1-5 seconds of delivery as suspicious, then validate with sessions/conversions before excluding

Email CTR in 2026 (and why your "lift" might be fake)

A team ships a new newsletter layout on Monday. Tuesday's report says CTR is up 40%. Everyone celebrates.

Then pipeline stays flat, purchases don't budge, and the landing page analytics look normal. I've watched this happen in real teams: the "lift" was mostly security scanners clicking links to check for malware, and the A/B test winner was basically chosen by a bot.

Clicks are usually more reliable than opens now, but they aren't pure. Corporate gateways (and endpoint protection) will click through email links before a human ever sees the message, which inflates CTR, breaks experiments, and quietly poisons "engaged" segments over time because you're rewarding automation instead of intent.

Apple Mail Privacy Protection (MPP) mostly wrecks opens by preloading pixels through proxies. That doesn't directly create clicks, but it distorts anything that uses opens as a denominator, which is why CTOR can look like it's falling apart even when your email is fine.

Look, if you're selling low-consideration products (or you're in ecommerce), you can win email with boring fundamentals. Relevance, one clear CTA, clean tracking, and clean lists beat "clever" copy tricks almost every week of the year.

What email click through rate actually means (standardize it)

Most teams talk about "CTR" like it's one metric. In practice, ESPs use a few different definitions, and they don't always label them clearly.

Most modern ESPs separate total vs unique and anchor engagement metrics to delivered (not sent). That's the right default, because deliverability problems should show up in deliverability metrics, not get baked into your engagement math.

The key decision is simple: do you want to measure engagement volume (total clicks) or engaged people (unique clickers)? For performance reporting, unique clickers wins because it doesn't get distorted by one person rage-clicking a button five times, and it doesn't make multi-link newsletters look "better" just because they have more places to click.

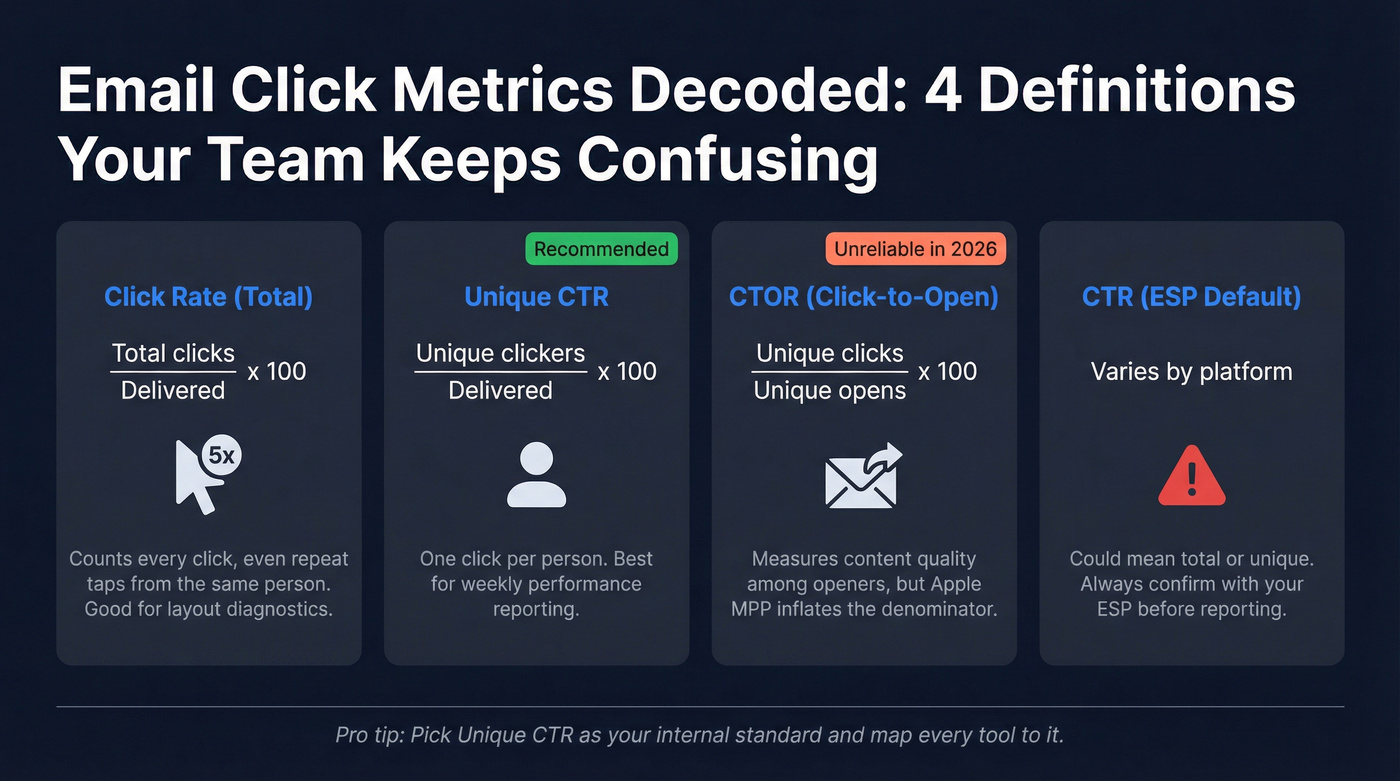

CTR vs click rate vs unique CTR vs CTOR

Use these formulas (they match how most ESPs calculate engagement):

Click rate (total clicks rate) = total clicks ÷ delivered emails × 100

Counts repeat clicks from the same person.

Unique CTR (unique click-through rate) = unique recipients who clicked ÷ delivered emails × 100

Counts each recipient once, no matter how many links they clicked.

CTOR (click-to-open rate) = unique clickers ÷ unique opens × 100

Measures how well the email converts openers into clickers.

If your ESP only shows "CTR," confirm whether it's using total clicks or unique clickers. Those can be wildly different on newsletters with multiple links, or on lifecycle emails where a small group clicks repeatedly.
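To see how far the two definitions can drift apart, here is a minimal sketch that computes both from a raw click export. The `ClickEvent` shape and its field names are assumptions for illustration; map them to whatever your ESP export actually provides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClickEvent:
    recipient_id: str  # hypothetical field name, not a real ESP schema
    url: str


def click_metrics(events: list[ClickEvent], delivered: int) -> dict[str, float]:
    """Return total click rate and unique CTR as percentages of delivered."""
    if delivered <= 0:
        raise ValueError("delivered must be positive")
    total_clicks = len(events)
    # A set keeps one entry per recipient, however many links they clicked.
    unique_clickers = len({e.recipient_id for e in events})
    return {
        "click_rate": total_clicks / delivered * 100,
        "unique_ctr": unique_clickers / delivered * 100,
    }


# Hypothetical data: recipient "a" clicks three times, recipient "b" once.
events = [
    ClickEvent("a", "/pricing"),
    ClickEvent("a", "/pricing"),
    ClickEvent("a", "/blog"),
    ClickEvent("b", "/pricing"),
]
metrics = click_metrics(events, delivered=100)
print(f"click rate {metrics['click_rate']:.1f}%, unique CTR {metrics['unique_ctr']:.1f}%")
```

Same events, same delivered count, and the total click rate comes out at double the unique CTR. That gap is the thing to confirm before comparing any two dashboards.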

Unique vs total clicks (why it changes the story)

Total clicks answers: "How much clicking happened?"

Unique CTR answers: "How many people took action?"

If you're reporting to leadership, unique CTR is harder to game and easier to compare week over week. Total clicks is still useful for diagnosing layout (for example, "people clicked the nav links a ton"), but it's not the KPI I'd hang a quarterly target on.

Sent vs delivered denominator (why teams argue about CTR)

"Sent" includes emails that bounced. "Delivered" removes bounces.

Delivered is the better denominator because CTR should measure engagement among people who actually received the email. Deliverability is its own problem with its own metrics (bounce rate, inbox placement, spam complaints), and mixing those into CTR just makes every conversation harder than it needs to be.

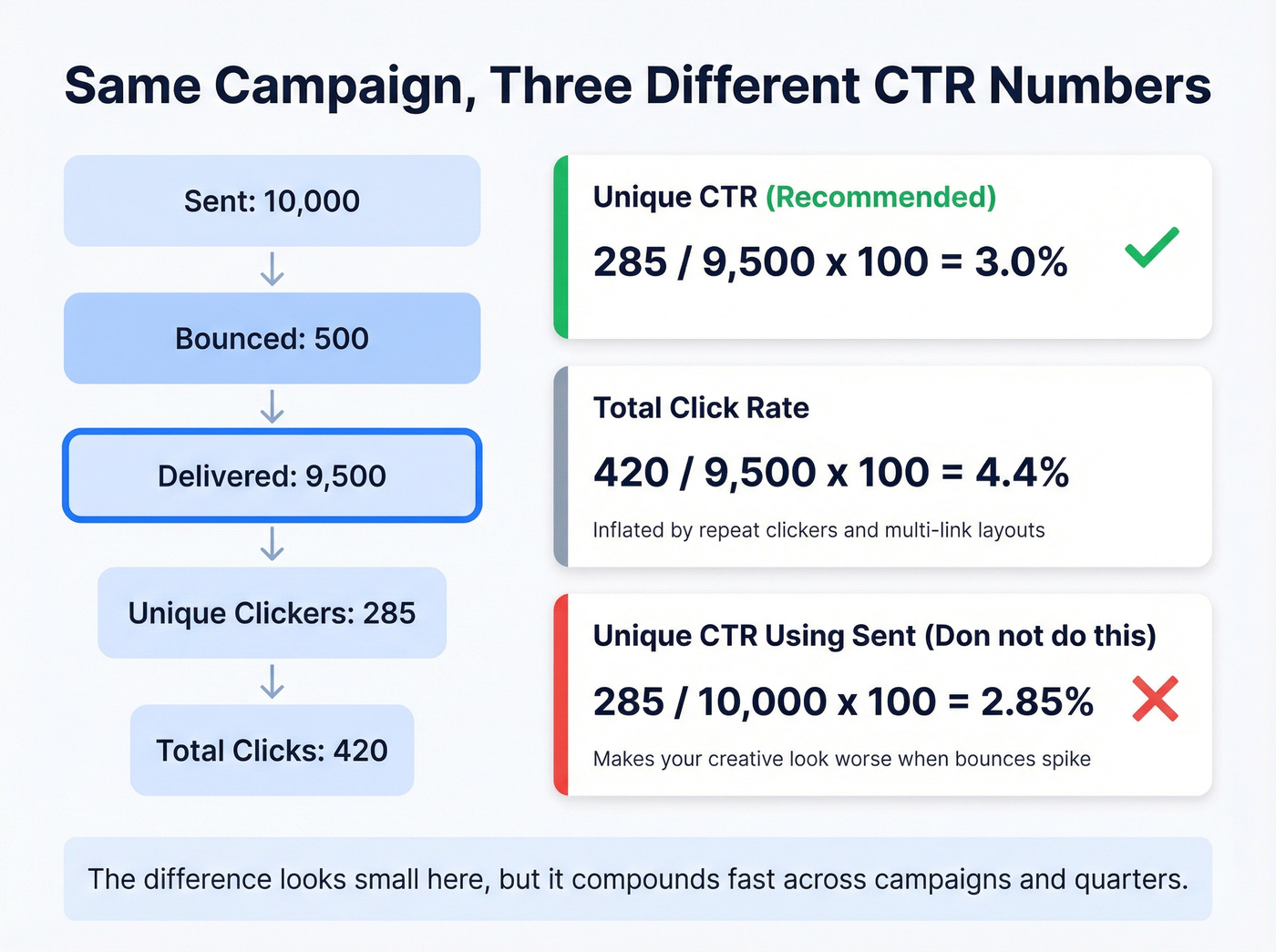

How to calculate CTR (with a worked example you can copy)

Use the simple approach: unique clickers ÷ delivered. It's the cleanest way to track performance across campaigns and ESPs.

Example campaign

- Sent: 10,000

- Bounced: 500

- Delivered: 9,500

- Unique clickers: 285

- Total clicks: 420

Unique CTR (recommended) = 285 ÷ 9,500 × 100 = 3.0%

Total click rate = 420 ÷ 9,500 × 100 = 4.4%

Now watch what happens if someone uses sent instead of delivered:

Unique CTR using sent (don't do this by default) = 285 ÷ 10,000 × 100 = 2.85%

That difference looks small here, but when bounces spike (new list import, cold outreach, old contacts), "sent-based CTR" makes your creative look worse even if the people who received the email behaved the same.

Pick one internal standard and stick to it. For most teams, that's Unique CTR = unique clickers ÷ delivered.
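If you'd rather check the arithmetic than trust it, the worked example reduces to a few lines. The first campaign uses the numbers above; the "bounce spike" campaign is a hypothetical scenario added to show how a sent-based denominator moves when recipient behavior doesn't.

```python
def rate(numerator: int, denominator: int) -> float:
    """Percentage helper: numerator / denominator * 100."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return numerator / denominator * 100


# Example campaign from the article.
sent, bounced = 10_000, 500
delivered = sent - bounced          # 9,500
unique_clickers, total_clicks = 285, 420

print(f"Unique CTR (delivered): {rate(unique_clickers, delivered):.2f}%")
print(f"Total click rate:       {rate(total_clicks, delivered):.2f}%")
print(f"Unique CTR (sent):      {rate(unique_clickers, sent):.2f}%")

# Hypothetical bounce spike: 30% of a new import bounces, but the people
# who did receive the email click at the same 3% rate as before.
spike_sent, spike_bounced = 10_000, 3_000
spike_delivered = spike_sent - spike_bounced   # 7,000
spike_clickers = 210                           # 3% of 7,000

print(f"Spike, delivered-based: {rate(spike_clickers, spike_delivered):.2f}%")
print(f"Spike, sent-based:      {rate(spike_clickers, spike_sent):.2f}%")
```

In the spike scenario the delivered-based number holds at 3% while the sent-based number drops to 2.1%, which is the "creative looks worse" effect described above.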

Your CTR benchmarks are meaningless if half your list never received the email. Prospeo's 98% email accuracy and 7-day refresh cycle mean fewer bounces, cleaner delivered counts, and click metrics that actually reflect human behavior - not bot scanners.

Fix your denominator before you fix your creative.

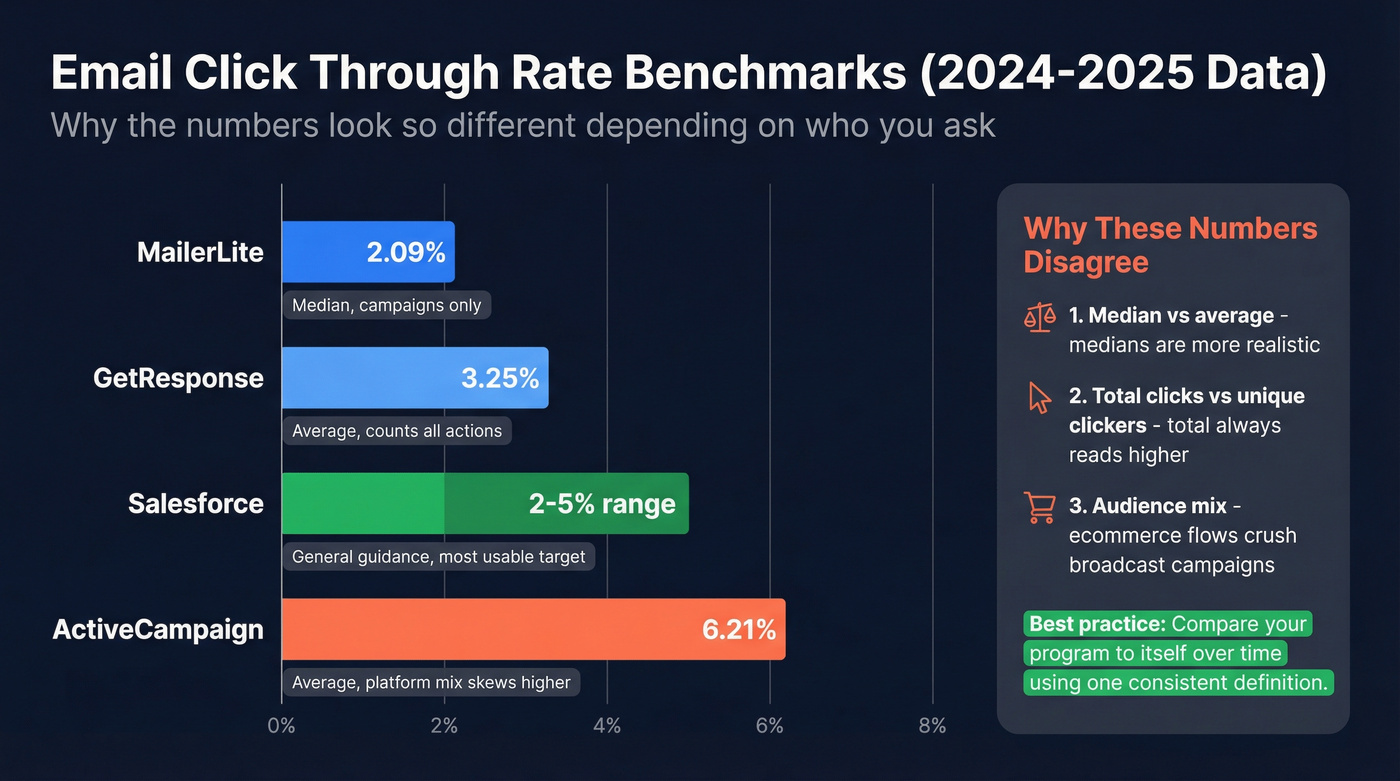

What's a "good" email CTR? Benchmarks you can actually compare

Benchmarks are messy because some sources report median (more realistic), others report average (pulled up by power senders), some count every action (inflates clicks), and audience mix matters a lot (ecommerce flows vs newsletters vs B2B promos).

Use benchmarks to sanity-check, then compare your program to itself over time using one consistent definition. Otherwise, you're comparing apples to oranges and calling it "insight."
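The median-vs-average point is easy to underestimate, so here's a small sketch with made-up numbers. The seven CTR values are hypothetical, not taken from any benchmark source; the one outlier stands in for a "power sender."

```python
from statistics import mean, median

# Hypothetical per-campaign CTRs (%): six ordinary campaigns, one power sender.
campaign_ctrs = [1.2, 1.8, 2.0, 2.1, 2.4, 2.6, 14.5]

print(f"median:  {median(campaign_ctrs):.2f}%")
print(f"average: {mean(campaign_ctrs):.2f}%")
```

One outlier is enough to pull the average well above what a typical campaign in the set achieved, which is why an "average" benchmark and a "median" benchmark shouldn't sit in the same comparison without a note.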

Benchmark table (by source + methodology notes)

| Source | Period | Metric basis | Click metric | CTOR |
|---|---|---|---|---|
| MailerLite | Dec 2024-Nov 2025 | Median, campaigns | 2.09% | 6.81% |
| ActiveCampaign | 2025 | Average, platform mix | 6.21% | - |
| GetResponse | 2023 | Average, all actions | 3.25% | 8.62% |
| Salesforce | Ongoing | Guidance | 2-5% | - |

MailerLite also reports an unsubscribe rate of 0.22% across that same period, and it rose year over year. A big driver is simple UX: Gmail made unsubscribing easier (often a one-click flow that doesn't require "reading" the email), so you'll see more people cleanly opting out instead of staying as zombie subscribers who never click again.

That's good for deliverability and long-term CTR, even if it bruises your ego when list growth slows.

ActiveCampaign's 6.21% is an average, not a median, and it's influenced by the platform's customer mix. It's still useful as directional context inside similar industries. Their platform-average click rates by industry include:

- Software: 6.67%

- E-Commerce/Retail: 5.07%

- Accounting/Financial: 4.40%

- Media/Publishing: 7.32%

- Consulting/Agency: 7.05%

GetResponse's dataset is enormous, but its methodology counts every subscriber action (including multiple clicks), so it often reads higher than unique-click reporting.

Salesforce's 2-5% guidance is the most usable target-setting range for mixed programs because it's not "best case." It's "healthy and repeatable."

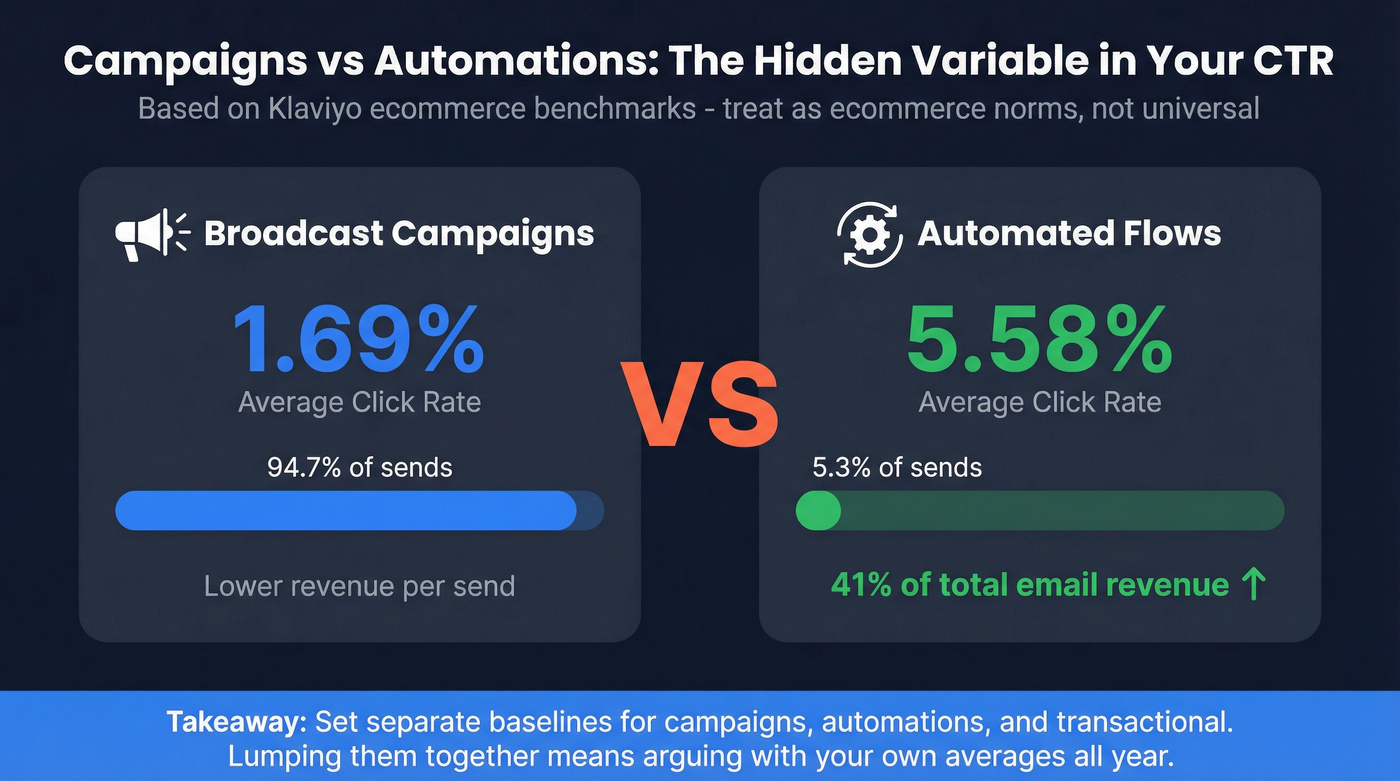

Benchmarks by email type: campaigns vs automations

Email type is the hidden variable most benchmark posts ignore.

Klaviyo's ecommerce benchmarks make this painfully obvious:

- Flows average 5.58% click rate

- Campaigns average 1.69% click rate

- Flows generate ~41% of total email revenue from 5.3% of sends

Klaviyo's numbers reflect a large ecommerce customer base, so treat them as ecommerce expectations, not universal email norms.

Practical takeaway: set separate baselines for broadcast campaigns, automations/flows, and transactional email. If you lump them together, you'll spend all year arguing with your own averages.
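A rough sketch of what "separate baselines" looks like in practice: group sends by email type before dividing. The send records below are hypothetical and the tuple layout is an assumption, not any ESP's export format.

```python
from collections import defaultdict

# Hypothetical sends: (email_type, delivered, unique_clickers)
sends = [
    ("campaign", 10_000, 170),
    ("campaign", 8_000, 136),
    ("flow", 600, 33),
    ("flow", 400, 23),
]


def unique_ctr_by_type(rows):
    """Sum delivered and clickers per email type, then compute unique CTR (%)."""
    totals = defaultdict(lambda: [0, 0])  # type -> [delivered, clickers]
    for email_type, delivered, clickers in rows:
        totals[email_type][0] += delivered
        totals[email_type][1] += clickers
    return {
        email_type: clickers / delivered * 100
        for email_type, (delivered, clickers) in totals.items()
        if delivered > 0
    }


blended = sum(r[2] for r in sends) / sum(r[1] for r in sends) * 100
print({k: round(v, 2) for k, v in unique_ctr_by_type(sends).items()})
print(f"blended: {blended:.2f}%")
```

In this made-up data the blended figure sits close to the campaign number because campaigns dominate volume, so a strong flow program barely registers. That's the averaging argument you avoid by reporting each type on its own line.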

Why your dashboards disagree (and why "CTR" isn't universal)

ESPs aren't consistent, and sometimes their own docs use overlapping terms.

Do this when your tools disagree:

- Write your internal definition (unique clickers ÷ delivered) and map each ESP's metric to it

- Turn on bot filtering if your ESP offers it, but don't assume it's perfect

- Export raw events (delivery time, click time, user agent/IP if available) for suspicious campaigns

- Don't compare CTR across ESPs unless you've confirmed they count clicks the same way

If you're migrating platforms, expect a "CTR shift" that's measurement, not performance.

Email click through rate is polluted in 2026: Apple MPP + scanner clicks (how to spot both)

One mental model helps: opens are noisy, clicks are contaminated.

Apple MPP mostly breaks opens. Security scanners mostly break clicks. Together, they can make CTOR and "engaged segments" borderline useless unless you apply filters and validate with on-site behavior.

Why open rate is a vanity metric now (and how it distorts CTOR)

Apple MPP preloads tracking pixels through proxy servers. The client can fetch the pixel even if the person never reads the message, which inflates opens, trashes geo/timestamp fidelity, and pushes CTOR down because the denominator (opens) gets artificially high.

Apple accounted for 49.29% of email opens in Jan 2025, so this isn't a corner case. It's a huge chunk of your list.

Cross-platform handling makes it worse: some ESPs exclude MPP opens only after a cutoff date, others include them by default, and some treat "click implies open" in ways that make CTOR even harder to interpret. If your CTOR fell off a cliff after switching ESPs, it might not be your content. It might be your math.

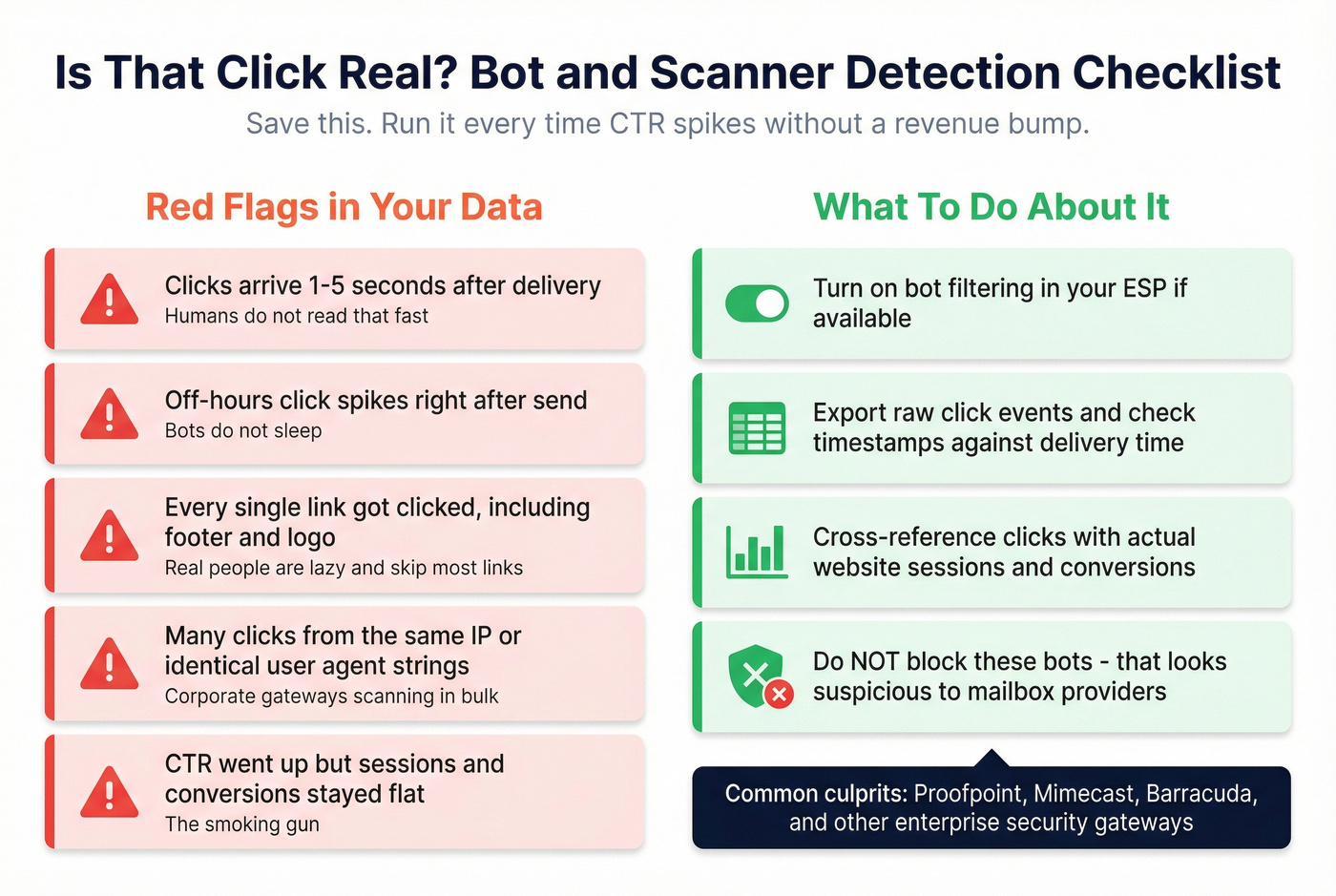

Bot/security scanner clicks: what they look like in reports

Bot clicks aren't "fraud." They're automated safety systems doing link scanning.

Practitioners in Validity's Sender Certification community have described the same pattern over and over: clicks jump, then disappear the moment you line up click timestamps with web sessions. Endpoint protection hits links immediately, often before the email is even visible to the recipient.

These clicks often come from enterprise gateways and security vendors like Proofpoint, Mimecast, and Barracuda.

Red flags checklist (save this):

- Clicks within 1-5 seconds of delivery

- Off-hours click spikes right after send

- Clicks on every link (logo, footer, unsubscribe)

- Many clicks from the same IP or same user agent

- CTR up, but sessions/conversions flat

And no, you shouldn't "fight" these bots by blocking them. That's how you end up looking suspicious to mailbox providers.

"Clicks without opens" explained (including Gmail clipping)

"Clicks without opens" freaks people out, but it's common.

Two big reasons:

- Images don't load, so the open pixel never fires. A human can still click a text link.

- Gmail clips emails over 102KB. If your tracking pixel sits at the bottom, the open won't register unless the user clicks "View entire message."

This is why you can't treat CTOR as gospel. If opens are undercounted (image blocking) or overcounted (MPP), CTOR swings wildly while real behavior stays the same.
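A quick pre-send check for the clipping case can be as simple as measuring the HTML payload. This is a sketch under two assumptions: that "102KB" means 102 × 1024 bytes of UTF-8 encoded HTML, and that a 20% safety margin is reasonable. Neither comes from Gmail documentation, so adjust both to what you observe.

```python
GMAIL_CLIP_BYTES = 102 * 1024  # assumption: "102KB" read as 102 * 1024 bytes


def clipping_report(html: str, margin: float = 0.2) -> dict:
    """Report HTML size and whether it is near or over the clipping threshold.

    `margin` is a hypothetical safety buffer (20% by default), because ESPs
    often add tracking markup after you export the template.
    """
    size = len(html.encode("utf-8"))  # bytes, not characters
    return {
        "bytes": size,
        "likely_clipped": size > GMAIL_CLIP_BYTES,
        "near_limit": size > GMAIL_CLIP_BYTES * (1 - margin),
    }


small_email = "<html><body>" + "<p>Hello</p>" * 100 + "</body></html>"
print(clipping_report(small_email))
```

Measuring encoded bytes rather than `len(html)` matters for emails with non-ASCII characters, where one character can take several bytes.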

How to clean your CTR reporting (without hurting deliverability)

You want cleaner reporting, not a war with security scanners.

The approach below mirrors guidance shared by practitioners in Validity's Sender Certification community and patterns documented in InsiderOne's bot-click writeups.

The practical filter rules (what to implement)

- Time-based exclusion: flag clicks that occur within ~1-5 seconds after delivery as suspicious. Humans don't click that fast. Caveat: validate against sessions/conversions before you permanently exclude anything because internal testers and ultra-short emails can produce legit fast clicks.

- All-links-clicked flag: treat "clicked everything (including unsubscribe/footer)" as scanner behavior.

- A/B test hygiene: remove suspicious clicks from winner selection so bots don't pick your subject line or CTA.

- Don't block or CAPTCHA scanners: it can hurt trust and deliverability.

- Keep email size ~40-80KB: reduces the chance you trigger Gmail clipping at 102KB.

- Use a branded tracking domain aligned with your sending domain: improves trust signals and reduces weird redirect/scanner behavior.

- Use HTTPS everywhere: basic, still missed.
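The first two rules (time-based exclusion and the all-links-clicked flag) can be sketched as a flagging pass over exported click events. The `Click` fields, the 5-second window, and the reason labels are assumptions for illustration. The function only flags recipients; per the caveat above, exclusion should wait until you've validated against sessions and conversions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Click:
    recipient_id: str       # hypothetical export fields
    url: str
    delivered_at: datetime
    clicked_at: datetime


FAST_CLICK_WINDOW = timedelta(seconds=5)  # upper end of the 1-5 second rule


def flag_suspicious(clicks: list[Click], links_in_email: set[str]) -> dict[str, set[str]]:
    """Map recipient_id -> reasons that recipient's clicks look automated."""
    reasons: dict[str, set[str]] = {}
    urls_by_recipient: dict[str, set[str]] = {}
    for c in clicks:
        urls_by_recipient.setdefault(c.recipient_id, set()).add(c.url)
        # Note: clock skew that puts a click before delivery is flagged too.
        if c.clicked_at - c.delivered_at <= FAST_CLICK_WINDOW:
            reasons.setdefault(c.recipient_id, set()).add("fast_click")
    for recipient_id, urls in urls_by_recipient.items():
        # Only meaningful when the email has more than one link.
        if len(links_in_email) > 1 and urls >= links_in_email:
            reasons.setdefault(recipient_id, set()).add("all_links_clicked")
    return reasons


t0 = datetime(2026, 1, 5, 9, 0, 0)
links = {"/logo", "/cta", "/unsubscribe"}
clicks = [Click("scanner", u, t0, t0 + timedelta(seconds=2)) for u in sorted(links)]
clicks.append(Click("human", "/cta", t0, t0 + timedelta(minutes=12)))
print(flag_suspicious(clicks, links))
```

For A/B test hygiene, the same output can feed winner selection: drop flagged recipients from each variant's clicker set before comparing.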

Concrete implementation example (GA4 + UTM + ESP settings)

If you want CTR you can defend in a meeting, set this up once and stop debating screenshots.

1) Lock a UTM standard (and enforce it)

- utm_source=email

- utm_medium=newsletter (or lifecycle, promo, transactional)

- utm_campaign=YYYY-MM-campaign-name

- utm_content=primary_cta / secondary_link (optional but powerful)

- utm_term=only if you've got a real taxonomy
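One way to enforce the standard is to generate links through a helper instead of typing parameters by hand. This is a minimal sketch using Python's standard `urllib.parse`; the function name, the allowed-medium set, and the lowercase-slug rule for campaign names are assumptions layered on top of the convention above.

```python
import re
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

ALLOWED_MEDIUMS = {"newsletter", "lifecycle", "promo", "transactional"}
# Assumption: YYYY-MM- prefix followed by a lowercase, hyphenated slug.
CAMPAIGN_PATTERN = re.compile(r"^\d{4}-\d{2}-[a-z0-9]+(?:-[a-z0-9]+)*$")


def add_utms(url: str, medium: str, campaign: str, content: Optional[str] = None) -> str:
    """Return `url` with standardized UTM parameters, replacing any existing ones."""
    if medium not in ALLOWED_MEDIUMS:
        raise ValueError(f"utm_medium must be one of {sorted(ALLOWED_MEDIUMS)}")
    if not CAMPAIGN_PATTERN.match(campaign):
        raise ValueError("utm_campaign must look like YYYY-MM-campaign-name")
    parts = urlsplit(url)
    # Keep non-UTM query parameters; drop stale utm_* values.
    params = [
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if not k.startswith("utm_")
    ]
    params += [("utm_source", "email"), ("utm_medium", medium), ("utm_campaign", campaign)]
    if content:
        params.append(("utm_content", content))
    return urlunsplit(parts._replace(query=urlencode(params)))


print(add_utms("https://example.com/pricing?ref=nav", "newsletter",
               "2026-01-winter-launch", "primary_cta"))
```

Rejecting off-standard values at link-build time is cheaper than cleaning up a fragmented source/medium report later.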

2) Make GA4 your "truth" for humans

In our audits, the fastest way to confirm bot clicks is matching click timestamps to GA4 sessions on the landing page:

- If clicks spike but sessions don't, it's scanners or broken tracking.

- If sessions spike but conversions don't, it's offer/landing mismatch.
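The timestamp matching can be approximated offline once you have two exports: click times from the ESP and session start times for email-tagged traffic. The sketch below assumes both are already available as Python `datetime` lists; it doesn't call any GA4 API, and the 30-second matching window is a hypothetical default to tune.

```python
from bisect import bisect_left
from datetime import datetime, timedelta


def matched_click_share(
    click_times: list[datetime],
    session_starts: list[datetime],
    window: timedelta = timedelta(seconds=30),  # hypothetical tolerance
) -> float:
    """Share of clicks followed by a session start within `window`.

    Rough by design: several clicks may match the same session, so treat
    the result as a signal, not an exact attribution.
    """
    if not click_times:
        return 0.0
    starts = sorted(session_starts)
    matched = 0
    for t in click_times:
        i = bisect_left(starts, t)  # first session starting at or after the click
        if i < len(starts) and starts[i] - t <= window:
            matched += 1
    return matched / len(click_times)


send = datetime(2026, 1, 5, 9, 0, 0)
clicks = [send, send + timedelta(seconds=1), send + timedelta(minutes=10)]
sessions = [send + timedelta(minutes=10, seconds=4)]
print(f"{matched_click_share(clicks, sessions):.0%} of clicks have a matching session")
```

A low share right after send, rising for later clicks, is the scanner pattern. A low share across the whole send points more toward broken tracking.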

3) Fix the two most common tracking breaks

- Redirects stripping UTMs: check link shorteners, tracking redirects, and "safe link" wrappers.

- Cross-domain journeys: if the click lands on Domain A and converts on Domain B, set up cross-domain measurement or you'll undercount conversions.

4) Turn on ESP bot filtering (then verify it)

Most ESPs offer some form of bot filtering. Turn it on, then sanity-check with:

- click-delay distribution (seconds after delivery),

- "all-links clicked" rate,

- GA4 sessions vs clicks.
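For the click-delay check, a coarse histogram is usually enough to see whether filtering worked. The bucket boundaries below are assumptions chosen around the 1-5 second rule; the input is a list of "seconds between delivery and click" that you'd derive from your own export.

```python
from collections import Counter

# (upper bound in seconds, label); anything above the last bound is "60min+".
BUCKETS = [(5, "0-5s"), (60, "5-60s"), (3600, "1-60min")]


def delay_histogram(delays_seconds: list[float]) -> Counter:
    """Count clicks per delay bucket."""
    counts: Counter = Counter()
    for delay in delays_seconds:
        label = next((name for limit, name in BUCKETS if delay <= limit), "60min+")
        counts[label] += 1
    return counts


# Hypothetical delays (seconds after delivery) from one campaign.
delays = [1, 2, 3, 40, 600, 7200]
for label, count in delay_histogram(delays).items():
    print(f"{label:>8}: {count}")
```

If the "0-5s" bucket is still heavy after the ESP's bot filter is on, the filter is missing scanners and the manual flags are worth keeping.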

5) Roll out reporting in two lines for 4 weeks

For the first month, report both:

- Raw Unique CTR

- Bot-filtered Unique CTR

That overlap prevents panic when the "real" CTR is lower than the inflated one you were used to.
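Producing both lines from the same data keeps them comparable. A minimal sketch, assuming you already have a set of recipient IDs who clicked and a set flagged as likely scanners (both hypothetical inputs here):

```python
def unique_ctr(clickers: set[str], delivered: int) -> float:
    """Unique CTR (%) = unique clickers / delivered * 100."""
    if delivered <= 0:
        raise ValueError("delivered must be positive")
    return len(clickers) / delivered * 100


def two_line_report(clickers: set[str], flagged: set[str], delivered: int) -> dict[str, float]:
    """Raw and bot-filtered unique CTR over the same delivered denominator."""
    return {
        "raw_unique_ctr": round(unique_ctr(clickers, delivered), 2),
        # Flagged recipients leave the numerator only; they still received the email.
        "bot_filtered_unique_ctr": round(unique_ctr(clickers - flagged, delivered), 2),
    }


clickers = {"r1", "r2", "r3", "r4", "r5"}
flagged = {"r4", "r5"}
print(two_line_report(clickers, flagged, delivered=100))
```

Keeping `delivered` unchanged in both lines is a deliberate choice in this sketch: the filter removes suspect clicks, not recipients.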

Weekly KPI snapshot template (copy/paste)

Use this as your weekly email performance block. It's built for 2026 reality (MPP + scanners) and keeps the team focused on outcomes.

Weekly Email KPI Snapshot (Week of ____ )

- Delivered: ____

- Unique CTR (raw): ____%

- Unique CTR (bot-filtered): ____%

- Sessions from email (GA4, UTM): ____

- Conversion rate (primary goal): ____%

- Revenue per email (if applicable): ____

- Unsubscribe rate: ____%

- Spam complaint rate: ____%

Notes: ____ (deliverability changes, list changes, offer changes, tracking issues)

Opinionated rule: if you can't tie clicks to sessions, don't celebrate CTR.

How to improve email click through rate (prioritized levers that still work)

Most CTR advice is "write better copy." Sure. It's also usually the slowest lever.

The fastest wins are structural, and they stack: relevance first, then clarity, then layout. Once those are solid, copy and design tweaks actually matter because you're not trying to rescue a bad offer with a prettier button.

Use this / skip this (prioritized)

Use this: segmentation relevance (fastest lever)

If the offer isn't relevant, no CTA tweak will save you. Segment by intent/action when you can (visited pricing, started checkout, clicked last campaign, requested a demo). If you need a deeper playbook, start with segment design.

A personalization test from BrewDog lifted email CTR 15.6%. The exact lift will vary, but the principle doesn't: relevance beats design.

Use this: one primary CTA (second fastest lever)

One email, one job. Multiple competing CTAs dilute clicks and make "what worked" impossible to interpret. This is the same principle behind high-performing CTA design in outbound.

If you need secondary links, de-emphasize them (smaller, lower in the layout) and keep the primary CTA visually dominant.

Use this: mobile-first layout (third fastest lever)

Most people scan email on a phone. If your CTA is below the fold, tiny, or surrounded by dense text, CTR dies.

Buttons usually outperform text links for the primary action. Button-vs-text tests often show meaningful lifts (iContact has shared examples up to 28%), and that matches what we've seen in day-to-day programs.

Skip this: obsessing over CTOR

CTOR is a denominator problem in 2026. Use it as a diagnostic inside a single segment, not as your north star.

Skip this: "clever" anti-bot tricks

Hidden links and trap links can backfire and hurt sender reputation. Clean the reporting instead of trying to outsmart scanners.

One more thing that people hate hearing: list hygiene isn't optional. If you're doing B2B email and you keep sending to stale addresses, you'll drag down deliverability, which drags down engagement, and then you end up rewriting emails that were never the problem in the first place. Tools like Prospeo (the B2B data platform built for accuracy) help here because you can verify emails in real time with 98% accuracy and work off data refreshed every 7 days, which keeps your "delivered" denominator honest and your CTR tied to real recipients. If you’re rebuilding your list process, use a simple email verification workflow and a repeatable Email Verification List SOP.

CTR troubleshooting decision tree (diagnose before you optimize)

Most teams jump straight to "rewrite the email." That's how you waste a month.

Use this decision tree first:

CTR high + conversions low

- Check scanner clicks (1-5 second clicks, all-links clicked)

- Check message/landing mismatch (email promises X, page delivers Y)

- Check attribution (UTMs missing, redirects stripping params)

CTR low + opens high

- Assume MPP inflated opens; ignore CTOR panic

- Diagnose relevance/CTA strength (segment too broad, CTA unclear)

CTR down after list cleanup

- Denominator changed (more delivered, fewer "lucky" engaged leftovers)

- Inboxing may have shifted (new sending pattern, new domain reputation)

Clicks without opens

- Image blocking (open pixel didn't fire)

- Gmail clipping at 102KB (pixel at bottom doesn't load)

Mini table to speed triage:

| Symptom | Likely cause | First fix |
|---|---|---|
| Clicks in 3 sec | Scanner | Exclude 1-5s |
| Opens spike | Apple MPP | Focus on CTR |
| CTR up, no sessions | UTMs/redirects | Audit tracking |
| No opens, clicks | Pixel | Move pixel up |

FAQ

What's the difference between CTR and CTOR?

CTR measures clicks relative to delivered emails; CTOR measures clicks relative to opens. In 2026, CTOR gets distorted by Apple MPP inflating opens, so CTR is usually the more stable KPI. Use CTOR for diagnosis inside one segment, not for goal-setting.

Should I use sent or delivered in the CTR formula?

Use delivered, because it removes bounces and makes the metric comparable week to week. If 6% of your list bounces after an import, a sent-based calculation makes your creative look worse even when recipient behavior didn't change. Track bounces separately as a deliverability KPI.

What's a good email click-through rate in 2026?

For many programs, 2-5% is a solid "good" range, with automations often higher than broadcasts. Newsletters and promos commonly land around 1-3%, while high-intent flows (welcome, cart, post-purchase) often exceed 5%. Compare only after you've standardized the definition (unique clickers ÷ delivered).

Why do I see clicks but no website sessions?

It's usually security scanners clicking links instantly, or UTMs/redirects breaking attribution. Flag clicks within 1-5 seconds of delivery and "all-links clicked" patterns, then compare click timestamps to GA4 sessions. If sessions are flat, treat the clicks as non-human until proven otherwise.

How do I improve CTR without wrecking deliverability?

Start with list hygiene and measurement, then optimize creative, because otherwise you're tuning noise. If you're cleaning or enriching a B2B list, Prospeo is a strong pick for keeping your data fresh (7-day refresh cycle) and your sends accurate (98% verified email accuracy), which helps protect deliverability so CTR reflects real humans.

Bounce spikes quietly poison your CTR math - inflating sent counts while real engagement stays flat. Prospeo verifies every email through a 5-step process with spam-trap removal and catch-all handling, so your delivered numbers stay honest and your click rates mean something.

Clean lists make clean metrics. Start with 100 free credits.

Summary: what to do with email click through rate in 2026

Email click through rate is still worth tracking in 2026, but only after you standardize the definition (unique clickers ÷ delivered), separate raw vs bot-filtered reporting, and validate spikes against GA4 sessions and conversions.

Once measurement's clean, the biggest lifts come from relevance/segmentation, one primary CTA, and mobile-first layout, not gimmicks. If you’re tightening the whole stack, make sure your email deliverability fundamentals and SPF DKIM & DMARC setup are solid, and watch your domain reputation as you scale.