Deal Health: The Practical Guide to Scoring, Interpreting, and Improving It

$15k/year for "AI deal scoring" and your reps still can't tell you what's actually wrong with the deal. That's the dirty secret: deal health isn't a dashboard problem, it's an operating system problem. If you don't tie the score to specific behaviors and next actions, it becomes decoration in your CRM.

A score that doesn't change what reps do is just a color.

What you need (quick version)

This works when it's simple, enforced, and tied to actions. If you try to model everything, you'll ship nothing - and the team will keep running pipeline reviews on vibes.

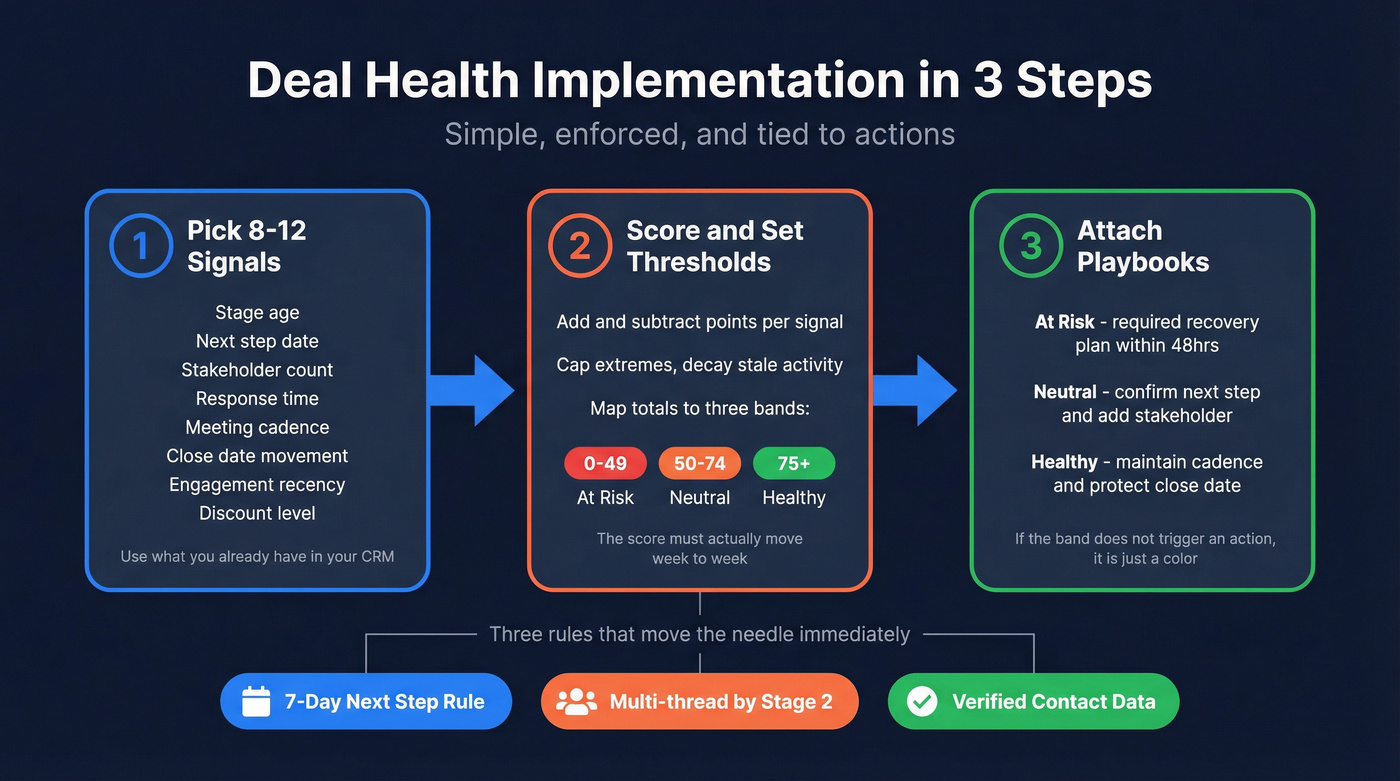

3-step implementation (do this in order):

1. Pick 8-12 signals you already have. Stage age, next step date, stakeholder count, response time, meeting cadence, close date movement, engagement recency, and discount level get you 80% of the value without buying anything new. These become your core indicators.

2. Turn signals into points + thresholds. Add/subtract points, cap extremes, and decay stale activity so the score actually moves. Then map the total into three bands your org can remember: at risk / neutral / healthy.

3. Attach playbooks to each band. Every band needs default actions for reps and a review motion for managers. If "at risk" doesn't trigger a required recovery plan, you don't have a system - you have a color.

Implement first (these three move the needle immediately):

- The 7-day next-step rule: If there's no scheduled next step within 7 days, the deal's at risk. No debate. This is the single best "anti-stall" rule in B2B sales.

- Multi-threading by stage 2/3: Once you're past early discovery, you should have at least two stakeholders engaged (ideally power + user). Single-threaded deals don't "surprise close." They surprise slip. (If you want a deeper playbook, see multi-threading.)

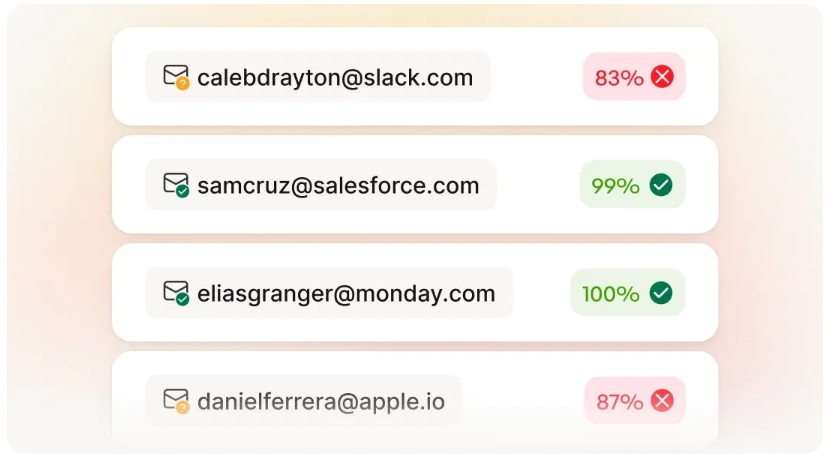

- Verified contact enrichment: Bad emails and missing mobiles create fake "low engagement" signals. Prospeo's verified contact data keeps you from labeling a deal unhealthy when you simply can't reach the right people. (More on this in B2B contact data decay.)

One warning that'll save you confusion: some platforms output percentiles, not true win probability. Gong's score, in particular, can look like "80% likelihood" when it really means "healthier than 80% of similar deals."

What is deal health (and what it isn't)

Deal health is a single score (or status) that summarizes the likelihood and viability of a deal closing, based on signals you can observe in your CRM and sales motion.

The best mental model is an early warning system. In customer success, health scores flag churn risk 60-90 days early; deal health should do the same for pipeline: surface risk while you can still change the outcome, not after the close date's slipped twice.

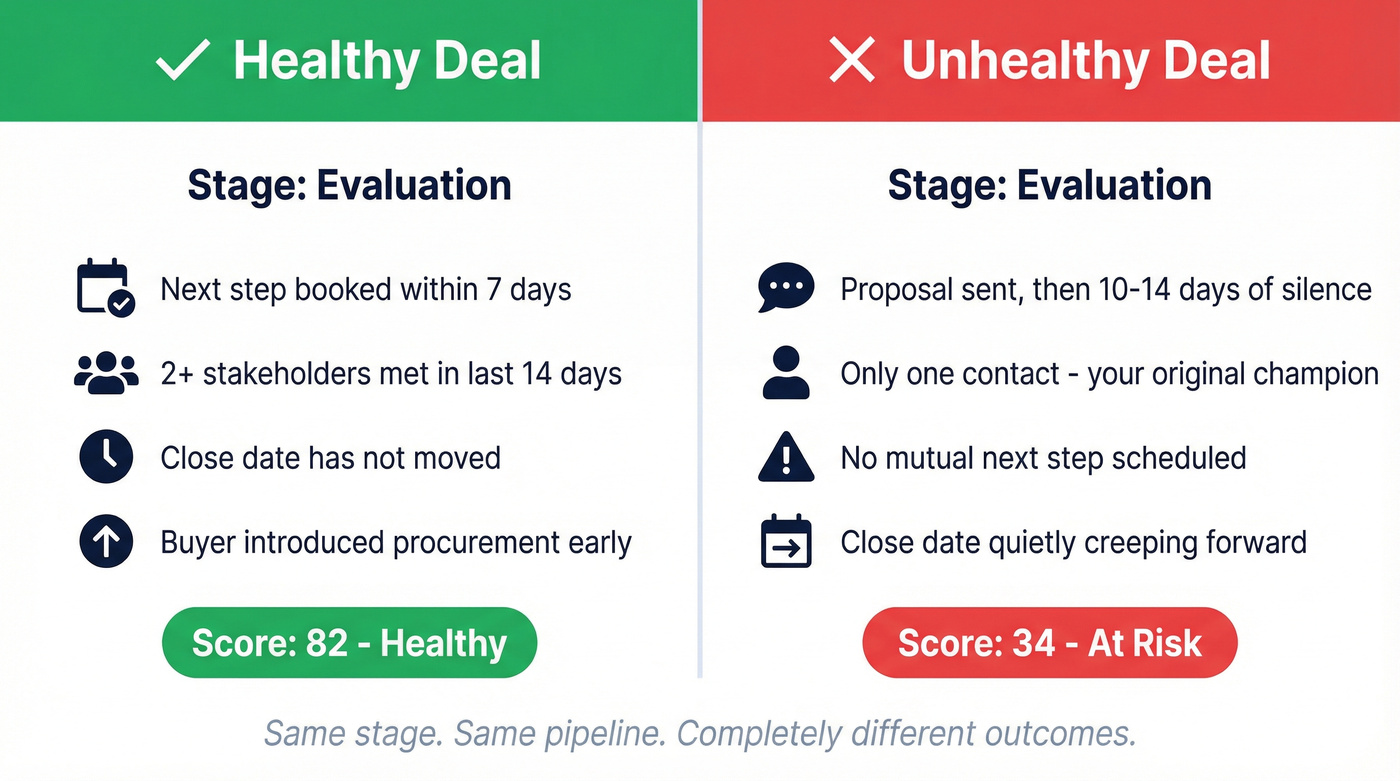

What "healthy" vs "unhealthy" looks like (same stage, different reality)

Here are real-world patterns that make this useful:

- Same stage ("Evaluation"), healthy: next step's booked within 7 days, the buyer introduced procurement early, and you've met at least two stakeholders in the last 14 days. The close date hasn't moved. This deal has momentum.

- Same stage ("Evaluation"), unhealthy: proposal sent, then silence for 10-14 days, no mutual next step, and the only contact is your original champion. The stage says "Evaluation." The behavior says "stalled."

- Same stage ("Security/Legal"), healthy: legal's engaged, timelines are explicit, and the buyer's driving the process ("we need redlines by Friday"). Even if activity volume's lower, the deal's progressing like a winner.

- Same stage ("Security/Legal"), unhealthy: you're "waiting on legal" but can't name who is reviewing it, the close date keeps creeping, and every follow-up is a one-way check-in. That's not legal. That's limbo.

Here's what it isn't

- It isn't your stage. Stage is where the buyer is in their journey. Health is whether the deal's progressing like a deal that wins.

- It isn't rep optimism. If your "Commit" deals keep slipping, you've already learned why vibes don't forecast.

- It isn't a black box you outsource to AI. AI can help, but if reps don't understand the levers, they won't change behavior.

Use it if:

- You run weekly pipeline reviews and want them to be about root causes, not storytelling. (If your meetings are chaotic, tighten the motion with a pipeline review cadence.)

- You want a consistent way to spot stalled deals across reps, segments, and regions.

- You're ready to enforce a few non-negotiables (next step hygiene, multi-threading, close date discipline).

Skip it (for now) if:

- Your stages are basically "demo done / proposal sent." That's seller activity, not buyer progress.

- Your activity logging is fragmented across tools, inboxes, and calendars. People will ignore the score because they don't trust the inputs. (A quick fix path: CRM automatic email logging.)

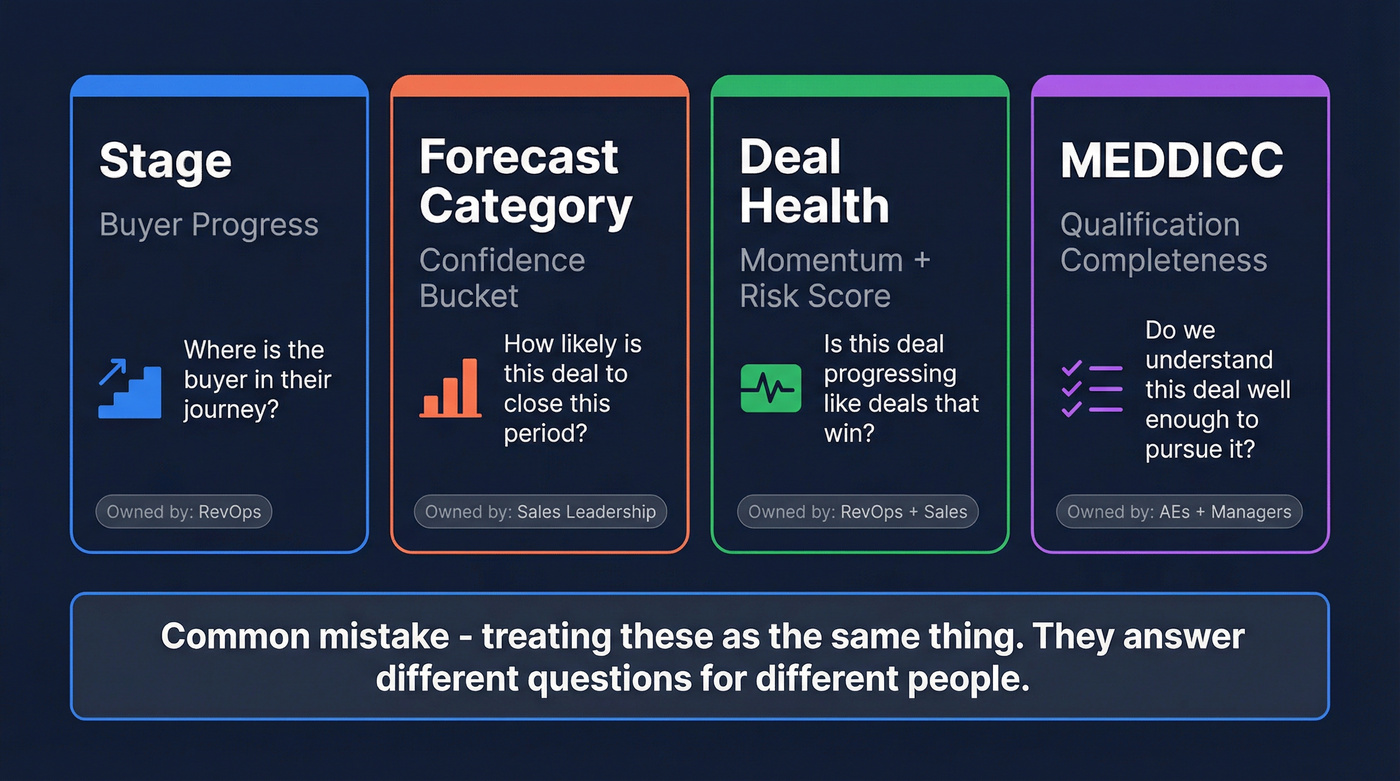

Deal health vs stage vs forecast category vs MEDDICC

These four concepts get mashed together constantly, and it's why teams end up with 14 fields nobody updates.

- Stage = buyer progress (what's happened in the buying journey).

- Forecast category = your confidence bucket for a time period. (If your definitions are messy, align on what is a sales forecast.)

- Deal health = momentum + risk signals summarized into a score/status.

- MEDDICC = qualification completeness (do we understand the deal well enough to pursue it correctly?).

MEDDICC is especially misunderstood. It's a qualification methodology, not "health." You can have a beautifully filled MEDDICC checklist and still have a dead deal because there's no next step, no champion, and the close date is fantasy.

I've seen this go sideways in the real world: a team rolled out MEDDICC, managers started grading deals like homework, and within a month reps were copy-pasting "Metrics" and "Decision Process" text just to get through inspection. The pipeline looked cleaner on paper, but close dates still slipped because nobody was enforcing the basics like mutual next steps and multi-threading.

Methodology rollouts are expensive and fragile. Major MEDDICC training programs are commonly quoted in the $100k-$500k range, and adherence drops fast without reinforcement baked into weekly rhythms, manager inspection, and stage gates that actually block bad behavior.

Here's the clean separation that works in production:

| Concept | Definition | Owned by | Used for |
|---|---|---|---|
| Stage | Buyer progress | RevOps | Process + reporting |
| Forecast category | Confidence bucket for a time period | Sales leadership | Forecast calls |
| Deal health | Risk/momentum score | RevOps + Sales | Save deals early |
| MEDDICC | Qualification map | AEs + managers | Deal strategy |

Forecast categories (keep them boring and consistent): Pipeline / Best Case / Commit / Closed / Omitted. If you add five more categories, you're just creating new ways to argue in forecast.

Hot take: deal health is more useful than forecast category for coaching, and forecast category is more useful than deal health for the CFO. Stop trying to make one field do both jobs.

Your deal health score is only as good as your contact data. If reps can't reach the right stakeholders, every deal looks stalled - even the ones that should close. Prospeo's 98% verified emails and 125M+ direct dials eliminate false "at risk" signals caused by bad data, so your scoring system reflects real deal momentum.

Stop scoring deals unhealthy when the real problem is missing contact data.

Deal health prerequisites: stages and CRM hygiene that make scoring usable

If you skip this, your score will get ignored. That's not theory - teams don't adopt scoring systems they don't trust.

Stage design (non-negotiable)

- Stages must reflect buyer progress, not seller actions.

- Each stage needs 2-4 exit criteria that are observable (for example: "mutual success plan agreed," "security review initiated," "evaluation stakeholders identified").

- Stage duration expectations should exist (even if rough). Without that, "stalled" becomes subjective and political.

CRM hygiene (minimum viable discipline)

- A required Next step field (text) and Next step date (date).

- Close date changes tracked (and reviewed).

- Activities logged consistently (calls, meetings, emails).

- One owner at all times. Unowned deals are zombie deals.

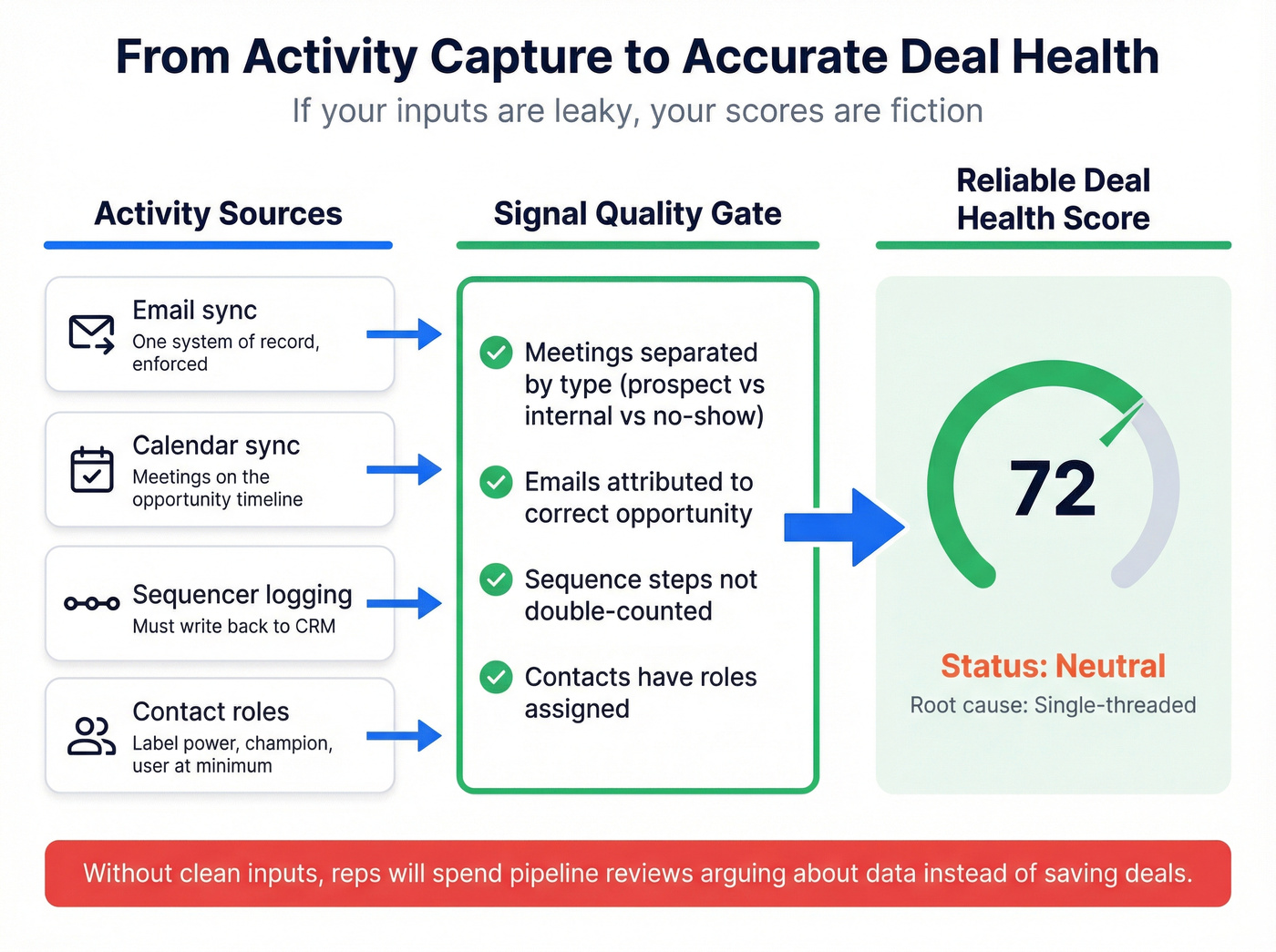

Minimum viable activity capture (the part everyone underestimates)

If your activity capture is leaky, scoring turns into "random red/yellow/green" and reps stop looking.

- Email + calendar sync: pick one system of record and enforce it. If meetings aren't on the opportunity timeline, your "engagement" signals are fiction.

- Sequencer logging: if sequences run in a sales engagement tool but don't write back to CRM, your model will punish reps who actually do the work.

- Meeting types: separate "internal," "prospect," and "no-show." Otherwise no-shows inflate "activity" while the deal quietly dies.

- Contact-role hygiene: label at least power / champion / user (even if it's a simple picklist). Stakeholder coverage is impossible to measure if every contact is just "Contact." (Related: building a real committee map with B2B decision making.)

Look, reps aren't wrong when they say the score feels random. If they remember three real conversations last week but the CRM shows "low engagement," you'll spend your pipeline review litigating data quality instead of saving deals, and everyone will quietly stop using the system.

How to build a deal health score (copy/paste template)

You don't need a PhD model. You need a score that's explainable, tunable, and hard to game.

A practical structure:

- Choose metrics (signals)

- Assign weights (importance)

- Combine into one formula (points → total score)

Minimum CRM field schema (so this actually works in a CRM)

If you only add one thing from this guide, add this schema. It's the difference between "nice spreadsheet" and "operational system."

- Deal Health Score (number, 0-100)

- Deal Health Status (picklist: At risk / Neutral / Healthy)

- Deal Health Root Cause (picklist: No next step / Single-threaded / Close date slippage / Stalled stage / Low engagement / Pricing & procurement / Other)

- Next Step (text) + Next Step Date (date)

- Optional but powerful:

  - Stakeholders Engaged (#, rollup/count)

  - Last Meaningful Interaction Date (date)

  - Close Date Moves (30d) (#, calculated from field history if possible)
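To make the schema concrete, here is a minimal Python sketch of the same fields as a typed record. It is an illustration only, not a CRM configuration: the class and attribute names (`DealHealthRecord`, `stakeholders_engaged`, and so on) are hypothetical stand-ins for whatever your CRM calls these fields.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class HealthStatus(Enum):
    AT_RISK = "At risk"
    NEUTRAL = "Neutral"
    HEALTHY = "Healthy"


class RootCause(Enum):
    NO_NEXT_STEP = "No next step"
    SINGLE_THREADED = "Single-threaded"
    CLOSE_DATE_SLIPPAGE = "Close date slippage"
    STALLED_STAGE = "Stalled stage"
    LOW_ENGAGEMENT = "Low engagement"
    PRICING_PROCUREMENT = "Pricing & procurement"
    OTHER = "Other"


@dataclass
class DealHealthRecord:
    # Required fields from the minimum schema
    score: int                                  # 0-100
    status: HealthStatus
    root_cause: Optional[RootCause]             # may be empty on healthy deals (assumption)
    next_step: str
    next_step_date: Optional[date]
    # Optional fields
    stakeholders_engaged: Optional[int] = None
    last_meaningful_interaction: Optional[date] = None
    close_date_moves_30d: Optional[int] = None

    def __post_init__(self) -> None:
        # Reject values outside the 0-100 range the schema defines.
        if not 0 <= self.score <= 100:
            raise ValueError("score must be between 0 and 100")
```

Using picklist-style enums for status and root cause is what keeps the fields reportable: free text here would make the "root-cause trend" view impossible to build.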

Where to surface it (this is what creates visibility):

- Opportunity list views (sort by score, filter "At risk")

- Pipeline inspection / deal boards (status badge + root cause)

- Manager dashboard (at-risk by stage, at-risk by rep, root-cause trend) (If you need KPI templates, use revenue dashboards.)

The bands (keep these constant)

Use these thresholds so your org has a shared language:

- 0-49 = at risk

- 50-74 = neutral

- 75+ = healthy
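As a small sketch, the band mapping can be a single function. The name `health_band` is hypothetical, and treating anything below 50 (including out-of-range negatives) as at risk is an assumption.

```python
def health_band(score: float) -> str:
    """Map a 0-100 deal health score to one of the three bands."""
    if score >= 75:
        return "healthy"
    if score >= 50:
        return "neutral"
    return "at risk"  # 0-49 (anything lower is also treated as at risk)
```

Keeping the thresholds in one place means list views, alerts, and dashboards can't drift apart on what "at risk" means.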

The template: 10 signals with weights

Pick signals that exist in any CRM and don't require perfect instrumentation.

Category A - Momentum (40 points max)

- Stage velocity vs expected (0 to +15)

- Close date stability (0 to +10)

- Next step scheduled (0 to +15)

Category B - Engagement (30 points max)

- Response time (0 to +10)

- Weekly touchpoints (0 to +10)

- Meeting recency (0 to +10)

Category C - Deal structure (30 points max)

- Stakeholder coverage (0 to +15)

- Champion strength (0 to +10)

- Procurement path known (0 to +5)

Total = A + B + C, capped at 100.

Example point rules (add/subtract + caps + decay)

Use rules like these (copy/paste and adjust):

Next step hygiene (max +15, can go negative):

- +15 if next step date is within 7 days

- +5 if next step date is 8-14 days out

- -15 if no next step date exists

Stage velocity (max +15):

- +15 if time in stage ≤ expected

- +5 if up to 1.5x expected

- -10 if ≥ 2x expected (stalled)

Close date stability (max +10):

- +10 if close date hasn't moved in 14 days

- 0 if moved once in 14 days

- -10 if moved 2+ times in 30 days

Engagement recency with decay (max +10):

- +10 if there's a meaningful interaction in last 7 days

- +5 if last 8-14 days

- 0 if last 15-21 days

- -10 if 22+ days (ghosted)

Stakeholder coverage (max +15):

- +15 if 2+ stakeholders engaged and one is director+ (or equivalent)

- +8 if 2+ stakeholders but no power identified

- -10 if single-threaded

Discount/procurement (max +5, but can trigger a rule):

- +5 if procurement path is known when discounting is in play

- -5 if discount requested and no procurement contact identified
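The rules above translate almost directly into code. The sketch below is one possible Python rendering, not a reference implementation. Function and parameter names are hypothetical, and a few gaps in the rules are filled with labeled assumptions: a next step more than 14 days out scores 0, an overdue next step counts as missing, time in stage between 1.5x and 2x expected scores 0, no discount request scores 0, and the total is floored at 0 as well as capped at 100.

```python
from typing import Iterable, Optional


def next_step_points(days_until_next_step: Optional[int]) -> int:
    # Assumption: an overdue (negative) next step is treated like a missing one.
    if days_until_next_step is None or days_until_next_step < 0:
        return -15
    if days_until_next_step <= 7:
        return 15
    if days_until_next_step <= 14:
        return 5
    return 0  # assumption: the rules don't cover dates 15+ days out


def stage_velocity_points(days_in_stage: float, expected_days: float) -> int:
    if expected_days <= 0:
        raise ValueError("expected_days must be positive")
    ratio = days_in_stage / expected_days
    if ratio <= 1.0:
        return 15
    if ratio <= 1.5:
        return 5
    if ratio >= 2.0:
        return -10  # stalled
    return 0  # assumption: 1.5x-2x is not specified in the rules


def close_date_points(moves_14d: int, moves_30d: int) -> int:
    if moves_30d >= 2:
        return -10
    if moves_14d == 0:
        return 10
    return 0  # moved once in the last 14 days


def engagement_points(days_since_interaction: int) -> int:
    # Stepwise decay: the same interaction is worth less as it ages.
    if days_since_interaction <= 7:
        return 10
    if days_since_interaction <= 14:
        return 5
    if days_since_interaction <= 21:
        return 0
    return -10  # ghosted


def stakeholder_points(engaged: int, has_power: bool) -> int:
    if engaged >= 2:
        return 15 if has_power else 8
    return -10  # single-threaded


def procurement_points(discount_requested: bool, procurement_known: bool) -> int:
    if not discount_requested:
        return 0  # assumption: the rule only applies when discounting is in play
    return 5 if procurement_known else -5


def total_score(points: Iterable[int]) -> int:
    # Capped at 100 per the template; the floor at 0 is an assumption.
    return max(0, min(100, sum(points)))
```

With only these six rules wired up, a deal with a next step in 3 days, on-pace stage time, a stable close date, an interaction 2 days ago, three stakeholders including power, and a known procurement path totals 70. The remaining points come from the signals that have no example rule here (response time, weekly touchpoints, champion strength).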

Mini-table you can paste into a spreadsheet

| Signal | Points | Cap |
|---|---|---|
| Next step date | -15 to +15 | 15 |
| Time in stage | -10 to +15 | 15 |
| Close date moves | -10 to +10 | 10 |
| Last interaction | -10 to +10 | 10 |
| Response time | 0 to +10 | 10 |
| Weekly touches | 0 to +10 | 10 |
| Meeting recency | 0 to +10 | 10 |
| Stakeholders | -10 to +15 | 15 |
| Champion | 0 to +10 | 10 |
| Procurement path | -5 to +5 | 5 |

Calibration tip: start with weights that feel obvious, run it for two weeks, then adjust based on what your best managers already know. I've run bake-offs where the "perfect" model lost to a simpler one because reps could actually explain it in a deal review, and that alone changed behavior.

The deterministic momentum rules that beat black-box scoring (when data's messy)

When your data's messy (and it usually is), deterministic rules win because they're enforceable. They also translate directly into workflows, alerts, and manager inspection queues.

Use this checklist as your "deal momentum constitution," a lightweight assessment you can run even before you trust any AI model:

Communication + cadence

- If prospect response time is <48 hours, the deal's healthier.

- If you're not getting weekly touchpoints, assume drift is happening.

- If a proposal goes out, follow up in <72 hours. Waiting longer is basically conceding. (If you need a system, use a prospect follow up cadence.)

Next-step discipline

- No next step in 7 days = at risk. This one rule alone is worth building a scoring system for.

- Enforcing the 7-day next-step rule drives +11% win rate in SMB pipelines because it forces mutual action instead of "checking in."

Stakeholder coverage

- Adding a 2nd stakeholder lifts win rate by 18% because it reduces single-thread risk and surfaces real buying dynamics.

- If you're discounting >10%, you need a procurement contact. Otherwise you're negotiating blind.

Now turn those into if/then rules your CRM can act on:

- IF no next step date THEN set health = at risk and create a task for the owner due tomorrow.

- IF proposal sent AND no activity in 72h THEN manager notification + "proposal follow-up" task.

- IF stage ≥ mid-funnel AND stakeholders engaged <2 THEN require a multi-thread plan before moving stages.

- IF discount >10% AND procurement contact missing THEN block "Commit" forecast category.
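Here is a minimal Python sketch of those four rules as a single evaluation function. It is illustrative only: the `Deal` fields and the action names (such as `block_commit_forecast`) are hypothetical labels, and in practice each action would map to a workflow, task, or validation rule in your CRM.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Deal:
    has_next_step_date: bool
    proposal_sent: bool
    hours_since_last_activity: float
    is_mid_funnel_or_later: bool
    stakeholders_engaged: int
    discount_pct: float
    has_procurement_contact: bool


def triggered_actions(deal: Deal) -> List[str]:
    """Return the actions the deterministic rules would fire for this deal."""
    actions: List[str] = []
    if not deal.has_next_step_date:
        actions += ["set_health_at_risk", "create_owner_task_due_tomorrow"]
    if deal.proposal_sent and deal.hours_since_last_activity >= 72:
        actions += ["notify_manager", "create_proposal_follow_up_task"]
    if deal.is_mid_funnel_or_later and deal.stakeholders_engaged < 2:
        actions.append("require_multi_thread_plan")
    if deal.discount_pct > 10 and not deal.has_procurement_contact:
        actions.append("block_commit_forecast")
    return actions
```

Because every rule is a plain boolean check, a rep can always see exactly which condition fired, which is the property that makes these rules enforceable.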

Here's the thing: deterministic rules feel "less intelligent," but they're the fastest path to behavior change. AI scoring is great once your instrumentation is solid. Until then, rules keep you honest.

Deal health playbooks: what to do when a deal's at risk

A number with no playbook is decoration. If your CRM shows "42/100" and nobody knows what to do next, you've built a scoreboard, not a system.

This is where most teams blow it, and it drives me nuts: they spend months arguing about weights, then do nothing different when a deal turns red.

Tie actions to score bands:

- At risk (0-49): manager review + required recovery plan within 24h

- Neutral (50-74): rep executes playbook + manager spot-check

- Healthy (75+): protect momentum + expand stakeholders

| Root cause | Signals | Actions |
|---|---|---|
| No next step | No next step date; last activity stale | Book mutual next step; send agenda; add calendar hold |
| Single-threaded | 1 contact; no power | Map stakeholders; ask for intro; run "2-thread" outreach |
| Close date slippage | Close date pushed 2+ times | Re-baseline timeline; confirm decision process; reset forecast |
| Stalled stage velocity | Time-in-stage ≥2x norm | Run a "stuck deal" call; identify blocker; create mutual plan |
| Low engagement | No replies; no meetings | Change channel; reframe value; involve exec sponsor; verify contacts |
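If you want the playbook table to drive task creation, it can live as a simple lookup. The sketch below is an assumption about how you might wire it, with hypothetical names (`PLAYBOOKS`, `recovery_actions`); the action text comes straight from the table.

```python
from typing import Dict, List

# Root cause -> default actions, copied from the playbook table.
PLAYBOOKS: Dict[str, List[str]] = {
    "No next step": ["Book mutual next step", "Send agenda", "Add calendar hold"],
    "Single-threaded": ["Map stakeholders", "Ask for intro", 'Run "2-thread" outreach'],
    "Close date slippage": ["Re-baseline timeline", "Confirm decision process", "Reset forecast"],
    "Stalled stage velocity": ['Run a "stuck deal" call', "Identify blocker", "Create mutual plan"],
    "Low engagement": ["Change channel", "Reframe value", "Involve exec sponsor", "Verify contacts"],
}


def recovery_actions(root_causes: List[str]) -> List[str]:
    """Collect playbook actions for every root cause, in order, without duplicates."""
    actions: List[str] = []
    for cause in root_causes:
        # Unknown causes (for example "Other") have no default playbook here.
        for action in PLAYBOOKS.get(cause, []):
            if action not in actions:
                actions.append(action)
    return actions
```

A deal labeled both single-threaded and slipping gets six default actions, which is a reasonable starting point for the recovery plan.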

If you want one manager-friendly artifact: require a 5-line recovery plan for at-risk deals:

- What changed?

- What's the blocker?

- Who's missing?

- What's the next step (dated)?

- What's the fallback close date if it slips?

I've seen teams cut pipeline review time by a third just by forcing root-cause labeling. No more 10-minute monologues. Just: "It's at risk because it's single-threaded and close date slipped. Here's the multi-thread plan."

How major platforms calculate deal health (so you can copy the good parts)

Most "deal health" products do the same core job: combine timing, activity, and stakeholder signals into a score, then surface insights. The differences that matter are (1) what the score actually means and (2) how often it refreshes.

Salesforce Pipeline Inspection (Einstein Opportunity Scoring)

Salesforce operationalizes this inside Pipeline Inspection using Einstein Opportunity Scoring plus deal insights.

Instead of showing 1-99 raw scores, Pipeline Inspection groups deals into tiers:

- High: 67-99

- Med: 34-66

- Low: 1-33

It also shows activity metrics like calls/meetings/emails in the last 7 or 30 days, plus stakeholder summaries that exclude contacts with no activity in those windows. That's a subtle but important detail: "stakeholders" only count if there's recent engagement.

Einstein uses standard opportunity fields, field history, tasks/activities, and synced email/calendar data. The model refreshes weekly, while insights can update multiple times daily.

Pricing-wise, Einstein scoring is packaged with higher Sales Cloud editions and Einstein add-ons. Expect roughly $75-$200/user/mo equivalent depending on what you already own.

HubSpot deal score (AI)

HubSpot's deal score outputs a percentage probability (for example, 85 = 85% likelihood of winning) based on factors like amount, close date changes, time in stage, activity recency/volume, next scheduled activity, time since the next step was updated, and owner changes.

Operationally, HubSpot's explicit about timing:

- New deals get an initial score in ~36 hours (up to 48 hours).

- Existing deals update within ~6 hours when the score changes by ±3%.

- If nothing relevant changes for 2 weeks, HubSpot auto-updates anyway.

- Reopened deals don't receive a score (HubSpot recommends creating a new deal).

HubSpot gotcha: HubSpot sunset legacy scoring in 2026. If you've been in HubSpot for a while, audit your score properties and migrate to the newer scoring apps so dashboards don't quietly break.

Published pricing (Jan 2026 update): Starter $9/seat/mo annual (or $15 monthly), Pro $90/seat/mo annual (or $100 monthly) + $1,500 onboarding, Enterprise $150/seat/mo + $3,500 onboarding.

Outreach Deal Health (and why weights differ per deal)

Outreach's Deal Health is a 0-100 score with statuses (On-track / Needs review / At-risk). It's explicitly relative: health is compared to similar deals at similar stages.

The operational gotcha is refresh cadence: Outreach calculates the feature set once daily and produces scores at 10am, so actions taken today show up tomorrow. That 1-day lag matters if you're trying to run daily "save deals" motions.

The most useful concept Outreach explains is dynamic weighting. Weights aren't fixed. They're computed as the impact on score if a metric were replaced with the org average. So "meetings" might matter a lot for one deal and barely matter for another.
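To see why a weight defined this way differs per deal, here is a toy Python sketch of the idea. It is not Outreach's model: `toy_score`, its coefficients, and the metric names are invented for illustration, and the only thing borrowed from the description above is the "replace one metric with the org average and measure the change" step.

```python
from typing import Callable, Dict

Metrics = Dict[str, float]


def dynamic_weights(
    deal: Metrics,
    org_average: Metrics,
    score_fn: Callable[[Metrics], float],
) -> Dict[str, float]:
    """Weight of each metric = score change when it is swapped for the org average."""
    base = score_fn(deal)
    weights: Dict[str, float] = {}
    for name in deal:
        counterfactual = dict(deal)
        counterfactual[name] = org_average[name]
        weights[name] = base - score_fn(counterfactual)
    return weights


def toy_score(m: Metrics) -> float:
    # Hypothetical scoring function, used only to make the example runnable.
    return 10.0 * m["meetings_30d"] + 5.0 * m["stakeholders"]


org_avg = {"meetings_30d": 2.0, "stakeholders": 2.0}
deal_a = {"meetings_30d": 5.0, "stakeholders": 2.0}  # unusually many meetings
deal_b = {"meetings_30d": 2.0, "stakeholders": 4.0}  # unusually many stakeholders

weights_a = dynamic_weights(deal_a, org_avg, toy_score)
weights_b = dynamic_weights(deal_b, org_avg, toy_score)
```

In this toy setup, meetings carry all of the weight for `deal_a` and none for `deal_b`, even though the scoring function is identical. A metric only "matters" for a deal to the extent that deal deviates from the average on it.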

Outreach also has strict activity attribution logic (owner + account association), which is great for cleanliness and brutal if your CRM ownership is sloppy.

Deal Health requires Orchestrate, and Outreach sells on annual enterprise contracts. Expect $1,200-$2,500/seat/year in many mid-market setups, plus modules.

Outreach reports 81% precision for its ML model vs 34% for traditional rules-based models.

Gong deal likelihood scores (percentile vs probability)

Gong's deal likelihood is the most commonly misread score in revenue teams.

Gong uses a multi-model system: a base model trained across customers plus customization using your last 2 years of closed deals. It runs daily and draws signals from:

- 50% conversation intelligence (pricing/legal mentions, competitor talk, call warnings)

- 50% activity/timing/contacts/historical performance

There are prerequisites: you need at least 50 closed-won and 150 closed-lost deals in the last 2 years (with minimum deal lifespan).

The big gotcha: Gong's score is a percentile rank, not a literal win probability. A score of 80 means the deal looks healthier than 80% of similar deals in your history, not that it has an 80% chance to close.
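The difference is easy to see with numbers. The Python sketch below uses made-up data and hypothetical function names; it shows how a percentile rank is computed and why it says nothing on its own about the absolute chance of winning. It is a generic illustration, not Gong's method.

```python
from bisect import bisect_left
from typing import Sequence


def percentile_rank(value: float, history: Sequence[float]) -> float:
    """Percent of historical values that are strictly below `value`."""
    ordered = sorted(history)
    return 100.0 * bisect_left(ordered, value) / len(ordered)


def observed_win_rate(outcomes: Sequence[bool]) -> float:
    """Percent of historical deals that were actually won."""
    return 100.0 * sum(outcomes) / len(outcomes)


# Made-up raw model outputs for ten past deals, and whether each was won.
past_raw_scores = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
past_outcomes = [False, False, False, False, False, False, True, False, True, True]

rank = percentile_rank(85, past_raw_scores)    # healthier than 80% of past deals
base_rate = observed_win_rate(past_outcomes)   # only 30% of past deals were won
```

A deal can sit at the 80th percentile in a book of business where only 30% of deals close. The rank tells you where to spend attention first; it does not tell you what number to put in the forecast.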

Gong also reports its AI is 21% more precise than sales reps at predicting winners as early as week 4 of the quarter.

Gong Forecast is enterprise-priced and typically bundled. Expect $20k-$80k+/year depending on seats and modules.

Platform comparison table (copy the good parts)

| Platform | Score meaning | Refresh | Key inputs | Gotcha |
|---|---|---|---|---|
| Salesforce | Tiered health | Weekly model | Fields + activity + history | Needs clean email/calendar sync |
| HubSpot | Probability % | ~6h / 2w | Activity + dates + owner | Reopens get no score |
| Outreach | Relative 0-100 | Daily 10am | Activity + buyers (30d) | 1-day lag |
| Gong | Percentile rank | Daily | Convos + activity + contacts | Not probability |

Also in the ecosystem (context, not a deep dive)

If you're shopping beyond the big four: Clari is the heavyweight for enterprise forecasting and pipeline inspection workflows, often landing in the $30k-$100k+/year range depending on scope and seats. Salesloft sits closer to the engagement layer; teams use it to drive consistent activity and then feed those activities into CRM-based models (or pair it with conversation intelligence).

Different jobs. Same outcome: fewer "mystery slips."

Data quality: the hidden dependency (and why "low engagement" is often your contact data)

Most teams interpret "low engagement" as "buyer isn't interested." Half the time it's simpler: you're emailing the wrong person, the email's bad, or you don't have a working number.

Here's a scenario I've watched play out more than once: an AE sends a proposal, follows up twice, and gets nothing back. The score drops, the manager calls it "stalled," and the rep swears the buyer's gone dark. Then someone checks the email and it's a catch-all that never delivered, the champion changed jobs two weeks ago, and the only phone number is the company's main line. The deal didn't go cold. You just couldn't reach the humans.

Bad contact data creates false unhealthy signals:

- Emails bounce, so your sequencer shows "sent" but the buyer never saw it.

- You're single-threaded because you can't find the second stakeholder.

- Calls don't connect because you're dialing HQ lines or outdated mobiles.

- Meetings don't happen because your "next step" is with someone who can't buy.

I've watched scoring models get blamed for being "inaccurate" when the real issue was missing or stale contacts. Fix the inputs and the score suddenly starts matching reality.

The practical fix is verified enrichment, not more scoring logic. Prospeo ("The B2B data platform built for accuracy") gives you 300M+ professional profiles, 143M+ verified emails, and 125M+ verified mobile numbers, refreshed every 7 days with 98% email accuracy and a 30% mobile pickup rate. When your reachability is real, "low engagement" starts meaning what you think it means. (If you need an SOP, start with how to keep CRM data clean.)

Use this checklist to clean up "engagement" signals fast:

- Enrich every opportunity's top 3 stakeholders (power, champion, user).

- Verify emails before sequences go out (especially for new domains). (Tooling options: email verifier websites.)

- Add mobiles for late-stage deals so "no response" doesn't equal "no reach." (See: B2B phone number.)

- Refresh contacts weekly so job changes don't silently kill deals.

First 30 days rollout plan (start simple → calibrate → automate)

This fails when it's launched as a "feature." It works when it's launched as a cadence with owners, agendas, and consequences.

Week 1 - Define the minimum viable model (Owner: RevOps)

- Lock stage definitions (buyer progress).

- Pick 8-12 signals and implement the 3 bands (0-49 / 50-74 / 75+).

- Create the field schema: Score, Status, Root Cause, Next Step, Next Step Date.

- Decide cadence: daily score refresh, weekly review.

Deliverable by Friday: a dashboard/list view that shows (1) at-risk deals, (2) root cause, (3) next step date. This becomes your baseline overview for managers.

Week 2 - Launch deterministic rules first (Owner: Sales leadership + RevOps)

- Turn on the 7-day next-step rule and single-thread detection.

- Add proposal follow-up SLA (<72h) and response-time expectations (<48h).

- Train managers to ask "root cause + next action," not "why is it red?"

My opinion: if you can't enforce the next-step rule in week 2, pause the entire rollout. You're not ready for scoring; you're ready for discipline.

Week 3 - Calibrate with real deals (Owner: Sales managers)

- Review 20 won + 20 lost deals and see which signals actually separated them.

- Adjust weights, caps, and decay.

- Add one automation per root cause (task, alert, or stage gate).
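For the won-vs-lost review, a crude first pass is to compare the average value of each signal across the two groups. The Python sketch below assumes you can export each deal as a dictionary of numeric signals; the function name and the sample values are hypothetical, and with only 20 deals per group the output is a conversation starter for managers, not a statistical test.

```python
from statistics import mean
from typing import Dict, List

DealSignals = Dict[str, float]


def signal_separation(won: List[DealSignals], lost: List[DealSignals]) -> Dict[str, float]:
    """Mean of each signal in won deals minus its mean in lost deals."""
    signals = won[0].keys()
    return {
        name: mean(d[name] for d in won) - mean(d[name] for d in lost)
        for name in signals
    }


# Tiny made-up sample: stakeholder count separates the groups, weekly touches do not.
won_deals = [
    {"stakeholders": 3.0, "weekly_touches": 4.0},
    {"stakeholders": 2.0, "weekly_touches": 4.0},
]
lost_deals = [
    {"stakeholders": 1.0, "weekly_touches": 4.0},
    {"stakeholders": 1.0, "weekly_touches": 4.0},
]

gaps = signal_separation(won_deals, lost_deals)
```

Signals with a gap near zero are candidates for a lower weight; signals with a large gap are where the caps and thresholds deserve the most attention.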

Manager calibration prompt: "Would my best manager call this deal healthy? If not, which signal is lying?"

Week 4 - Operationalize reinforcement (Owner: Sales managers + Enablement)

Add reinforcement moments so fields don't decay:

- Pre-meeting (rep): confirm next step + stakeholders

- Post-meeting (rep): update next step + close date confidence

- Weekly reviews (manager): root cause + recovery plan for at-risk deals

Weekly review agenda (45 minutes):

- 10 min: at-risk count trend (by stage, by rep)

- 25 min: top 5 at-risk deals (root cause only; no storytelling)

- 10 min: commit hygiene (close date moves, single-threaded commits)

If you're using Outreach Deal Health, plan around the 1-day lag: don't run same-day "fix it now" queues based solely on the score. Use deterministic rules for same-day triggers, and the score for next-day prioritization.

FAQ

Is deal health a real win probability or just a ranking?

Some tools output a probability-style percentage (HubSpot), while others present a percentile rank against similar historical deals (Gong). Treat it as prioritization first, then confirm whether your platform's number is probability, percentile, or a tier.

How often should a deal health score update?

For most teams, update daily and review weekly. Daily keeps the signal actionable; weekly keeps coaching realistic.

Why doesn't my reopened deal have a score in HubSpot?

Reopened deals in HubSpot don't receive a deal score, so the clean workaround is to create a new deal for the reopened motion and associate it to the same company and contacts. That keeps scoring, automation, and reporting consistent instead of leaving you with a blank record.

What's the fastest way to improve "engagement" signals without spamming?

Improve reachability: verify the right stakeholders, add direct dials for late-stage deals, and refresh contacts weekly so job changes don't kill momentum. Prospeo helps here with 98% verified email accuracy, 125M+ verified mobiles, and a 7-day refresh cycle - so "no response" is less likely to be "can't reach."

What should I do if reps try to game deal health?

Design it to be hard to game: weight buyer-driven signals (mutual next step, stakeholder coverage, close date stability) more than raw activity volume, and use decay so one burst of activity doesn't keep a deal healthy for weeks. Then require a root-cause label for at-risk deals so coaching stays specific.

Multi-threading by stage 2 requires actual contact data for power sponsors and end users - not just your original champion's email. Prospeo refreshes 300M+ profiles every 7 days and returns 50+ data points per contact, so reps can engage the full buying committee before the deal stalls.

You can't multi-thread deals with contacts you can't reach.

Summary: make deal health an operating system, not a widget

If you want this to work in 2026, keep it explainable (8-12 signals), enforce a few deterministic rules (especially the 7-day next step), and attach playbooks that trigger real behavior.

Do that, and deal health stops being a pretty CRM badge and starts becoming the early-warning system that saves deals before they slip.