Multi-Agent Sales Playbooks: What Works, What Fails, and How to Build Yours in 2026

Your CEO just came back from a conference where Jason Lemkin showed SaaStr's results - 20+ AI agents, $4.8M in pipeline, 1.2 humans doing the work of 10. Now your CEO wants the same thing by Q4. This playbook is for the person who has to actually make that happen.

The off-the-shelf version of multi-agent sales playbooks everyone's selling doesn't exist yet. What does exist are principles, patterns, and a handful of real case studies - most of which come with caveats the vendors conveniently leave out. Gartner predicts AI agents will outnumber human sellers 10x by 2028, but warns that fewer than 40% of sellers will report those agents actually improved their productivity. That's a staggering gap between hype and reality.

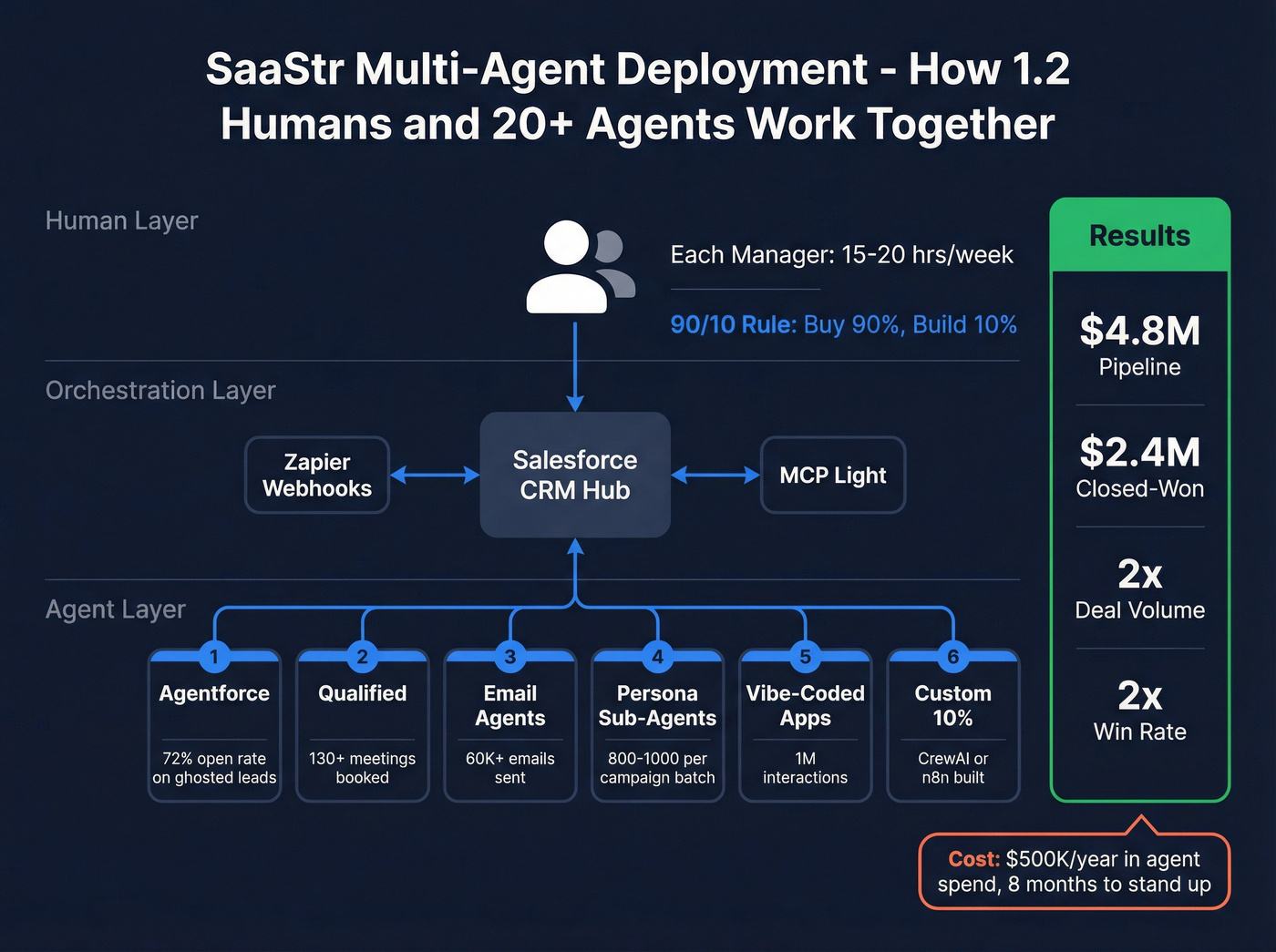

SaaStr's deployment is the most credible public case study available. $4.8M in additional pipeline. $2.4M closed-won. 20+ agents. But it runs $500K annually in AI agent spend and took 8 months to stand up. That's not a weekend project - it's a strategic bet with real money behind it.

This is the operational playbook I'd hand to the RevOps lead who just got the "make AI agents work" mandate. The wins are real. The failure modes are worse than anyone's telling you. And the gap between the two comes down to decisions you'll make in the first two weeks.

The Short Version

- Multi-agent deployments are real but immature. Over 40% of agentic AI projects will be canceled by end of 2027, per Gartner. The top failure modes: specification errors (42%), agent misalignment (37%), and missing verification layers (21%).

The one proven case study - SaaStr's deployment of 20+ agents - generated $4.8M in pipeline and $2.4M closed-won. It costs $500K/year in agent spend and took 8 months with dedicated human managers spending 15-20 hours per week each.

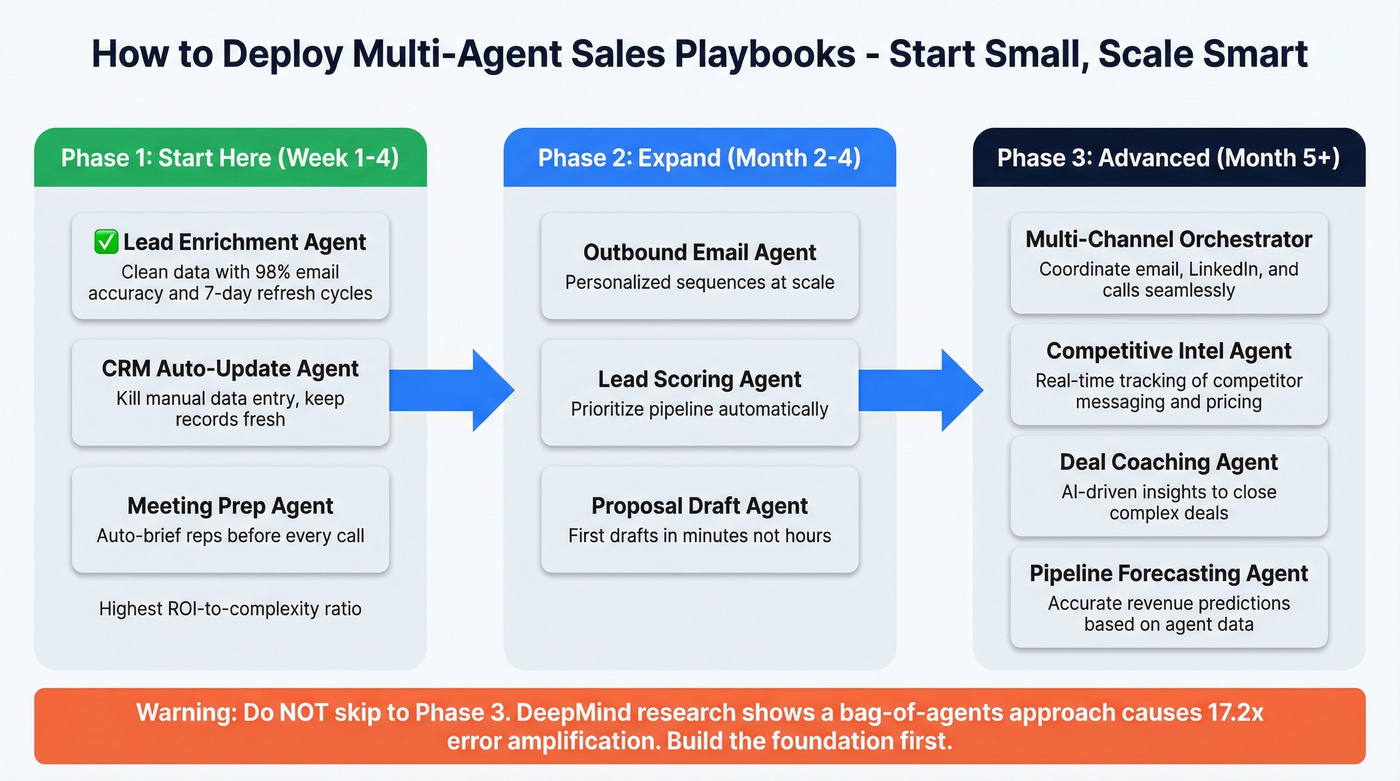

Start with 2-3 single-purpose agents (lead enrichment, CRM hygiene, meeting prep), not a 20-agent swarm. DeepMind research shows that a "bag of agents" approach can cause 17.2x error amplification.

Fix your data layer first. Agents automate failure when fed bad contact data. A 7-day refresh cycle and 98% email accuracy is a practical baseline your agents need - without clean inputs, every agent downstream compounds errors.

Buy 90%, build 10%. SaaStr's own rule. Use CrewAI or n8n for the custom 10% where no vendor does it well.

What Multi-Agent Sales Playbooks Actually Are

A multi-agent sales playbook isn't a traditional sales playbook with AI sprinkled on top. It's not a single chatbot answering questions. And it's not the same as having Copilot in your CRM.

It's a structured framework where multiple specialized AI agents handle distinct stages of the sales pipeline - prospecting, outreach, meeting prep, proposals, CRM updates - coordinated through an orchestration layer. Each agent has a narrow job, focused context, and defined inputs and outputs. The "playbook" part is the coordination logic: which agent fires when, what data passes between them, and where humans stay in the loop. Think of it as the foundation of a prospecting agent stack - each layer purpose-built, each handoff deliberate.

Why does this matter? Specialized agents outperform generalist ones. An agent tuned to write personalized icebreakers using enrichment data will crush a general-purpose LLM asked to "help with sales." Narrow context means fewer hallucinations, more consistent output, and easier debugging when something breaks.

Flowla's taxonomy breaks the tool landscape into three useful categories: agent builders (configure logic, no code - think n8n or CrewAI), AI-enhanced platforms (existing tools like Salesforce or HubSpot layering AI into their workflows), and digital workers (fully-formed agents that own an entire job, like an AI SDR that runs outbound end-to-end).

The best mental model: assistive AI is a co-pilot - it suggests, you decide. Agentic AI is a smart intern you trust to run with specific playbooks. The intern still needs supervision, clear instructions, and good data.

One critical warning: Gartner estimates only about 130 of the thousands of agentic AI vendors are real. The rest are "agent washing" - rebranding existing automation or chatbots as "agents" to ride the hype cycle. If a vendor can't explain how their agents coordinate, share memory, or handle failures, you're looking at a chatbot in a trench coat.

Most production deployments use shared memory with Salesforce or HubSpot as the hub. SaaStr calls this "MCP light" - Zapier webhooks plus Salesforce as the central nervous system. Think of each agent spec as a job description: mission, inputs, outputs, guardrails, and escalation criteria.
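To make the job-description framing concrete, here is a minimal Python sketch of an agent spec as a data structure. The `AgentSpec` class, its field names, and the sample `meeting_prep` spec are hypothetical illustrations, not any framework's API; the point is that each part of the job description becomes something you can check before an agent runs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSpec:
    """Hypothetical agent 'job description': mission, inputs, outputs,
    guardrails, and escalation criteria."""
    name: str
    mission: str
    inputs: tuple[str, ...]
    outputs: tuple[str, ...]
    guardrails: tuple[str, ...] = ()
    escalate_when: tuple[str, ...] = ()

    def missing_inputs(self, record: dict) -> list[str]:
        """Return required input fields that are absent or empty in `record`."""
        return [f for f in self.inputs if not record.get(f)]


meeting_prep = AgentSpec(
    name="meeting_prep",
    mission="Deliver a one-page brief 2 hours before every call",
    inputs=("account_name", "attendees", "meeting_time"),
    outputs=("brief",),
    guardrails=("never email the prospect", "read-only CRM access"),
    escalate_when=("attendee not found in CRM",),
)

# Refuse to run (or escalate) when the spec's inputs aren't satisfied.
print(meeting_prep.missing_inputs({"account_name": "Acme", "attendees": ["Dana"]}))
```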

The Economics of Multi-Agent Sales Teams

The economic case for AI agents in sales isn't theoretical anymore. It's math - and the way companies measure success is shifting fast. Futurum Research found that "productivity gains" fell 5.8 percentage points as the #1 success measure for AI deployments. What replaced it? Direct financial impact. Companies stopped asking "are reps saving time?" and started asking "is this generating revenue?"

Sales reps spend only 28-30% of their week actually selling. The rest is research, data entry, CRM updates, meeting prep, and admin work. McKinsey estimates generative AI could add $2.6 to $4.4 trillion annually across use cases, with roughly 75% of that value concentrated in customer operations, marketing and sales, software engineering, and R&D. For sales teams specifically, multi-channel workflow optimization is where the biggest efficiency gains hide - automating the handoffs between email, phone, and direct mail that eat hours of rep time every week.

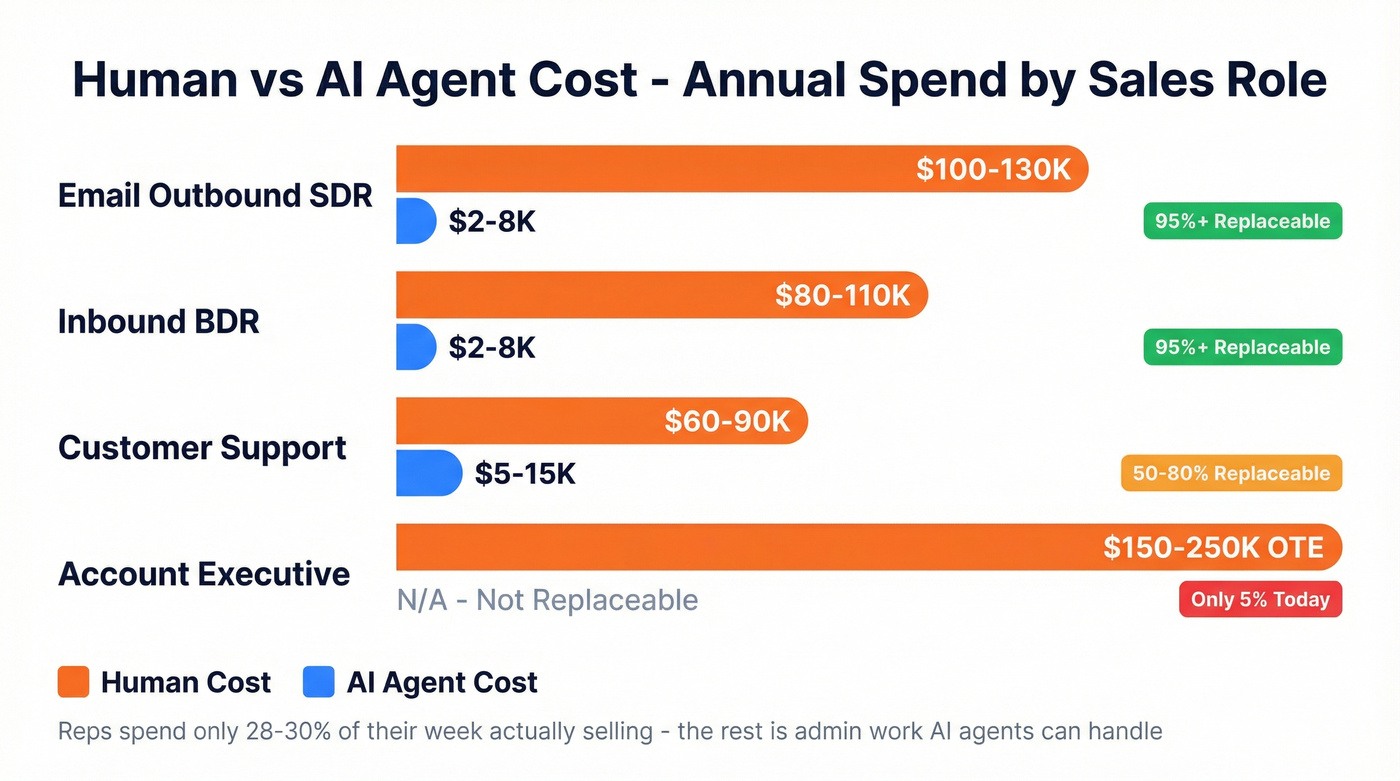

Here's the role-by-role breakdown:

| Role | Human Cost | AI Agent Cost | Replaceability |
|---|---|---|---|
| Email outbound SDR | $100-130K/yr | $2-8K/yr | 95%+ |
| Inbound BDR | $80-110K/yr | $2-8K/yr | 95%+ |
| Customer support | $60-90K/yr | $5-15K/yr | 50-80% |
| Account Executive | $150-250K OTE | N/A | ~5% today |

HubSpot's Customer Agent already resolves 50% of support tickets autonomously and cuts resolution time by 39%. Zendesk enterprise customers hit 60-80% automation - but only after months of training data.

Futurum's analysis of the Salesforce 2026 State of Sales research shows 87% of organizations using some form of AI, with 54% already deploying AI agents across the sales cycle. High-performing teams are 1.7x more likely to use AI agents for prospecting than underperformers. Meanwhile, 67% of sales professionals aren't on pace to hit quotas - the status quo isn't working either.

AI-native companies charge $50-100K/year for enterprise AI SDR packages because they're replacing $100-130K human SDRs. This is the new pricing logic - and it's why build-vs-buy math matters so much. A basic AI SDR tool at $2-8K/year delivers 70-80% of a human SDR's output. An enterprise package at $50-100K/year with forward-deployed engineer support gets closer to 90-95%.
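One rough way to compare these options is cost per SDR-equivalent: annual cost divided by the share of a human SDR's output the option delivers. The sketch below plugs in the ranges quoted above. Treating output share as something you can divide by is a simplification (you can't buy a fraction of a tool), so read the results as a sanity check, not a budget.

```python
def cost_per_sdr_equivalent(annual_cost: float, output_share: float) -> float:
    """Annual cost of one full human SDR's worth of output from this option."""
    if not 0 < output_share <= 1:
        raise ValueError("output_share must be in (0, 1]")
    return annual_cost / output_share


# Ranges quoted above: ((low cost, high cost), (low output share, high output share)).
OPTIONS = {
    "human SDR": ((100_000, 130_000), (1.0, 1.0)),
    "basic AI SDR": ((2_000, 8_000), (0.70, 0.80)),
    "enterprise AI SDR": ((50_000, 100_000), (0.90, 0.95)),
}

for name, ((low_cost, high_cost), (low_share, high_share)) in OPTIONS.items():
    best = cost_per_sdr_equivalent(low_cost, high_share)   # cheapest price, best output
    worst = cost_per_sdr_equivalent(high_cost, low_share)  # highest price, weakest output
    print(f"{name}: ${best:,.0f} - ${worst:,.0f} per SDR-equivalent")
```

On these figures a basic tool lands around $2,500-11,429 per SDR-equivalent, while the top of the enterprise range (about $111,111) overlaps the $100-130K human SDR band, which is exactly the "price against the human" logic described above.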

Here's the thing that drives me crazy about this market: most vendors hide their pricing behind "talk to sales" walls. They price against the human they're replacing, not the software they're competing with. Salesforce's Agentforce runs roughly $2/conversation at the base tier, but enterprise deployments run into six figures annually when you factor in customization and support.

The economics only work if the agents actually perform. And 42% of companies abandoned most AI initiatives in 2025, up from 17% in 2024. The gap between "this should save us money" and "this actually saved us money" is where most projects die.

Multi-agent playbooks fail when the data layer is broken. Specification errors already cause 42% of multi-agent failures, uncoordinated agents can amplify errors up to 17x, and bad contact data gives every one of those errors more to work with. Prospeo's 7-day refresh cycle and 98% email accuracy give your agents the clean inputs they need to actually perform.

Fix the data layer before you deploy a single agent.

What a Real Multi-Agent Deployment Looks Like - The SaaStr Case Study

SaaStr's multi-agent deployment is the most detailed public case study in the space, and it's worth studying because it comes from a named company with a public reputation on the line - not a vendor's anonymized "Customer X" success story.

The headline numbers: SaaStr went from 10+ humans in sales to 1.2 humans and 20+ AI agents, with the same net productivity. Eight months in, they'd generated $4.8M in additional pipeline and $2.4M in closed-won revenue. Deal volume more than doubled. Win rates nearly doubled. All of it was additive - it didn't cannibalize existing inbound revenue.

The operational details are where it gets real.

Over 60,000 high-quality AI-generated emails went out on the sales side, with nearly 1 million interactions through vibe-coded apps. Agentforce specifically delivered a 72% open rate and 10%+ response rate on ghosted leads - leads that human SDRs had already given up on. Qualified's AI SDR booked 130+ meetings since August. Campaigns were segmented into batches of 800-1,000 max per campaign, with sub-agents handling each persona. This isn't "blast 50,000 emails and pray." It's surgical segmentation with agent-level specialization per audience.

The cost? $500,000 annually for AI agents versus roughly $10,000 on Salesforce CRM - 50x more on agents than on the CRM itself. Each human manager spends 15-20 hours per week managing agents. That's not a typo - each manager, not combined. Multi-agent management is a real job.

SaaStr's biggest operational lesson is what they call the "90/10 Rule": buy 90% of your AI stack, only build the 10% where no vendor does it well. Their orchestration layer is "MCP light" - Zapier webhooks plus Salesforce as the hub. Not elegant, but functional. They also found that agents requiring deep training can't be self-trained yet - you need forward-deployed engineers from the vendor.

I'll be blunt: SaaStr's results are real, but they aren't replicable at $50K. I've seen teams try. It doesn't work. The $500K price tag isn't just software licenses - it's the human investment in orchestration, monitoring, and continuous tuning. If your average deal size is under $10K and your total sales tech budget is $50K, you don't need a 20-agent swarm. You need 2-3 agents that nail enrichment, CRM hygiene, and meeting prep - and a human team that can actually close.

Now the counter-narrative. Klarna went aggressive on AI-only, cutting headcount by 22%. Then they reversed course and started hiring humans again. The lesson isn't that AI agents don't work - it's that the "replace all humans" narrative is premature. The winning model in 2026 is hybrid: AI handles volume and repetition, humans handle judgment and relationships.

When evaluating vendor claims, always ask: "Is this a SaaStr-level case study, or is this a vendor quoting their own marketing?" Landbase advertises up to 7x conversion rates. SaaStr shows you the receipts - specific dollar figures, timelines, team sizes, and failure modes.

The 10 Agents Every Sales Team Needs

You don't need 20 agents on day one. You need 2-3 that actually work.

Start with Lead Enrichment, CRM Auto-Update, and Meeting Prep - the three with the highest ROI-to-complexity ratio. Then scale from there. The design principle from AIMultiple's benchmark: each agent should handle a task that takes a human roughly 30-35 minutes. Doubling task duration quadruples the failure rate, so failures grow much faster than task length does.

Prospecting Agents

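Lead Enrichment Agent. Takes a company domain and returns a full dossier on the account and its contacts, saving roughly 19.5 minutes of manual research per lead. The ICP scorer and everything downstream depend on its output.

ICP Scoring Agent. Ingests the enrichment data and scores leads against your ideal customer profile. Filters out bad fits before a human ever sees them. Needs clean, normalized company data (headcount, industry, revenue, tech stack) to score accurately.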

Personalized Icebreaker Agent. The difference between "Hi [First Name], I noticed your company is growing" and a genuinely relevant hook that references their Series B announcement last week. Pulls recent news, job changes, funding rounds, and social signals to generate a custom opening line for each prospect.

Outreach Agents

Sequence Builder Agent. Takes the enrichment data, ICP score, and icebreaker, then assembles a multi-step outreach sequence - email cadence, follow-up timing, channel mix. The best implementations generate 3-5 variants per persona and A/B test automatically. This is where multichannel coordination matters most - weaving email, phone, and direct mail touches into a single sequence rather than running each channel in isolation.
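Automatic A/B testing needs a rule for when a variant has actually won. One common choice, assumed here rather than taken from any specific tool, is a two-proportion z-test on reply rates. In this sketch `pick_winner` declares a leader only once it beats every other variant past the usual 1.96 cutoff; it applies no multiple-comparison correction, so treat it as a starting point.

```python
import math
from typing import Optional


def reply_rate_z(replies_a: int, sends_a: int, replies_b: int, sends_b: int) -> float:
    """Two-proportion z-statistic comparing variant A's reply rate to variant B's."""
    p_a, p_b = replies_a / sends_a, replies_b / sends_b
    pooled = (replies_a + replies_b) / (sends_a + sends_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    return (p_a - p_b) / se if se > 0 else 0.0


def pick_winner(variants: dict[str, tuple[int, int]], z_crit: float = 1.96) -> Optional[str]:
    """Return the top variant only if it beats every other variant by z >= z_crit.

    `variants` maps a variant name to (replies, sends).
    """
    ranked = sorted(variants, key=lambda v: variants[v][0] / variants[v][1], reverse=True)
    leader = ranked[0]
    for other in ranked[1:]:
        if reply_rate_z(*variants[leader], *variants[other]) < z_crit:
            return None  # not enough evidence yet: keep sending
    return leader


print(pick_winner({"A": (40, 400), "B": (12, 400), "C": (14, 400)}))
```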

Campaign Reporting Agent. The agent that tells you "Persona C in the fintech vertical has a 2% reply rate - kill it or rewrite." Monitors open rates, reply rates, bounce rates, and meeting conversions across all active sequences. Flags underperforming campaigns and suggests adjustments.
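The Campaign Reporting Agent's core check can be sketched as threshold rules over per-campaign stats. The `CampaignStats` fields and the 200-send, 3% reply, and 5% bounce thresholds are hypothetical placeholders; in practice the agent would pull these numbers from your sequencing tool and tune thresholds per segment.

```python
from dataclasses import dataclass


@dataclass
class CampaignStats:
    name: str
    sends: int
    replies: int
    bounces: int


def flag_campaigns(stats, min_sends=200, min_reply_rate=0.03, max_bounce_rate=0.05):
    """Return (campaign, reason) pairs for campaigns that need attention.

    Thresholds are illustrative placeholders, not benchmarks.
    """
    flags = []
    for c in stats:
        if c.sends < min_sends:
            continue  # too little data to judge yet
        if c.bounces / c.sends > max_bounce_rate:
            flags.append((c.name, "bounce rate high: check list quality"))
        if c.replies / c.sends < min_reply_rate:
            flags.append((c.name, "reply rate low: kill or rewrite"))
    return flags


print(flag_campaigns([
    CampaignStats("Persona C / fintech", sends=500, replies=10, bounces=5),
    CampaignStats("Persona A / SaaS", sends=500, replies=30, bounces=40),
    CampaignStats("New test", sends=50, replies=0, bounces=0),
]))
```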

Meeting Agents

Every AE has scrambled to prep for a call 5 minutes before it starts. The Meeting Prep Agent eliminates that scramble entirely. It delivers a one-pager 2 hours before every call: company overview, recent news, attendee bios, past interactions from CRM, competitive intel, and suggested talking points. This is the single highest-ROI agent for AEs - we've seen teams report it's the one agent nobody wants to give up once they've used it.

Automated Scheduling Agent. Handles the back-and-forth of finding a meeting time. Integrates with calendar tools, sends booking links, handles rescheduling. Eliminates the 3-email chain that kills momentum. InsideSales data shows conversion rates are 8x higher in the first 5 minutes after lead submission - speed matters, and this agent delivers it (track this with speed to lead metrics).

Closing Agents

CRM Auto-Update Agent. CRM accuracy goes from roughly 40% to 95%+. It listens to calls, reads emails, and updates CRM fields automatically. This is the agent that finally solves the "reps don't update Salesforce" problem that's plagued every sales org since 2005.

Proposal Generator Agent. Takes meeting notes and CRM data, generates a draft proposal within 30 minutes of the call ending - versus 2+ hours manually. The human reviews and personalizes; the agent handles the template, pricing tables, and boilerplate.

Follow-Up Agent. Not generic "just checking in" emails. This agent monitors deals for stalled momentum and triggers contextual follow-ups that reference the last conversation, address likely objections, and include relevant content. For enterprise deals, it becomes essential for multi-threading - ensuring follow-ups reach multiple stakeholders across the buying committee, not just the single champion who goes dark.
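A minimal sketch of how a Follow-Up Agent might decide which deals need attention: flag deals with no activity for a set number of days, and flag deals where only one contact is engaged. The deal dict shape, the 14-day stall window, and the two-contact minimum are assumptions for illustration; writing the contextual email itself is left to the agent.

```python
from datetime import date


def deals_needing_follow_up(deals, today, stall_days=14, min_contacts=2):
    """Flag stalled deals and single-threaded deals (hypothetical rules)."""
    actions = []
    for deal in deals:
        idle = (today - deal["last_activity"]).days
        if idle >= stall_days:
            actions.append((deal["id"], f"stalled {idle} days: send contextual follow-up"))
        if len(deal["engaged_contacts"]) < min_contacts:
            actions.append((deal["id"], "single-threaded: reach another stakeholder"))
    return actions


deals = [
    {"id": "D1", "last_activity": date(2026, 1, 1), "engaged_contacts": ["champion"]},
    {"id": "D2", "last_activity": date(2026, 1, 20), "engaged_contacts": ["cfo", "vp_sales"]},
]
print(deals_needing_follow_up(deals, today=date(2026, 1, 22)))
```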

Agent Summary Table

| Agent | Stage | Data Flow | Time Saved per Task | ROI |
|---|---|---|---|---|
| Lead Enrichment | Prospect | Domain -> Full dossier | 19.5 min | High |
| ICP Scoring | Prospect | Enrichment -> Score | 10 min | High |
| Icebreaker | Prospect | Profile + news -> Opener | 8 min | Medium |
| Sequence Builder | Outreach | All data -> Cadence | 25 min | High |
| Campaign Report | Outreach | Metrics -> Flags | 30 min | Medium |
| Meeting Prep | Meeting | CRM + web -> Brief | 20 min | Very High |
| Auto-Schedule | Meeting | Calendar -> Booking | 15 min | High |
| Proposal Gen | Close | Notes -> Draft | 90 min | High |
| CRM Auto-Update | Close | Calls + emails -> Fields | Ongoing | Very High |
| Follow-Up | Close | Deal history -> Email | 10 min | Medium |

The pattern is clear: automate the boring stuff - research, data entry, scheduling, reporting. The teams seeing 2x meetings booked and 60%+ more time selling aren't replacing reps. They're removing the 70% of non-selling activities that were drowning them.

Before You Deploy a Single Agent - The Data Quality Prerequisite

This is Step 0. The non-negotiable prerequisite everyone skips.

It's also the single biggest reason agentic sales projects fail.

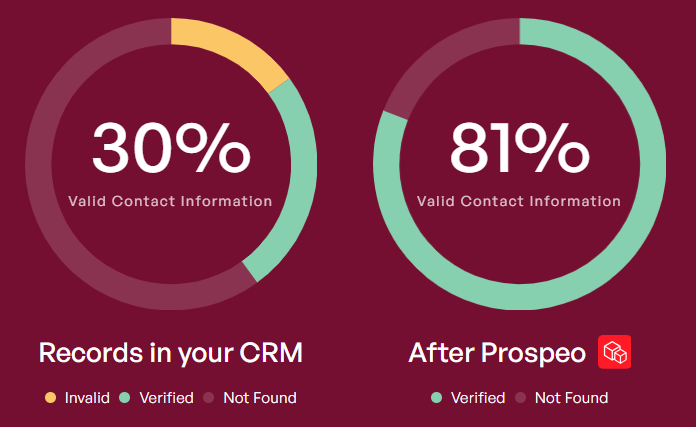

Here's the principle: AI agents scale bad decisions faster. If your CRM has 35% invalid emails, a lead enrichment agent will confidently build dossiers on people who don't exist at those addresses anymore. An outreach agent will send beautifully personalized emails that bounce. A campaign reporting agent will show you metrics that are meaningless because half the sends never arrived.

74% of high-performing professionals prioritize data cleansing and integration, versus just 54% of underperformers. That 20-point gap isn't a coincidence - it's the difference between agents that generate pipeline and agents that generate noise.

Run these 10 CRM health checks before you deploy anything:

- Missing contact owner - orphaned leads nobody's working

- Invalid email addresses - bounced emails still marked deliverable

- Duplicate domains - same company appearing 3-4 times with different spellings

- Lifecycle stage mismatch - leads marked "customer" who never closed

- UTM parameter blanks - no attribution data on inbound leads

- Lead source = "Other" - the graveyard of lazy data entry

- No consent flag - GDPR liability hiding in your database

- Bounced email still marked marketable - actively damaging your sender reputation (common root cause of a 550 Recipient Rejected spike)

- Company size null - can't score ICP without headcount

- Country not normalized - "US" vs "USA" vs "United States" vs "U.S.A."
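Several of these checks are simple enough to script against a CRM export before any agent touches the data. The sketch below assumes records are Python dicts with hypothetical keys (`owner`, `domain`, `email_bounced`, `marketable`, `lead_source`, `consent`, `company_size`, `country`); map them to your CRM's real field names. Duplicate detection here only normalizes case, protocol, and `www.`, so genuinely different spellings still need fuzzy matching, and the invalid-email, lifecycle-stage, and UTM checks are omitted for brevity.

```python
from collections import Counter

# Spellings that should all collapse to one canonical country value.
COUNTRY_ALIASES = {"us": "United States", "usa": "United States",
                   "u.s.a.": "United States", "united states": "United States"}


def normalize_domain(domain: str) -> str:
    """Lowercase and strip protocol, 'www.', and trailing slash."""
    d = domain.strip().lower()
    for prefix in ("http://", "https://", "www."):
        if d.startswith(prefix):
            d = d[len(prefix):]
    return d.rstrip("/")


def crm_health_report(records: list[dict]) -> Counter:
    """Count how many records fail each of several health checks."""
    report = Counter()
    domain_counts = Counter(normalize_domain(r["domain"]) for r in records if r.get("domain"))
    for r in records:
        if not r.get("owner"):
            report["missing_owner"] += 1
        if r.get("domain") and domain_counts[normalize_domain(r["domain"])] > 1:
            report["duplicate_domain"] += 1
        if r.get("email_bounced") and r.get("marketable"):
            report["bounced_but_marketable"] += 1
        if (r.get("lead_source") or "").strip().lower() == "other":
            report["lead_source_other"] += 1
        if not r.get("consent"):
            report["no_consent_flag"] += 1
        if r.get("company_size") is None:
            report["company_size_null"] += 1
        country = (r.get("country") or "").strip()
        canonical = COUNTRY_ALIASES.get(country.lower())
        if canonical and canonical != country:
            report["country_not_normalized"] += 1
    return report
```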

Once you've identified the gaps, you need a data layer that keeps records accurate and fresh - not a one-time cleanup, but a recurring automation.

Most data providers refresh every 6 weeks, while B2B data decays by roughly 30% a year, so stale records pile up between refreshes. That decay is invisible until your agents start acting on it. Prospeo's enrichment layer runs a 7-day refresh cycle with 98% email accuracy, returning 50+ data points per contact. Connect it via API, push enriched records into Salesforce or HubSpot, and your agents inherit clean data by default.
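Whatever provider you use, a recurring refresh boils down to selecting the records whose last enrichment is older than the cycle length. This sketch assumes each record carries a hypothetical timezone-aware `last_enriched_at` timestamp; the actual enrichment call and CRM push are left out because they depend on your vendor's API.

```python
from datetime import datetime, timedelta, timezone

REFRESH_INTERVAL = timedelta(days=7)  # the 7-day cycle discussed above


def due_for_refresh(records, now=None, interval=REFRESH_INTERVAL):
    """Return IDs of records never enriched, or enriched longer ago than `interval`."""
    now = now or datetime.now(timezone.utc)
    return [
        r["id"] for r in records
        if r.get("last_enriched_at") is None or now - r["last_enriched_at"] > interval
    ]
```

Run it on a schedule (for example, nightly) and send only the returned IDs to enrichment, so each run touches stale records instead of the whole database.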

The proof is in the results. Meritt went from a 35% bounce rate to under 4% after deploying this data layer - and their pipeline tripled from $100K to $300K per week. Snyk's 50 AEs saw bounce rates drop from 35-40% to under 5%, with AE-sourced pipeline up 180% and 200+ new opportunities per month.

SaaStr spends $500K/year on AI agents. Don't let yours burn budget on bounced emails and stale contacts. At $0.01 per verified email with 143M+ verified addresses, Prospeo is the data foundation that makes your enrichment agent, your outbound agent, and your CRM hygiene agent actually work.

Your agents deserve better data than your reps got. Give them Prospeo.

Skip this step and everything after it is building on sand.

Why 40% of Multi-Agent Deployments Get Canceled

Gartner's prediction is stark: over 40% of agentic AI projects will be canceled by end of 2027 due to escalating costs, unclear business value, or inadequate risk controls. And 95% of AI pilot programs fail to deliver measurable business impact.

These aren't scare tactics. They're the base rate you're working against.

Specification Errors - Agents That Don't Know When They're Done

This is the #1 failure mode, accounting for 42% of multi-agent failures in peer-reviewed studies. Agents get stuck in loops, produce hardcoded answers, or simply don't know when their task is complete. An enrichment agent that keeps enriching the same contact over and over. A sequence builder that generates infinite variants without converging.

The fix: every agent needs explicit completion criteria. "Enrichment is done when all 5 required fields are populated or 3 API calls have returned no results." That simple.
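Here is that completion rule as a minimal Python sketch. `lookup` is a hypothetical stand-in for your enrichment API, and the five required fields are assumptions; the key property is that the loop has exactly two exits, so it cannot enrich the same contact forever.

```python
REQUIRED_FIELDS = ("email", "title", "company_size", "industry", "country")
MAX_EMPTY_CALLS = 3


def enrich_until_done(contact: dict, lookup) -> tuple[dict, str]:
    """Call `lookup` until all required fields are filled or 3 calls come back empty.

    `lookup` is a hypothetical stand-in for an enrichment API: it takes the
    current record and returns a dict of fields it found (possibly empty).
    """
    record = dict(contact)
    empty_calls = 0
    while True:
        missing = [f for f in REQUIRED_FIELDS if not record.get(f)]
        if not missing:
            return record, "complete"
        if empty_calls >= MAX_EMPTY_CALLS:
            return record, "gave_up"  # escalate or park; never loop forever
        # Only count results that fill a field we are still missing.
        found = {k: v for k, v in lookup(record).items() if k in missing and v}
        if found:
            record.update(found)
        else:
            empty_calls += 1
```

Because every call either fills at least one missing field or counts toward the empty-call limit, the loop makes at most eight calls in this configuration.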

Inter-Agent Misalignment - Agents Ignoring Their Teammates

37% of failures come from agents that don't coordinate properly. The ICP scoring agent uses one definition of "enterprise." The outreach agent uses another. Each agent works fine in isolation; together, they produce garbage.

LLM agents in group debate settings actually conform to the majority even when presented with weaker arguments - AI groupthink is real. Errors propagate and compound rather than getting caught and corrected.
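One way to stop two agents from disagreeing on what "enterprise" means is to give them a single shared definition instead of letting each prompt or workflow encode its own. The `Segment` enum, the 100- and 1,000-employee cutoffs, and both agent functions below are hypothetical; what matters is that the outreach agent reads the segment the scorer wrote rather than re-deriving it.

```python
from enum import Enum


class Segment(Enum):
    SMB = "smb"
    MID_MARKET = "mid_market"
    ENTERPRISE = "enterprise"


def segment_for(employee_count: int) -> Segment:
    """The one definition every agent imports. Cutoffs are illustrative."""
    if employee_count >= 1000:
        return Segment.ENTERPRISE
    if employee_count >= 100:
        return Segment.MID_MARKET
    return Segment.SMB


def icp_scorer(lead: dict) -> dict:
    return {**lead, "segment": segment_for(lead["employees"])}


def outreach_agent(lead: dict) -> str:
    # Reads the segment the scorer wrote instead of applying its own definition.
    return "enterprise_sequence" if lead["segment"] is Segment.ENTERPRISE else "velocity_sequence"


print(outreach_agent(icp_scorer({"employees": 2500})))
```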

Verification Failures - No Quality Checks

21% of failures stem from poor or missing final checks. The proposal generator produces a document with the wrong company name. The CRM auto-update agent overwrites a field a human just corrected.

Every agent chain needs a quality gate - either a human review step or a dedicated verification agent that checks outputs against known constraints before they hit production systems.
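A quality gate can be as simple as deterministic checks that run before anything reaches the CRM or a prospect. This sketch covers the two examples above: a proposal whose company name doesn't match the CRM account, and an agent update that would overwrite a field a human recently corrected. The `human_locked` set is assumed to come from something like your CRM's field history; how you populate it depends on your stack.

```python
def verify_proposal(proposal: dict, crm_account: dict) -> list[str]:
    """Return blocking problems; an empty list means the proposal may ship."""
    problems = []
    if proposal.get("company_name") != crm_account.get("name"):
        problems.append("company name does not match CRM")
    if not proposal.get("line_items"):
        problems.append("no pricing line items")
    return problems


def safe_crm_update(record: dict, agent_changes: dict, human_locked: set[str]) -> dict:
    """Apply agent changes, skipping fields a human recently edited."""
    allowed = {k: v for k, v in agent_changes.items() if k not in human_locked}
    return {**record, **allowed}


print(verify_proposal({"company_name": "Acme Inc", "line_items": []}, {"name": "Acme Corp"}))
```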

The 17x Error Trap

Google DeepMind research found that the "bag of agents" approach - throwing more LLMs at a problem without structured coordination - can cause 17.2x error amplification. Not 17% more errors. Seventeen times more errors.

The key finding: multi-agent coordination yields the highest returns when the single-agent baseline is below 45% accuracy. If your base model is already hitting 80%, adding more agents introduces more noise than value.

The Demo-to-Production Gap

Every vendor demo runs in 3 seconds with perfect data on a curated dataset. Production is different. Latency cascades from sequential agents turn that 3-second demo into 30-second delays. Observability becomes a black box - when something fails in a 5-agent chain, good luck figuring out which agent broke.
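A back-of-the-envelope model shows why long chains hurt. Assuming each agent fails independently (a simplification, since real chains propagate errors, which is how amplification happens), end-to-end success is the product of per-agent success rates and sequential latency is the sum. The 95% success rate and 6-second latency per agent are hypothetical, and this is a simple reliability model, not the methodology behind the DeepMind figure.

```python
import math


def chain_stats(stages):
    """stages: list of (success_rate, latency_seconds) for agents run in sequence.

    Assumes independent failures: end-to-end success is the product of
    per-stage rates, and sequential latency is the sum of per-stage latencies.
    """
    success = math.prod(rate for rate, _ in stages)
    latency = sum(seconds for _, seconds in stages)
    return success, latency


success, latency = chain_stats([(0.95, 6.0)] * 5)
print(f"end-to-end success {success:.1%}, latency {latency:.0f}s")
```

Five agents that each succeed 95% of the time complete the whole chain only about 77% of the time, and five 6-second hops add up to the 30-second delay described above.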

And then there's the sentiment gap. Landbase has a 4.8/5 rating on G2 - from 10 reviews, mostly small businesses. On Reddit, a user describes it as "the most awful, non-working product I've ever seen." Both can be true simultaneously: the demo is impressive, the production experience is painful.

Demand case studies with named companies, specific timelines, and failure modes - not highlight reels.

How to Structure Your Agent Team

Architecture matters more than agent count.

A well-coordinated team of 3 agents will outperform a chaotic swarm of 15 every time.

Three coordination patterns have emerged from both academic research and production deployments:

| Pattern | Best For | Risk | Example |
|---|---|---|---|
| Hierarchical | Multi-step workflows | Low | Planner delegates to workers |
| Parallel | Independent tasks | Medium | Multiple enrichment agents |
| Progressive | Quality-critical output | Low | Draft -> review -> polish |

Hierarchical supervision is the safest starting point. A planner agent decomposes a complex task into subtasks, delegates to specialist worker agents, and aggregates results. Cursor found that structured planner-worker decomposition works far better than a flat swarm. This pattern maps naturally to sales: an orchestrator agent receives a new lead, dispatches the enrichment agent, waits for results, triggers the ICP scorer, then routes qualified leads to the outreach agent.
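The lead-routing flow described above maps onto a simple planner function. This is a minimal sketch: the three worker functions are hypothetical stand-ins for real agents (in production each would call a model or vendor API), and the employee-count scoring rule and 60-point cutoff are placeholders.

```python
# Hypothetical stand-ins for real agents; each takes and returns a lead dict.
def enrichment_agent(lead: dict) -> dict:
    return {**lead, "employees": lead.get("employees", 0), "enriched": True}


def icp_scorer(lead: dict) -> dict:
    score = 80 if lead["employees"] >= 200 else 30  # placeholder scoring rule
    return {**lead, "icp_score": score}


def outreach_agent(lead: dict) -> dict:
    return {**lead, "status": "queued_for_outreach"}


def orchestrate(lead: dict, min_score: int = 60) -> dict:
    """Planner: run workers in order and route on the ICP score."""
    lead = enrichment_agent(lead)       # 1. dispatch enrichment, wait for the result
    lead = icp_scorer(lead)             # 2. score against the ICP
    if lead["icp_score"] >= min_score:
        return outreach_agent(lead)     # 3. qualified: hand off to outreach
    return {**lead, "status": "nurture"}  # unqualified: park for nurture


print(orchestrate({"domain": "acme.com", "employees": 500})["status"])
```

Keeping routing in one planner function means there is a single place to add logging, retries, or a human checkpoint later.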

Parallel execution with synchronization works when tasks are genuinely independent: for example, running enrichment, technographic lookup, and intent signal detection simultaneously, then merging the results into a unified lead profile. The risk? Synchronization failures - what happens when one agent returns data and another times out?
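One practical answer to the synchronization question is to give every parallel worker a timeout and record gaps instead of failing the whole lead. This sketch uses Python's `asyncio.gather` with `asyncio.wait_for`; the three worker coroutines are hypothetical, and `intent_signals` deliberately hangs to show the timeout path.

```python
import asyncio


async def enrichment(domain):      # hypothetical worker
    await asyncio.sleep(0.01)
    return {"employees": 250}


async def technographics(domain):  # hypothetical worker
    await asyncio.sleep(0.01)
    return {"tech_stack": ["Salesforce"]}


async def intent_signals(domain):  # hypothetical worker that hangs
    await asyncio.sleep(10)
    return {"intent": "high"}


async def build_profile(domain: str, timeout: float = 0.5) -> dict:
    workers = {"enrichment": enrichment, "technographics": technographics,
               "intent": intent_signals}
    # Run all workers at once; a timeout becomes a returned exception, not a crash.
    results = await asyncio.gather(
        *(asyncio.wait_for(fn(domain), timeout) for fn in workers.values()),
        return_exceptions=True,
    )
    profile = {"domain": domain, "missing": []}
    for name, result in zip(workers, results):
        if isinstance(result, Exception):
            profile["missing"].append(name)  # record the gap instead of failing the lead
        else:
            profile.update(result)
    return profile


print(asyncio.run(build_profile("acme.com")))
```

The profile comes back with enrichment and technographic data merged and `intent` listed under `missing`, so downstream agents can decide whether to proceed or retry.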

Progressive refinement is ideal for quality-critical outputs like proposals and personalized outreach. A draft agent generates the first version, a review agent checks for accuracy and tone, a polish agent handles final formatting.
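A minimal sketch of progressive refinement for an outreach opener: a draft agent writes, a review agent returns concrete problems, the draft is revised, and a polish step runs only after review passes. All four functions are hypothetical placeholders for model calls; the deliberately weak first draft shows the loop catching an unfilled `[First Name]` placeholder and a missing company mention. The revision limit routes stubborn drafts to a human instead of looping forever.

```python
def draft_agent(brief: dict) -> str:
    # Deliberately weak first draft: a generic template with a placeholder.
    return f"Hi [First Name], congrats on the {brief['trigger']}."


def review_agent(text: str, brief: dict) -> list[str]:
    """Return concrete problems; an empty list means the draft passes."""
    issues = []
    if "[First Name]" in text:
        issues.append("unfilled placeholder")
    if brief["company"] not in text:
        issues.append("no company mention")
    return issues


def revise(text: str, issues: list[str], brief: dict) -> str:
    # Second pass by the draft agent, addressing each review issue.
    if "unfilled placeholder" in issues:
        text = text.replace("[First Name]", brief["first_name"])
    if "no company mention" in issues:
        text += f" Curious what's next for {brief['company']}."
    return text


def polish_agent(text: str) -> str:
    return " ".join(text.split())  # collapse stray whitespace


def refine(brief: dict, max_revisions: int = 2) -> str:
    text = draft_agent(brief)
    for attempt in range(max_revisions + 1):
        issues = review_agent(text, brief)
        if not issues:
            return polish_agent(text)
        if attempt < max_revisions:
            text = revise(text, issues, brief)
    raise RuntimeError("draft never passed review: route to a human")


print(refine({"first_name": "Dana", "trigger": "Series B", "company": "Acme"}))
```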

Reply.io's production workflow for sales forecasting uses 8 specialized agents with an orchestrator: Data Collection, Market Trends, Pipeline Monitoring, Deal Prediction, Lead Scoring, Sales Recommendations, Forecasting, and Performance Monitoring. That's a mature implementation. Don't start there.

If your single-agent baseline is already hitting 80% accuracy on a task, adding more agents introduces more noise than value. Test the single-agent version first. Only add coordination when you've proven the single agent can't handle the complexity alone.

Build vs. Buy - What Multi-Agent Sales Stacks Actually Cost

The build-vs-buy decision is the most consequential choice you'll make, and the answer is almost always "buy most of it."

3-Year TCO by Approach

| Approach | Setup Time | Monthly Cost | 3-Year TCO | Best For |
|---|---|---|---|---|
| Enterprise SaaS | 2-4 weeks | $5-20K | $230-920K | Turnkey teams |
| SMB SaaS | Days | $50-500 | $2-18K | Small teams |
| Custom build | 6-18 months | Maintenance | $208-480K | Unique workflows |
| Open source | 6-18 months | Infrastructure | $50-300K | Technical teams |

The enterprise SaaS category includes Agentforce (Salesforce), which runs roughly $2/conversation at the base tier but scales into six figures for production deployments. SMB SaaS covers tools like Lyzr (Free: 100 actions/mo, Starter: $19/2,000 actions, Pro: $99/10,000 actions, Org: $999/100,000 actions) and CrewAI's managed tier ($39-99/mo). Open source means self-hosting CrewAI, LangGraph, or AutoGen - free software, but you're paying in engineering time and infrastructure.

Orchestration Framework Comparison

| Framework | Architecture | Best For | Ecosystem |
|---|---|---|---|
| CrewAI | Role-based teams | Sales agent workflows | Growing |
| LangGraph | Graph-based nodes | Complex routing | LangChain |
| AutoGen | Conversational | Rapid prototyping | Microsoft |

CrewAI if you want the fastest path to a working sales agent team - its role-based metaphor maps naturally to sales pipeline stages. LangGraph if you need complex conditional routing where different lead types trigger entirely different agent chains. AutoGen if you're prototyping and want results fast, though note it's merging into the broader Microsoft Agent Framework. n8n (free to self-host under its fair-code license, cloud plans from $24/mo) is the best option for non-technical teams connecting agents via visual workflows.

SaaStr's 90/10 rule remains the best guidance I've seen: buy 90% of your stack, only build the 10% where no vendor does it well.

The hidden cost everyone underestimates? Integration time. Budget double what vendors quote. A "2-week implementation" becomes 6 weeks when you factor in CRM field mapping, data normalization, testing, and the inevitable "that's not how our process works" conversations.
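To apply the "budget double" rule, fold a buffered integration estimate into your TCO math. In this sketch, `weekly_team_cost` (the loaded weekly cost of the people doing the integration) and the 2x `buffer` are assumptions you would replace with your own numbers.

```python
def three_year_tco(monthly_cost: float, setup_cost: float,
                   quoted_integration_weeks: float, weekly_team_cost: float,
                   buffer: float = 2.0, months: int = 36) -> float:
    """3-year TCO with the vendor's integration estimate multiplied by `buffer`."""
    integration = quoted_integration_weeks * buffer * weekly_team_cost
    return monthly_cost * months + setup_cost + integration


# Hypothetical: $500/mo tool, no setup fee, "2-week" quote, $3K/week of team time.
print(three_year_tco(500, 0, 2, 3_000))
```

With no setup or integration cost, $50-500/month over 36 months gives $1,800-18,000, which lines up with the SMB SaaS row in the TCO table; the integration term is what the vendor quote usually leaves out.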

Building Your Multi-Agent Sales Playbook - Weeks 1-12

This timeline is based on patterns from 100+ company deployments. The companies that succeed follow this sequence. The ones that fail skip to Week 5 and wonder why nothing works.

Weeks 1-2 - Fix Your Data Foundation

Audit your CRM against the 10 health checks from the data quality section. Deploy your data quality layer. Connect your enrichment API to your CRM - set up automated enrichment for new leads and a batch enrichment job for existing records. Establish baseline metrics: current bounce rate, CRM field completion rate, lead-to-meeting conversion rate, average time spent on research per rep.
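Those baselines are simple ratios, so it helps to compute them the same way before and after each agent goes live. This sketch assumes you've exported contacts and campaign counts yourself; the five required fields are a hypothetical definition of a "complete" record.

```python
REQUIRED = ("email", "title", "company_size", "industry", "country")


def baseline_metrics(contacts, sends, bounces, leads, meetings, research_minutes):
    """Snapshot the Week 1-2 baselines (inputs come from your own exports)."""
    filled = sum(1 for c in contacts for f in REQUIRED if c.get(f))
    return {
        "bounce_rate": bounces / sends if sends else 0.0,
        "field_completion_rate": filled / (len(contacts) * len(REQUIRED)) if contacts else 0.0,
        "lead_to_meeting_rate": meetings / leads if leads else 0.0,
        "avg_research_minutes_per_rep": (
            sum(research_minutes) / len(research_minutes) if research_minutes else 0.0
        ),
    }
```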

Don't skip this. I've watched teams jump straight to deploying outreach agents on top of a CRM with 35% invalid emails. They burned through sending domains in two weeks and spent the next month rebuilding their sender reputation. Two weeks of data cleanup would've prevented two months of damage.

Weeks 3-4 - Deploy Your First Agent

Pick one high-ROI, low-complexity agent. Lead Enrichment or CRM Auto-Update are the safest starting points - they have clear inputs, measurable outputs, and low risk if something goes wrong. A bad enrichment record is fixable. A bad outbound email damages your domain.

Measure everything against your baseline. If your CRM field completion rate was 40% and the auto-update agent pushes it to 85% in two weeks, you've got a clear win to show leadership.

Weeks 5-8 - Add Agents 2 and 3

Meeting Prep is the next highest-ROI agent - AEs love it because it eliminates the pre-call scramble. Add one outreach agent (Sequence Builder or Personalized Icebreaker) and establish a human-in-the-loop review cadence. Every outreach the agent generates should be reviewed by a human for the first 2-3 weeks. Once accuracy stabilizes above 90%, reduce review to spot-checks.
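That review cadence can be written down as an explicit rule so nobody drops review early. The sketch assumes "stabilizes" means the last two weekly accuracy scores are above 90% and that full review lasts at least three weeks; both are illustrative choices, not a standard.

```python
def review_mode(weeks_live: int, weekly_accuracy: list[float],
                min_weeks: int = 3, threshold: float = 0.90, stable_weeks: int = 2) -> str:
    """'full' review early on; 'spot_check' once accuracy is stable above threshold."""
    if weeks_live < min_weeks:
        return "full"
    recent = weekly_accuracy[-stable_weeks:]
    if len(recent) == stable_weeks and all(a > threshold for a in recent):
        return "spot_check"
    return "full"
```

Note that a single bad week sends the agent back to full review, which is the conservative default you want while trust is still being built.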

The key lesson from practitioners who've done this 100+ times: start with repetitive tasks, not complex ones. "Where's my order" type queries, not "negotiate a custom enterprise deal." The boring stuff is where agents shine.

Weeks 9-12 - Introduce Multi-Agent Coordination

Now - and only now - connect agents via shared memory or an orchestrator. The enrichment agent feeds the ICP scorer, which feeds the sequence builder. Monitor for error amplification. If accuracy drops when agents start coordinating, you've hit the "bag of agents" problem - step back and add verification layers.
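A simple way to monitor for error amplification is to score each agent on the same hand-checked sample before and after chaining, then flag any agent whose accuracy dropped by more than a tolerance. The 5-point tolerance and agent names below are hypothetical.

```python
def amplification_alerts(baseline: dict, coordinated: dict, tolerance: float = 0.05) -> list[str]:
    """Flag agents whose accuracy fell by more than `tolerance` after chaining."""
    return [
        agent for agent, before in baseline.items()
        if agent in coordinated and before - coordinated[agent] > tolerance
    ]


before = {"enrichment": 0.96, "icp_scorer": 0.91, "sequence_builder": 0.88}
after = {"enrichment": 0.95, "icp_scorer": 0.78, "sequence_builder": 0.86}
print(amplification_alerts(before, after))
```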

Budget 15-20 hours per week for agent management. This isn't a "set it and forget it" deployment. SaaStr's human managers spend this much time each, and they're running one of the most sophisticated multi-agent sales playbooks in the world. Your agents need a manager just like your reps do.

Change Management - The Part Everyone Skips

If your sales team doesn't understand what the agents do, they'll either ignore the outputs or actively sabotage the workflow. I've seen an e-commerce team deploy a customer support agent that reps quietly routed around because nobody explained what it did or why.

Brief the team early. Show them the wins. Make it clear that agents handle the admin work so reps can focus on selling - not that agents are coming for their jobs. The human side is where most integration friction lives, and no amount of technical sophistication fixes a team that doesn't trust the tools.

FAQ

What is a multi-agent sales playbook?

A structured framework where multiple specialized AI agents handle distinct sales pipeline stages - prospecting, outreach, meeting prep, proposals, CRM updates - coordinated through an orchestration layer with defined inputs, outputs, and human-in-the-loop checkpoints.

How many AI agents does a sales team actually need?

Start with 2-3: lead enrichment, CRM auto-update, and meeting prep. SaaStr runs 20+ agents but spends $500K/year with dedicated managers. Prove ROI with single-purpose agents before scaling to multi-agent orchestration.

Why do most multi-agent AI projects fail?

Over 40% will be canceled by end of 2027 per Gartner. Top causes: specification errors (42%), inter-agent misalignment (37%), and verification failures (21%). Dirty CRM data and unrealistic vendor promises accelerate failure dramatically.

What data quality do AI sales agents need?

Agents require verified emails at 98%+ accuracy, current job titles, and normalized company data refreshed every 7 days. Most competitors refresh every 6 weeks, while B2B data decays by roughly 30% a year, so stale records pile up between refreshes.

How much does a multi-agent sales stack cost?

SMB SaaS tools run $50-500/month, enterprise platforms $5-20K/month, custom builds $100-300K upfront plus maintenance. A human SDR costs $100-130K/year; an AI SDR equivalent runs $2-8K/year for basic tools or $50-100K for enterprise packages with dedicated support.