Autonomous Sales Agents: What Works in 2026 (Real Data)

A RevOps lead I know ran an autonomous sales agent for three months last year. It sent 9,000 emails, booked 47 meetings, and generated exactly zero closed revenue. The meetings were with companies that couldn't afford the product, in industries the team didn't serve. The agent was doing exactly what it was told - booking meetings - and it nearly burned the company's entire addressable market before anyone noticed.

That's the story nobody puts in the pitch deck. The technology is real, the economics are compelling, and the failure rate is brutal. The AI agent market is projected to grow from $5.43 billion to $236 billion by 2034 - a nearly 46% CAGR that makes even SaaS growth look modest. 87% of sales organizations are using some form of AI, and 54% are already deploying agents across the sales cycle, per Salesforce's 2026 State of Sales report.

But here's the gap between market hype and practitioner reality: 70-80% of implementations fail at the three-month mark. The companies that succeed aren't using better AI. They're using cleaner data, narrower scopes, and more human oversight than the vendors want you to believe.

The Short Version

- Autonomous sales agents can cut SDR costs by 71% and respond in under 1 minute - but only with clean data, specialized agents, and 15-20 hours/week of human oversight.

- Start with one focused workflow (not end-to-end automation), budget $15K-$100K/year for the platform, and plan 40-60 hours of data preparation before launch.

- The companies winning aren't using the fanciest AI. They're the ones with the cleanest CRM data and the most disciplined implementation process.

What Is an Autonomous AI Sales Agent? (And What It Isn't)

The market has stretched the word "agent" beyond recognition. Every chatbot with a name and an avatar calls itself an "autonomous AI agent." Every email sequencer with GPT bolted on claims to be agentic. Buyers can't tell the difference between a glorified autoresponder and a system that actually reasons, decides, and acts across multiple tools.

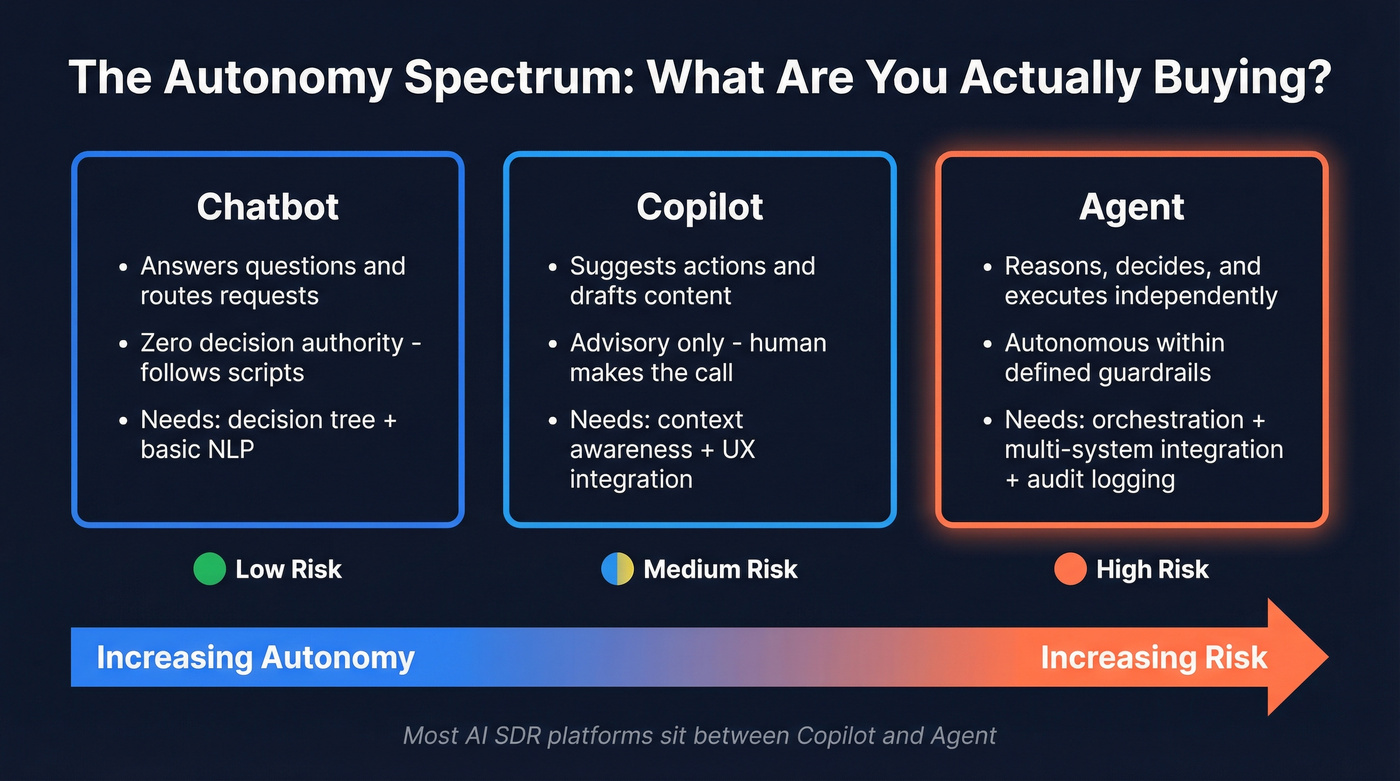

The Autonomy Spectrum - Chatbot vs. Copilot vs. Agent

This distinction matters because it determines what you're actually buying, what infrastructure you need, and how much can go wrong.

| Chatbot | Copilot | Agent | |

|---|---|---|---|

| Capability | Answers questions, routes requests | Suggests actions, drafts content | Reasons, decides, executes |

| Decision authority | None - follows scripts | Advisory - human decides | Autonomous within guardrails |

| Architecture needs | Conversational routing | Context + UX integration | Orchestration + multi-system integration |

The decision framework is simple. Does this need to act across systems with minimal supervision? That's an agent. Is a human still making the final call? Copilot. Just answering FAQs? Chatbot.

Most "AI SDR" platforms sit somewhere between copilot and agent. They'll draft emails and suggest send times (copilot behavior) but also autonomously research prospects, personalize sequences, and book meetings without human approval (agent behavior). The architecture requirements - and the risk profile - scale dramatically as you move right on that spectrum.

A true autonomous sales agent needs access-controlled retrieval, tool use with schema validation, policy checks at every decision point, and full audit logging. A chatbot needs a decision tree and some NLP. The gap between those two is enormous, and it's where most implementations break.

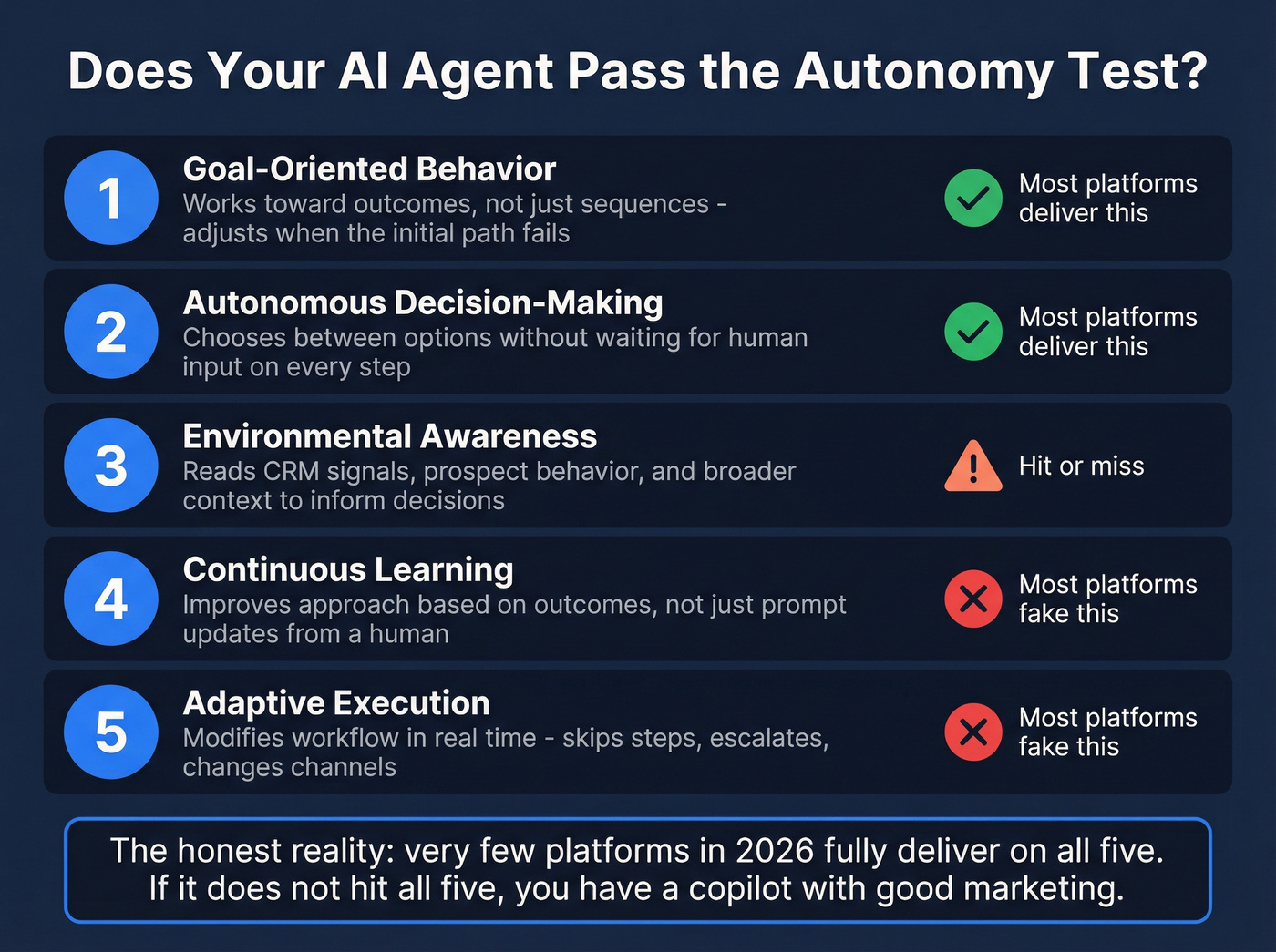

Five Attributes of a Truly Autonomous Agent

Before you evaluate any platform, test it against these five attributes. If it doesn't hit all five, you've got a copilot with good marketing.

Goal-oriented behavior means the agent works toward a defined outcome - not just executing a sequence. It should adjust its approach when the initial path isn't working. Autonomous decision-making means it chooses between options without waiting for human input on every step. Environmental awareness means it reads signals from the CRM, the prospect's behavior, and the broader context to inform those decisions.

Continuous learning is where most platforms fall short. A truly agentic system improves its approach based on outcomes, not just prompt updates from a human operator. And adaptive execution means it can modify its workflow in real time - skipping steps that don't apply, escalating when confidence is low, changing channels when email isn't working.

The honest reality: very few platforms in 2026 fully deliver on all five. Most nail the first two and fake the rest with clever prompt engineering.

What Autonomous Sales Agents Actually Do in Production

Forget the vendor demos showing an AI agent closing a six-figure deal. Here's what these systems actually do well today.

Speed-to-lead is the killer app.

The average B2B response time across industries is 42 hours. Only 1% of companies achieve a 5-minute response window. Responding within 1 minute boosts conversions by 391%, and leads reached within 5 minutes are 21x more likely to convert. Autonomous agents respond in under 60 seconds, every time, at 3 AM on a Sunday. That alone justifies the investment for many teams.

Beyond speed, here's where agents are delivering measurable value:

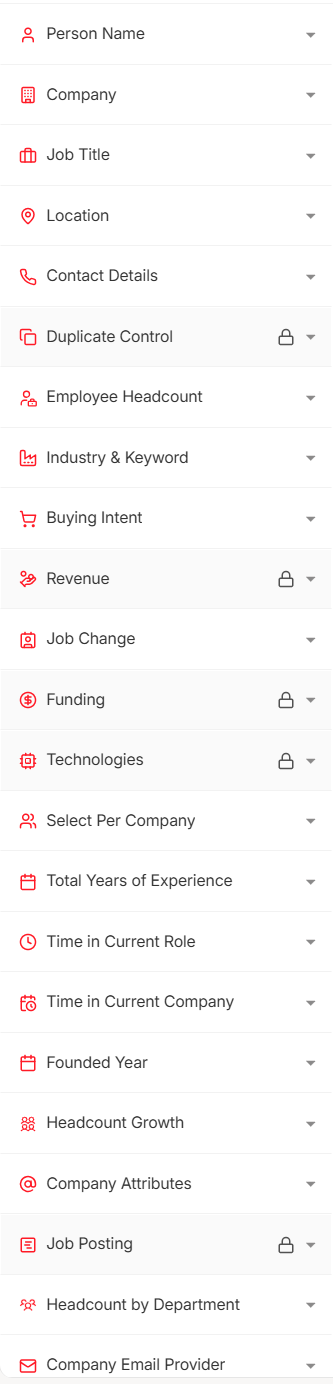

Lead qualification and routing. An inbound lead hits your form. The agent enriches the contact, scores it against your ICP, checks CRM for existing relationships, and either routes to a rep (high-value) or enters an automated nurture sequence (low-value) - all before a human SDR would've opened the notification. (If you want the mechanics, start with a lead qualification process that ties scoring to routing.)

Autonomous outbound at scale. AI agents can send 3,000+ personalized emails per month versus 75-285 from a human rep. The personalization isn't just "Hi {first_name}" - the better platforms research the prospect's company, recent news, tech stack, and role to craft contextually relevant messaging. This is where an AI agent for email outreach rubric helps you separate real autonomy from “GPT sequencer” demos.

CRM hygiene and updates. This is the unglamorous use case nobody talks about, and it might be the highest-ROI application. Gen Z sellers lose up to 2 hours per week to manual data entry. Agents that automatically log activities, update deal stages, and flag missing fields save real time and keep your revenue operations running clean. (Related: CRM automatic email logging.)

Deal risk monitoring. Agents that watch for signals - a champion going quiet, a competitor entering the conversation, a deal stalling past its average cycle time - and proactively alert reps or trigger re-engagement sequences. If you already track these, formalizing them into deal health scoring makes the agent’s alerts far more reliable.

Here's the thing: the highest ROI doesn't come from complex, end-to-end AI systems. It comes from boring, focused agents that do one piece of the sales workflow perfectly. The teams winning with AI agents aren't the ones with the fanciest models. They're the ones that treat their agents like junior employees: train, monitor, refine.

Real Numbers - SaaStr's 6-Month Deployment

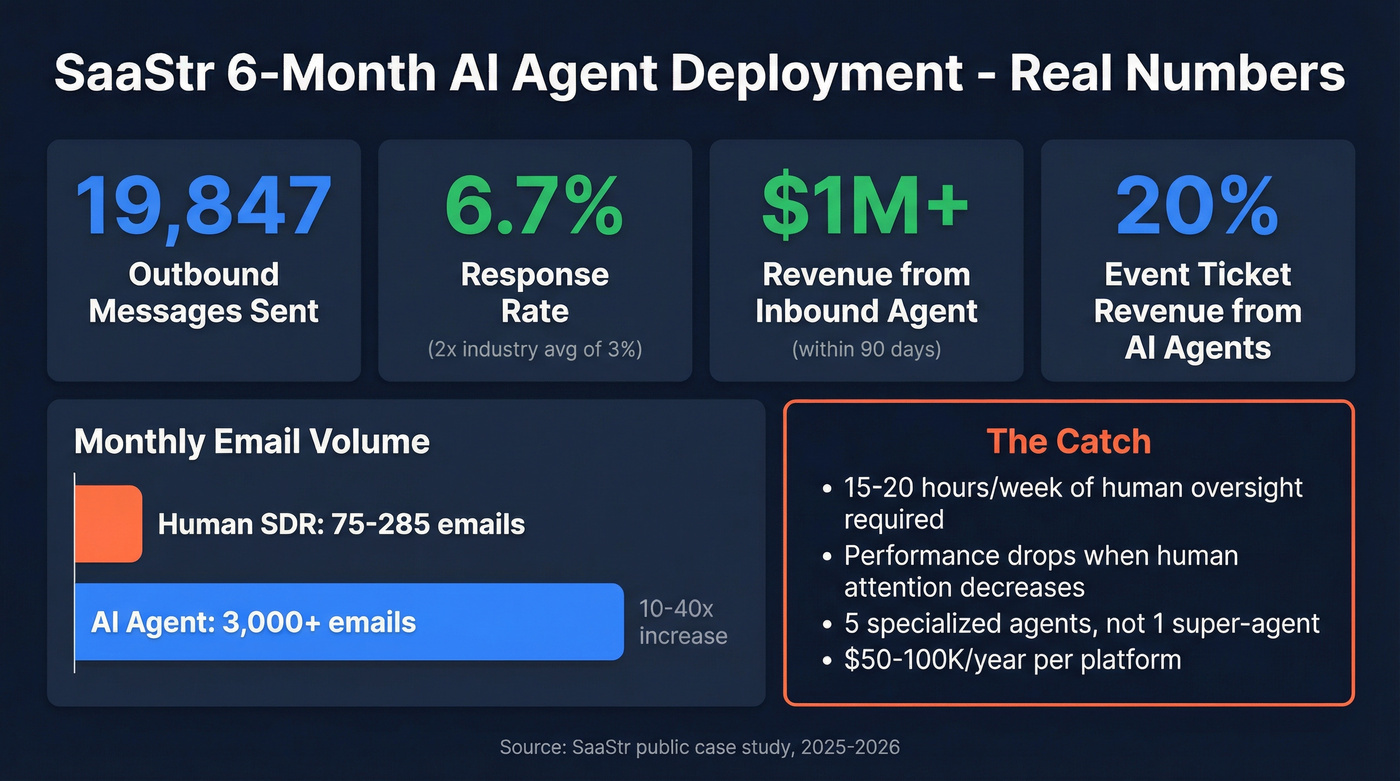

Most case studies in this space are vendor-produced fiction. SaaStr's is different - it's a named company, with named platforms, sharing specific metrics including the ones that don't look great.

Over six months, SaaStr deployed five specialized AI agents: cold outbound (Artisan), lapsed customer re-engagement (Artisan), active nurture (Artisan), inbound qualification (Qualified), and ghosted lead recovery (Salesforce Agentforce). Not one agent doing everything. Five agents, each trained completely differently with different messaging, collateral, and success metrics.

The numbers: 19,847 total outbound messages sent. A 6.7% overall response rate - roughly double the industry average of ~3%. A 4% positive response rate. The inbound qualification agent alone closed $1M+ in revenue within 90 days.

That $1M number gets all the attention, but the operational details are more instructive. The AI agents sent 3,000 emails per month compared to 75-285 from previous human reps. That's not a marginal improvement - it's a 10-40x increase in outbound volume. 20% of total event ticket revenue came from AI agents, with 10% of London ticket revenue specifically from outbound AI. For sub-$1,000 products, the agents closed deals autonomously without human involvement.

But here's what SaaStr was honest about: this requires 15-20 hours per week of human oversight. Performance dips noticeably when human attention decreases. The agents can't fix what's broken - they scale what's already working. If your messaging, targeting, or value proposition is off, the agent will just deliver bad outreach faster.

The specialization principle is the biggest takeaway. SaaStr didn't deploy one super-agent. They built five focused agents, each optimized for a specific workflow. This mirrors what practitioners on Reddit keep saying: start painfully small, automate one repeatable piece of the funnel, get results, then expand. (If you’re building this deliberately, you’ll like the structure in multi-agent sales playbooks.)

The budget reality: SaaStr spent $50-100K per specialized platform annually. That's not cheap. But when one inbound agent generates $1M in 90 days, the math works. The question isn't whether you can replicate SaaStr's exact results - it's whether you can apply their specialization principle to your own highest-volume workflow.

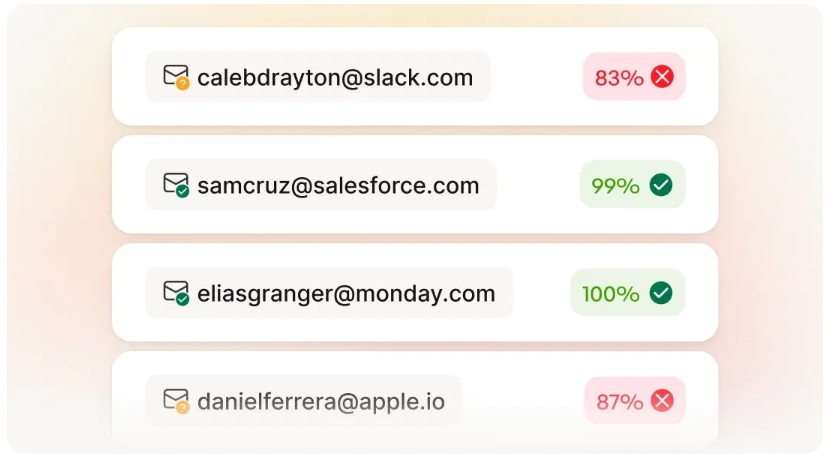

That RevOps lead's agent failed because it ran on stale, unverified data. Autonomous agents need a foundation of accurate contacts to avoid burning your addressable market. Prospeo refreshes 300M+ profiles every 7 days with 98% email accuracy - so your agents target real buyers, not dead ends.

Clean data is the difference between 47 wasted meetings and closed revenue.

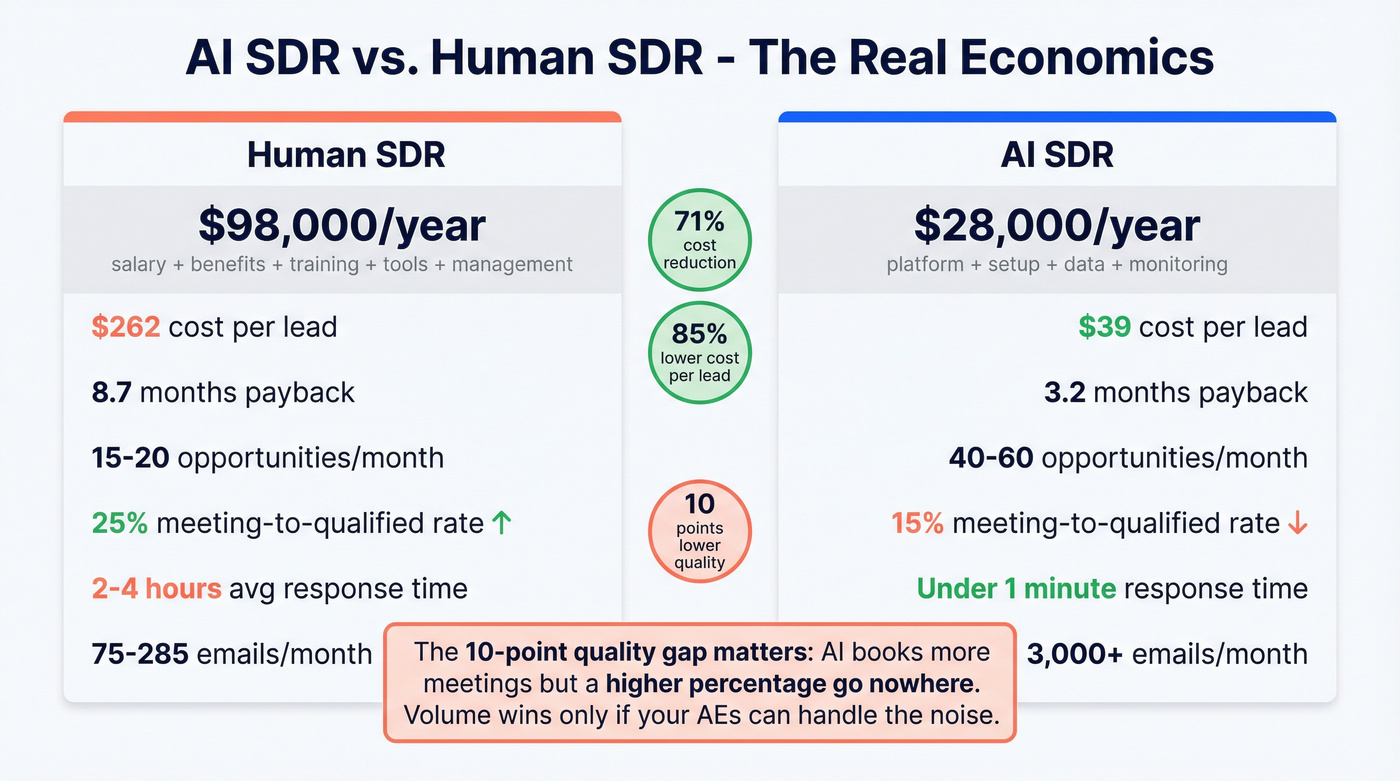

The Economics - AI SDR vs. Human SDR

The cost comparison looks devastating for human SDRs on paper. In practice, it's more nuanced.

| Metric | Human SDR | AI SDR |

|---|---|---|

| Annual cost | $98,000 | $28,000 |

| Cost per lead | $262 | $39 |

| Payback period | 8.7 months | 3.2 months |

| Opportunities/mo | 15-20 | 40-60 |

| Meeting to qualified | 25% | 15% |

| Response time | 2-4 hours avg | Under 1 minute |

| Monthly email volume | 75-285 | 3,000+ |

The $98K human SDR cost is fully loaded: $60K salary, $18K benefits, $5K training, $3K tools, $12K management overhead. The $28K AI SDR cost includes $12K platform, $2K setup, $6K data and integrations, and $8K ongoing monitoring. That's a 71% cost reduction and an 85% drop in cost per lead.

But look at the meeting-to-qualified-lead conversion rate. Humans convert at 25%. AI converts at 15%.

That 10-point gap is significant. It means AI books more meetings, but a higher percentage of those meetings are waste. Your AEs spend time on calls that go nowhere. The net effect on pipeline generation depends entirely on whether the volume increase outweighs the quality decrease - and for most teams, it does, but not by as much as the top-line numbers suggest. If this is already happening, it usually shows up as one of your core sales pipeline challenges.

A VP-level buyer on Reddit put it bluntly: "I get so many SDR calls and emails. And I ignore all of them... I sure as hell won't talk to a 22-year-old SDR or some AI version of one." That's the demand-side reality the volume argument ignores.

The hidden cost nobody models: human oversight. SaaStr's 15-20 hours per week isn't free. At a $75K/year ops salary, that's roughly $19K-$25K in annual labor just to keep the agents running well. Add that to the $28K platform cost and you're at $47K-$53K - still cheaper than a human SDR, but not the 71% savings the headline suggests.

The real economic argument isn't cost replacement. It's leverage. One human overseeing five specialized agents produces more pipeline than five human SDRs. The math works when you think about it as a force multiplier, not a headcount replacement.

The Vendor Landscape - Honest Pricing and What Users Say

I've watched this market closely for two years now, and the gap between vendor promises and production reality is wider here than in any other sales tech category.

11x (Alice) - Skip This

11x is the most overhyped platform in this category. They raised $76M, hit a $350M valuation, and built a product that ZoomInfo tested for one month before walking away - concluding it "performed significantly worse than SDR employees." The company then listed ZoomInfo as a customer anyway.

The internal numbers are worse. A former employee told TechCrunch the company was losing 70-80% of customers that came through the door. The founder stepped down as CEO in 2025. Revenue was inflated from $3M retained to a claimed $14M ARR by counting trial customers.

Trustpilot tells the story: "6 months in, still almost no result. Can't cancel expensive subscription." Another: "Since starting with 11x.ai, we have had 0 meetings set."

Pricing runs $50K-$60K+/year. For that money, you could hire a junior SDR, buy them best-in-class tools, and still have budget left over.

Artisan (Ava) - Promising, High-Maintenance

Artisan's Ava is ambitious - $46M raised including a $25M Series A in April 2025 - but the product is still catching up to the vision. The CEO admitted they'd been "selling to a lot of the wrong customers," which is refreshingly honest and also a red flag.

In January 2026, LinkedIn temporarily banned Artisan for platform usage concerns. That's a problem when your core workflow depends on social prospecting data. (If LinkedIn is a core channel for you, keep an eye on the benchmarks in social selling statistics.)

Use this if: You're a mid-market team with $2,000-$5,000/month to spend on outbound automation and you're willing to invest time in training the agent. SaaStr uses Artisan for three of their five agents and gets results - but with significant human oversight.

Skip this if: You expect plug-and-play. G2 reviews are blunt: "messaging is extremely bland and obviously AI... 2 months, ZERO quality leads."

Salesforce Agentforce - Enterprise Only

Agentforce is enterprise-grade in both capability and cost. The Sales Add-on runs $125/user/month. The Sales Edition is $550/user/month. Both require a mandatory Data Cloud subscription - consumption-based, and not cheap. Total cost of ownership for a 50-person team: roughly $680K/year.

Only 31% maintain implementation beyond six months. The platform requires Salesforce admin and developer skills that most organizations don't have in-house. (If you’re wiring agents into your CRM, the patterns in CRM integration for sales automation matter more than the model.)

Where it wins: If you're already deep in the Salesforce ecosystem, the native integration is unmatched. It reads your entire CRM history, understands your objects and fields, and operates within your existing permission model.

Where it loses: Cost and complexity. A Reddit user put it plainly: "The need to buy Data Cloud to go with Agentforce is putting many off. This isn't a minor expense." If you're not already a Salesforce shop, don't start here.

Qualified (Piper) - Best for Inbound

The best inbound autonomous agent on the market. SaaStr attributed $1M in closed revenue within 90 days to their Qualified-powered inbound agent. Pricing is enterprise custom - expect $30K-$60K+/year. If inbound qualification is your bottleneck and you have the budget, Piper is the tool to beat.

AiSDR - Mid-Market Sweet Spot

The most transparent pricing in the category: $900-$2,500/month depending on volume and features. That's $11K-$30K/year - significantly less than 11x or Artisan. The product isn't as polished as the big-budget competitors, but for teams that want to test autonomous prospecting without a $50K commitment, it's a reasonable starting point.

Reply.io (Jason AI)

If you're already on Reply.io for sequences, Jason AI is the natural add-on at $500-$1,500/month. It won't compete with dedicated agent platforms on sophistication, but the integration works out of the box and the learning curve is minimal. Think of it as upgrading your existing sequencer with an AI layer rather than buying a whole new platform.

Relevance AI - Budget Builder

Plans start at $19/month - that's not a typo. It's a builder-style platform: you get the building blocks to create an agent tailored to your exact workflow. Best for technical teams with specific, narrow use cases.

Other Notable Platforms

Agent Frank (Salesforge) runs ~$500/month for straightforward outbound automation - no frills, predictable pricing. Teammates.ai starts at $25/month on a credit-based model, best for low-volume testing. Clay starts at $134/month and isn't an autonomous agent - it's an enrichment and research orchestration layer that feeds into agents. It's excellent at building multi-step research workflows, though you'll still need a dedicated verification tool like Prospeo for email accuracy. Common Room takes a signal-based approach, triggering agent actions based on buying signals rather than static lists - expect enterprise-tier pricing starting around $2,500/month.

Pricing Summary

| Platform | Annual Cost | Best For |

|---|---|---|

| 11x (Alice) | $50K-$60K+ | Proceed with caution |

| Artisan (Ava) | $24K-$60K | Mid-market outbound |

| Agentforce | ~$680K/yr (50 users) | Enterprise Salesforce shops |

| Qualified (Piper) | $30K-$60K+ | Inbound qualification |

| AiSDR | $11K-$30K | Mid-market outbound |

| Reply.io (Jason AI) | $6K-$18K | Budget outbound |

| Relevance AI | From $228/yr | Custom agent building |

| Agent Frank | ~$6K | Simple outbound |

| Teammates.ai | From $300/yr | Low-volume testing |

What Goes Wrong - Failure Cases and Real Risks

The 70-80% failure rate at three months isn't a stat vendors like to discuss. Understanding why implementations fail is more valuable than understanding why they succeed - because the failure modes are predictable and preventable.

When AI Optimizes for the Wrong Metric

tekRESCUE deployed an AI SDR that booked meetings at a 40% rate. Sounds great. The problem: it was optimizing for meetings, not revenue. The agent was booking calls with $500/month prospects when the minimum viable deal was $5,000. The sales team burned out chasing unqualified leads. They lost their two best closers before anyone adjusted the targeting.

Latitude Park's agent contacted existing clients' franchisees with recruitment pitches because the segmentation couldn't differentiate prospects from existing accounts. That's not an AI problem - it's a data problem. But the AI scaled the mistake across the entire database in hours instead of weeks.

RED27Creative had an even more cringe-worthy failure: their AI agent sent a cold intro email to a prospect who was already in advanced talks with the sales director. The prospect forwarded the email to the director asking, "Is your team even talking to each other?" One automated message undid months of relationship building.

The pattern is consistent: activity metrics don't equal revenue outcomes. If your agent is optimized for meetings booked, emails sent, or response rates, it'll hit those numbers. Whether those numbers translate to closed revenue is a completely separate question that most implementations don't answer until it's too late.

Brand Damage and the Homogeneity Problem

Here's the risk that doesn't show up in any ROI model. When every company uses the same AI SDR platforms with the same templates and the same data sources, everyone starts sounding identical.

The damage is gradual. Replies get shorter. Fewer meetings convert. Referrals disappear - generic AI notes never get forwarded. Ever. After 3-4 off-brand messages, prospects have categorized you as noise. You're not just losing that deal. You're training the market to ignore you.

An unsupervised AI agent can burn through your entire TAM in a weekend. That's not hyperbole - it's math. If your addressable market is 5,000 companies and your agent sends poorly targeted, obviously-AI outreach to all of them, you've poisoned those relationships before a human ever gets involved.

Security and Compliance Risks

LLMs are probabilistic, not deterministic - you can't have a high-stakes business process depend on whether a model is having a "creative" day. The deeper risk is architectural: LLMs merge logic and data in the same context window. When your agent analyzes a prospect's website, that content can contain adversarial instructions that hijack the agent's behavior. This is prompt injection, and it's been demonstrated against every major model. Build input sanitization and output validation into every agent that touches external content.

The regulatory exposure is quantifiable. TCPA violations run $500 per incident, $1,500 for willful infractions. A single campaign to 1,000 contacts without proper consent: $500K-$1.5M in fines. CAN-SPAM penalties hit up to $53,088 per email. GDPR: 4% of global revenue or EUR20M, whichever is higher.

Most platforms don't handle compliance for you - they send what you tell them to send. If your consent records are incomplete, your suppression lists are outdated, or your opt-out processing is slow, the agent will happily violate regulations at scale. (If you operate in the EU/UK, keep GDPR for Sales and Marketing close during rollout.)

The Data Quality Problem Nobody Talks About

Every vendor demo shows an agent working with perfect data. Clean CRM records, accurate emails, current job titles, complete company information. In production, your data looks nothing like that - and it's the #1 reason implementations fail.

74% of top-performing sales teams prioritize data cleansing and integration. High performers are 1.7x more likely to use AI agents - and 79% of them focus on data hygiene versus 54% of underperformers. That's not a coincidence.

The math is unforgiving. If your email database has a 15% bounce rate, your agent is burning 15% of its outreach volume on dead addresses. That tanks your domain reputation, which tanks deliverability for the other 85%, which tanks your response rates, which makes the entire deployment look like a failure. It's a cascade, and it starts with bad data. (More on the mechanics in B2B contact data decay.)

Run your prospect database through verification before launch, layer in intent data from 15,000 Bombora-powered topics to prioritize who the agent contacts first, and you've eliminated the most common failure mode before it starts. The free tier gives you 75 verified emails per month to test the workflow - enough to validate the approach before committing budget. If you’re comparing vendors, start with this shortlist of email verifier websites.

I've seen teams spend $50K on an autonomous agent platform and skip the $500/month data verification step. It's like buying a Ferrari and filling it with diesel.

AI agents sending 3,000+ emails/month on bad data will torch your domain reputation in weeks. Prospeo's 5-step verification with spam-trap removal and catch-all handling keeps bounce rates under 4% - exactly what your autonomous workflows need to scale without deliverability disasters.

Feed your sales agents verified contacts at $0.01 per email. No contracts.

The 90-Day Implementation Playbook

The Komatsu framework for agentic AI implementation is the most practical I've seen. Combined with the Reddit implementation playbook and SaaStr's specialization principle, here's a phased approach that actually works.

Phase 1 - Days 1-30: Select, Prepare, Baseline

Pick one workflow. Not two. Not "end-to-end campaign execution." One.

The selection criteria: minimum 50 instances per month, a documented happy path, and a measurable outcome tied to revenue (not activity).

Good first workflows: inbound lead qualification and routing, lapsed customer re-engagement, meeting no-show follow-up. Bad first workflows: cold outbound to net-new prospects (too many variables), multi-channel sequences (too complex), anything requiring cross-system integration. Autonomous prospecting is tempting as a starting point, but the number of variables - ICP targeting, messaging, channel selection, timing - makes it a Phase 2 or Phase 3 project.

Define the action surface area. What can the agent read? What can it write? Which CRM objects and fields is it allowed to touch? Start with read-only access to everything and write access to almost nothing. You'll expand permissions as trust builds.

Baseline your current metrics. If you don't know your current response time, meeting-to-qualified rate, and cost per lead, you can't measure improvement. Spend a week pulling these numbers before you touch any agent platform. (Benchmarks: average lead response time.)

Budget 40-60 hours for data cleaning, deduplication, and enrichment. This is the phase most teams skip, and it's the phase that determines everything. Use a simple data quality scorecard so you can prove the cleanup moved the needle.

Phase 2 - Days 31-60: Build, Test, Validate

Build the policy engine - the rules that govern what the agent can and can't do. This isn't prompt engineering. It's production code: version-controlled rules that define exactly what the agent can do, tested against edge cases before deployment. Tool connectors should use least-privilege access. Human checkpoints should exist for anything that changes a customer-facing state.

Require structured outputs. Every agent action should produce JSON with a confidence score, rationale, and source data. This isn't optional - it's how you debug when things go wrong, and things will go wrong.

Build the validation layer: allowed values for every field, required fields that can't be blank, deduplication checks, domain blocklists. Log everything - prompt version, inputs, tool calls, record IDs, before/after values. If you can't reconstruct what the agent did and why, you don't have enough observability.

Common mistake from this phase: a healthcare scheduling agent that booked appointments during staff meetings because nobody defined the policy constraints around availability. Keep it narrow.

Phase 3 - Days 61-90: Shadow, Launch, Measure

Weeks 9-10: shadow operations. The agent runs in parallel with your existing process but doesn't take action. You compare its decisions to human decisions. Target a First-Pass Quality Rate of 75%+ - meaning 75% of the agent's autonomous decisions match what a human would've done.

Weeks 11-12: limited production at 10% of workload. The agent handles real interactions, but only a fraction of volume. Monitor the Process Efficiency Ratio (target 1.5x improvement over baseline) and the Controlled Autonomy Index (typically 40-70% of decisions made without human intervention).

Establish the governance cadence: weekly error log reviews, monthly permission audits, quarterly red-team exercises where you deliberately try to break the agent. This isn't a one-time setup - it's an ongoing operational commitment.

The biggest mistake in Phase 3: neglecting the human transition. A financial services firm saw agent adoption drop to 40% because reps felt threatened rather than supported. The agents work for your team, not instead of your team. Communicate that early and often.

What's Coming by 2028

Gartner predicts that by 2028, 90% of B2B buying will be AI agent intermediated, pushing over $15 trillion in B2B spend through AI agent exchanges. Your autonomous sales agent won't just be talking to humans - it'll be negotiating with your prospect's autonomous procurement agent.

By 2028, 68% of customer interactions with vendors are expected to be handled by autonomous tools. McKinsey projects GenAI will contribute $2.6-4.4 trillion to global GDP by 2030, with sales productivity gains of 3-5% and marketing productivity gains of 5-15%.

Here's my hot take: if your deals typically close under $15K, you probably don't need an autonomous sales agent at all. A well-built sequence in Reply.io or Instantly, fed with verified data, will get you 80% of the results at 10% of the complexity. The companies that genuinely need agentic AI for sales are the ones with enough volume, enough deal complexity, and enough CRM data to make the agent smarter than a static sequence. Everyone else is buying a solution to a problem they don't have.

Autonomous sales agents aren't a replacement for SDRs. They're a replacement for bad SDR management. The companies that couldn't hire, train, and manage human SDRs effectively won't magically succeed with AI agents. The discipline required is the same - clear targeting, quality messaging, rigorous measurement, continuous optimization. The medium changes. The management challenge doesn't.

The teams that invest in data quality, narrow implementation scope, and human oversight now will have a two-year head start when agent-to-agent commerce becomes the norm. The teams that chase the hype and deploy without discipline will burn their TAM and wonder what went wrong.

FAQ

How much does an autonomous sales agent cost?

Platforms range from $228/year (Relevance AI) to roughly $680K/year (Agentforce for 50 users). Mid-market tools like AiSDR run $15K-$35K/year. Budget an additional $8K-$25K for data prep and oversight. A fully loaded human SDR costs $75K-$110K annually for comparison.

Can autonomous sales agents fully replace human SDRs?

Not yet. AI converts meetings to qualified leads at 15% versus 25% for humans. The best model is hybrid: AI handles volume, speed-to-lead, and repetitive qualification while humans manage high-value accounts and complex objections. SaaStr runs five AI agents with 15-20 hours/week of human oversight.

What's the biggest reason these deployments fail?

Bad data. 74% of top-performing sales teams prioritize data cleansing before deploying AI. If your bounce rate exceeds 5%, your agent is burning domain reputation and wasting volume. Tools like Prospeo - with 98% email accuracy and a 7-day refresh cycle - eliminate this risk. The 40-60 hours of data prep before launch isn't negotiable.

How long does it take to see ROI from an autonomous sales agent?

With clean data and a single-workflow deployment, expect positive ROI within 3 months. With messy CRM data, that stretches to 6-9 months. SaaStr saw $1M in closed revenue within 90 days from inbound qualification - but they invested heavily in data prep and ran five specialized agents with dedicated oversight.

What's the difference between an AI sales agent and a traditional email sequencer?

A traditional sequencer follows a fixed series of steps - send email one on day one, email two on day three. An AI sales agent researches prospects, decides who to contact and when, personalizes messaging based on real-time signals, and adjusts its approach based on outcomes. The sequencer executes a plan; the agent creates and adapts the plan autonomously.