=== CURRENT ARTICLE (slug: unified-data-architecture-revenue) ===

Unified Data Architecture Revenue: Blueprint, Controls, and ROI (2026)

Revenue teams don't lose trust in data because a dashboard is ugly. They lose trust because the numbers change after close, and nobody can explain why.

Most "unified data" content is written for product analytics. Revenue's different: Quote-to-Cash has approvals, amendments, credits, service periods, and cash timing. Miss any of that and you'll ship reports that look right while money leaks between Quote -> Order -> Invoice.

We've tested this pattern across RevOps + Finance orgs that were tired of the monthly "which ARR is real?" argument. The fix isn't another dashboard. It's a revenue integrity system with a data backbone.

What "unified data architecture revenue" means (and what it doesn't)

Search results for "unified data architecture" usually fall into two buckets.

Bucket one: platform architecture. Lakehouse vs warehouse, ingestion patterns, governance, "unified data platforms." Useful, but generic.

Bucket two: what RevOps and Finance actually need. A unified revenue architecture that keeps bookings, billings, revenue, and retention metrics consistent across CRM, CPQ, billing, and the GL.

A unified revenue data architecture means you can answer questions like:

- "What's ARR right now?" and get one number.

- "Which opportunities became invoices?" and trace the chain.

- "Why did forecast miss?" and see whether it was pipeline, conversion, pricing, churn, or invoicing timing.

What it doesn't mean:

- It's not "a single database for everything." Keep systems of record where they belong (CRM for pipeline, billing for invoices, ERP for accounting) and still unify the model and metrics.

- It's not "company revenue" advice. A lot of off-intent results are about increasing revenue, not architecting revenue data.

- It's not "buy a CDP and call it done." CDPs help with identity and events, but they don't solve Quote-to-Cash reconciliation or metric governance out of the box.

The goal's simple: one canonical revenue model, one semantic definition of metrics, and automated controls that catch drift before Finance finds it in close week.

What you need (quick version)

If you do nothing else, do these three first:

1) Lock the revenue entity model: Pick canonical objects (Account, Opportunity, Quote, Order, Invoice, Contract/Subscription, etc.) and define relationships and IDs. If you don't, every downstream "unified" dataset becomes a new interpretation.

2) Create semantic metrics (define once, reuse everywhere): ARR, NRR, pipeline, bookings, billings, churn, expansion, CAC payback - defined in one place with time logic and filters. This is how you stop "two ARR numbers."

3) Automate Quote -> Order -> Invoice reconciliation + monitoring: Don't just sync records. Compare them. Alert on mismatches. Track exceptions with owners.

Then add the rest:

- CDC or near-real-time sync for revenue-critical objects

- A semantic layer that BI and AI both consume

- Reverse ETL to push "activation-ready" segments back into CRM/marketing/support

- Data quality gates (dedupe, identity, SKU mapping, tax fields, currency) (see data quality)

- Audit trail + exception workflows (Finance-grade)

Expect ~$30k-$150k/year in software for a mid-market unified revenue stack, plus implementation.

Revenue-first reference architecture that survives close week

Most "modern data stack" diagrams stop at dashboards. Revenue architecture has one more requirement: it has to survive audit questions and cash questions.

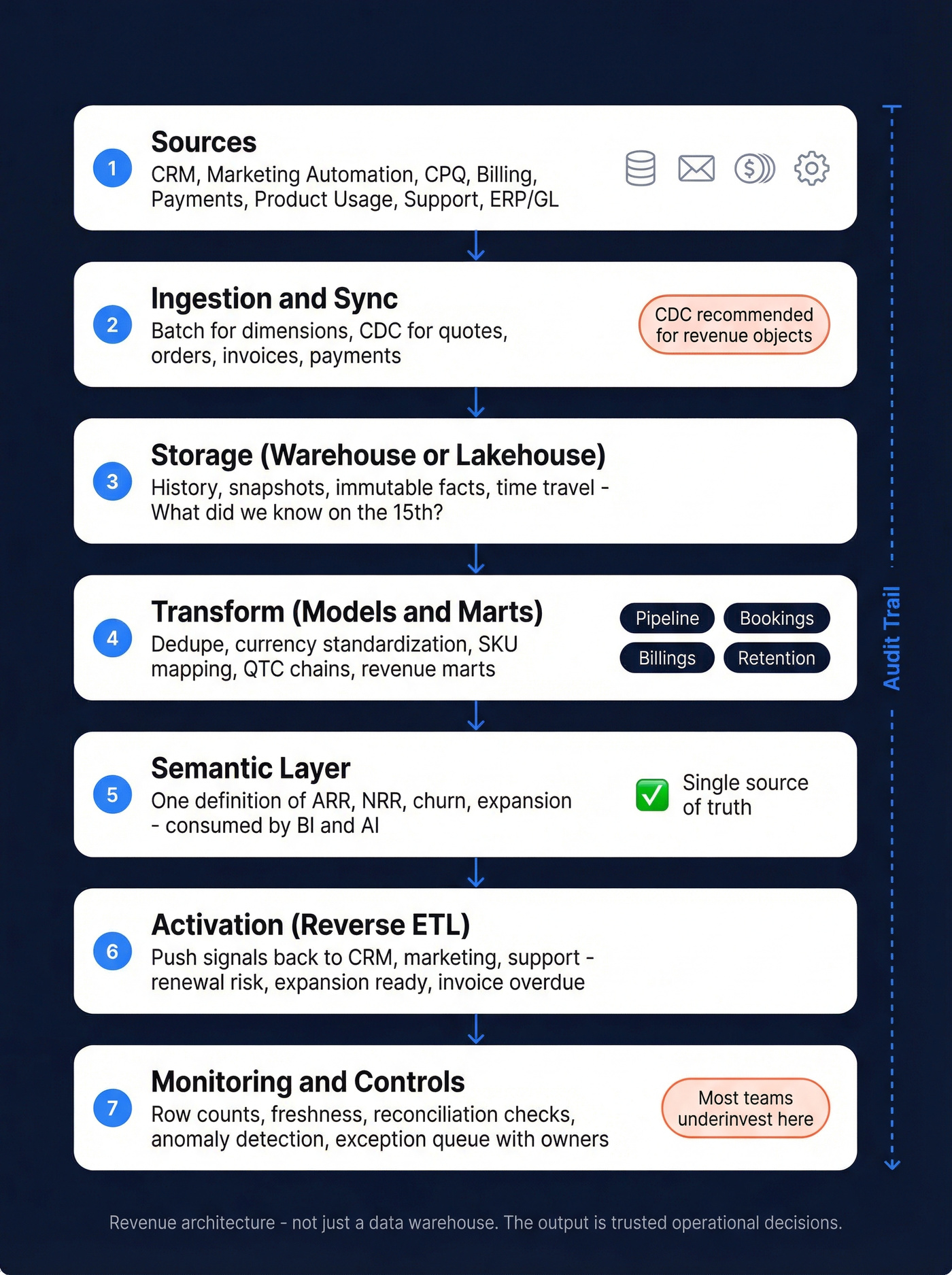

Think in layers, top to bottom:

1) Sources: CRM (pipeline), marketing automation (attribution + lifecycle), CPQ (quotes + pricing), billing/subscription (invoices + subscriptions), payments, product usage, support, and ERP/GL.

2) Ingestion / sync (batch + streaming + CDC): Batch is fine for many dimensions. Revenue objects (quotes, orders, invoices, payments) deserve CDC patterns so changes propagate quickly and you don't "discover" missing invoices a week later.

3) Storage (warehouse/lakehouse): This is where you keep history, snapshots, and immutable facts. Revenue data needs time travel: "What did we know on the 15th?" matters.

4) Transform (models + marts): Clean, dedupe, standardize currencies, map SKUs, build QTC chains, and create revenue marts (pipeline, bookings, billings, retention). If you need KPI layouts, see revenue dashboards.

5) Semantic layer (metrics + entities + joins + policies): This is the "one definition" layer. Without it, every BI tool and every analyst rebuilds ARR.

6) Activation (reverse ETL): Push segments and signals back into operational tools: "renewal risk," "expansion-ready," "invoice overdue," "accounts with usage spike."

7) Monitoring / controls: Row counts, freshness, reconciliation checks, anomaly detection, and an exception queue with owners. This is where most teams underinvest.

You're building data infrastructure for revenue teams, not "a data warehouse." The output is trusted operational decisions in pipeline, billing, and renewals.

Point-in-time strategy (the part that makes it audit-proof)

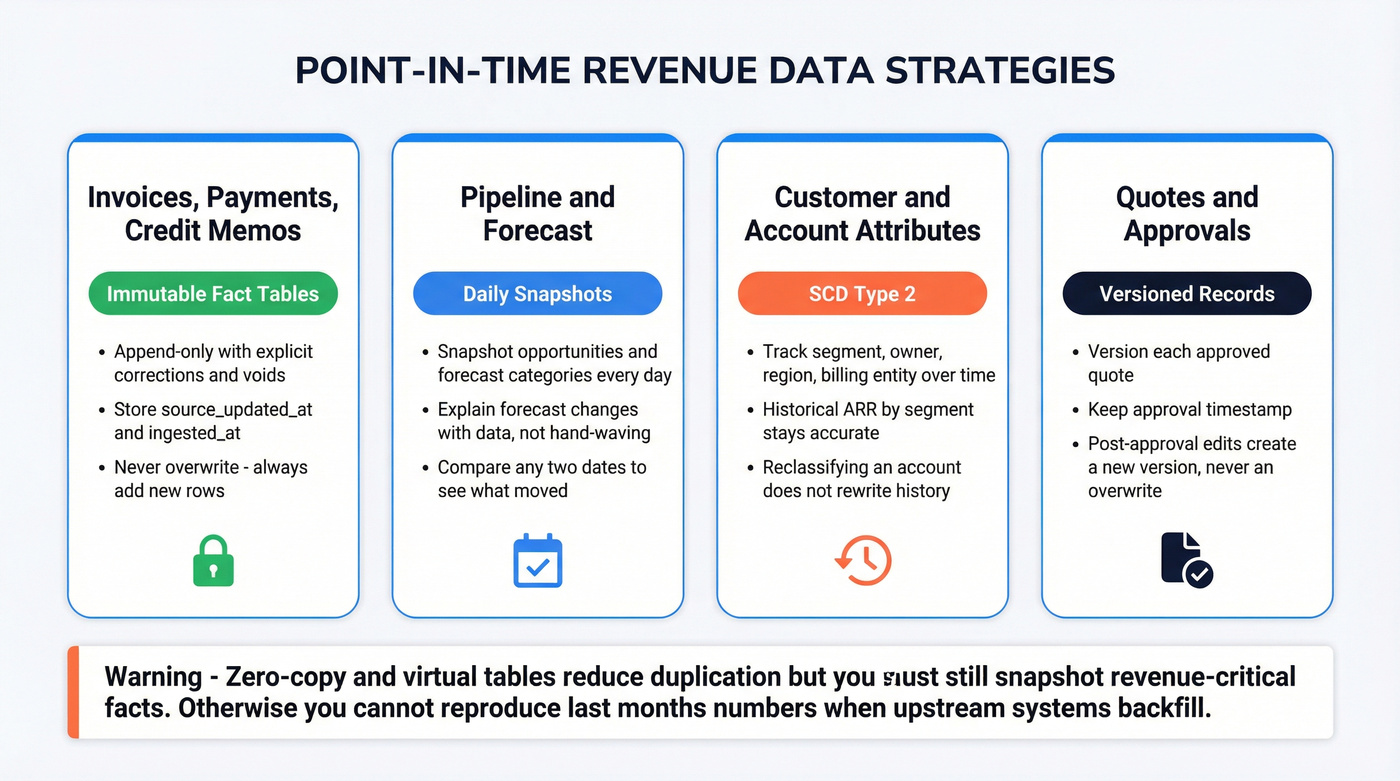

If you want Finance to trust the warehouse, you need point-in-time reconstruction by design:

- Invoices, invoice lines, payments, credit memos: store as immutable fact tables (append-only with explicit corrections/voids), plus `source_updated_at` and `ingested_at`.

- Pipeline: take daily snapshots of opportunities and forecast categories so you can explain forecast changes without hand-waving. (Related: what is a sales forecast)

- Customer/account attributes: use SCD2 for fields like segment, owner, region, and "billing entity" so historical ARR/NRR by segment doesn't rewrite itself every time an account gets reclassified.

- Quotes: version approved quotes and keep the approval timestamp; treat post-approval edits as a new version, not an overwrite.
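To make the SCD2 idea concrete, here is a minimal sketch of an "as of" lookup for an account attribute. The row shape (`valid_from`, `valid_to`) and the names `AccountVersion` and `segment_as_of` are assumptions for illustration, not a prescribed schema.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class AccountVersion:
    """One SCD2 row: an attribute value plus the date range it was valid for."""
    account_id: str
    segment: str
    valid_from: date            # inclusive
    valid_to: Optional[date]    # exclusive; None means "still current"


def segment_as_of(history: list[AccountVersion], account_id: str, as_of: date) -> Optional[str]:
    """Return the segment the account had on `as_of`, or None if no row covers that date."""
    for row in history:
        if row.account_id != account_id:
            continue
        still_open = row.valid_to is None or as_of < row.valid_to
        if row.valid_from <= as_of and still_open:
            return row.segment
    return None


# Hypothetical history: the account was reclassified on 2025-07-01.
history = [
    AccountVersion("acct_1", "SMB", date(2025, 1, 1), date(2025, 7, 1)),
    AccountVersion("acct_1", "Mid-Market", date(2025, 7, 1), None),
]
```

With rows like these, June ARR by segment still counts the account as SMB after the reclassification, which is the behavior Finance expects from historical reports.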

One nuance that trips teams up: "zero-copy" / virtual tables reduce duplication by federating data instead of moving it.

Use virtualization only if you also snapshot the revenue-critical facts. Otherwise you'll never reproduce last month's numbers when an upstream system backfills or "fixes" history.

A simple way to sanity-check your architecture:

| Layer | Revenue question it answers |
|---|---|
| Model | "What's an Order?" |
| Semantic | "What's ARR?" |
| Controls | "Is Invoice tied to Quote?" |
| Activation | "Who should Sales call?" |


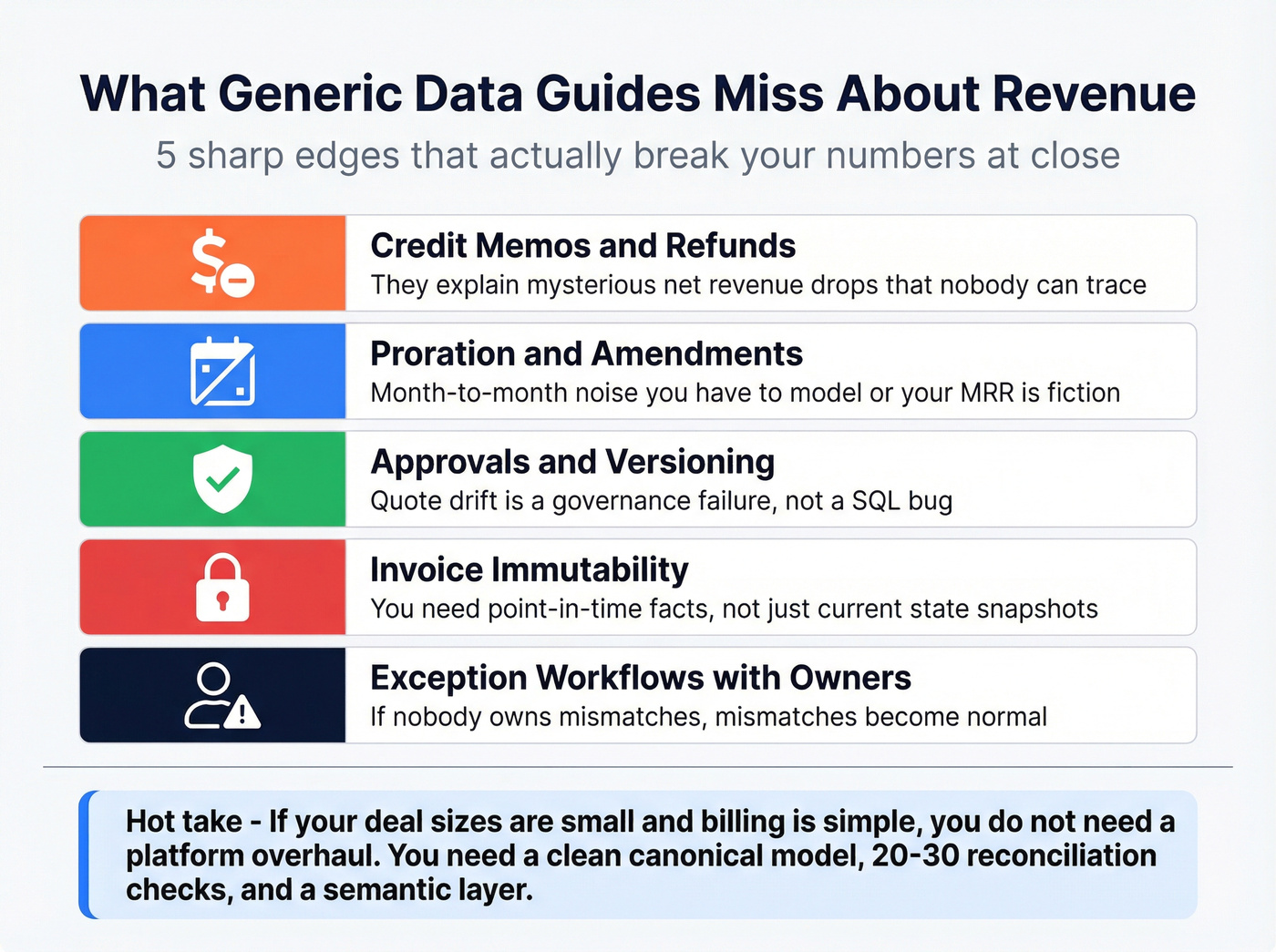

What generic unified data platform guides miss for revenue (the stuff that actually breaks numbers)

Most "unified data" content is written for analytics teams, not close week. Revenue has sharp edges:

- Credit memos and refunds (they explain "mysterious" net drops)

- Proration and amendments (the month-to-month noise you must model)

- Approvals + versioning (quote drift is a governance failure, not a SQL bug)

- Invoice immutability (you need point-in-time facts, not "current state")

- Exception workflows (if nobody owns mismatches, mismatches become "normal")

Hot take: if your average deal size is small and billing's straightforward, you don't need a platform overhaul. You need a clean canonical model, 20-30 reconciliation checks, and a semantic layer that stops metric re-implementation.

Everything else is optional.

Canonical unified revenue data model (the objects that prevent metric drift)

If you want unified revenue reporting, you need a canonical model that survives tool changes. I've seen teams rebuild their "revenue dataset" three times because they started with dashboards instead of entities, and every rebuild created a new definition of "customer" and "ARR."

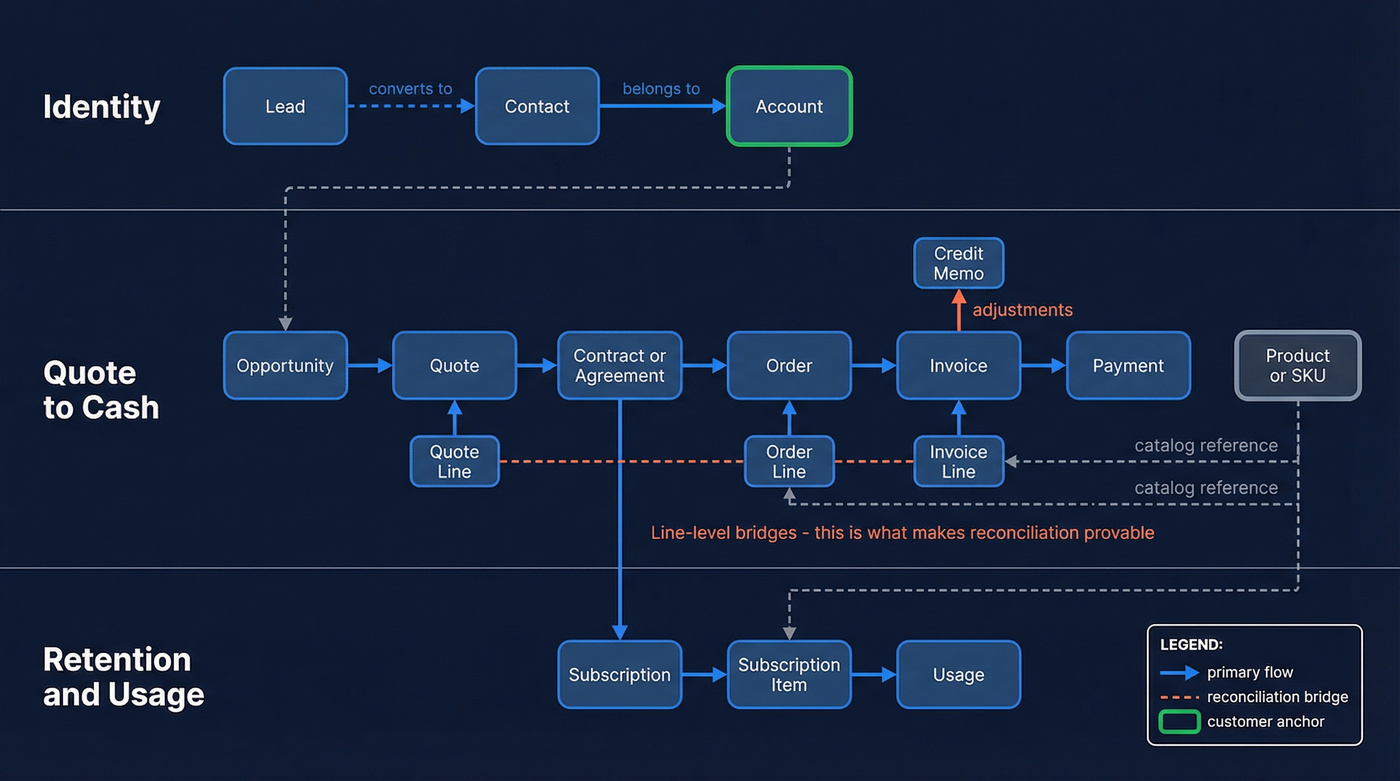

Start with identity, then QTC, then retention.

The canonical entities (and why they exist)

Below is a practical canonical model that maps cleanly to most stacks (Salesforce/HubSpot + CPQ + billing + ERP).

One important fix: you need a bridge entity between what Sales sold and what Billing billed. In many stacks that bridge is a Contract/Agreement plus line-level items (subscription items, order lines). Without those line-level bridges, reconciliation stays hand-wavy, and every "by product" report turns into a debate about mapping.

Canonical revenue model table (scannable version)

| Entity | Primary key | Core relationships | Revenue purpose |
|---|---|---|---|
| Account | `account_id` | 1->many Contacts, Opps, Subs | Customer anchor |
| Contact | `contact_id` | belongs to Account | People + roles |
| Lead | `lead_id` | may convert to Contact | Pre-account identity |
| Opportunity | `opportunity_id` | belongs to Account | Pipeline + bookings |
| Quote | `quote_id` | belongs to Opp/Account | Pricing snapshot |
| Quote Line | `quote_line_id` | belongs to Quote, SKU | Line economics |
| Contract/Agreement | `contract_id` | from Quote; to Sub/Orders | Commercial terms |
| Subscription Item | `subscription_item_id` | belongs to Subscription | Recurring line truth |
| Order | `order_id` | from Quote/Contract | Fulfillment trigger |
| Order Line | `order_line_id` | belongs to Order, SKU | Fulfillment lines |
| Invoice | `invoice_id` | from Order | Billings truth |
| Invoice Line | `invoice_line_id` | belongs to Invoice, SKU | Billings by product |
| Payment | `payment_id` | applies to Invoice | Cash + DSO |
| Subscription | `subscription_id` | Account + Contract | Recurring contract |
| Usage | `usage_id` | Subscription Item + time | UBP + expansion |
| Credit Memo | `credit_memo_id` | references Invoice | Refunds/adjustments |
| Product/SKU | `sku_id` | referenced by lines | Catalog governance |

Line-level bridges are what make "Quote Line <-> Invoice Line" provable. They also let you handle proration, partial shipments, multi-entity billing, and mid-term amendments without inventing logic in dashboards.

Relationships that matter (and the gotchas)

Account <-> Contact <-> Lead (identity spine)

You need a survivable identity graph. Leads become contacts; contacts move companies; accounts merge; domains change.

Pick rules:

- One canonical Account per legal entity (or per billing entity if you're strict)

- A householding strategy for subsidiaries

- A dedupe policy (domain + name + external IDs)

Skip this and your "pipeline by ICP" and "NRR by segment" will drift every quarter. (See also: how to keep CRM data clean)
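One way to picture the dedupe policy (external IDs, then domain, then name) is a match key built in priority order. This is only a sketch: the suffix list, the normalization rules, and the function names are assumptions, and real matching usually needs fuzzier logic plus human review.

```python
import re
from typing import Optional

# Hypothetical list of legal suffixes to ignore when comparing company names.
LEGAL_SUFFIXES = {"inc", "llc", "ltd", "corp", "gmbh", "co"}


def normalize_domain(url_or_domain: str) -> str:
    """Lowercase and strip scheme, path, and a leading 'www.'."""
    d = url_or_domain.strip().lower()
    d = re.sub(r"^https?://", "", d)
    d = d.split("/")[0]
    if d.startswith("www."):
        d = d[4:]
    return d


def normalize_name(name: str) -> str:
    """Lowercase, drop punctuation, and remove legal suffixes."""
    tokens = re.findall(r"[a-z0-9]+", name.lower())
    return " ".join(t for t in tokens if t not in LEGAL_SUFFIXES)


def dedupe_key(external_id: Optional[str], domain: Optional[str], name: str) -> tuple[str, str]:
    """Pick the strongest available identifier: external ID, then domain, then name."""
    if external_id:
        return ("ext", external_id)
    if domain:
        return ("domain", normalize_domain(domain))
    return ("name", normalize_name(name))
```

Two account records that produce the same key become merge candidates; the key type tells a reviewer how strong the match is.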

Opportunity <-> Quote (commercial intent vs commercial commitment)

Opportunities are sales intent. Quotes are commercial commitment.

You need both because:

- Pipeline metrics live on Opps

- Pricing truth lives on Quotes (especially discounting, bundles, term length)

Control you want: once a quote's approved, version it. If reps can clone and edit post-approval, your downstream order won't match approved terms. That's governance, not "bad data."
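The versioning control can be sketched as "approved versions are frozen; any change produces a new, unapproved version." The `QuoteVersion` shape and the `amend` helper below are hypothetical; in practice this rule lives in CPQ permissions, with the warehouse keeping the version history.

```python
from dataclasses import dataclass, replace
from datetime import datetime
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class QuoteVersion:
    quote_id: str
    version: int
    total: Decimal
    approved_at: Optional[datetime] = None  # None means still a draft


def amend(quote: QuoteVersion, new_total: Decimal) -> QuoteVersion:
    """Drafts can be edited freely; approved quotes spawn a new draft version."""
    if quote.approved_at is None:
        return replace(quote, total=new_total)
    # The approved version stays untouched; the edit needs its own approval.
    return replace(quote, version=quote.version + 1, total=new_total, approved_at=None)


draft = QuoteVersion("q_1", 1, Decimal("1000"))
approved = replace(draft, approved_at=datetime(2026, 1, 15, 9, 0))
v2 = amend(approved, Decimal("900"))
```

Because the dataclass is frozen, the approved record cannot be overwritten by accident, and the order can always be compared against the exact version that was approved.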

Quote/Quote Lines <-> Contract/Subscription Items (the bridge)

This is the sold vs contracted bridge. It's where term length, renewal dates, proration rules, and recurring vs one-time classification have to be explicit.

If you don't model contract + subscription items, you'll end up computing ARR from invoices because it's easier. Then you'll argue about "contracted ARR" forever.

Order/Order Lines <-> Invoice/Invoice Lines (billings truth)

Invoices are the source of truth for billings. Period.

If your ARR is based on invoices, you need invoice lines with:

- service period start/end

- currency

- tax/VAT fields

- customer identifiers that match the Account model

Invoice <-> Payment (cash truth)

Payments are how you compute DSO, collections risk, and cash forecasting.

If payments live in a processor and invoices live in billing, unify them with a payment application table (many payments can apply to one invoice, and vice versa).
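As a small illustration of why the payment application table matters, the sketch below computes open invoice balances from a many-to-many list of applications. The tuple layout and the name `open_balances` are assumptions for the example.

```python
from collections import defaultdict
from decimal import Decimal


def open_balances(
    invoices: dict[str, Decimal],
    applications: list[tuple[str, str, Decimal]],
) -> dict[str, Decimal]:
    """Return invoice_id -> remaining balance.

    `applications` rows are (payment_id, invoice_id, applied_amount), so one
    payment can cover several invoices and one invoice can take several payments.
    """
    applied: dict[str, Decimal] = defaultdict(Decimal)
    for _payment_id, invoice_id, amount in applications:
        applied[invoice_id] += amount
    return {inv: total - applied[inv] for inv, total in invoices.items()}


invoices = {"inv_1": Decimal("1000.00"), "inv_2": Decimal("500.00")}
applications = [
    ("pay_1", "inv_1", Decimal("600.00")),
    ("pay_2", "inv_1", Decimal("400.00")),  # pay_2 is split across two invoices
    ("pay_2", "inv_2", Decimal("100.00")),
]
balances = open_balances(invoices, applications)
```

Here `inv_1` is fully paid by two payments and `inv_2` still has 400.00 open, which is the detail a payment-level join alone would hide.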

Subscription Items <-> Usage (retention + expansion truth)

For SaaS, subscription is the contract. Usage is the reality.

If you're doing usage-based pricing, usage becomes revenue-critical. You'll need usage events aggregated to billing periods, attribution to subscription items / SKUs, and late-arriving data handling (usage often arrives late and shows up right when you're trying to close the month).
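A minimal sketch of that aggregation, assuming calendar-month billing periods and a policy where usage that lands in an already-closed period is held for an explicit adjustment instead of silently changing closed totals. Both the policy and the names are assumptions, not the only reasonable design.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class UsageEvent:
    subscription_item_id: str
    occurred_at: datetime
    quantity: int


def aggregate_usage(events: list[UsageEvent], closed_periods: set[str]):
    """Sum usage per (subscription item, 'YYYY-MM'); route late events separately."""
    totals: dict[tuple[str, str], int] = defaultdict(int)
    late: list[UsageEvent] = []
    for event in events:
        period = event.occurred_at.strftime("%Y-%m")
        if period in closed_periods:
            late.append(event)  # belongs to a closed month: needs an adjustment
        else:
            totals[(event.subscription_item_id, period)] += event.quantity
    return dict(totals), late


events = [
    UsageEvent("si_1", datetime(2026, 1, 10), 100),
    UsageEvent("si_1", datetime(2026, 1, 20), 50),
    UsageEvent("si_1", datetime(2025, 12, 30), 30),  # arrives after December closed
]
totals, late = aggregate_usage(events, closed_periods={"2025-12"})
```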

Credit Memo (the silent revenue killer)

Credit memos and refunds explain why billings and cash diverge. They also explain churn narratives.

If you don't model credit memos explicitly, your net numbers will be wrong in the exact months Finance cares about.

Walkthrough: how this prevents metric drift

When someone asks, "What's ARR?" you can compute it from the same canonical objects every time:

- Contracted recurring value: Contract + subscription items

- Billed recurring value: Invoice lines with recurring SKUs

- Collected recurring value: Payments applied to recurring invoice lines

Different teams can pick different "truths" (contracted vs billed vs collected), but they're all computed from the same model with explicit definitions. That's the difference between healthy disagreement and metric chaos.

Semantic/metrics layer: the mechanism that stops "two ARR numbers"

Real talk: most "unified data" projects fail because the warehouse has one truth and the BI layer has five.

A semantic/metrics layer is where you define metrics once and reuse them everywhere. dbt's Semantic Layer (MetricFlow) is the cleanest articulation of this: define metrics on top of models, let the layer handle joins, and when the metric changes, it updates everywhere it's invoked.

The three patterns (and how to pick)

| Pattern | Where logic lives | Best for | Tradeoffs | Example tools |
|---|---|---|---|---|
| Warehouse-native | In warehouse | Central governance | Tighter coupling | Snowflake/Databricks |
| Transform-layer | In dbt/models | Metrics-as-code | Needs PR discipline | dbt MetricFlow |
| OLAP accel | Headless semantic | Fast BI/embedded | Extra infra | Cube.dev |

Pick the center of gravity explicitly. If you don't, you'll get the worst outcome: metrics split across SQL, dbt, and BI "calculated fields," and nobody knows which one's real.

A concrete revenue metric pattern (contracted vs billed vs collected ARR)

Here's the pattern that stops arguments:

- `arr_contracted`: sum normalized recurring value from subscription items active in the period (handles term, proration rules, and amendments).

- `arr_billed`: sum recurring invoice line value allocated to service periods (handles billing schedules and back-billing).

- `arr_collected`: sum payments applied to recurring invoice lines (handles cash timing and collections).

Then publish them as three separate metrics with clear names and one shared entity grain (Account + Subscription Item + month). Finance and RevOps can disagree about which one to use for a decision, but they won't be disagreeing about how the number was computed.
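A rough sketch of the "three metrics, one grain" idea follows. It assumes upstream models have already allocated contracted, billed, and collected recurring amounts to months at the Account + Subscription Item + month grain, and it annualizes with a simple 12x multiplier. Both are simplifying assumptions, and in practice the definitions would live in the semantic layer, not application code.

```python
from dataclasses import dataclass
from decimal import Decimal

BASES = ("contracted", "billed", "collected")


@dataclass(frozen=True)
class MonthlyRecurringRow:
    """One row at the shared grain: account + subscription item + month."""
    account_id: str
    subscription_item_id: str
    month: str            # "YYYY-MM"
    contracted: Decimal   # monthly recurring value under contract
    billed: Decimal       # monthly recurring value invoiced for this service month
    collected: Decimal    # monthly recurring value paid for this service month


def arr(rows: list[MonthlyRecurringRow], month: str, basis: str) -> Decimal:
    """Annualize one month's recurring value on the chosen basis."""
    if basis not in BASES:
        raise ValueError(f"basis must be one of {BASES}")
    monthly = sum((getattr(r, basis) for r in rows if r.month == month), Decimal("0"))
    return 12 * monthly


rows = [
    MonthlyRecurringRow("acct_1", "si_a", "2026-01", Decimal("1000"), Decimal("1000"), Decimal("800")),
    MonthlyRecurringRow("acct_2", "si_b", "2026-01", Decimal("500"), Decimal("0"), Decimal("0")),
]
arr_contracted = arr(rows, "2026-01", "contracted")
arr_billed = arr(rows, "2026-01", "billed")
arr_collected = arr(rows, "2026-01", "collected")
```

The three numbers differ, but every gap is explainable from the same rows: `si_b` is contracted but not yet invoiced, and `si_a` is partially collected.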

Use this if / skip this if (practical guidance)

Warehouse-native semantics

Use it if you want governance enforced closest to the data and you've got a strong platform team.

Skip it if your analytics team moves faster than your warehouse governance process. You'll end up with shadow metrics in BI anyway.

Transformation-layer semantics (dbt MetricFlow / dbt Semantic Layer)

Use it if you already run dbt as the modeling backbone and you want metrics-as-code.

Skip it if your org won't treat metric definitions like code (PRs, reviews, versioning). If metrics get edited in meetings, you'll hate this.

OLAP acceleration semantics (Cube-style)

Use it if performance is your bottleneck and you need consistent metrics across many BI/embedded use cases.

Skip it if your main problem is upstream identity and QTC reconciliation. Faster wrong numbers are still wrong numbers.

Governance components you can't skip

A real semantic layer codifies entities, metrics, joins, and policies once. Not just a glossary.

Minimum governance kit:

- Entity definitions (Account, Subscription Item, Invoice Line)

- Metric definitions (ARR, NRR, bookings, billings)

- Join rules (how usage ties to subscription items)

- Policies (row-level security, column masking)

- A change log (what changed, when, and why)

Do this well and "two ARR numbers" becomes a bug, not a recurring argument.

Controls & reconciliation for Quote-to-Cash (the part most teams skip)

I've watched teams spend six months building a beautiful unified model, then lose trust in it in one close cycle because nobody built controls. The warehouse kept loading. The numbers kept drifting. Everyone went back to spreadsheets.

The principle that fixes this is simple: automate the check, not just the transaction.

Where it breaks (common failure modes)

In CPQ->billing programs, two failure patterns show up repeatedly: tax/identifier issues when Finance isn't involved early, and silent integration failures when nobody monitors retries.

Other common breakpoints:

Happy-path automation ignores renewals/amendments/usage: Teams automate new business quotes, then defer renewals, amendments, proration, and usage-based edge cases. Those edge cases are where rev rec and churn narratives live.

Quote drift after approval: Reps clone quotes, edit terms, or bypass approvals. Orders and invoices diverge from what was approved. Fix it with version control and permissions.

SKU mapping breaks downstream: Bundles and add-ons don't map to billing SKUs. Invoice lines don't match quote lines. You can't reconcile revenue by product if product identity isn't stable.

Minimum viable controls (MVC): what to implement first (and how)

If you want a control system that works in the real world, build it in three parts: tests, anomaly checks, and an exception workflow.

1) dbt tests that catch structural breakage (fast, deterministic)

Start with tests that fail loudly:

- Uniqueness + not-null on primary keys (`invoice_id`, `invoice_line_id`, `subscription_item_id`)

- Referential integrity: `invoice.order_id` must exist in Orders; `invoice_line.invoice_id` must exist in Invoices; `subscription_item.subscription_id` must exist in Subscriptions

- Accepted values for currency codes, invoice status, recurring vs one-time flags

- Relationship cardinality checks (where you expect 1->many). Example: one invoice line maps to exactly one SKU; one payment application maps to one invoice.

These tests prevent the "we changed a field name and nobody noticed" disasters.
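In a dbt project these checks are normally declared with the built-in generic tests (`unique`, `not_null`, `accepted_values`, `relationships`). To show what they actually verify, here is a plain-Python sketch of the same logic over lists of dicts; the helper names and sample rows are hypothetical.

```python
def check_unique_not_null(rows: list[dict], key: str) -> list[str]:
    """Report null or duplicate values of a primary key column."""
    seen, failures = set(), []
    for row in rows:
        value = row.get(key)
        if value is None:
            failures.append(f"{key} is null")
        elif value in seen:
            failures.append(f"duplicate {key}={value}")
        else:
            seen.add(value)
    return failures


def check_relationship(children: list[dict], fk: str, parents: list[dict], pk: str) -> list[str]:
    """Report child rows whose foreign key has no matching parent row."""
    parent_keys = {row[pk] for row in parents}
    return [f"{fk}={row[fk]} has no parent" for row in children if row[fk] not in parent_keys]


def check_accepted_values(rows: list[dict], column: str, allowed: set[str]) -> list[str]:
    """Report values outside the allowed set (e.g. currency codes)."""
    return [f"{column}={row[column]} not allowed" for row in rows if row[column] not in allowed]


invoices = [{"invoice_id": "inv_1"}, {"invoice_id": "inv_2"}, {"invoice_id": "inv_2"}]
invoice_lines = [
    {"invoice_line_id": "il_1", "invoice_id": "inv_1", "currency": "USD"},
    {"invoice_line_id": "il_2", "invoice_id": "inv_9", "currency": "usd"},
]
```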

2) Reconciliation tests that catch money drift (line-level, not totals)

Then add revenue-specific checks that compare systems:

- Quote Line total vs Order Line total (by currency)

- Order Line total vs Invoice Line total

- Recurring classification consistency (quote line recurring flag matches subscription item and invoice line)

- Service period coverage (no recurring invoice line without start/end dates)

- Tax/VAT presence and validity for taxable regions

- Credit memo linkage (every credit memo references an invoice and line items)

This is where you stop leakage.
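A line-level comparison like the ones listed above can be sketched as follows. It assumes both sides can be keyed by a shared line identifier (for example, the order line ID carried onto the invoice line) and uses a one-cent tolerance; the key choice, the tolerance, and the mismatch labels are illustrative assumptions.

```python
from decimal import Decimal

Line = tuple[str, Decimal]  # (currency, amount)


def reconcile_lines(
    left: dict[str, Line],
    right: dict[str, Line],
    tolerance: Decimal = Decimal("0.01"),
) -> list[tuple[str, str]]:
    """Compare two sets of lines by key and return (line_key, mismatch_type) pairs."""
    mismatches = []
    for key in sorted(left.keys() | right.keys()):
        l, r = left.get(key), right.get(key)
        if r is None:
            mismatches.append((key, "missing_on_right"))
        elif l is None:
            mismatches.append((key, "missing_on_left"))
        elif l[0] != r[0]:
            mismatches.append((key, "currency_mismatch"))
        elif abs(l[1] - r[1]) > tolerance:
            mismatches.append((key, "amount_mismatch"))
    return mismatches


order_lines = {
    "ol_1": ("USD", Decimal("1000.00")),
    "ol_2": ("USD", Decimal("250.00")),
    "ol_3": ("EUR", Decimal("90.00")),
}
invoice_lines = {
    "ol_1": ("USD", Decimal("1000.00")),
    "ol_2": ("USD", Decimal("200.00")),  # billed less than ordered
    "ol_4": ("USD", Decimal("10.00")),   # invoiced with no matching order line
}
mismatches = reconcile_lines(order_lines, invoice_lines)
```

Totals can match while individual lines do not, which is why the comparison runs per line and reports a mismatch type for each key.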

3) Anomaly checks that catch "looks valid, but wrong" scenarios

Deterministic tests won't catch everything. Add anomaly detection on:

- Daily invoice volume and invoice amount (rolling median + thresholds)

- Credit memo rate spikes

- Payment application lag (DSO trend breaks)

- "Orphan" growth (orders without invoices, invoices without payments) by cohort

Opinion: anomaly detection's only useful if it creates tickets someone actually closes. If it just posts charts to Slack, it'll become background noise in a week.
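The "rolling median + thresholds" check on daily invoice amounts could look roughly like this. The 7-day window and 50% threshold are placeholder values to tune, not recommendations.

```python
from statistics import median


def flag_anomalies(values: list[float], window: int = 7, threshold: float = 0.5) -> list[int]:
    """Return indexes whose value deviates from the trailing median by more than `threshold`."""
    flagged = []
    for i in range(window, len(values)):
        baseline = median(values[i - window:i])
        if baseline == 0:
            continue  # avoid dividing by zero on empty days
        if abs(values[i] - baseline) / baseline > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily invoice totals (in $k); day index 8 drops sharply.
daily_invoice_amount = [100, 102, 98, 101, 99, 100, 103, 101, 40, 100]
anomalies = flag_anomalies(daily_invoice_amount)
```

Each flagged index would then be written to the exception queue with an owner, so the result is a ticket and not just a chart.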

Exception table schema (the backbone of operational trust)

Controls only matter if exceptions are trackable and owned. Create a single exceptions table (or model) that every check writes into.

Example schema:

| Field | Type | Notes |
|---|---|---|
| `exception_id` | string | UUID |
| `exception_type` | string | e.g., order_invoice_mismatch |
| `entity_type` | string | invoice_line, payment, quote_line |
| `object_ids` | variant/string | list of IDs involved |
| `detected_at` | timestamp | when the check fired |
| `source_updated_at` | timestamp | last upstream change |
| `severity` | string | low/med/high/close_blocker |
| `owner_role` | string | RevOps, Finance, CS, Billing Ops |
| `status` | string | open/triaged/in_progress/resolved/wont_fix |
| `resolution_note` | string | what happened + why |
| `resolved_at` | timestamp | closure time |
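For teams that emit exceptions from Python-based checks, the schema above maps naturally onto a small record type. This is a sketch only: the class name, the validation, and the `resolve` helper are assumptions, and the allowed values are copied from the Notes column.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

SEVERITIES = {"low", "med", "high", "close_blocker"}
STATUSES = {"open", "triaged", "in_progress", "resolved", "wont_fix"}


@dataclass
class RevenueException:
    exception_type: str
    entity_type: str
    object_ids: list[str]
    severity: str
    owner_role: str
    source_updated_at: Optional[datetime] = None
    status: str = "open"
    resolution_note: Optional[str] = None
    resolved_at: Optional[datetime] = None
    exception_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    detected_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self) -> None:
        if self.severity not in SEVERITIES:
            raise ValueError(f"unknown severity: {self.severity}")
        if self.status not in STATUSES:
            raise ValueError(f"unknown status: {self.status}")

    def resolve(self, note: str) -> None:
        """Close the exception and record what happened and why."""
        self.status = "resolved"
        self.resolution_note = note
        self.resolved_at = datetime.now(timezone.utc)


exc = RevenueException(
    exception_type="order_invoice_mismatch",
    entity_type="invoice_line",
    object_ids=["ol_2", "il_7"],
    severity="high",
    owner_role="Billing Ops",
)
```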

Then build two simple views:

- Close dashboard: open exceptions by severity + owner + age

- Root-cause dashboard: top exception types over time (this is how you justify fixing upstream process)

Revenue governance that matters (the primitives that beat generic guides)

This is the governance layer revenue teams actually feel:

- Immutable invoice facts with explicit voids/credits (no silent overwrites)

- Quote approval + versioning (approved terms are a record, not a suggestion)

- Audit log retention for changes to key revenue fields (price, term, currency, tax)

- Metric change management (ARR definition changes require review + changelog)

- PII handling for activation (masking, access policies, and purpose limitation)

- Consent + opt-out enforcement for contact data used in outbound (see GDPR for Sales and Marketing)

- Schema enforcement for revenue-critical objects (breaking changes block deploys)

- Separation of duties for close-critical overrides (especially in billing/ERP)

That's how you build a system Finance trusts without turning your data team into the "no" department.

Activation layer: turning unified data into pipeline (reverse ETL + clean contact inputs)

A unified revenue dataset that only powers dashboards is a cost center. Activation is where it turns into pipeline and retention.

Reverse ETL is the pattern: push cleaned, modeled warehouse data back into operational tools (CRM, marketing, support).

How to activate unified revenue data (steps that work)

Step 1: Build activation-ready tables

Create "operational marts" designed for syncing:

- `accounts_activation` (tier, ICP fit, open pipeline, renewal date, health) (see account segmentation)

- `contacts_activation` (role, seniority, verified email/phone, last engagement) (see BDR contact data)

- `opps_activation` (stage, risk flags, next step, forecast category) (see B2B sales pipeline management)

Keep them stable. Reverse ETL hates schema churn.

Step 2: Define activation rules (not just segments)

Examples:

- If renewal's in 90 days and usage dropped 30%, create a CS task

- If account has intent spike + no opp, create an SDR account task

- If invoice is overdue 15 days, route to collections owner
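The example rules above translate almost directly into code. In this sketch the field names, the action labels, and the reading of "in 90 days" as "within the next 90 days" are assumptions; a real implementation would read from the activation marts and write tasks through the reverse ETL tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AccountSignal:
    account_id: str
    days_to_renewal: Optional[int]   # None if there is no upcoming renewal
    usage_change_pct: float          # e.g. -35.0 means usage dropped 35%
    intent_spike: bool
    has_open_opp: bool
    max_invoice_days_overdue: int


def activation_actions(signal: AccountSignal) -> list[str]:
    """Evaluate each rule independently; an account can trigger several actions."""
    actions = []
    renewal_soon = signal.days_to_renewal is not None and 0 <= signal.days_to_renewal <= 90
    if renewal_soon and signal.usage_change_pct <= -30:
        actions.append("create_cs_task")
    if signal.intent_spike and not signal.has_open_opp:
        actions.append("create_sdr_account_task")
    if signal.max_invoice_days_overdue >= 15:
        actions.append("route_to_collections")
    return actions


at_risk = AccountSignal("acct_1", 60, -35.0, False, True, 0)
hot_but_late = AccountSignal("acct_2", None, 5.0, True, False, 20)
```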

Step 3: Sync to destinations

Typical destinations:

- CRM (Salesforce/HubSpot): tasks, fields, account tiers

- Marketing automation: suppression lists, lifecycle stages

- Support/CS: health flags, renewal risk, playbooks

Pick a reverse ETL tool based on reliability, observability, and how it handles deletes/merges. Reliability beats features here; a flaky sync destroys rep trust.

Step 4: Protect deliverability and rep trust with clean contact inputs

Activation fails when contact data's stale. You push a "hot accounts" segment, reps email it, and bounce rates spike. Then everyone blames "the data team." (Related: B2B contact data decay)

This is where Prospeo fits naturally: before you sync segments into execution tools, verify and enrich contacts so activation doesn't poison deliverability. Prospeo is "The B2B data platform built for accuracy" with 300M+ professional profiles, 143M+ verified emails, and 125M+ verified mobile numbers, used by 15,000+ companies and 40,000+ Chrome extension users. It delivers 98% email accuracy, a 7-day refresh cycle, and enrichment that returns 50+ data points with a 92% API match rate and 83% enrichment match rate.

In practice, set activation rules like:

- Only sync contacts where `email_status = verified` (see email verification list)

- Prefer `mobile_verified = true` for call sequences

- Enrich missing fields (title, department, company) before routing tasks
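As a sketch, those three gating rules can be expressed as a single routing function. The `email_status` and `mobile_verified` fields come from the rules above; the routing labels and the dict-based contact shape are assumptions for illustration.

```python
REQUIRED_FIELDS = ("title", "department", "company")


def route_contact(contact: dict) -> str:
    """Decide what to do with a contact before it is synced to outbound tools."""
    if contact.get("email_status") != "verified":
        return "suppress"        # never sync unverified emails
    if any(not contact.get(f) for f in REQUIRED_FIELDS):
        return "enrich_first"    # fill gaps before routing tasks
    if contact.get("mobile_verified") is True:
        return "call_sequence"   # verified mobile: eligible for calls
    return "email_sequence"


contact = {
    "email_status": "verified",
    "mobile_verified": True,
    "title": "VP Finance",
    "department": "Finance",
    "company": "Acme",
}
```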

ROI model: how unified revenue architecture pays back (with example math)

Unified revenue architecture pays back in three buckets: leakage reduction, forecast accuracy, and productivity. The math doesn't need to be fancy; it needs to be honest.

Benchmarks to anchor the model

- Revenue leakage often lands in the 1%-5% range due to misconfigurations, fragmentation, and manual failures.

- Forecast accuracy tends to tighten when data isn't fragmented (a common before/after range teams cite is ±7% to ±3%).

- Poor data quality gets expensive fast; plenty of orgs end up eating multi-million-dollar impacts once you count rework, disputes, and missed collections.

- Teams routinely burn a big chunk of time searching, cleaning, and re-building the same datasets.

Use these as inputs, not promises.

Scenario A: $20M revenue (mid-market SaaS) - lightweight ROI model

Assume:

- $20M annual revenue

- 70% gross margin

- 10-person RevOps/Finance ops team (blended cost $150k per person, fully loaded)

- Current leakage: 2% (inside the 1%-5% range)

- Stack cost: $80k/year software + $150k one-time implementation

Now compute:

| Value driver | Input | Impact | Annual value |
|---|---|---|---|
| Leakage reduction | 2% -> 1% | +1% revenue | $200k |
| Forecast accuracy | ±7% -> ±3% | fewer surprises | $100k-$300k |
| Productivity | 10 ppl x 10% | time back | $150k |

Even if you haircut the forecast benefit to the low end of its range, you're still around $450k/year in value ($200k + $100k + $150k) on an $80k/year stack. That's before you count fewer comp disputes, fewer billing escalations, and fewer "why's this customer delinquent?" fire drills.
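If you want to rerun Scenario A with your own inputs, the arithmetic fits in a few lines. The function name and the use of basis points and whole percentages (to keep the math in integers) are choices made for this sketch; the inputs are the Scenario A assumptions, with the forecast benefit set to the low end of its range.

```python
def annual_value(
    revenue: int,
    leakage_reduction_bps: int,   # 100 bps = 1% of revenue recovered
    team_size: int,
    time_saved_pct: int,          # share of team time freed up, in percent
    cost_per_person: int,
    forecast_benefit: int,
) -> dict[str, int]:
    """Break annual value into the three buckets and a total (all in dollars)."""
    leakage = revenue * leakage_reduction_bps // 10_000
    productivity = team_size * time_saved_pct * cost_per_person // 100
    return {
        "leakage": leakage,
        "productivity": productivity,
        "forecast": forecast_benefit,
        "total": leakage + productivity + forecast_benefit,
    }


scenario_a = annual_value(
    revenue=20_000_000,
    leakage_reduction_bps=100,    # 2% -> 1%
    team_size=10,
    time_saved_pct=10,
    cost_per_person=150_000,
    forecast_benefit=100_000,     # low end of the $100k-$300k range
)
```

Swapping in the high end of the forecast range ($300k) moves the total to $650k; the leakage and productivity lines are the parts you can defend with your own data.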

Scenario B: $5M ARR PLG vs $50M enterprise - sensitivity to leakage + DSO

$5M ARR PLG SaaS (lean team, high automation)

Assume:

- $5M ARR

- Leakage improvement: 1.5% -> 1.0% (0.5% gain)

- Ops team: 3 people at $150k fully loaded

- DSO improvement: 45 -> 40 days (collections + payment application clarity)

- Stack: $35k/year software + $50k implementation

Back-of-napkin:

- Leakage value: 0.5% x $5M = $25k/year

- Productivity: 3 x 8% x $150k = $36k/year

- DSO value (cash impact): revenue per day ~= $5M/365 ~= $13.7k; 5 days faster ~= $68k cash freed (not profit, but real working capital)

PLG takeaway: ROI's real, but the win is operational calm. Keep the architecture lean: canonical model + semantic metrics + MVC controls.

$50M enterprise (complex billing, higher leakage risk)

Assume:

- $50M annual revenue

- Leakage improvement: 3% -> 1.5% (1.5% gain)

- Ops team: 20 people at $170k fully loaded

- DSO improvement: 60 -> 50 days

- Stack: $150k/year software + $250k implementation

Back-of-napkin:

- Leakage value: 1.5% x $50M = $750k/year

- Productivity: 20 x 8% x $170k = $272k/year

- DSO cash freed: $50M/365 ~= $137k/day; 10 days faster ~= $1.37M cash freed

Enterprise takeaway: leakage + DSO dominate. This is where reconciliation controls and exception workflows pay for themselves fast.
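The DSO line in both scenarios uses the same back-of-napkin formula: revenue per day times days of improvement. A minimal sketch, assuming revenue is spread evenly across 365 days (the function name is hypothetical):

```python
def dso_cash_freed(annual_revenue: float, dso_before: int, dso_after: int) -> float:
    """One-time working capital released by collecting `dso_before - dso_after` days faster."""
    revenue_per_day = annual_revenue / 365
    return revenue_per_day * (dso_before - dso_after)


plg_cash = dso_cash_freed(5_000_000, dso_before=45, dso_after=40)
enterprise_cash = dso_cash_freed(50_000_000, dso_before=60, dso_after=50)
```

These come out near $68k and $1.37M, matching the figures above. Treat the result as cash timing, not profit: it is a one-time release of working capital, not recurring value.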

Cost ranges (so you can budget without guessing)

Mid-market unified revenue stacks typically land at $30k-$150k/year software-only, depending on ingestion volume, reverse ETL seats, and monitoring. Implementation is usually $50k-$250k one-time equivalent (or 0.5-2 FTEs) because the hard part is model + controls, not tools.

Why "SSoT" fails in real orgs (and the "preferred truth" design)

The most honest take on SSoT comes from practitioners: "single source of truth" becomes a slogan.

I've seen a VP promise "one number forever" in a kickoff, then watch the team spend the next two quarters arguing about whether invoices or contracts define ARR, whether credits count in churn, and why the CRM still shows an opp as Closed Won when billing never issued an invoice. That wasn't a tooling problem. It was a design problem.

So don't design for SSoT. Design for preferred truth.

Preferred truth means:

- You explicitly name the authoritative source per entity (Invoices from billing, payments from processor/ERP, pipeline from CRM).

- You unify them in a canonical model.

- You enforce controls and publish metric definitions.

- You align incentives so upstream teams care (comp plans, close process, approvals).

Do:

- Treat metric definitions like product requirements

- Build exception workflows with owners

- Make "data quality" part of close hygiene

Don't:

- Promise one magical dashboard that ends arguments

- Let every team redefine ARR in their own tool

- Skip Finance until UAT week

90-day implementation roadmap (RevOps + Finance + Data)

If you try to boil the ocean, you'll still be arguing about ARR in six months. A 90-day plan works because it forces sequencing.

Phase 1 (Days 1-30): Identity + CRM/marketing unification

Goal: one identity spine and one lifecycle view.

- Integrate CRM + marketing automation first.

- Unify Lead/Contact/Account with dedupe rules and external IDs.

- Create a data dictionary for lifecycle stages (MQL/SQL/SAL) and ownership.

Engineering conventions (steal these from GTM engineering playbooks):

- Objects are singular nouns (`Account`, `Invoice`)

- Fields are `snake_case`

- Calculated fields start with `calc_`

- Integration-only fields start with `sync_`
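Conventions like these stick better when a script checks them. Here is a small sketch of a field-name linter; the regex and the function name are assumptions, and how you learn that a field is calculated or integration-only (a metadata flag, a naming registry) is left open.

```python
import re

SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")


def field_name_problems(
    name: str,
    is_calculated: bool = False,
    is_integration_only: bool = False,
) -> list[str]:
    """Return a list of convention violations for a proposed field name."""
    problems = []
    if not SNAKE_CASE.match(name):
        problems.append("not snake_case")
    if is_calculated and not name.startswith("calc_"):
        problems.append("missing calc_ prefix")
    if is_integration_only and not name.startswith("sync_"):
        problems.append("missing sync_ prefix")
    return problems
```

For example, `field_name_problems("hubspot_id", is_integration_only=True)` reports the missing `sync_` prefix, which is the kind of check a field-creation review can run before anything ships.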

Add a "field creation tax": every new field needs an owner, definition, and downstream impact. This one policy prevents years of metric drift.

Phase 2 (Days 31-60): Quote-to-Cash reconciliation controls

Goal: stop leakage and stop surprises.

- Map QTC objects: Quote(+lines) -> Contract -> Order(+lines) -> Invoice(+lines) -> Payment -> Credit memo.

- Implement automated reconciliation checks and anomaly detection.

- Stand up an exceptions queue with routing and SLAs.

- Lock point-in-time rules: immutable invoice facts, daily pipeline snapshots, SCD2 for account attributes.

This is where you'll feel the stack cost. Monitoring and exception workflows aren't "nice to have." They're the difference between trusted numbers and ignored numbers.

Phase 3 (Days 61-90): Activation + AI readiness

Goal: turn unified data into action, safely.

- Build activation marts and sync them into CRM/marketing/support via reverse ETL.

- Add anomaly detection for pipeline, billings, churn signals.

- Prepare for AI carefully: in finance, AI doesn't fail loudly; it fails quietly. (If you're operationalizing agents, see AI sales agent.)

Concrete AI use case that actually works (when your foundations are solid): an agent flags renewal risk when usage drops, invoices go overdue, and support severity spikes, then opens a task with the exact account, subscription item, and invoice IDs. That only works with unified IDs, semantic metrics, and controls; otherwise it's just confident nonsense.

RACI mini-table (keep it simple)

| Workstream | RevOps | Finance | Data/Eng |
|---|---|---|---|
| Identity model | A/R | C | R |
| Metric defs | A/R | A/R | C |
| QTC controls | C | A/R | R |
| Exception ops | R | A/R | C |
| Activation | A/R | C | R |

A = Accountable, R = Responsible, C = Consulted


FAQ: Unified revenue data architecture

What's the difference between a unified data platform and a unified revenue data architecture?

A unified data platform is general-purpose ingestion/storage/governance. A unified revenue data architecture is revenue-specific: canonical QTC entities, semantic definitions for ARR/NRR/bookings, and reconciliation controls so Finance and RevOps get consistent, auditable numbers.

What are the minimum systems to unify first for revenue reporting?

Start with CRM + billing (and CPQ if quoting's complex). Pipeline without invoices is forecasting theater; invoices without pipeline hides future risk. Unify identity first, then connect Opportunity -> Quote/Contract -> Order -> Invoice so you can trace bookings to billings.

How do you stop ARR/NRR metric drift across Finance and RevOps?

Define ARR/NRR once in a semantic layer with explicit time logic, filters, and joins. Publish contracted vs billed vs collected variants so teams stop mixing definitions. Enforce a changelog and review process so metrics don't get silently redefined in dashboards.

What reconciliation checks prevent Quote-to-Cash revenue leakage?

Do line-level checks: Quote Lines <-> Order Lines <-> Invoice Lines (SKU, quantity, price, currency), plus service period dates for recurring lines. Validate tax/VAT identifiers, and reconcile invoice balances against applied payments and credit memos. Route exceptions to owners with SLAs.

How do you activate unified segments without hurting deliverability?

Gate activation on contact quality: verified emails, deduped identities, and recent refresh. Sync only reachable contacts into outbound and lifecycle tools, and keep a suppression list for invalid/catch-all risk. Treat deliverability as a revenue control, not a marketing preference.

Summary: what to build first (so the numbers stop drifting)

If you want a unified data architecture that revenue teams actually trust, don't start with dashboards or a generic "unified data platform" diagram. Start with the canonical revenue model, publish semantic metrics (so ARR stops getting reinvented), and ship reconciliation controls with an exception workflow that has real owners.

Do that and you'll cut leakage, tighten forecasting, and make activation workflows (reverse ETL, renewals, collections, outbound) run on clean, auditable data instead of spreadsheet folklore.