AI Sales Agent: What It Is, What Works, and How to Deploy in 2026

Your SDR manager pings you at 9:12am: "Bounce rate is 6% and climbing. Did the new AI sales agent change anything?" Now your domain reputation's wobbling, sequences are pausing, and leadership wants "more automation" next week.

Here's the operator's guide for 2026: what this category actually is, what's real vs hype, and the rollout plan that won't torch deliverability or compliance. You'll get a pass/fail 6-point agent test, hard deliverability thresholds (so you know when to stop), and a 30-day deployment plan with ownership, audit logs, and a "when metrics spike" runbook.

What you need (quick version)

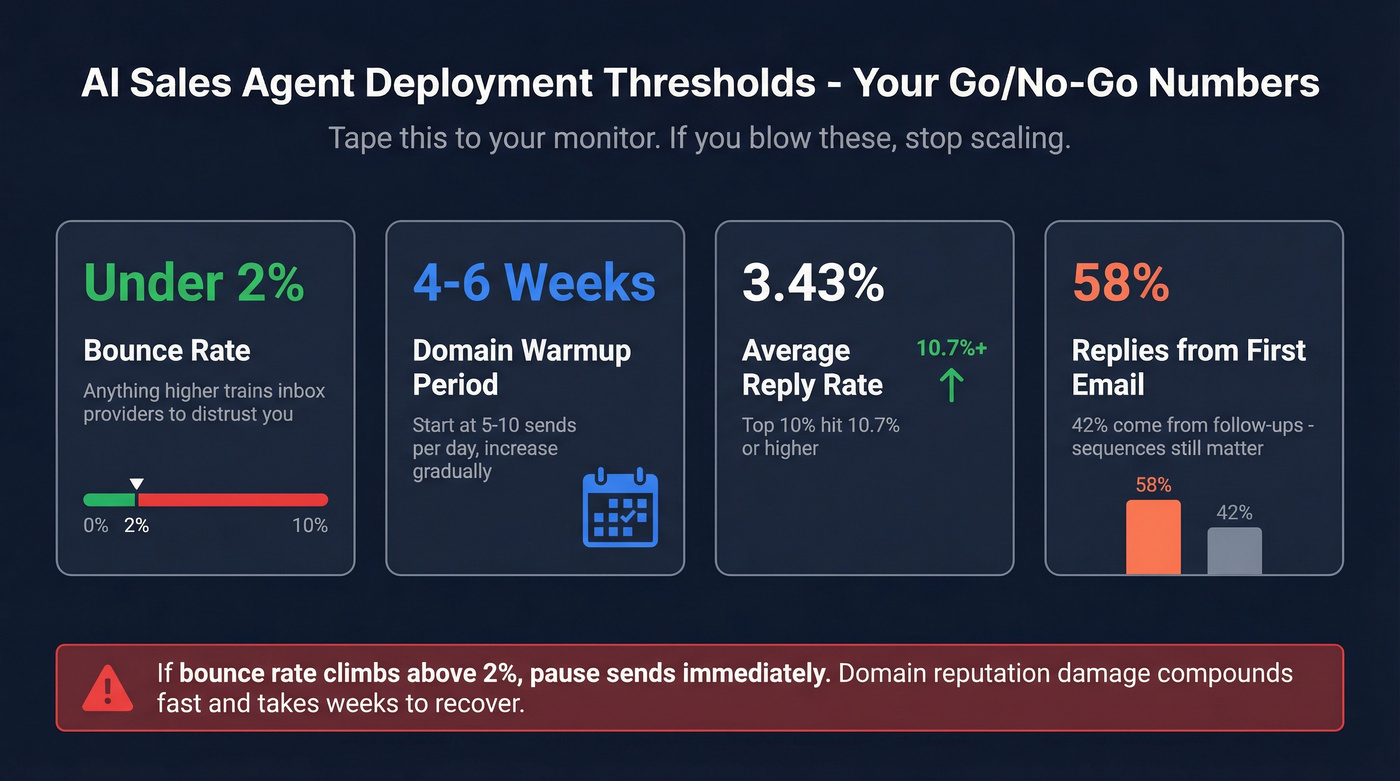

Print this and tape it to your monitor.

Most "AI SDR" projects fail for boring reasons: bad data, rushed sending, and zero governance.

Quick-start checklist (do these in order):

1) Verify + refresh your data weekly (non-negotiable). Your goal is bounce <2%. Anything higher trains inbox providers to distrust you.

2) Start with a single-task agent + strict handoffs. Pick one job (like "route inbound leads to the right owner" or "draft first-touch emails for human approval"). If you can't explain the agent's job in one sentence, it's too complex.

3) Lock compliance + audit logs before voice/text. Email's the lowest-risk channel operationally. Voice and SMS are where companies get sued. Don't ship anything that can't produce an audit trail: trigger → action → data used → message sent → human override.

Benchmarks to hold yourself to (or stop scaling):

- Bounce: under 2%

- Warmup: 4-6 weeks, starting at 5-10 sends/day

- Reply rates (directional): average 3.43%; top 10% 10.7%+

- Sequence reality (directional): 58% of replies come from the first email, 42% from follow-ups

What is an AI sales agent (and how it differs from assistants and chatbots)?

"Agent" is the most abused word in sales tech right now. Half the market's a chatbot with a new label.

Use this heuristic: an assistant responds to you; an agent responds to the environment. An assistant waits for a prompt. An agent watches for signals (events) and takes action - often across multiple steps.

Salesforce's framing is useful: assistive vs autonomous agents. Assistive agents help a human complete work. Autonomous agents execute work with minimal human input, inside guardrails.

AI sales agent definition (environment-triggered workflows)

An AI sales agent is an environment-triggered system that can:

- detect a signal (new lead, form fill, intent spike, bounced email, "pricing" page visit, meeting no-show)

- decide what to do next (plan)

- take action (send, route, enrich, schedule, update CRM)

- keep state across steps (context/memory)

- hand off to a human when it hits a boundary condition

Here's the thing: the "AI" part isn't the magic. The magic is orchestration - connecting data, channels, and rules so the agent can actually do work.

Minimal agent architecture (what has to exist for it to work)

If a vendor can't explain this clearly, the product's a demo, not a system.

Trigger → Context → Policy → Action → Logging → Handoff

- Trigger: event fires (form submit, reply received, stage change, intent spike).

- Context fetch: agent pulls only the data it's allowed to use (CRM fields, account notes, enrichment, suppression status).

- Policy/guardrails: rules decide what's permitted (claims library, compliance rules, stop conditions, escalation triggers).

- Action: agent executes via tool permissions (CRM update, task creation, email draft/send, routing, booking).

- Logging: every step's recorded (inputs, decision path, final output).

- Human handoff: when it hits a boundary (pricing, security, angry reply), it escalates with full context.
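The pipeline above can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: `Event`, `Outcome`, `run_agent`, `fetch_context`, and the `ESCALATE_TOPICS` set are all hypothetical names, and the only action allowed here is drafting (never sending).

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Event:
    kind: str        # e.g. "reply_received", "form_submit"
    contact_id: str
    payload: dict


@dataclass
class Outcome:
    action: str      # what the agent did: "draft_reply", "handoff", or "none"
    reason: str
    log: list = field(default_factory=list)


# Hypothetical boundary topics that always go to a human.
ESCALATE_TOPICS = {"pricing", "security", "angry"}


def run_agent(event: Event, fetch_context: Callable[[str], dict]) -> Outcome:
    log = [("trigger", event.kind)]                 # Trigger
    context = fetch_context(event.contact_id)       # Context fetch
    log.append(("context", sorted(context)))        # log field names, not values

    if context.get("suppressed"):                   # Policy: stop condition
        log.append(("policy", "suppressed"))
        return Outcome("none", "contact is suppressed", log)

    topic = event.payload.get("topic")
    if topic in ESCALATE_TOPICS:                    # Policy: boundary, so hand off
        log.append(("handoff", topic))
        return Outcome("handoff", f"boundary topic: {topic}", log)

    log.append(("action", "draft_reply"))           # Action: draft only
    return Outcome("draft_reply", "within policy", log)
```

Every return path carries the log, so "why did it do that?" always has an answer, including when the agent did nothing.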

Where failures happen in real life:

- Bad context retrieval (wrong persona/account, stale fields, missing suppression)

- Over-permissioned tools (agent can send when it should only draft)

- No traceability (nobody can answer "why did it send that?")

AI sales assistant vs agent vs chatbot (common mislabeling)

AI sales assistant: reactive co-pilot. Drafts an email when asked, summarizes calls, updates CRM fields, suggests next steps. Great for rep productivity. Not a replacement for workflow design.

AI sales agent: proactive executor. It triggers off events and completes multi-step tasks. Great for operational throughput - if you constrain scope.

Chatbot: conversational UI. It answers questions. It might qualify. It often doesn't integrate deeply enough to close the loop (routing, booking, CRM updates, suppression lists).

A lot of "AI SDR" tools are assistants wearing an agent costume. If it can't act without you prompting it, it's not an agent.

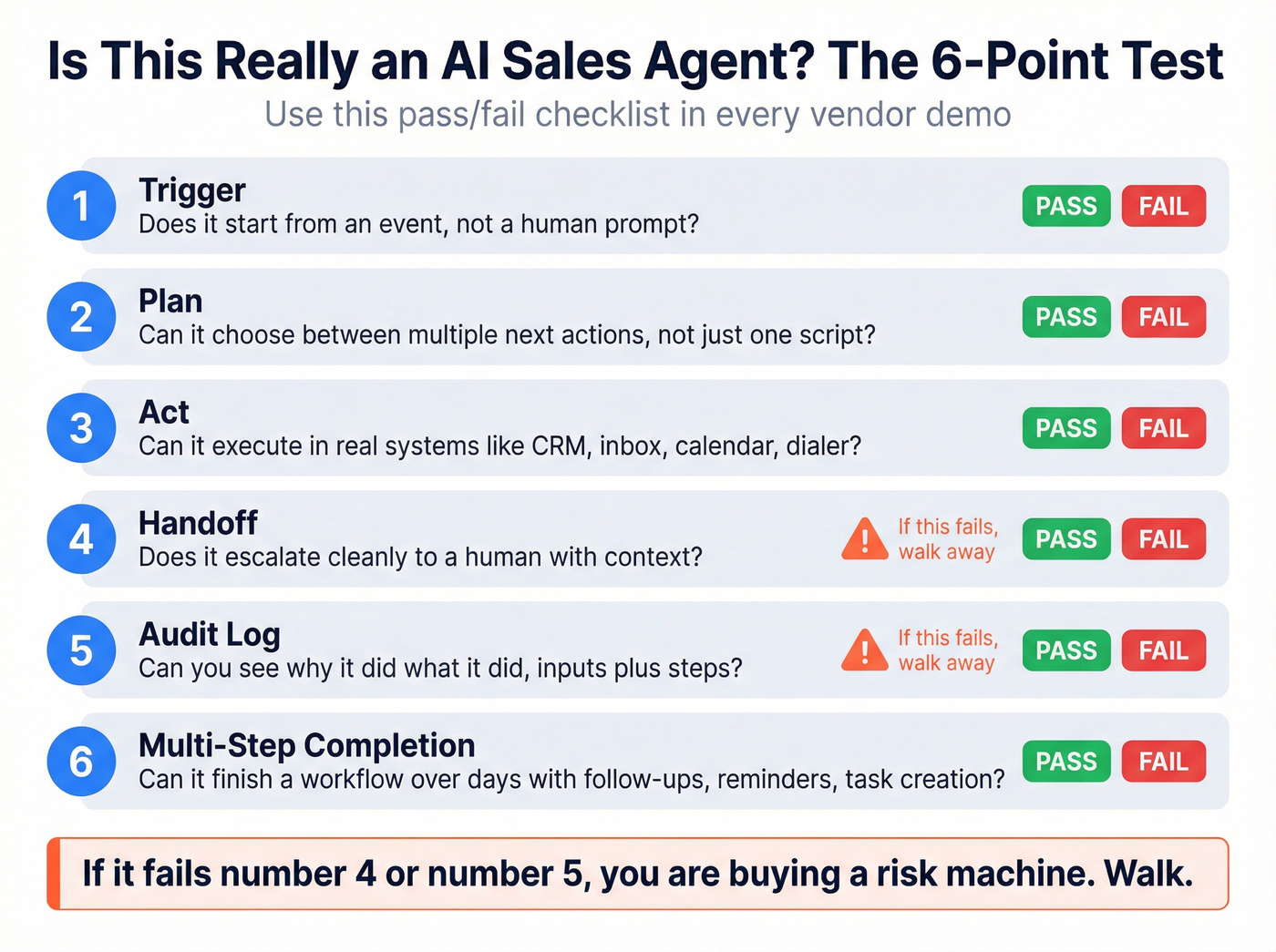

"Is this really an agent?" 6-point test

Use this pass/fail test in demos:

- Trigger: Does it start from an event (not a human prompt)?

- Plan: Can it choose between multiple next actions (not just one script)?

- Act: Can it execute in real systems (CRM, inbox, calendar, dialer)?

- Handoff: Does it escalate cleanly to a human with context?

- Audit log: Can you see why it did what it did (inputs + steps)?

- Multi-step completion: Can it finish a workflow over days (follow-ups, reminders, task creation)?

If it fails Handoff or Audit log, walk.

You're buying a risk machine.
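If you want to score demos consistently, the test can be written down as a tiny function. A sketch under one assumption that the checklist doesn't state outright: all six criteria must pass for a "pass", and failing handoff or audit log is an immediate "walk". The names `CRITERIA`, `DEALBREAKERS`, and `score_demo` are made up for illustration.

```python
CRITERIA = ["trigger", "plan", "act", "handoff", "audit_log", "multi_step"]
DEALBREAKERS = {"handoff", "audit_log"}


def score_demo(results: dict[str, bool]) -> str:
    """Return "pass", "fail", or "walk" for one vendor demo."""
    missing = [c for c in CRITERIA if c not in results]
    if missing:
        raise ValueError(f"unscored criteria: {missing}")

    failed = [c for c in CRITERIA if not results[c]]
    if DEALBREAKERS & set(failed):
        return "walk"          # no clean handoff or no audit trail
    return "pass" if not failed else "fail"
```

Forcing every criterion to be scored keeps a slick demo from skipping the uncomfortable questions.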

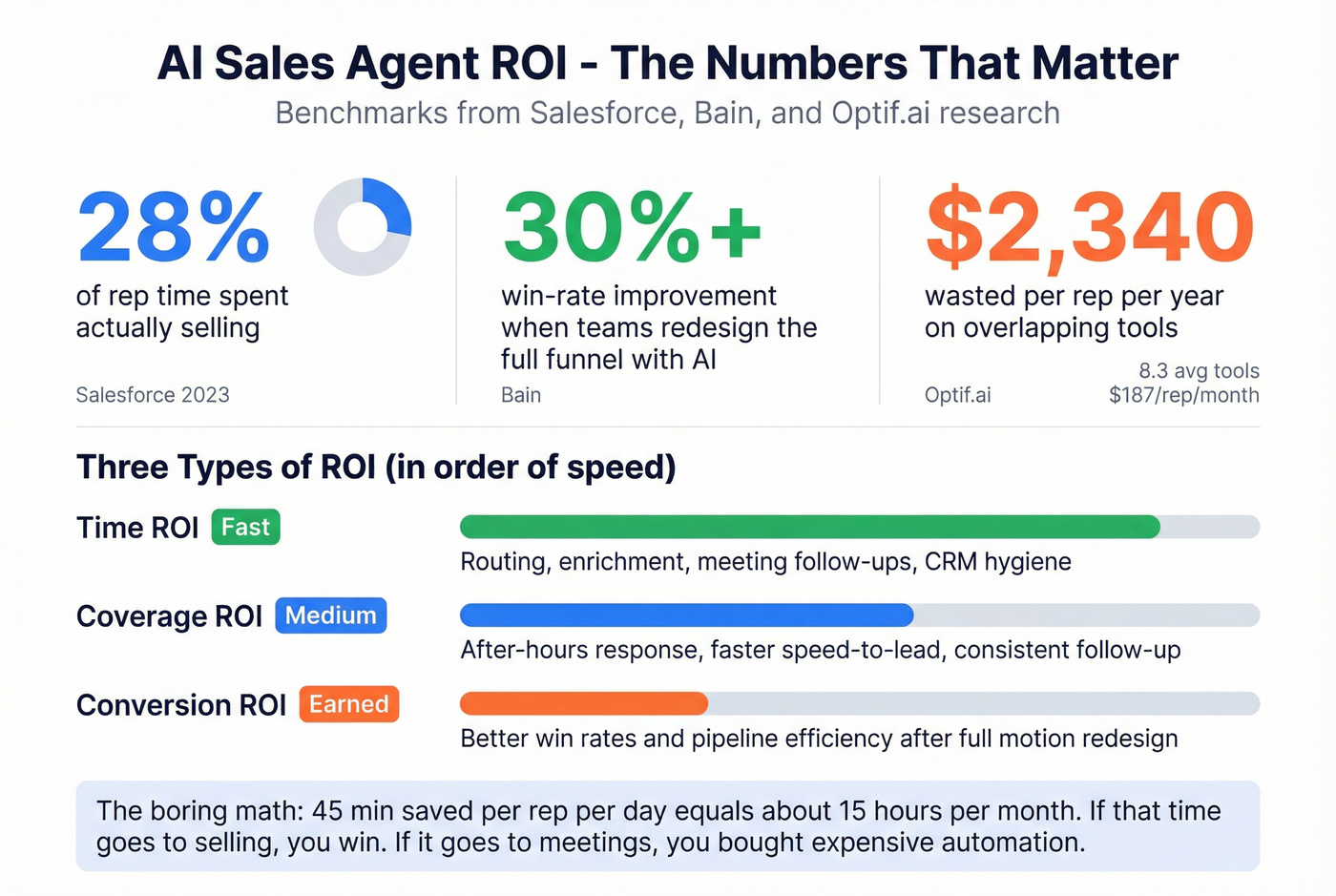

Why AI sales agents exist now (and what ROI is realistic)

Sales teams didn't wake up and decide "we love robots." They ran out of time.

Salesforce research puts reps at roughly 28% of time actually selling. That gap is why agents exist: they turn admin work into throughput - routing, enrichment, follow-ups, and hygiene.

Hot take: if your average deal size is small and your funnel's simple, you don't need a "fully autonomous AI SDR." You need clean data, fast speed-to-lead, and ruthless follow-up discipline. Most teams try to buy autonomy when they really need operations.

What ROI looks like when it's real:

- Time ROI (fast): routing, enrichment, meeting follow-ups, CRM hygiene.

- Coverage ROI (medium): after-hours response, faster speed-to-lead, consistent follow-up.

- Conversion ROI (earned): better win rates and pipeline efficiency after you redesign the motion.

Bain reports 30%+ win-rate improvement in early AI successes when teams redesign end-to-end funnel steps, not when they "add AI emails" on top of a broken motion.

Tool sprawl is the hidden tax. Optif.ai's benchmark shows teams average 8.3 sales tools and spend about $187 per rep/month, and overlap wastes about $2,340 per rep/year. A good rollout removes tools and steps; a bad rollout adds another dashboard and calls it "innovation."

Mini callout: the boring math that matters

If an agent saves each rep 45 minutes/day, that's ~15 hours/month. If that time turns into more selling, you win. If it turns into more internal meetings, you bought expensive automation.
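The arithmetic behind that callout, spelled out. The 45 minutes/day comes from the callout; the 20 working days per month is an assumption, so swap in your own number.

```python
MINUTES_SAVED_PER_DAY = 45      # from the callout
WORKING_DAYS_PER_MONTH = 20     # assumption: roughly 20 selling days per month


def hours_saved_per_month(minutes_per_day: float, working_days: int) -> float:
    """Convert a per-day time saving into hours per month."""
    return minutes_per_day * working_days / 60


hours = hours_saved_per_month(MINUTES_SAVED_PER_DAY, WORKING_DAYS_PER_MONTH)
print(hours)  # 15.0
```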

You read it above: bounce under 2% or stop scaling. Your AI sales agent can't hit that threshold on stale data. Prospeo refreshes 300M+ profiles every 7 days and delivers 98% email accuracy - so your agent sends to real inboxes, not spam traps.

Fix the data layer before you automate anything else.

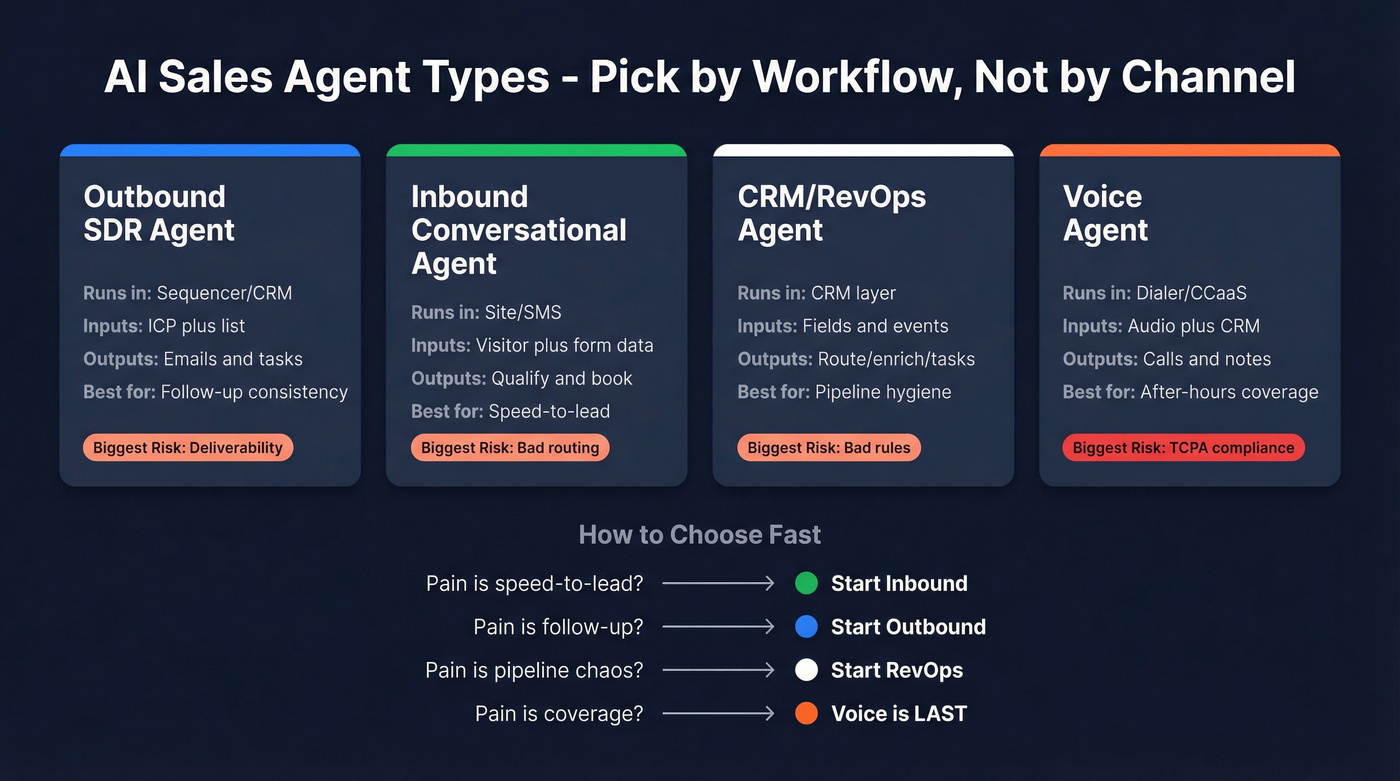

Types of AI sales agents (with best-fit use cases)

Most teams pick the wrong agent type because they start with the channel ("we want voice!") instead of the workflow ("we want qualified meetings booked with clean handoffs").

Below is a practical taxonomy. The table is the short version; the nuance is in the sections that follow.

| Agent type | Runs in | Inputs | Outputs | Best for | Biggest risk | Pricing |
|---|---|---|---|---|---|---|
| Outbound SDR | Sequencer/CRM | ICP + list | Emails/tasks | Follow-up | Deliverability | Seat + credits |
| Inbound convo | Site/SMS | Visitor + form | Qualify/book | Speed-to-lead | Bad routing | Seat + usage |
| CRM/RevOps | CRM layer | Fields/events | Route/enrich | Hygiene | Bad rules | Seat/usage |
| Voice agent | Dialer/CCaaS | Audio + CRM | Calls/notes | After-hours | TCPA | Per-min/call |

How to choose fast:

- If your pain's speed-to-lead, start with inbound.

- If your pain's follow-up consistency, start with outbound - but only after deliverability's stable.

- If your pain's pipeline chaos, start with RevOps agents.

- If your pain's coverage, voice is last, not first.

Outbound SDR agents (prospecting → sequences → routing)

Best fit: high-volume outbound where the bottleneck's research + first draft + follow-up consistency.

Do this well:

- pick the right persona at the right account

- draft messages from approved inputs

- route replies correctly (positive/neutral/negative)

- stop when signals say stop (bounce, opt-out, angry reply)

Don't do this at all: let the agent freestyle your positioning. That's how you get confidently irrelevant outreach that reads personal and lands wrong.

Inbound conversational agents (site/chat → qualification → booking)

Best fit: inbound-heavy teams where speed-to-lead matters and qualification's repetitive.

They win when:

- qualification rules are explicit (industry, size, geo, use case)

- booking rules are simple

- handoff to an AE is immediate

They lose when qualification requires nuance, or routing rules are a mess (territory exceptions, product lines, partner vs direct).

CRM/RevOps agents (routing, enrichment, task creation, forecasting hygiene)

Best fit: RevOps teams drowning in "small" work that breaks big systems.

These agents move metrics without drama:

- dedupe + merge suggestions

- enrichment on new leads

- SLA monitoring (speed-to-lead, follow-up compliance)

- stage hygiene nudges

- renewal risk summaries

I've seen these deliver more value than outbound "AI SDRs" because they're easier to scope, easier to govern, and harder to break.

Voice agents (where they work; where they don't)

A voice agent is a stack: ASR (speech-to-text) + NLU (intent) + TTS (voice) + orchestration + context memory.

Voice works for after-hours coverage, basic qualification, scheduling and confirmations, and simple follow-ups.

Voice fails at complex objection handling, pricing negotiations, and anything regulated without airtight consent + disclosures.

Reality check for 2026: what works in production (and what fails)

Fully autonomous sales agents that you trust with your brand, your domain reputation, and your legal risk?

Not in production.

What works is narrower, and that's good news, because narrow ships.

What actually worked (real examples you can copy)

These are the wins I see repeatedly when teams stop chasing "autonomy" and start shipping workflows:

- Inbound speed-to-lead: response time dropped from 4 hours to 45 minutes by letting an agent qualify and route instantly, then handing off to a human for the first real conversation.

- Rep time back: teams reclaimed ~15 hours/week by automating CRM hygiene + meeting follow-ups + routing.

- Renewal risk: a RevOps agent generated weekly "renewal risk" briefs (usage dip + ticket volume + champion change) so CSMs stopped getting surprised in QBR season.

One pattern shows up every time: the teams that win treat agents like production systems, not like prompts.

What works: single-task agents (one-sentence scope rule)

Use this rule: if you can't explain it in one sentence, it's too complex.

Great single-task scopes:

- "When a lead replies, classify intent and route to the right owner."

- "When a meeting's booked, create tasks + pre-call brief."

- "When a lead enters ICP, enrich and assign sequence A or B."

- "When an inbound form's submitted, qualify and offer times."

Predictable beats clever.

Every time.

What fails: end-to-end "AI SDR replaces team"

The failure pattern's consistent:

- the agent sends a lot of messages

- deliverability degrades

- replies are low-quality or angry

- the team spends more time cleaning up than they saved

The worst version is personalization theater: creepy openers + mismatched pitch. Better grammar doesn't make spam less spam.

If you're building outbound workflows, start with the guardrails and mistakes list in AI cold email campaigns and AI cold email personalization mistakes.

Three "skip this if..." rules (save yourself a quarter)

- Skip voice agents if you can't prove consent capture + revocation handling end-to-end.

- Skip outbound autonomy if bounce is >2% today. Fix the list first.

- Skip any agent that can't show trigger → action audit logs and suppression handling in the demo.

Look, if a vendor can't show audit logs and suppression handling, you're not buying "AI." You're buying future incident reports.

Guardrails that prevent brand drift (claims library, tone rules, escalation triggers)

If you deploy outbound agents, you need guardrails like you need seatbelts:

- Approved claims library: what you can say (and what you can't)

- Tone rules: direct, not hypey; no fake familiarity; no assumptions

- Escalation triggers: pricing, security, legal, competitor mentions, angry replies

- Stop conditions: bounce, opt-out, spam complaint, "remove me" variants

- Human approval gates: required for new sequences and new segments

You can't prompt your way out of governance. You need policy.
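Policy only counts if something enforces it before a message leaves. Here's a rough sketch of a pre-send check covering two of the guardrails above (approved claims and human approval gates). It assumes drafts are tagged with claim IDs upstream; `Draft`, `review_draft`, and the ID scheme are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    sequence_id: str
    segment_id: str
    claim_ids: list[str] = field(default_factory=list)


def review_draft(
    draft: Draft,
    approved_claims: set[str],
    approved_sequences: set[str],
    approved_segments: set[str],
) -> tuple[str, list[str]]:
    """Return ("block" | "needs_approval" | "ok", reasons)."""
    # Any claim outside the approved library blocks the draft outright.
    problems = [f"unapproved claim: {c}" for c in draft.claim_ids
                if c not in approved_claims]
    if problems:
        return "block", problems

    # New sequences and new segments require a human approval gate.
    needs_human = []
    if draft.sequence_id not in approved_sequences:
        needs_human.append("new sequence")
    if draft.segment_id not in approved_segments:
        needs_human.append("new segment")
    if needs_human:
        return "needs_approval", needs_human

    return "ok", []
```

Tone rules are harder to check mechanically; this sketch leaves them to QA sampling.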

Deliverability + data quality for an AI sales agent (the real bottleneck)

If you're deploying an AI sales agent for outbound, deliverability's the whole game. Not prompts. Not "personalization." Deliverability.

A widely shared community summary of Instantly's 2026 benchmark figures is blunt (treat these as directional because ICP and offer swing results hard):

- Average reply rate: 3.43%

- Top 25%: 5.5%+

- Top 10%: 10.7%+

- Replies: 58% from the first email, 42% from follow-ups

If your agent doubles volume but tanks inbox placement, you'll end up with less pipeline and a damaged domain.

If you need the updated baseline rules, see email deliverability.

The non-negotiables: bounce thresholds, warmup timeline, throttling

Bounce rate stays under 2%. At 6% bounce, you're not testing. You're burning sender reputation.

Warmup's mandatory:

- Warmup: 4-6 weeks

- Start at 5-10 sends/day per mailbox

- Increase slowly, with daily caps and per-domain throttles

A throttle rule that keeps you alive:

- New mailbox: 10/day week 1

- Mature mailbox: 30-50/day (only if replies and bounces are healthy)

- If bounces spike or spam complaints appear: cut volume immediately and fix list quality
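The throttle rule can be turned into a per-mailbox cap. A sketch with loud assumptions: the article gives the endpoints (10/day in week 1, 30-50/day when mature) but not the ramp, so the linear ramp over six weeks, the default `mature_cap` of 30, and treating "cut volume immediately" as a full pause are all choices made here for illustration.

```python
WARMUP_DAYS = 42        # 6 weeks, the upper end of the 4-6 week warmup
WEEK_ONE_CAP = 10       # new mailbox: 10/day in week 1
BOUNCE_LIMIT = 0.02     # bounce must stay under 2%


def daily_send_cap(mailbox_age_days: int, bounce_rate: float,
                   spam_complaints: int, mature_cap: int = 30) -> int:
    """Max sends/day for one mailbox (illustrative ramp, not a standard)."""
    # Unhealthy signals: modeled as a pause until the list is fixed.
    if spam_complaints > 0 or bounce_rate >= BOUNCE_LIMIT:
        return 0
    if mailbox_age_days < 7:
        return WEEK_ONE_CAP
    if mailbox_age_days >= WARMUP_DAYS:
        return mature_cap
    # Linear ramp from the week-1 cap to the mature cap (assumption).
    ramp = (mailbox_age_days - 7) * (mature_cap - WEEK_ONE_CAP) // (WARMUP_DAYS - 7)
    return WEEK_ONE_CAP + ramp
```

The point of the function isn't the exact slope; it's that the cap is computed from mailbox health, not from how much pipeline leadership wants this week.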

Monitoring dashboard (what to watch daily vs weekly)

If you don't instrument this, you're flying blind.

Daily (operator checks):

- Bounce rate (hard + soft) by mailbox and by domain

- Spam complaints (any non-zero is a fire)

- Unsubscribe/opt-out rate

- Reply classification mix (positive/neutral/negative)

- Block signals (sudden drop in sends delivered, provider errors)

Weekly (management checks):

- Inbox placement sampling (seed tests across Gmail/Microsoft)

- Reply rate by segment (ICP slice, persona, industry)

- Meeting show rate and stage conversion (quality, not volume)

- Suppression list growth and "stop" handling accuracy

- Top 20 subject lines and templates by complaint rate

When things go wrong: deliverability troubleshooting runbook

| Symptom | Likely cause | Immediate action (today) | Prevention (next 2 weeks) |
|---|---|---|---|
| Bounce >2% | stale list, catch-alls, bad enrichment | Pause sends to that segment; re-verify list; suppress risky domains | Weekly refresh; verify before sequencing; dedupe across sources |
| Spam complaints >0 | volume spike, misleading copy, bad targeting | Stop new sends; reduce volume 50-80%; tighten claims + CTA | Add per-domain throttles; stricter ICP; claims library + approvals |
| Reply rate drops | inbox placement decline, offer fatigue | Cut volume; rotate copy; check placement via seeds | Segment-level reporting; refresh data; improve relevance triggers |
| Open rate collapses | provider filtering, domain reputation hit | Pause; warm up again; move to backup domain/mailboxes | Slow ramp; consistent cadence; avoid sudden list swaps |
| Sudden blocks/errors | provider rate limits, authentication issues | Pause; check SPF/DKIM/DMARC; reduce concurrency | Pre-flight auth checks; mailbox health monitoring; gradual scaling |

Opinion: most teams waste weeks rewriting prompts when the fix is "pause, clean the list, slow down."
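The first two runbook rows are the ones worth automating, because they have hard thresholds. A minimal sketch, assuming you can pull daily counts per segment from your sending tool; `DailyStats` and `runbook_actions` are hypothetical names, and the action strings just echo the table.

```python
from dataclasses import dataclass


@dataclass
class DailyStats:
    sent: int
    bounced: int
    spam_complaints: int


def runbook_actions(stats: DailyStats) -> list[str]:
    """Map today's numbers to the immediate actions from the runbook."""
    actions = []
    bounce_rate = stats.bounced / stats.sent if stats.sent else 0.0
    if bounce_rate > 0.02:
        actions.append("pause segment; re-verify list; suppress risky domains")
    if stats.spam_complaints > 0:
        actions.append("stop new sends; reduce volume 50-80%; tighten claims + CTA")
    return actions
```

For the 6% bounce scenario from the intro, `runbook_actions(DailyStats(sent=100, bounced=6, spam_complaints=0))` returns the pause-and-re-verify action. The softer symptoms (reply and open rate drops) need a baseline to compare against, so they're left out of this sketch.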

Follow-up math: why sequences matter (58/42 split)

That 58/42 split changes how you design agentic outbound. If you only optimize the first email, you're leaving a huge chunk of replies behind.

A sequence pattern that works:

- Email 1: short, single CTA, under ~80 words

- Email 2: reply-style follow-up (not "bumping this")

- Email 3: add one new piece of relevance (trigger, use case, constraint)

- Email 4+: stop if there's no signal; don't harass

Agents are great at consistent follow-ups. They're also great at consistently annoying people if you don't set stop rules.
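Stop rules are easier to enforce when they live in one function the sequencer has to call. A sketch of the pattern above, with assumptions flagged: the signal names, the `"engaged"` signal that allows an Email 4+, and the hard cap of 5 emails are all invented for illustration.

```python
STOP_SIGNALS = {"bounce", "opt_out", "spam_complaint", "negative_reply"}
BASE_EMAILS = 3   # Emails 1-3 from the pattern above
HARD_CAP = 5      # assumption: never send more than this, even with engagement


def next_step(emails_sent: int, signals: set[str]) -> str:
    """Decide what the sequence does next for one contact."""
    if signals & STOP_SIGNALS:
        return "stop"
    if "positive_reply" in signals:
        return "handoff"                 # a human takes the conversation
    if emails_sent >= HARD_CAP:
        return "stop"
    if emails_sent >= BASE_EMAILS and "engaged" not in signals:
        return "stop"                    # Email 4+: no signal, no more email
    return f"send_email_{emails_sent + 1}"
```

Stop signals are checked first on purpose: an opt-out beats everything else, including a positive reply logged on the same thread.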

If you want copy/paste structures, use B2B cold email sequence and follow up email sequence strategy.

List hygiene playbook: verify → dedupe → refresh weekly → suppress risky domains

This workflow's repeatable and measurable:

- Verify every email before it ever hits a sequencer

- Dedupe across sources (CRM + enrichment + exports)

- Refresh weekly (job changes and disabled mailboxes are constant)

- Suppress risky patterns (role accounts, burned domains, high-complaint segments)

Here's a real scenario I've watched play out: a team imported "fresh" contacts from three places, didn't dedupe, and their shiny new agent hit the same person twice in 48 hours from two different domains. The prospect replied (angry), the AE got pulled into damage control, and the SDR manager shut the whole project down by Friday. That wasn't an AI problem. That was basic list hygiene.
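The dedupe and suppress steps are simple enough to sketch. This assumes contacts arrive as dicts with an `email` key; the `ROLE_PREFIXES` list is illustrative, not exhaustive. Verification itself isn't shown because it needs an external verifier, and this sketch doesn't pretend to replace one.

```python
# Assumption: a small illustrative set of role-account prefixes.
ROLE_PREFIXES = {"info", "sales", "support", "admin", "noreply"}


def normalize(email: str) -> str:
    return email.strip().lower()


def clean_list(contacts: list[dict], suppressed_domains: set[str]) -> list[dict]:
    """Dedupe across sources and drop role accounts and suppressed domains."""
    seen = set()
    kept = []
    for contact in contacts:
        email = normalize(contact["email"])
        local, _, domain = email.partition("@")
        if not local or not domain:
            continue                  # malformed address
        if email in seen:
            continue                  # same person from another source
        if local in ROLE_PREFIXES or domain in suppressed_domains:
            continue                  # risky pattern
        seen.add(email)
        kept.append({**contact, "email": email})
    return kept
```

Normalizing before comparing is what catches the "same person, three imports" case: `Ann@Acme.com` from the CRM and `ann@acme.com ` from an export collapse into one record.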

For the SOP, see email verification list and B2B contact data decay.

Compliance & security guardrails (especially for voice/text)

Outbound automation is a compliance multiplier. If you do something wrong at human speed, it's a mistake. If an agent does it at scale, it's a lawsuit.

Here are the numbers legal teams care about:

- GDPR: up to €20M or 4% of global annual revenue, whichever is higher

- TCPA: up to $1,500 per violation

- HIPAA: up to $1.5M/year per category

Corporate Compliance Insights reports a ~95% YoY increase in TCPA lawsuits. That's why "we'll fix compliance later" isn't a plan.

Channel-by-channel compliance checklist (copy/paste)

Email (lowest-risk operationally)

- Clear sender identity + truthful subject lines

- One-click unsubscribe or clear opt-out instruction

- Global suppression list enforced across tools

- No sending to suppressed/opted-out contacts - ever

- Audit log: who sent what, when, and why

SMS

- Consent capture stored with timestamp + source

- STOP/UNSUBSCRIBE handling works on every number and every tool

- Quiet hours + frequency caps

- Message templates approved (no misleading urgency)

Calling / voice

- Consent basis documented (especially for automated/prerecorded/AI voice)

- Required disclosures at call start (automated system + recording, where applicable)

- Transfer-to-human path always available

- Revocation honored immediately and globally

- Call recordings/transcripts retention policy defined before launch

2026 TCPA reality: consent, revocation, state mini-TCPAs

Operational rules that matter in 2026:

- AI-generated voices are treated as "artificial/prerecorded" in many TCPA contexts, so prior express written consent is the safe baseline.

- Consent revocation happens by any reasonable method. If someone says "stop," "unsubscribe," "don't contact," or any reasonable variant, your systems must honor it.

- The "revoke all" requirement was delayed to April 2026. Build global suppression now anyway.

- State mini-TCPA laws are stricter than federal rules in practice. You can be "fine" federally and still get clipped.
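"Any reasonable method" plus "global suppression" translates into two design choices: match opt-out language loosely, and ignore the channel when checking whether contact is allowed. A sketch, not legal advice: the phrase list is illustrative and the `GlobalSuppression` class is hypothetical. Real coverage needs legal review and a persistent store.

```python
import re

# Assumption: a short illustrative phrase list, matched case-insensitively.
OPT_OUT_PATTERNS = [
    r"\bstop\b",
    r"\bunsubscribe\b",
    r"\bremove me\b",
    r"\bdon'?t contact\b",
    r"\bdo not contact\b",
]
OPT_OUT_RE = re.compile("|".join(OPT_OUT_PATTERNS), re.IGNORECASE)


class GlobalSuppression:
    def __init__(self) -> None:
        self._blocked: set[str] = set()

    def record_message(self, contact_id: str, text: str) -> bool:
        """Suppress the contact if the inbound text looks like a revocation."""
        if OPT_OUT_RE.search(text):
            self._blocked.add(contact_id)
            return True
        return False

    def may_contact(self, contact_id: str, channel: str) -> bool:
        # The channel argument is ignored on purpose: revocation is global.
        return contact_id not in self._blocked
```

Loose matching will produce false positives. For suppression that's the right direction to be wrong in: a missed meeting is cheaper than a missed revocation.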

Voice data governance (bystanders, biometrics, retention)

Voice introduces risks email never touches:

- Bystander capture: speakerphone and shared spaces mean you can record people who never consented. Your policy must address this, and your system must support deletion requests.

- Biometrics/voiceprints: some vendors derive voiceprints or biometric identifiers for speaker recognition. If you don't need it, disable it. If you do need it, treat it as sensitive data with explicit controls.

- Inference risk: transcripts can reveal health info, union membership, financial distress, or other sensitive attributes. Don't store what you don't need. Don't feed it into targeting.

- Retention: define how long you keep recordings and transcripts, and who can access them. Short retention beats "keep forever."

Procurement questions that surface real risk fast

- Where are recordings and transcripts stored (region, subprocessors)?

- Are voiceprints/biometric identifiers created or stored?

- Can we disable vendor training on our data by default?

- Can we set retention by channel (e.g., 30/60/90 days) and delete on request?

- Do audit logs include transcript access events (who viewed/exported)?

Security checklist for procurement (TLS/AES/audit logs/data retention)

Bake these into vendor selection up front:

- TLS 1.2+ in transit

- AES-256 at rest

- least-privilege access (role-based permissions)

- audit logs for agent actions (who/what/when/why)

- data retention controls (prompts, transcripts, PII)

- DPA availability and subprocessors list

If a vendor can't answer these quickly, they're not enterprise-ready, no matter how good the demo looks.

Implementation plan to deploy an AI sales agent (first 14 days, first 30 days)

If you try to deploy an AI sales agent platform in one shot, you'll end up with a science project. Roll it out like RevOps: tight scope, measurable gates, and boring controls.

Budget realistically. All-in outbound ops (domains/inboxes + warmup + data verification + sequencer + monitoring + QA time) runs $500-$2,000/month per active outbound seat, depending on volume and how enterprise your stack is. The number jumps fast if you add voice, multiple regions, or heavy security reviews, because those programs need real people, real process, and real audit trails.

Day 1-3: pick one workflow + define handoffs + verify data

Pick one workflow that's high-volume and low-judgment.

Good starters:

- inbound lead qualification + booking

- reply classification + routing

- enrichment + assignment + task creation

Write the one-sentence scope. If you can't, simplify.

Then do the unsexy part: verify and enrich your contact data on day one. Clean inputs keep your agent from scaling bounces and bad routing.

Define handoffs:

- what the agent can do alone

- when it must escalate

- what context it must include in the handoff

Day 4-14: integrate (CRM, inbox, calendar), QA loop, guardrail library

Integrations that matter:

- CRM (objects, fields, ownership, routing rules)

- inbox/sending tool (throttles, suppression, reply handling)

- calendar (booking rules, buffers, round-robin)

- ticketing/Slack (escalations)

Build a QA loop:

- sample 20-50 agent actions/day

- tag failures (wrong persona, wrong claim, wrong routing, compliance risk)

- update rules and the approved claims library weekly

Treat prompts like code: version them, review them, and don't let reps freestyle production prompts.

For agent instruction quality, OpenAI's guide is a solid baseline: A practical guide to building agents (PDF).

If you're enriching inside your CRM, use a dedicated enrichment layer with API support so routing rules have reliable fields. Example: https://prospeo.io/b2b-data-enrichment

Day 15-30: scale safely (throttles, monitoring, weekly refresh, audit reviews)

Scaling rules that keep you alive:

- increase volume weekly, not daily

- keep per-mailbox caps

- monitor bounce, complaints, and reply quality

- refresh lists weekly (stale data is silent failure)

Run weekly audit reviews:

- random sample of messages sent

- review opt-out handling

- review escalation correctness

- review edge-case failures and add rules

RACI: who owns what (so it doesn't die in Slack)

- RevOps (Responsible): routing rules, CRM fields, suppression plumbing, audit logs

- SDR Manager (Responsible): messaging library, QA sampling, escalation playbooks

- Legal (Accountable): consent language, disclosures, retention policy, state-by-state rules

- Security/IT (Consulted): vendor review, access controls, data residency

- Sales leadership (Informed): weekly metrics, scale gates, risk incidents

Success metrics: bounce, spam complaints, reply rate bands, meeting quality

Start with metrics that tell you if you're harming yourself:

- Bounce rate: target <2%

- Spam complaints: zero tolerance mindset

- Reply rate bands: use the directional bands from the deliverability section

- Meeting quality: show rate, conversion to stage 2, and "right persona" rate

If meeting quality drops while volume rises, your agent's generating activity, not pipeline.

Tool landscape (lightweight): examples + pricing models (not a giant list)

This isn't a "best tools" list. It's a map so you understand what you're buying and how you'll get billed.

Pricing models explained (seat vs credits vs consumption)

- Per-seat: predictable, but features get gated by tier.

- Credits: flexible, but credit burn becomes a RevOps tax if every action costs the same.

- Consumption (per conversation / per action): clean for finance, but you need usage monitoring or costs creep.
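To compare quotes across the three models, put them on the same monthly basis. A small sketch; every input in the example scenario is a placeholder, not a vendor's actual price or credit schedule.

```python
def seat_cost(seats: int, price_per_seat: float) -> float:
    """Per-seat: cost scales with headcount, not usage."""
    return seats * price_per_seat


def credit_cost(actions: int, credits_per_action: int,
                price_per_100k_credits: float) -> float:
    """Credits: cost scales with how many credits each action burns."""
    return actions * credits_per_action * price_per_100k_credits / 100_000


def consumption_cost(conversations: int, price_per_conversation: float) -> float:
    """Consumption: cost scales directly with conversation volume."""
    return conversations * price_per_conversation


# Hypothetical month for a 5-rep team (all numbers are placeholders).
scenario = {
    "per_seat": seat_cost(seats=5, price_per_seat=100.0),
    "credits": credit_cost(actions=20_000, credits_per_action=10,
                           price_per_100k_credits=500.0),
    "consumption": consumption_cost(conversations=1_000,
                                    price_per_conversation=2.0),
}
```

Rerun it with your real volumes before a renewal. Credit and consumption models look cheap at pilot volume and very different at scale.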

Tier 1-2 examples (what it is, best for, meter, gotcha)

Salesforce Agentforce

Best for: teams already deep in Salesforce that want an enterprise control plane for agents.

Metering: $2 per conversation (pre-purchase), Flex Credits ($500 per 100k credits), add-ons from $125/user/mo, editions from $550/user/mo.

Gotcha: you still need clean data, routing rules, and governance. Agentforce doesn't fix messy ops by itself.

IBM watsonx Orchestrate

Best for: enterprise orchestration across many internal systems with governance.

Metering: AWS Marketplace annual tiers around $6,360/year (Essentials), $76,320/year (Standard), $216,000/year (Premium).

Gotcha: powerful, but heavy. Overkill if your problem's simply "book more meetings."

Clay

Best for: building enrichment, scoring, routing, and research workflows (the workflow glue).

Metering: credit-based; tiers shown around $134/mo, $314/mo, $720/mo (annual billing), plus free. Clay's priced per workspace with unlimited users, so cost's mostly about credit burn, not seats.

Gotcha: without a spec and ownership, Clay turns into a beautiful workflow museum nobody maintains.

Jeeva AI

Best for: SMB teams that want an approachable outbound motion fast.

Metering: self-serve seat pricing around $95/mo (Growth) and $239/mo (Scale) per seat, plus credits; free tier and 5-day free trial.

Gotcha: credit economics punish sloppy workflows. Instrument usage from day one.

Artisan

Best for: teams that want "AI SDR" packaged with deliverability ops and managed execution.

Metering: typically annual/quote-based; expect roughly $24k-$80k+/year depending on volume and support.

Gotcha: it won't rescue weak positioning. It'll scale whatever message you give it.

Tier 3 (short list: common stack pieces)

RingCentral AI Conversation Expert

Best for: call/conversation workflows inside a CCaaS motion. Pricing starts around $60/user/mo.

Gong

Best for: revenue intelligence (recording, coaching, deal inspection). Typical market pricing lands around $1,200-$1,800/user/year plus a platform fee, depending on package and scale.

Close CRM

Best for: SMB execution (calling + email + pipeline) without enterprise overhead. Pricing starts around $35/user/month.

Common "AI SDR" vendors and price bands (directional)

Buyers will see these in-market. Price bands vary by seats, volume, and channels. Use this as a starting range:

- 11x: often $1,000-$3,000+/month depending on scope and seats

- AiSDR: commonly $1,000-$2,500/month range for outbound automation packages

- Qualified (inbound pipeline platform): frequently $1,500-$5,000+/month for mid-market deployments

- Factors.ai (revenue/intent + pipeline analytics): often $1,000-$4,000+/month depending on data sources and accounts tracked

Suite vs layer (how to choose without buying the wrong thing)

Suites promise "everything in one." Layers win when you already have a stack and need one job done perfectly (data quality, orchestration, calling, analytics).

My recommendation: buy the smallest surface area that solves the bottleneck you can measure.

If you're evaluating data and workflow layers, check integration coverage early so you don't end up building brittle Zap spaghetti. For example, integration lists like https://prospeo.io/integrations tell you quickly whether your CRM/sequencer is supported.

If you're comparing categories, this pairs well with AI agent for email outreach and best AI email outreach tools.

FAQ about AI sales agents

What is an AI sales agent, and how do AI sales agents work?

An AI sales agent is an environment-triggered system that detects signals (like a form fill or reply), pulls approved context, applies guardrails, and takes action (routing, drafting/sending, booking, CRM updates) with audit logs and clean handoffs. In production it's basically: trigger → context → policy → action → logging → escalation.

What's the difference between an AI sales agent and an AI sales assistant?

An AI sales assistant is reactive and usually prompt-driven (drafts, summaries, CRM updates), while an agent is event-driven and can execute multi-step workflows across tools. In practice, assistants boost rep productivity; agents boost operational throughput, if you enforce permissions, stop conditions, and audit logs.

Can an AI sales agent run outbound end-to-end without humans in 2026?

No. Plan on at least 1 human approval gate plus ongoing QA sampling (20-50 actions/day, with weekly rule updates) if you care about brand and deliverability. Humans still own positioning, edge-case judgment, and compliance exceptions, and they're the backstop when replies turn sensitive (pricing, security, legal, angry).

Do AI voice sales agents require written consent under TCPA?

Often yes. AI-generated voices are treated like artificial/prerecorded calls in many TCPA contexts, so prior express written consent is the safest baseline. You also need disclosures at call start, immediate revocation handling, and state-law checks; one broken consent workflow can create $1,500-per-call exposure.

What's a good free tool to reduce bounces before you automate outreach?

Use a verifier with a real free tier and refresh your list weekly; aim for <2% bounce before scaling volume. Prospeo's free plan includes 75 email credits plus 100 Chrome extension credits/month, and it verifies in real time with 98% email accuracy on a 7-day refresh cycle.

The article's clear: bad context retrieval kills agents. Prospeo's enrichment API returns 50+ data points per contact at a 92% match rate - giving your AI agent the accurate, fresh context it needs to route, personalize, and act.

Feed your AI sales agent data it can actually trust.

Clean inputs, strict guardrails, and a narrow workflow beat "full autonomy" every time. The AI sales agent shift is operational, not magical.