AI Cold Email Personalization Mistakes (and How to Fix Them) in 2026

Bad AI personalization isn't a prompt problem. It's a systems problem.

If AI-personalized cold emails are killing your replies, the cause is usually upstream of the prompt: your inputs are wrong, your "personalization" isn't relevant, or deliverability is quietly wrecking inbox placement before anyone even sees your opener.

Here's the thing: if your deal size is small and you're blasting 1,000+ contacts a week, you don't need "hyper-personalization." You need clean data, tight segments, and a reason to email that doesn't read like a bot wrote it at 2 a.m.

What you need (quick version)

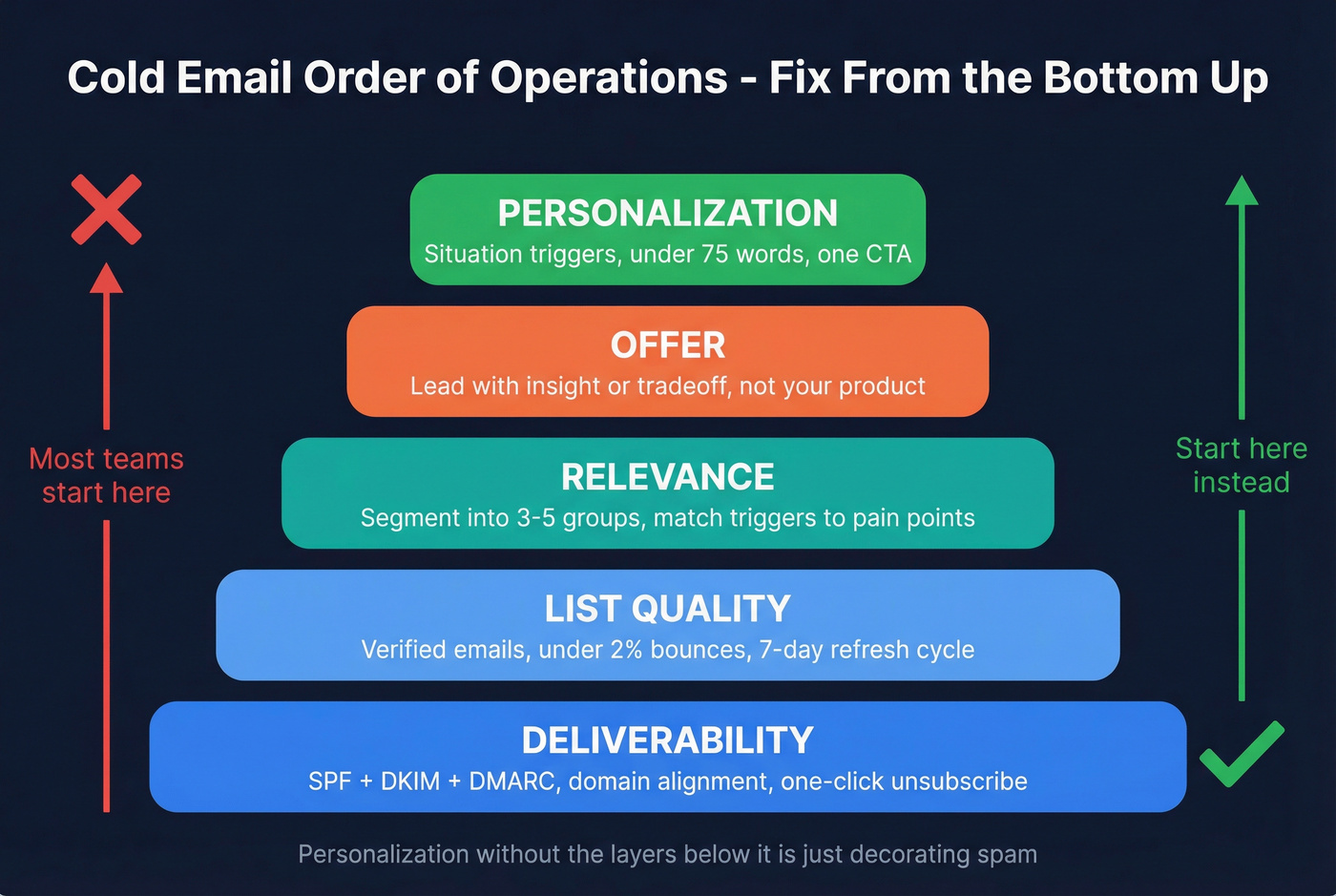

- Order of operations: deliverability -> list quality -> relevance -> offer -> personalization

- Authenticate properly: SPF + DKIM + DMARC, aligned domains

- Unsubscribe done right: one-click header + visible link, processed fast

- Spam complaint target: keep complaints <0.3% (bulk-sender requirement for Yahoo/Gmail; treat it as your universal ceiling)

- Bounce target: keep bounces <2% (if you're above that, stop scaling)

- Bulk threshold: sending 5,000/day to Gmail/Yahoo puts you in "bulk sender" rules

- Verify + refresh your list: stale contacts turn "personalization" into confidently wrong statements

- Segment before you personalize: 3-5 segments beats 500 "unique" openers (and it holds up at volume)

- Switch identity openers -> situation triggers: "loved your post" is dead; "saw you're hiring X" still works

- Keep copy short: aim <75 words, one idea, one CTA

- Cap follow-ups: more emails can mean more complaints, not more replies

- Run a tracking test: try turning off open tracking for a week
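The numeric targets above are easy to check mechanically. Here's a minimal Python sketch, assuming you can export sent, bounced, and complaint counts from your sending tool. The `CampaignStats` fields and function names are hypothetical, not any tool's API, and computing complaints against delivered mail is an assumption you should match to how your provider reports it.

```python
from dataclasses import dataclass

# Thresholds from the checklist above; tighten them if your own policy is stricter.
MAX_COMPLAINT_RATE = 0.003   # 0.3% spam complaints
MAX_BOUNCE_RATE = 0.02       # 2% bounces
BULK_SENDER_DAILY = 5_000    # messages/day to Gmail/Yahoo that puts you under bulk-sender rules


@dataclass
class CampaignStats:
    sent: int
    bounced: int
    complaints: int
    daily_volume_gmail_yahoo: int


def health_check(stats: CampaignStats) -> list[str]:
    """Return a list of problems; an empty list means it's reasonable to keep scaling."""
    if stats.sent == 0:
        return ["no sends yet: nothing to measure"]
    bounce_rate = stats.bounced / stats.sent
    # Assumption: complaint rate is measured against delivered (non-bounced) mail.
    delivered = stats.sent - stats.bounced
    complaint_rate = stats.complaints / delivered if delivered else 0.0
    problems = []
    if bounce_rate >= MAX_BOUNCE_RATE:
        problems.append(f"bounce rate {bounce_rate:.2%} >= 2%: stop scaling, fix the list")
    if complaint_rate >= MAX_COMPLAINT_RATE:
        problems.append(f"complaint rate {complaint_rate:.2%} >= 0.3%: pause, fix relevance/follow-ups")
    if stats.daily_volume_gmail_yahoo >= BULK_SENDER_DAILY:
        problems.append("bulk-sender volume: SPF + DKIM + DMARC and one-click unsubscribe are mandatory")
    return problems
```

Run it after every send batch; any non-empty result is a reason to pause before touching copy.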

The three actions I'd take this afternoon:

- Authenticate + unsubscribe correctly (so your best copy actually lands).

- Verify/refresh your list (so AI isn't writing around bad inputs).

- Replace identity-based openers with situation triggers (so you sound like a human with a reason to email).

Why AI personalization backfires in 2026 (the new definition)

Most "personalization" today is just identity tokens glued onto a template. Prospects recognize it instantly. Once they bucket you as automated outreach, you lose attention, replies, and eventually inbox placement.

We've tested this across different ICPs, and the pattern stays the same: the more your opener sounds like it was generated, the more it gets treated like noise.

What prospects actually hate (themes you'll see in replies):

- "Fake compliment" openers that could be sent to anyone

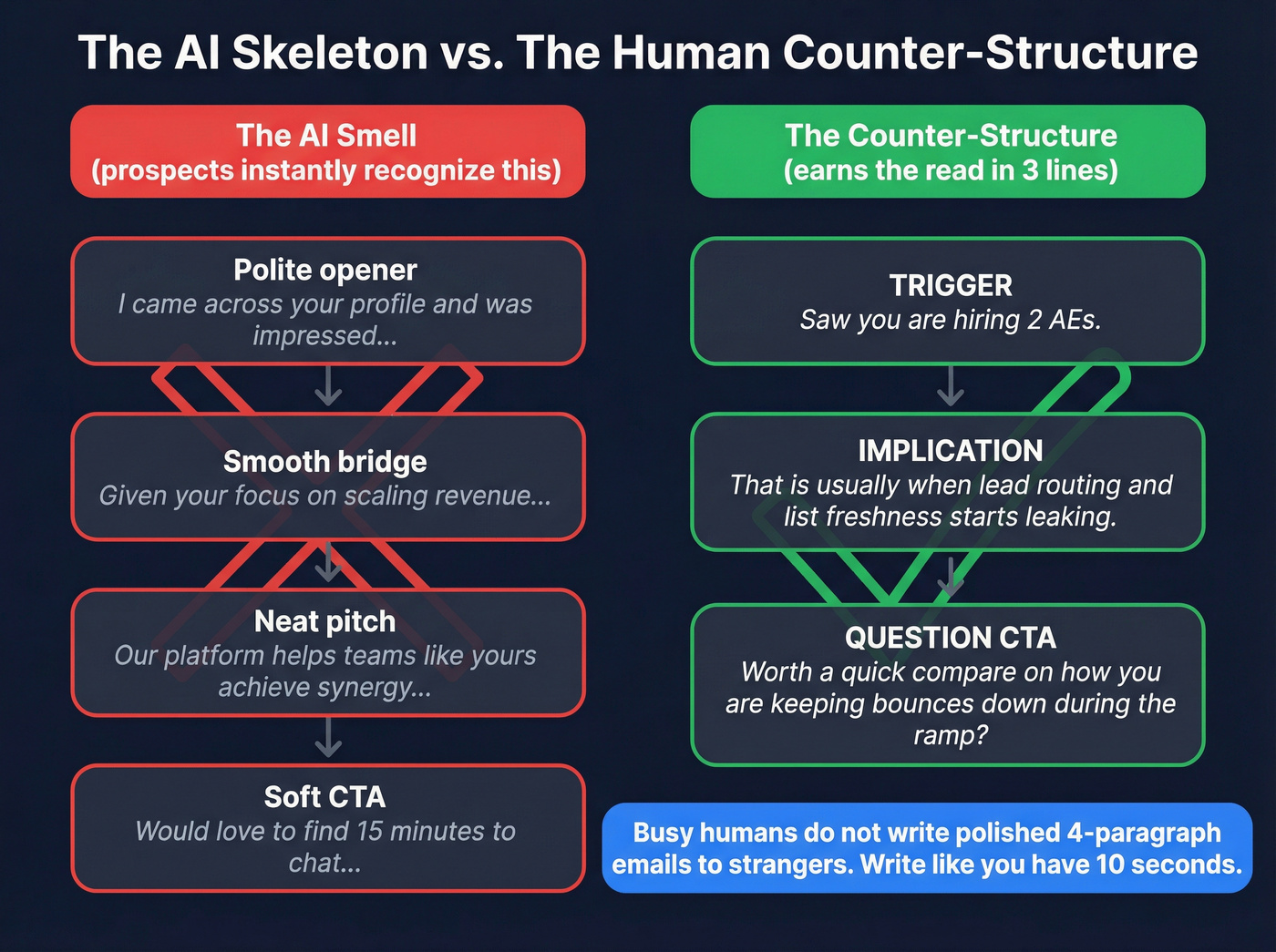

- The unmistakable "GPT tone" (polite -> smooth bridge -> pitch -> soft CTA)

- Creepy details that feel like you dug too deep

2026 operator rule (print this): Personalization isn't "about them." It's why now + why you + why this is relevant without being creepy or factually wrong.

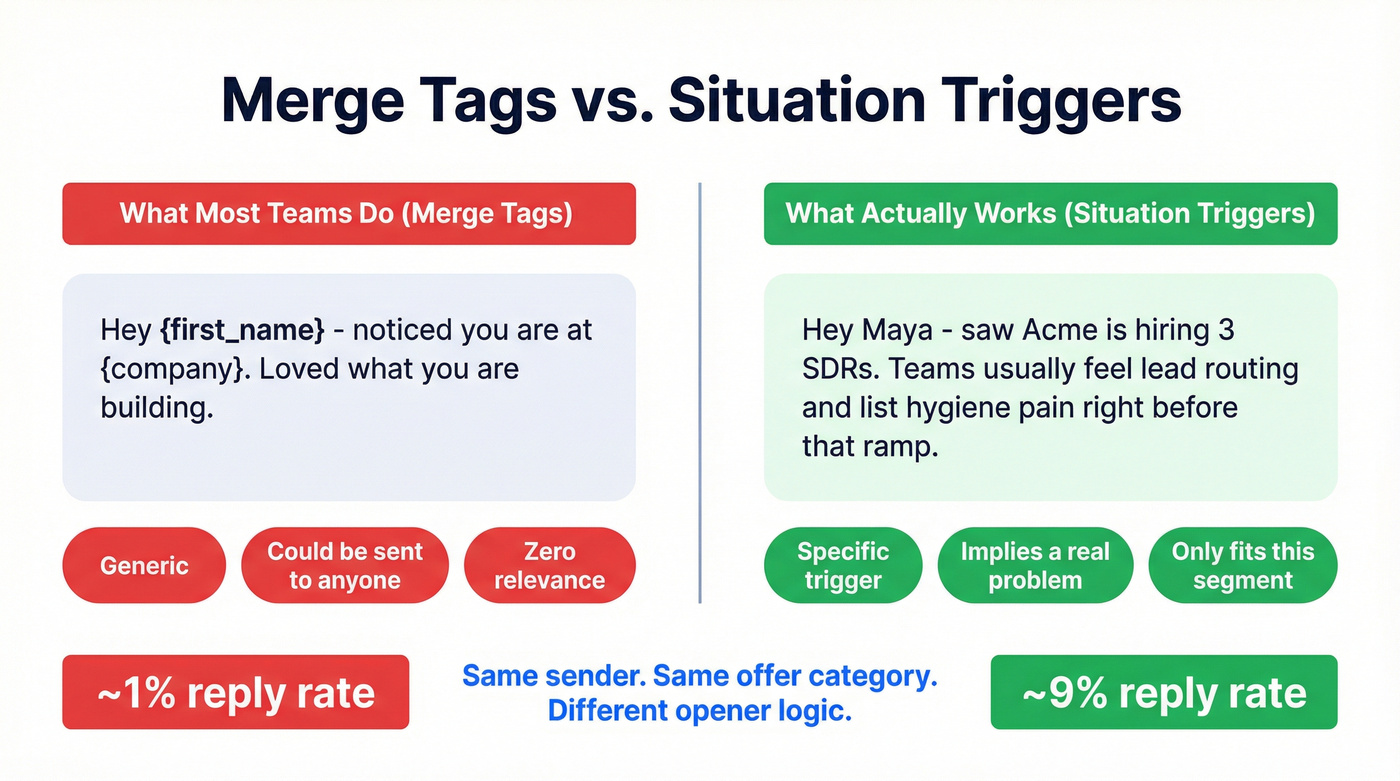

One practitioner split test captured the vibe: the "generic AI personalization" version (job title, school, "impressive background") got 1% replies, while the situational/trigger version got 9%. Same sender, same offer category, different opener logic.

AI cold email personalization mistakes that tank replies (with fixes + examples)

Mistake: Confusing merge tags with relevance

Merge tags don't create relevance. They just make a template look filled in.

Fix: segment first, then write a reason-to-email line that only makes sense for that segment.

Before: "Hey Maya - noticed you're at Acme. Loved what you're building."

After: "Hey Maya - saw Acme's hiring 3 SDRs. Teams usually feel lead routing + list hygiene pain right before that ramp."

Mistake: Identity-based openers ("loved your post...")

Across a review of 200+ cold emails, the pattern is blunt: "personalized" emails often perform worse than clean generic emails because the personalization is obviously templated (fake compliments, generic admiration, recycled public crumbs).

Fix: open on a trigger that implies a problem they might actually be dealing with.

Before: "Loved your recent post about scaling revenue operations..."

After: "Noticed you're hiring for RevOps. That's usually when outbound data quality and routing get stress-tested."

Specific-but-safe trigger lines you can steal (no risky facts):

- "Careers page shows 3 open SDR roles posted in the last 14 days."

- "Your security page was updated recently - teams often tighten vendor review during that window."

- "Docs mention a 'migration' - data quality usually breaks mid-switch."

- "New 'Locations' page went live - expansion tends to change territory + targeting fast."

Mistake: The recognizable AI skeleton

This is the easiest "AI smell" to spot: polite opener -> smooth bridge -> neat pitch -> soft CTA. Busy humans don't write like that.

Fix: use a blunt, human structure that earns the email in 3 lines:

Counter-structure (use this): Trigger -> implication -> question CTA

Example:

- "Saw you're hiring 2 AEs."

- "That's usually when lead routing + list freshness starts leaking."

- "Worth a quick compare on how you're keeping bounce/complaints down during the ramp?"

Also, delete the "synergy" sentence. I'm genuinely annoyed at how often it shows up in outbound that otherwise had a shot.

Mistake: Hallucinating facts about the prospect/company

AI will invent details, and it loves to invent them in the first sentence.

An arXiv evaluation of RAG-based systems found hallucination rates around 17%-33% in a legal research context. Cold email inputs are noisier than curated legal corpora, so treat that range as a floor for risk, not a precise rate.

Fix: adopt a "no unverifiable claims" rule for openers. If you couldn't defend the sentence with a link in 10 seconds, cut it.

Before: "Congrats on your Series B - excited to see you scaling fast."

After: "Looks like you're hiring across sales + CS. That usually signals a push for pipeline and retention at the same time."

Mistake: Personalizing with stale/unverified contact + firmographic data

This is the quiet campaign killer. Stale data turns personalization into confident errors: wrong title, wrong company, wrong domain routing (bounces), wrong assumptions ("you're hiring" when the post closed).

Once bounces climb, everything else gets harder. Keep bounces <2% as a hard baseline.

Fix (workflow that holds up):

- Refresh firmographics (company name, domain, headcount band)

- Verify emails and suppress risky ones (catch-alls, traps)

- Generate situational openers from safe inputs

- Send small, watch bounces/complaints, then scale
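The middle of that workflow (suppress risky records, then generate only from trusted fields) can be sketched in a few lines of Python. This is a hypothetical example: the `email_status` labels and the field names are assumptions, so map your verifier's output and your enrichment columns onto them.

```python
# Hypothetical field names: only these refreshed + verified fields reach the prompt.
SAFE_FIELDS = ("first_name", "company", "role", "trigger")


def prepare_for_generation(contacts: list[dict]) -> list[dict]:
    """Drop contacts that aren't positively verified, then strip every non-allowlisted field."""
    ready = []
    for contact in contacts:
        # Anything not verified as "valid" (catch-alls, traps, unknowns) is suppressed.
        if contact.get("email_status") != "valid":
            continue
        # Missing a trusted field: skip rather than let the model fill the gap.
        if not all(contact.get(field) for field in SAFE_FIELDS):
            continue
        ready.append({field: contact[field] for field in SAFE_FIELDS})
    return ready
```

The allowlist is the point: fields like a guessed funding stage never reach the model, so it can't build an opener around them.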

In our experience, the fastest way to "fix AI copy" is to stop feeding it junk fields and old records. The copy gets better without changing the prompt, because the model finally has something real to work with.

Prospeo: the accuracy layer that keeps AI from sounding confidently wrong

Prospeo is "The B2B data platform built for accuracy," and it's built for the part most teams skip: making sure the inputs are true before you generate a single line of "personalization." You get 300M+ professional profiles, 143M+ verified emails, and 125M+ verified mobile numbers, with 98% verified email accuracy and a 7-day data refresh cycle (while a lot of the market refreshes closer to every 6 weeks).

It also runs 5-step verification with catch-all handling plus spam-trap and honeypot filtering, so you're not scaling a list that quietly poisons your domain. If you're building a workflow in Clay or sending in Instantly/Smartlead/Lemlist, this is the kind of verification + refresh layer that keeps your "personalization" from turning into bounces and angry replies.

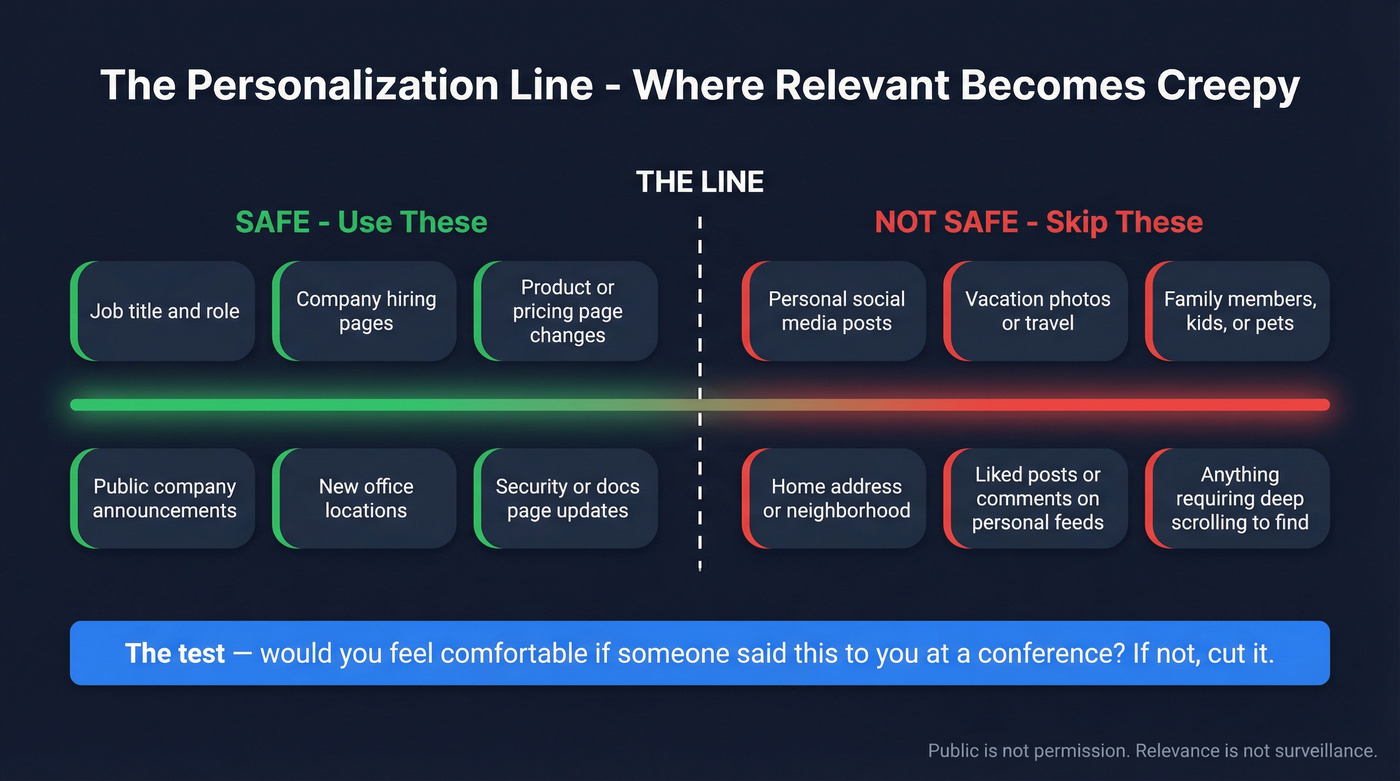

Mistake: Creepy over-personalization

Public isn't permission. If your opener references personal life, photos, family, or anything that feels like "I looked too hard," you spike distrust.

Fix: stay on the safe side of the line.

Safe: role, company initiatives, hiring, product changes, public announcements

Not safe: personal socials, home location specifics, kids/pets, "saw you liked..."

Before: "Saw your vacation pics - hope you had a great time in Italy!"

After: "Saw you're hiring a RevOps lead. That's usually when teams formalize outbound data + routing."

Skip this entirely if your "personalization" requires scrolling someone's personal feed. It's not worth the downside.

Mistake: Overwriting (too long, too many ideas)

Long emails don't feel more personal. They feel like homework.

One operator sent 500+ emails over 20 days and landed around a 4.2% reply rate while keeping messages under 75 words. Short forces clarity.

Fix: one idea, one CTA, one ask.

A tight closer that works: "Worth a quick compare? If not you, who owns outbound data quality on your side?"

Mistake: Personalization to compensate for a weak offer

Personalization can't rescue "we're amazing, want a demo?" The reader still doesn't know why they should care.

Fix: lead with an insight or tradeoff, not your product, and make it specific enough that a real operator would nod even if they never buy from you.

A pattern that keeps earning replies:

- "Most teams doing X hit Y problem"

- "The usual fix is A, but it creates B"

- "We help with C - worth a quick compare?"

Also, don't pretend email's the only channel. In one practitioner test, social touchpoints outperformed email by an order of magnitude from the same list. The lesson isn't "go all-in on social." It's "stop relying on one inbox decision." (If you want numbers to back that up, see our social selling statistics.)

Tool note (Reply.io): Reply.io's a solid choice when you want sequencing plus guardrails that reduce spammy automation patterns. Use it to enforce shorter steps, rotate copy, and keep follow-ups from turning into complaint magnets.

Mistake: Too many follow-ups (fatigue + complaints)

More follow-ups don't just annoy people. They increase the odds you get marked as spam.

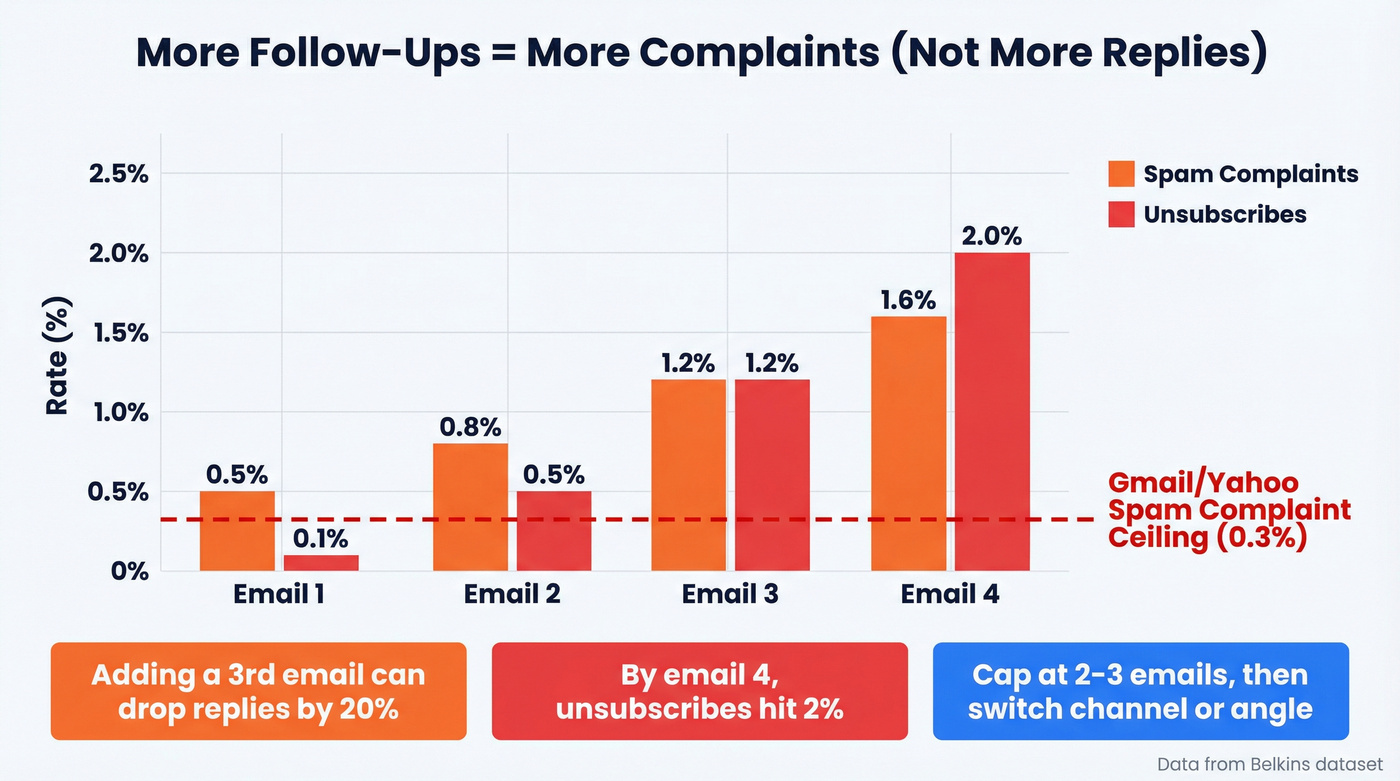

Belkins' dataset shows spam complaints rising from 0.5% on email #1 to 1.6% by email #4, and unsubscribes jumping from 0.1% to 2% over the same span. Adding a third email can drop replies by up to 20%.

Fix: cap sequences and change your approach, not just your subject line.

- 2-3 emails max for most segments

- then switch channel or switch angle (new trigger, new insight)

Operator warning: if complaints are climbing and you "fix it" by adding follow-ups, you're optimizing yourself into the spam folder.

Mistake: Tracking everything (open pixels) and looking spammy

Open tracking's noisy, and it can make you look like a marketer instead of a person.

Belkins saw a 3% higher response rate when open tracking was turned off.

Fix: run a clean test:

- Week A: open tracking on

- Week B: open tracking off

Hold everything else constant.

If replies go up and complaints go down, keep it off.
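The decision rule is simple enough to encode so nobody "eyeballs" it. A minimal sketch, assuming each week is a dict with hypothetical `sent`, `replies`, and `complaints` keys. One deliberate deviation: "complaints go down" is treated as "complaints don't go up," so two weeks with zero complaints don't block the call.

```python
def rate(count: int, sent: int) -> float:
    return count / sent if sent else 0.0


def keep_tracking_off(week_a: dict, week_b: dict) -> bool:
    """week_a = open tracking on, week_b = open tracking off."""
    replies_up = rate(week_b["replies"], week_b["sent"]) > rate(week_a["replies"], week_a["sent"])
    # "No increase" counts as down, so zero-complaint weeks don't stall the decision.
    complaints_not_up = rate(week_b["complaints"], week_b["sent"]) <= rate(week_a["complaints"], week_a["sent"])
    return replies_up and complaints_not_up
```

At a few hundred sends per week, a one- or two-reply difference can be noise; if the result is marginal, repeat the test before committing.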

Every AI personalization mistake in this article traces back to one root cause: bad inputs. Prospeo's 7-day data refresh cycle and 98% verified email accuracy mean your AI writes around real triggers - not hallucinated facts and dead addresses. 300M+ profiles. Under 2% bounces. $0.01/email.

Stop fixing prompts. Start fixing the data that feeds them.

Personalization decision tree (when to do none vs segment vs 1:1)

Use this to stop over-personalizing the wrong campaigns.

If authentication (SPF/DKIM/DMARC) isn't clean or bounces are at 2% or above -> do zero personalization. Fix infrastructure and list quality first.

If your list is broad (1,000+ recipients) -> do segmentation personalization, not 1:1. Hunter's data shows campaigns with <=50 recipients averaging a 5.8% reply rate, versus 2.1% for 1,000+. Narrowing beats "unique openers" at scale.

If you can't articulate a reason-to-email for the segment -> do none. Hunter's data puts irrelevance behind 71% of ignored emails. Tokens don't fix irrelevance.

If the account's high-value and you have a real trigger -> do 1:1. Examples: exec hire, expansion, competitor move, tech migration, public initiative.

If you're guessing facts (funding, customer counts, tech usage) -> don't personalize that detail. Use safer triggers (hiring, headcount shifts, role-based pain).
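Here's the same tree as a Python function, useful if you route accounts automatically. It's a sketch under assumptions: the boolean inputs are hypothetical flags your own pipeline would set, and the order of checks (infrastructure, then reason-to-email, then 1:1) is one reasonable reading of the rules above.

```python
def personalization_level(
    auth_ok: bool,
    bounce_rate: float,
    has_segment_reason: bool,
    high_value_account: bool,
    has_verified_trigger: bool,
) -> str:
    """Return "none", "segment", or "1:1" following the decision tree above."""
    # Broken authentication or a dirty list: no personalization at all.
    if not auth_ok or bounce_rate >= 0.02:
        return "none"
    # No reason-to-email for the segment: tokens won't fix irrelevance.
    if not has_segment_reason:
        return "none"
    # High-value account with a real, verified trigger earns 1:1 work.
    if high_value_account and has_verified_trigger:
        return "1:1"
    # Broad or ordinary lists get segment-level openers.
    return "segment"
```

The last rule (don't personalize guessed facts) works at the level of individual details, so it belongs in the QA gate below rather than in this routing function.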

Personalization QA checklist (hallucination-proof + freshness rules)

If you're using AI to generate openers, QA's the job. Not a nice-to-have.

Trigger quality score (0-5): send only if >=4

Give the trigger 1 point for each:

- Verified (you can show the source)

- Recent (ideally <=30 days)

- Implies a real pain (not just trivia)

- Ties to your offer (clear "why you")

- Non-creepy (would feel normal if said in person)

If it scores 3 or less, don't prompt harder. Pick a better trigger.
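The score is a plain sum, so it's trivial to enforce before generation. A minimal Python sketch; the `Trigger` class and its field names are hypothetical, and each flag is meant to be set by a human or an upstream check, not by the model that writes the opener.

```python
from dataclasses import dataclass


@dataclass
class Trigger:
    verified: bool       # you can show the source
    recent: bool         # ideally <= 30 days old
    implies_pain: bool   # not just trivia
    ties_to_offer: bool  # clear "why you"
    non_creepy: bool     # would feel normal if said in person


def trigger_score(t: Trigger) -> int:
    # One point per criterion; Python bools sum as 0/1.
    return sum([t.verified, t.recent, t.implies_pain, t.ties_to_offer, t.non_creepy])


def should_send(t: Trigger, threshold: int = 4) -> bool:
    return trigger_score(t) >= threshold
```

As written, a trigger can pass at 4/5 while failing "non-creepy." If you want a stricter gate, make `verified` and `non_creepy` hard requirements on top of the score.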

Pre-send checklist (copy + facts)

- Opener is situational, not identity-flattery

- No unverifiable claims

- One idea only

- <75 words (unless you've proven longer works for your ICP)

- One CTA (15 minutes, or "who owns X?")

- No "AI skeleton" transitions ("hope you're well," "I wanted to reach out...")

- No creepy details (public isn't permission)
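Two of those checks (length and skeleton phrases) are mechanical. A small Python linter can catch them before a human reviews the rest. The phrase list is a hypothetical starting point drawn from this article, including the "synergy" sentence; "one idea" and "no creepy details" still need a person.

```python
SKELETON_PHRASES = ("hope you're well", "i wanted to reach out", "synergy")
MAX_WORDS = 75  # checklist target: under 75 words


def presend_issues(body: str) -> list[str]:
    """Flag the mechanical checklist items; relevance and 'one idea' still need a human."""
    issues = []
    word_count = len(body.split())
    if word_count >= MAX_WORDS:
        issues.append(f"{word_count} words (target is under {MAX_WORDS})")
    # Lowercase and treat curly apostrophes as straight ones before matching.
    normalized = body.lower().replace("\u2019", "'")
    for phrase in SKELETON_PHRASES:
        if phrase in normalized:
            issues.append(f"AI-skeleton phrase: {phrase!r}")
    return issues
```

Anything this flags goes back for a rewrite, not a re-prompt with "make it shorter."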

Freshness rules (inputs)

- Contact role/company verified recently

- Trigger still true (job post still open, initiative still active)

- Freshness window: if the data point is older than ~30 days, treat it as suspect

- Bounce target: <2% before scaling volume
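The freshness rules translate to a date comparison plus a "still true" flag. A minimal sketch, assuming you store when the contact was last verified and when the trigger was last observed (both hypothetical fields), and that someone or something re-checks whether the trigger is still live.

```python
from datetime import date, timedelta

FRESHNESS_WINDOW = timedelta(days=30)  # older than ~30 days = suspect


def is_fresh(last_checked: date, today: date, window: timedelta = FRESHNESS_WINDOW) -> bool:
    return today - last_checked <= window


def record_passes(contact_verified: date, trigger_seen: date, trigger_still_live: bool, today: date) -> bool:
    """Both the contact and the trigger must be recent, and the trigger must still be true."""
    return is_fresh(contact_verified, today) and is_fresh(trigger_seen, today) and trigger_still_live
```

Passing `today` in explicitly, rather than calling `date.today()` inside, keeps the check reproducible when you re-run an old batch.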

We've seen teams cut complaint rates simply by enforcing "verified + recent + non-creepy" as a gate before any AI generation.

Red flags (stop the send)

Red flags: funding mentions, customer counts, "saw you use X tech," "congrats on..." lines, or any opener you couldn't defend with a link in 10 seconds.

Prohibited claim types unless you've verified them:

- Funding stage/amount

- Customer counts / revenue

- Tech stack usage (unless you're 100% sure)

- "You're struggling with..." mind-reading statements
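A rough regex pre-filter can surface these claim types in AI-written openers so a human checks the source before sending. It's a sketch, not a fact checker: the patterns below are hypothetical, English-only, and will miss paraphrases, so treat a clean result as "no obvious red flag," not "verified."

```python
import re

# Rough patterns for the prohibited claim types above (hypothetical, English-only).
RED_FLAG_PATTERNS = {
    "funding": re.compile(r"\bseries [a-e]\b|\bseed round\b|\braised \$|\bfunding\b", re.I),
    "customer/revenue counts": re.compile(
        r"\b\d[\d,.]*\+?\s*(?:customers|clients|users)\b|\bARR\b|\$\s?\d[\d,.]*\s?(?:k|m|mm|million|b|billion)\b",
        re.I,
    ),
    "tech-stack claim": re.compile(r"\b(?:saw|noticed|see) (?:that )?you(?:'re| are)? us(?:e|ing)\b", re.I),
    "congrats line": re.compile(r"\bcongrats on\b", re.I),
    "mind-reading": re.compile(r"\byou(?:'re| are) struggling with\b", re.I),
}


def red_flags(opener: str) -> list[str]:
    """Return the names of red-flag categories found in an opener."""
    normalized = opener.replace("\u2019", "'")  # curly apostrophes -> straight
    return [name for name, pattern in RED_FLAG_PATTERNS.items() if pattern.search(normalized)]
```

Any hit means the same thing as the 10-second rule: show the link, or cut the line.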

Situation-signal library (steal these triggers + openers)

Rule: no cringe flattery. Your opener should read like a colleague pointing out a relevant situation.

| Signal | Implies | Opener | CTA |
|---|---|---|---|
| Hiring reps | Ramp strain | "Saw you're hiring reps." | "Worth 15 min?" |
| Headcount drop | Efficiency | "Noticed headcount tightened." | "Open to compare?" |
| Tech change | Migration risk | "Looks like systems are changing." | "Who owns this?" |
| Intent topic | Active eval | "Teams researching X hit Y." | "Want notes?" |
| Competitor move | Pressure | "Competitor move usually shifts X." | "Worth a chat?" |
| Expansion | New motion | "Expansion stresses targeting fast." | "Send a POV?" |

A few longer, concrete opener examples (use sparingly):

- "Careers page shows 3 SDR roles posted in the last 2 weeks - usually when routing + list freshness starts leaking."

- "Docs mention a 'migration' - data quality breaks mid-switch more often than teams expect."

- "Security page update usually means vendor review is active - are you tightening outbound compliance this quarter?"

Benchmarks for 2026 (what "good" looks like + what breaks it)

Benchmarks are sanity checks, not trophies. Use them to spot when you're failing for a mechanical reason (bad list, too many follow-ups, spammy tracking), not to justify sending more volume.

Here's the baseline I use in 2026: if you're under 1% replies, something's structurally wrong (deliverability, list quality, irrelevance, or offer). If you're in the 3-6% range, you're in the game. If you're consistently 6%+, you've earned the right to scale carefully.

| Source | Typical reply rate | "Good" baseline | "Excellent" | What breaks it |
|---|---|---|---|---|
| GMass | 1%-5% | 2%-4% | 5%+ | Bad list, weak offer |
| Hunter | 4.1% avg | 4%-5% | 6%+ | Irrelevance (71%) |
| Belkins (16.5M emails) | 5.8% avg | 4%-6% | 6%-8% | Follow-up fatigue, complaints |

Belkins also found the best-performing length was 6-8 sentences, producing a 6.9% reply rate. That's not permission to write long emails - it's a reminder that "short" means complete, not vague. Six tight sentences can still be under ~75 words if you cut the fluff, keep the nouns concrete, and stop trying to sound "professional."

What to do with that:

- Write for one breath. If you can't read it out loud without pausing, it's too long.

- Make sentence #1 earn sentence #2. Your opener must contain a trigger that implies a real situation, not admiration.

- Treat follow-ups as risk, not free inventory. Complaint and unsubscribe escalation (0.5% -> 1.6% complaints by email #4; 0.1% -> 2% unsub by #4) is exactly how "working sequences" turn into domain damage.

One more benchmark-driven reality: Hunter's "95.9% unanswered" stat is the cold shower. Most emails die because they're not relevant, not because they're "not personalized enough." That's why segmentation beats 1:1 at scale.

Tool notes (Instantly + Hunter + Clay): Instantly's a practical choice for deliverability-aware sending and controlled ramp-ups; it's also where many teams run their "open tracking off" tests cleanly. Hunter's a fast way to sanity-check list quality and segmentation performance (especially when you're comparing small, tight sends vs big blasts). Clay's the operator tool for building enrichment workflows: pull a trigger (hiring, tech signals), enrich, verify, then generate openers from only the fields you trust.

If you want one external benchmark to anchor expectations, Instantly publishes a living report; start with their cold email benchmark report for 2026 and then compare it to your own numbers.

Deliverability guardrails that make personalization mistakes irrelevant

If you ignore deliverability and compliance, personalization becomes decoration on emails that never land, or worse, emails that land and get reported.

Deliverability guardrails (non-negotiable)

- Spam complaints <0.3% (bulk-sender requirement for Yahoo/Gmail; treat it as your ceiling everywhere)

- SPF + DKIM + DMARC configured and aligned

- One-click unsubscribe via List-Unsubscribe header + visible link

- For bulk senders into Yahoo/Gmail: honor unsubscribes within 2 days

- Bulk threshold: if you send 5,000/day to Gmail/Yahoo, you're in the "bulk sender" ruleset

- Watch behavior signals: sudden volume spikes, repetitive copy, low engagement
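"One-click unsubscribe" means two specific headers (defined in RFC 8058) plus the visible link. Here's a minimal sketch using Python's standard `email.message.EmailMessage`; the `example.com` endpoint and token scheme are hypothetical, and your unsubscribe endpoint must actually process the POST. RFC 8058 also requires a valid DKIM signature covering both headers, which this snippet does not add; your sending platform or MTA handles signing.

```python
from email.message import EmailMessage


def build_message(sender: str, recipient: str, subject: str, body: str, unsub_token: str) -> EmailMessage:
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    unsub_url = f"https://example.com/unsubscribe?t={unsub_token}"  # hypothetical endpoint
    # RFC 8058 one-click: an HTTPS URI in List-Unsubscribe plus List-Unsubscribe-Post.
    msg["List-Unsubscribe"] = f"<{unsub_url}>"
    msg["List-Unsubscribe-Post"] = "List-Unsubscribe=One-Click"
    # Visible link in the body as well, per the guardrail above.
    msg.set_content(f"{body}\n\nNot relevant? Unsubscribe: {unsub_url}")
    return msg
```

The header handles the mailbox provider's unsubscribe button; the body link covers readers who never see that button.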

Yahoo's sender requirements are laid out here: https://senders.yahooinc.com/best-practices/

DMARCian's explainer helps with rollout details: https://dmarcian.com/yahoo-and-google-dmarc-required/

Compliance guardrails (UK/EU reality check)

If you're marketing to people in the UK, "B2B" doesn't get you out of privacy law. The UK ICO's explicit: B2B marketing still involves personal data, so UK GDPR applies. Start here: https://ico.org.uk/for-organisations/direct-marketing-and-privacy-and-electronic-communications/business-to-business-marketing/

If you're leaning on legitimate interests, do the 3-part test (purpose, necessity, balancing): https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/lawful-basis/legitimate-interests/what-is-the-legitimate-interests-basis/

Fix it this week (7-day sprint plan)

This is the fastest path I've seen to stop AI cold email personalization mistakes without rebuilding your whole outbound motion.

Day 1: Deliverability audit. Check SPF/DKIM/DMARC alignment, From-domain consistency, and the unsubscribe link + one-click header. (If you need a deeper infrastructure walkthrough, use this email sending infrastructure guide.)

Day 2: List triage. Suppress risky segments (old lists, scraped lists, unknown sources). Set the bounce target: <2%.

Day 3: Verify + refresh before scaling. Run your list through a verification + refresh workflow and update key fields (role, company, domain). This is where you stop "confidently wrong" personalization before it ships. (Use a repeatable email verification list SOP.)

Day 4: Rewrite openers as situation triggers. Pick 3-5 triggers (hiring, tech change, expansion, competitor move). Write one opener per trigger, not per person. (If you want more first-line patterns, grab these email opener examples.)

Day 5: Shorten everything. Force <75 words, one CTA, one idea. Remove the AI skeleton transitions.

Day 6: Sequence trim + tracking test. Cap to 2-3 emails. Run the "open tracking off" test (Belkins saw +3% response when off). If you want a cadence baseline, use a B2B cold email sequence framework.

Day 7: Ramp slowly. Plan 4-6 weeks to ramp a new domain, starting at 5-10/day and increasing gradually. Scale only when bounces and complaints stay stable. (More on pacing in email pacing and sending limits.)
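To make "increasing gradually" concrete, here's a Python sketch that spreads a new domain's daily cap over the ramp window and only steps up when last week stayed inside the guardrails. The geometric growth curve and the 50/day target are hypothetical choices for illustration, not a provider rule; set the target from your own mailbox limits.

```python
def ramp_schedule(start_per_day: int = 5, weeks: int = 6, target_per_day: int = 50) -> list[int]:
    """Planned daily cap for each week, growing geometrically from start to target."""
    if weeks < 2:
        return [target_per_day]
    growth = (target_per_day / start_per_day) ** (1 / (weeks - 1))
    return [round(start_per_day * growth**week) for week in range(weeks)]


def next_week_cap(current_cap: int, planned_cap: int, bounce_rate: float, complaint_rate: float) -> int:
    # Step up only if last week stayed under 2% bounces and 0.3% complaints; otherwise hold.
    if bounce_rate < 0.02 and complaint_rate < 0.003:
        return planned_cap
    return current_cap


print(ramp_schedule())  # -> [5, 8, 13, 20, 32, 50]
```

Holding the cap (rather than dropping it) when a week misses the guardrails is a judgment call; if complaints spike sharply, cutting volume is the safer move.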

Stale contacts turn "personalization" into confident errors - wrong titles, closed job posts, bounced emails. Prospeo refreshes 300M+ profiles every 7 days (not every 6 weeks), verifies emails through a 5-step process, and kills catch-alls and spam traps before they wreck your sender reputation.

Clean data makes AI copy better without changing a single prompt.

FAQ

Is AI personalization actually hurting reply rates in 2026?

Yes. Identity-based AI openers often reduce replies because recipients recognize the pattern and ignore it, while trigger-based lines can lift performance (for example, 1% vs 9% in a practitioner split test). Use situational triggers like hiring, tech change, or expansion and keep the email under ~75 words.

What spam complaint rate do I need to stay under in 2026?

Stay under 0.3% spam complaints as a hard ceiling, especially if you meet bulk-sender rules for Gmail/Yahoo. If you're near 0.3%, pause scaling and fix list quality, relevance, and follow-up count before you touch prompts or templates.

What's the safest type of personalization that doesn't feel creepy?

Company-and-role relevance tied to a verifiable trigger (like recent hiring within 14-30 days) plus one operational implication. Avoid personal-life references and forced familiarity, because public info still isn't permission and it increases spam complaints.

How do I stop AI from making up facts in my openers?

Ban unverifiable claims and block high-risk details (funding, revenue, customer counts, tech stack) unless you can link a source in 10 seconds. Use a freshness window of about 30 days, and only send triggers that score >=4/5 on verified, recent, implies pain, ties to offer, and non-creepy.

What's a good free tool to reduce bounces before I personalize at scale?

Prospeo's free tier includes 75 emails + 100 extension credits/month and focuses on accuracy (98% verified email accuracy with a 7-day refresh cycle), which helps keep bounces under 2% before you scale. For quick spot-checks, basic verification in tools like Hunter can help, but freshness and catch-all handling matter most at volume.

Summary: the mistakes to stop making

Most AI cold email personalization mistakes come from treating personalization like a writing problem instead of a systems problem. Fix deliverability first, verify and refresh your data so you stop sending confident errors, and switch from identity-flattery to situation triggers that create a real reason to email. When those three are in place, AI becomes a multiplier instead of a liability.