How to A/B Test Your Lead Generation Campaigns (and What to Test First)

Sixty-one percent of marketers say lead generation is their biggest challenge. That tracks - the average cost per lead across industries is $198. You're spending real money to get someone's attention, and then most teams squander it by testing the wrong things in the wrong order.

A disciplined approach to A/B testing lead generation campaigns is the difference between compounding gains and compounding waste.

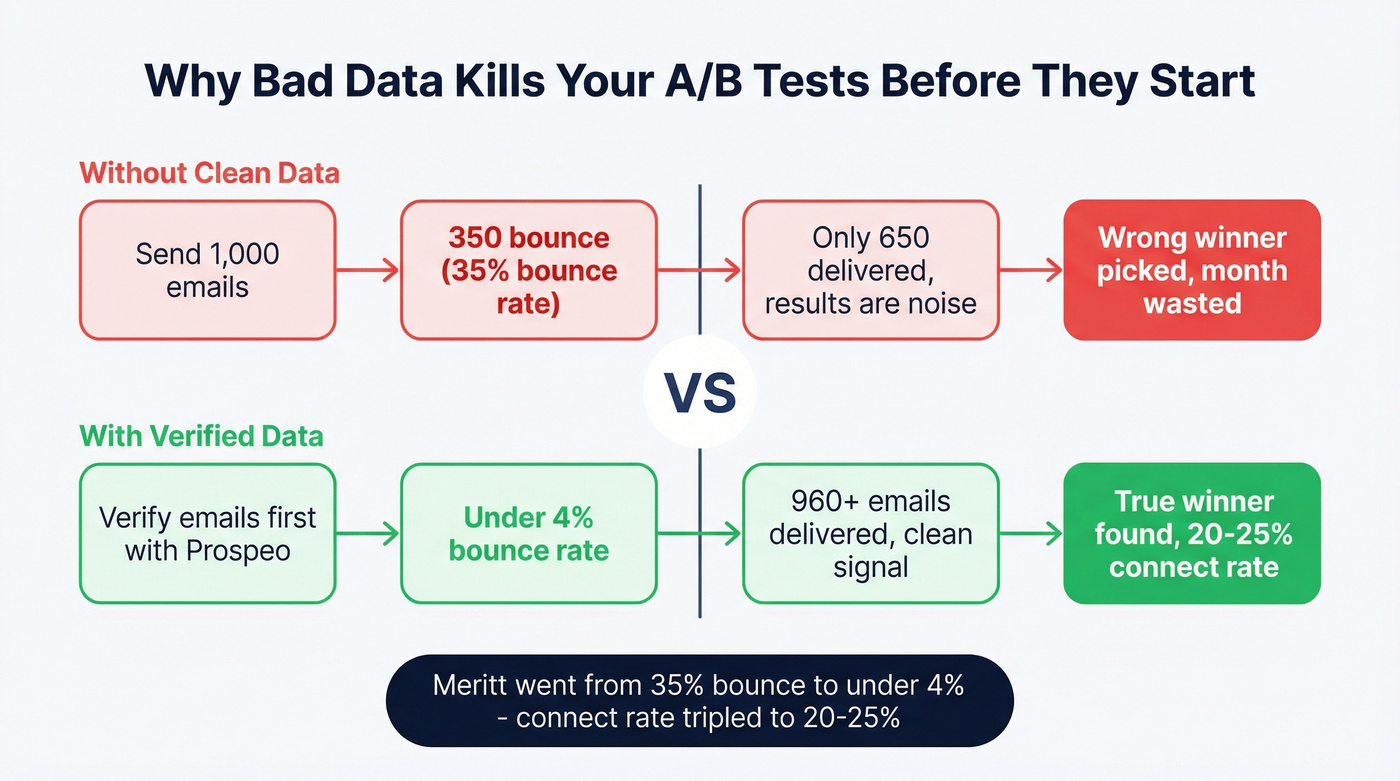

Here's a scenario I see constantly: a B2B team spends three weeks building an elaborate subject line test. They split their list, craft two variants, set up tracking, and wait. Two weeks later, the results come in - and they're meaningless. Not because the subject lines were bad, but because 35% of the emails bounced. They weren't testing messaging. They were testing whether their contact data was real.

That team wasted a month. And they're not unusual. Most split testing advice for lead gen skips the boring prerequisites and jumps straight to "try a different button color." Button colors don't matter when a third of your audience never sees the email.

Hot take before we start: If your deals average under $15K, you probably don't need a $36K/year testing platform. You need clean data, a clear offer, and the discipline to test one thing at a time.

The Cheat Sheet

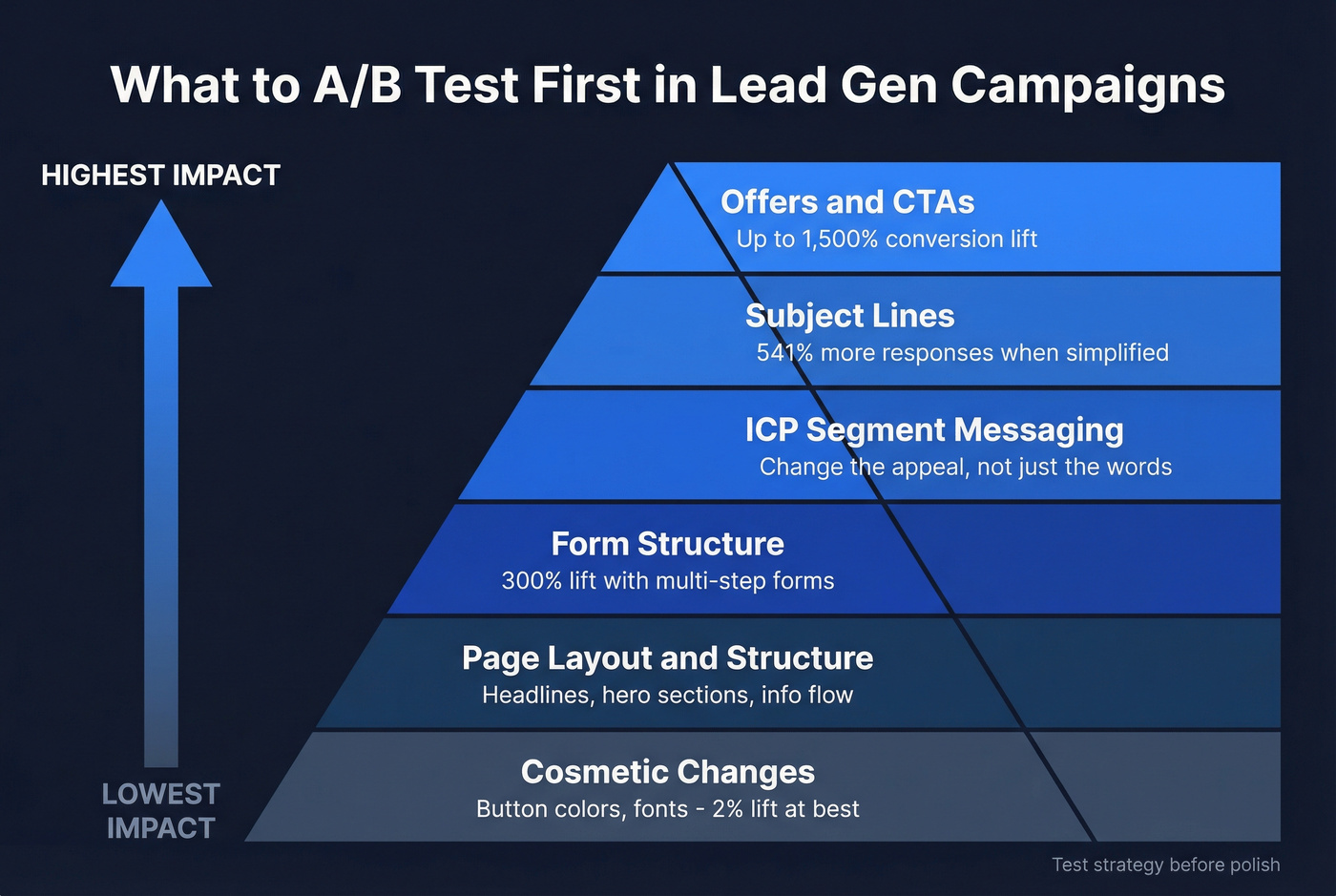

Test in this order: offers and CTAs -> subject lines -> ICP segment messaging -> form structure -> page layout -> cosmetic changes. A button color change might lift conversions by 2%. A CTA rewrite can lift them by as much as 1,500%.

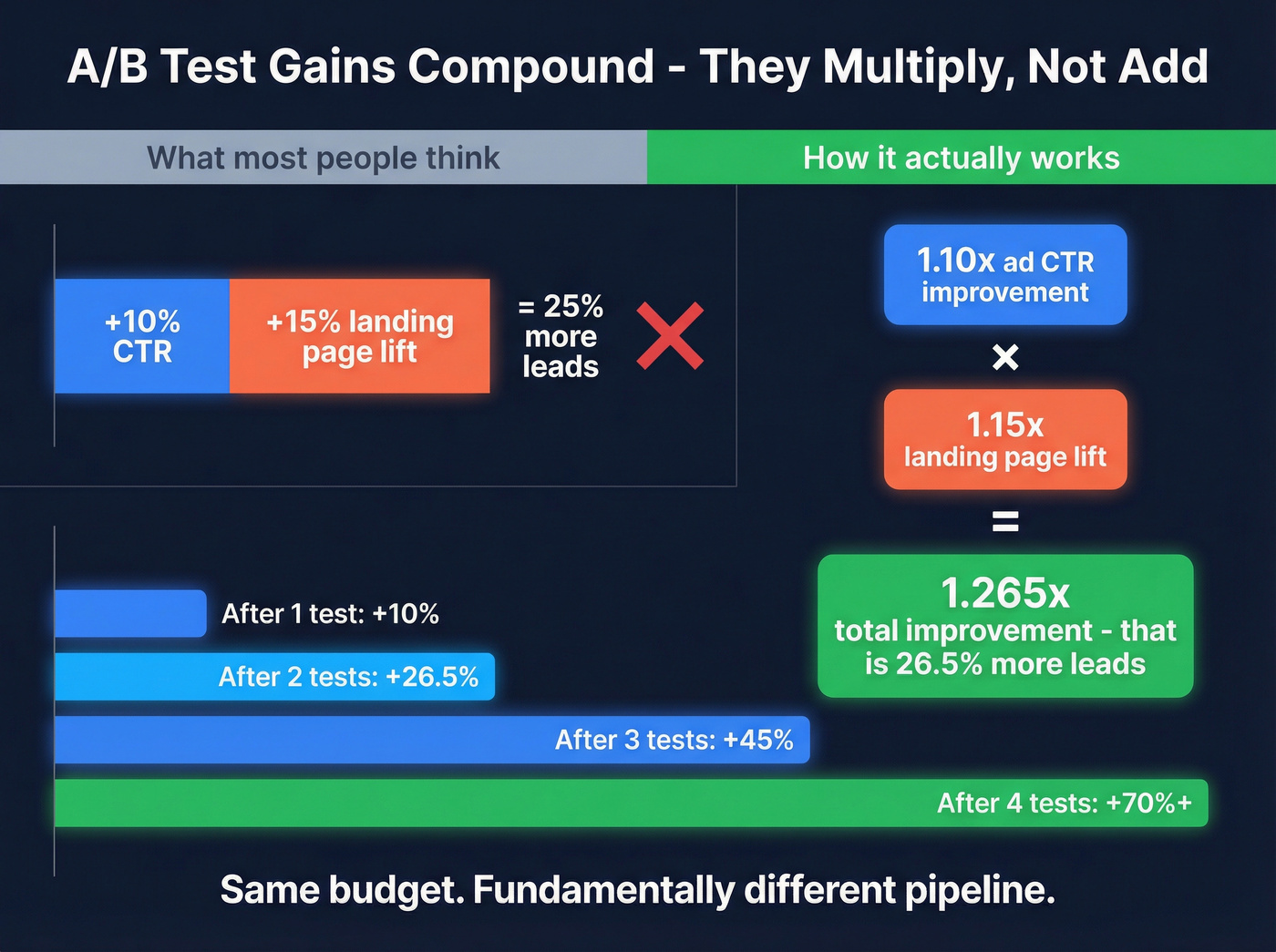

The math compounds. A 10% CTR improvement on your ads plus a 15% landing page conversion lift doesn't give you 25% more leads. It gives you 26.5% - because the gains multiply. Stack three or four of these and you're looking at a fundamentally different pipeline from the same budget.
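If you want to check the compounding yourself, here's a minimal sketch. The function name `compound_lift` is mine, and the inputs are just the example figures from the paragraph above.

```python
from math import prod

def compound_lift(lifts):
    """Combine relative lifts from sequential funnel stages.

    Each lift is a fraction (0.10 means +10%). Stages multiply,
    so the combined lift is the product of (1 + lift), minus 1.
    """
    return prod(1 + lift for lift in lifts) - 1

# Example from the text: +10% ad CTR, then +15% landing page conversion
combined = compound_lift([0.10, 0.15])
print(f"{combined:.1%}")  # 26.5%
```

Pass a longer list to see what stacking three or four wins would do under the same assumption that each stage's lift is independent of the others.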

The prerequisite nobody mentions: if your email list is garbage, you're not testing messaging. You're testing deliverability. Clean your data first.

The uncomfortable truth: only 25-30% of A/B tests produce statistically significant results. That's normal. Plan for 3-5 tests before finding a winner.

What Split Testing Actually Means for Lead Gen

A/B testing for lead generation means splitting your audience into two groups, showing each a different version of one element, and measuring which version generates more leads or better-quality leads. One variable. Two versions. A clear winner.

Don't confuse this with multivariate testing, which changes multiple elements simultaneously and requires significantly more traffic to reach significance. For most B2B teams, split testing is the right starting point because it isolates variables cleanly.
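One common way to do the split is to hash each contact into a group, so the same person always sees the same version. The sketch below is illustrative only: `assign_variant`, the experiment name, and the example addresses are all hypothetical, and your email or landing page tool may handle assignment for you.

```python
import hashlib

def assign_variant(contact_id: str, experiment: str) -> str:
    """Deterministically assign a contact to variant "A" or "B".

    Hashing the experiment name together with the contact ID keeps
    assignments stable within one test and independent across tests.
    """
    key = f"{experiment}:{contact_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    return "A" if digest[0] % 2 == 0 else "B"

# Hypothetical list of 1,000 contacts
contacts = [f"lead-{i}@example.com" for i in range(1000)]
groups = {"A": 0, "B": 0}
for contact in contacts:
    groups[assign_variant(contact, "subject-line-test-1")] += 1

print(groups)  # close to an even split; exact counts depend on the hash
```

The design choice here is determinism: re-running the assignment never moves someone from A to B mid-test.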

The numbers back this up: A/B tests that reach statistical significance boost conversion rates by up to 49%. In the U.S., 93% of firms run A/B tests on email marketing. Companies that test systematically grow revenue 1.5 to 2x faster than those that don't.

The gap isn't whether to test. It's knowing what to test first.

The Priority Framework for Testing Lead Gen Campaigns

Most teams test whatever's easiest to change. That's backwards. Here's the priority order, ranked by potential impact:

1. Offers and CTAs. This is where the biggest lifts live. SalesHive documented a case where changing a CTA from a tour request to a free trial produced a roughly 1,500% conversion lift. That's not a typo. The offer itself - what you're asking someone to do - matters more than anything else on the page.

2. Subject lines (for email). Simple subject lines generate 541% more responses than "creative" ones. Personalized subject lines increase opens by 26%. This is the highest-ROI test for cold email campaigns, and it takes five minutes to set up.

3. ICP segment messaging. Test positioning by persona - cost-savings messaging for CFOs vs. revenue-growth messaging for VPs of Sales. This is a higher-leverage test than copy tweaks because you're changing the appeal, not just the words.

4. Form structure. Multi-step forms increase conversions by 300% over single long forms. Chatbots convert at 3x the rate of static forms. The way you collect information changes how many people give it to you.

5. Page layout and structure. Headlines, hero sections, the flow of information. These matter, but less than the four above.

6. Cosmetic changes. Button colors, font sizes, image swaps. Test these last. They rarely move the needle more than a few percentage points.

The principle: test the strategy before the polish. A perfectly designed landing page with the wrong offer will always lose to an ugly page with an irresistible one.

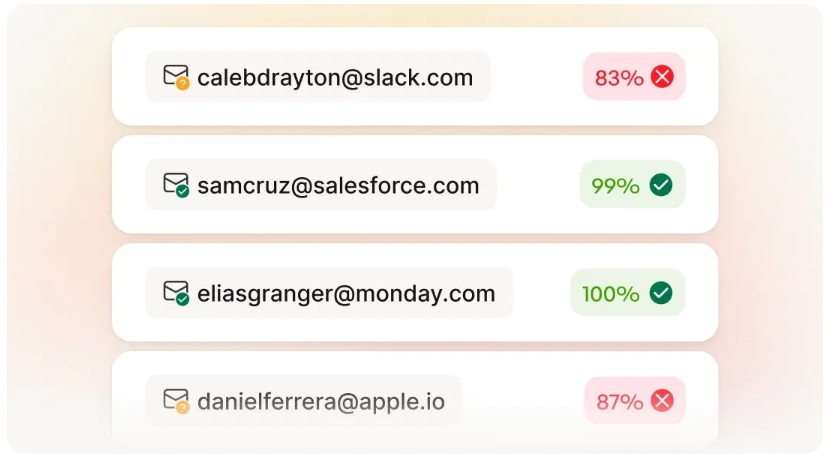

You read it above: 35% bounce rates kill A/B tests before they start. Prospeo's 98% verified email accuracy and 7-day data refresh mean every split test measures what it should - your messaging, not your data quality.

Fix the prerequisite. Test messaging, not deliverability.

Landing Page A/B Tests That Actually Move the Needle

Most landing page testing advice focuses on the wrong elements. Let's fix that.

Headlines, CTAs, and the Multiple-Button Surprise

Conventional wisdom says one CTA per page. Conventional wisdom is wrong - at least some of the time.

A home inspection business shared results on Reddit that flipped the script. Their single "Contact Now" button converted at 17% with a 29% close rate and $68 CAC. When they added a second button - "Contact Now" plus "Free Quote" - the "Contact Now" button jumped to 20% conversion with a 38% close rate, while "Free Quote" pulled 23% conversion at the same 29% close rate. Same keywords, same ad creative, only the landing page changed. The CAC dropped because they were capturing leads at different intent levels - high intent (contact now) and mid intent (free quote).

The sweet spot? Two to three buttons in the hero section, each targeting a different commitment level.

Personalized CTAs - ones that adapt based on visitor behavior or segment - convert 202% better than generic ones. That's not a small lift. That's a fundamentally different conversion rate.

Forms: The Multi-Step vs. Chatbot Decision

Go multi-step if you've got a form with 5+ fields and decent traffic. Breaking a 7-field form into three steps of 2-3 fields each feels less intimidating, even though you're collecting the same information. The result: 300% higher conversions.

Go chatbot if you want the highest possible conversion ceiling and can handle the implementation. Businesses using AI chatbots see 3x higher conversion rates compared to static web forms. The conversational format - one question at a time - feels natural instead of interrogative.

Go progressive profiling if you have repeat visitors. Ubisoft used this on their "For Honor" campaign, showing different form questions based on what they already knew about the lead. The result: 12% more leads with improved quality over a roughly 3-month test. You're shortening the form while actually collecting more data over time.

Social Proof and Trust Signals

WorkZone ran one of the cleanest A/B tests I've seen documented. They changed their customer testimonial logos from color to black-and-white next to their demo request form. One change.

Result: 34% increase in form submissions, 99% statistical significance, 22-day test. The theory? Color logos distracted from the CTA. Black-and-white logos provided social proof without pulling attention away from the form.

Groove's case study is equally instructive. They rewrote their landing page copy using actual customer language pulled from interviews. Conversion rate jumped from 2.3% to 4.3% - an 87% lift. Your customers describe your product better than your marketing team does.

Email A/B Tests - From Subject Lines to Send Times

Email generates 40x more leads than social media and returns $36-$42 for every $1 spent. But those numbers only hold if your tests are actually measuring what you think they're measuring.

What to Test in Lead Generation Email Campaigns

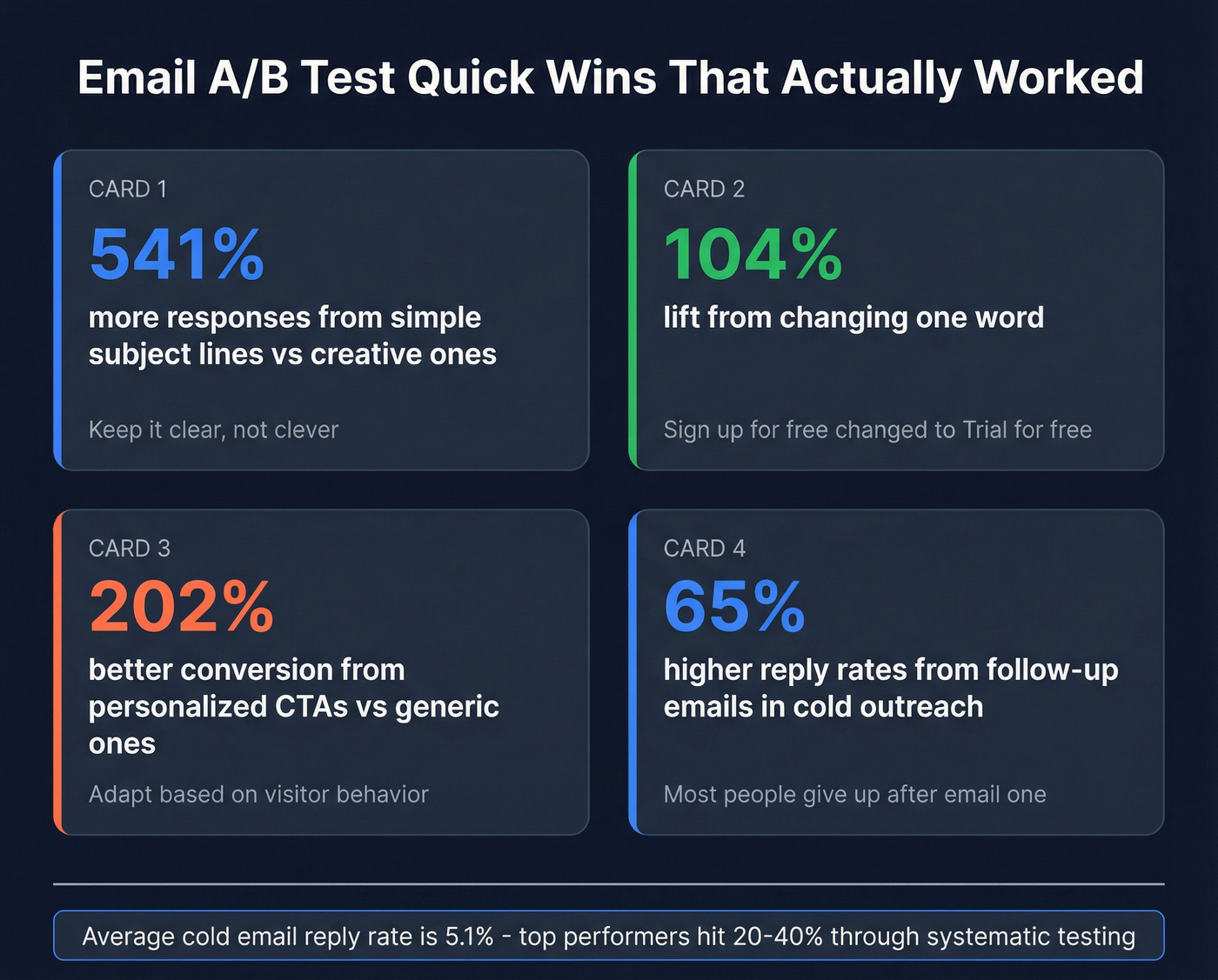

Subject lines first. Simple subject lines generate 541% more responses than clever ones. Personalized subject lines (using the recipient's name, company, or role) increase opens by 26%. Subject-line testing alone improves email conversions by 29%.

Then test CTAs. Going, a travel deals company, changed their CTA from "Sign up for free" to "Trial for free" - one word - and saw a 104% month-over-month increase in premium trial starts. One word. 104% lift.

Don't sleep on sender names. Emails from a sales rep with an established relationship often outperform emails from the CEO or a generic company address. Test "Sarah from [Company]" against "[Company] Team."

For cold outreach, follow-up emails can increase reply rates by up to 65%. The average B2B cold email reply rate sits at 5.8%, but top performers hit 20-40% through systematic testing.

2026 Email Benchmarks

You can't improve what you don't measure against:

| Industry | Open Rate | CTR | Notes |
|---|---|---|---|
| B2B (median) | 36.7%-42.35% | 2.0%-4.0% | Top quartile: 50%+ opens |
| Advertising | 25.48% | 3.29% | Below average opens |
| Business/Finance | 33.18% | 3.36% | Solid engagement |
| Entertainment | 55-60% | 4-6% | Highest opens |
| Financial Services | 64-68% | 5-7% | Niche, engaged lists |
| Ecommerce | 25.74% | 2.86% | High volume, lower engagement |
| Cold outreach | 30-50% | Reply rate: 5.8% avg | Highly variable by list quality |

One critical caveat: Apple Mail Privacy Protection inflates open rates by pre-loading tracking pixels. Weight your analysis toward CTR and reply rates instead.

Cold outreach reply rates dropped from 6.8% in 2023 to 5.8% in recent data. Inbox fatigue is real and getting worse. Every percentage point of improvement from testing is more valuable than it was two years ago.

The Data Quality Prerequisite

Here's the thing: if 35% of your emails bounce, you're not testing messaging. You're testing deliverability. And you're destroying your sender reputation in the process.

I've watched teams spend weeks crafting the perfect subject line test, only to have the results corrupted because a third of their list was invalid. The "winning" variant didn't actually win - it just happened to land in more real inboxes.

Meritt went from a 35% bounce rate to under 4% after switching to Prospeo, and their connect rate tripled to 20-25%. That's not an A/B test improvement - that's the prerequisite for your experiments to work at all.
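A quick pre-flight check makes the point concrete. This is a rough sketch, not a deliverability standard: `delivery_check` is a made-up helper, and the 5% cutoff is my own placeholder that you should replace with whatever threshold your team trusts.

```python
def delivery_check(sent: int, bounced: int, max_bounce_rate: float = 0.05):
    """Summarise delivery for one test variant.

    max_bounce_rate is an illustrative cutoff, not an industry rule.
    """
    if sent <= 0:
        raise ValueError("sent must be positive")
    bounce_rate = bounced / sent
    return {
        "delivered": sent - bounced,       # recipients who could actually see the variant
        "bounce_rate": bounce_rate,
        "reliable": bounce_rate <= max_bounce_rate,
    }

# A list like the one in the opening scenario vs. a cleaned list
print(delivery_check(sent=1000, bounced=350))
print(delivery_check(sent=1000, bounced=40))
```

Run it per variant. If the two variants have very different delivered counts, the comparison is already skewed before anyone reads a subject line.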

Paid Ads A/B Tests - The Gap Nobody Covers

Every testing guide covers email and landing pages. Almost none cover paid ads properly. That's a massive blind spot, because the compounding math is identical: a 10% CTR boost plus a 15% landing page improvement equals 26.5% more leads from the same budget.

Google Ads - Campaign Experiments Framework

Google's Campaign Experiments tool is your best friend for serious testing:

- Create a draft of your existing campaign

- Modify ONE element (headline, description, ad asset, landing page)

- Set a 50/50 traffic split

- Run both versions simultaneously

- Let Google's statistical engine tell you the winner

Priority order for Google Ads tests: Headlines and descriptions -> ad assets (sitelinks, callouts, structured snippets) -> landing page copy and structure -> bidding strategies.

Test trust-focused assets ("Locally owned since 2005") against competitive advantage assets ("Guaranteed 24hr reply"). Test multi-step lead forms against single-step. Only test automated bidding strategies like "Maximize Conversions" vs. "Target CPA" after you've got enough conversion volume for the algorithm to learn from.

Meta Ads - The 70/80 Rule

Product, offer, angle, and creatives account for 70-80% of your Meta Ads results. Settings, audience, and campaign setup account for the remaining 20-30%.

Most teams obsess over the 20-30%.

Think of the algorithm like a snow globe: every change you make shakes it. It performs best in a calm, settled state. Make deliberate, isolated changes and give them time.

Practical rules for Meta testing:

- Set budgets to fund at least 1-2 conversions per ad set per day. If your average cost per purchase is $50, your daily budget should be at least $50 - ideally $100-150, which leaves headroom above that floor.

- Give tests 3-5 days minimum before drawing conclusions.

- Ads not getting spend doesn't mean they're bad. Duplicate and exclude winners to find new ones.

- If something works, leave it alone.

One ad can sell 19.5x as much as another. The difference is finding the right appeal.

LinkedIn Ads - B2B's Highest-Converting Platform

LinkedIn lead generation forms average a 13% conversion rate - roughly 3.5x the global average website conversion rate of 3.68%. For B2B, it's the highest-converting paid platform.

Visual customer testimonials produce 40% higher CTR than other ad formats on LinkedIn. Targeting by industry, company size, and function generates 45% more qualified leads compared to non-segmented campaigns.

The mistake most B2B teams make on LinkedIn? Testing creative before testing targeting. Get the audience right first - then optimize the creative within that audience.

Real Case Studies: A/B Testing Lead Generation Across Channels

Theory is nice. Numbers are better.

| Company | What They Tested | Result | Duration |
|---|---|---|---|
| WorkZone | Logo colors (color -> B&W) | 34% more submissions | 22 days |
| Going | CTA wording (1 word) | 104% more trial starts | 1 month |
| Broomberg | Timed pop-ups (100s delay) | 72% more blog leads | N/A |
| Groove | Customer language rewrite | 87% lift (2.3% -> 4.3%) | N/A |
| Ubisoft | Progressive profiling | 12% more leads | ~3 months |
| Bannersnack | Bigger "show timeline" button | 12% lift in feature adoption | N/A |
| Yatter | Case studies + video on product page | 10% more conversions | N/A |

Bannersnack and Yatter are the ones to study closely. Bannersnack used session replays to discover users were ignoring a key button - they made it bigger and got a 12% lift. Yatter added case studies and video to their product page, not their checkout page, and saw a 10% conversion increase. The problem was one step before where they assumed it was.

The lesson: test the right thing, not just test things. Use analytics and session recordings to identify where the friction is before deciding what to change.

And don't forget what happens after the form. Responding to a lead within 5 minutes increases contact rates 900%. If you're benchmarking this, start with average lead response time.

Personalized CTAs convert 202% better - but only if your emails reach real inboxes. Prospeo gives you 143M+ verified emails at $0.01 each, so your A/B tests compound real gains instead of compounding waste.

Stack conversion wins on data that actually delivers.

Statistical Significance - Stop Guessing, Start Calculating

Here's the uncomfortable truth most testing guides bury: only 25-30% of tests produce statistically significant results. That's normal. If every test you run is a "winner," your methodology is broken.

Sample Size and Duration

The industry standard is 95% confidence with 80% statistical power. Use CXL's free calculator to determine your required sample size before launching any test. You need your baseline conversion rate, the minimum detectable effect you care about, and your weekly traffic or send volume.
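If you'd rather see what a calculator is doing under the hood, here's a sketch using the standard normal-approximation formula for comparing two proportions. It's my own illustration, not CXL's implementation, so expect small differences from any given tool. The 5% baseline and 20% relative lift are hypothetical inputs.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_variant(baseline, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors (or recipients) needed in EACH variant.

    baseline     -- current conversion rate, e.g. 0.05 for 5%
    relative_mde -- smallest relative lift worth detecting, e.g. 0.20 for +20%
    alpha, power -- 0.05 and 0.80 correspond to 95% confidence, 80% power
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

# Hypothetical: 5% baseline, and we care about a 20% relative lift (5% -> 6%)
n = sample_size_per_variant(baseline=0.05, relative_mde=0.20)
print(n)  # on the order of 8,000 per variant
```

Notice how fast the requirement grows as the minimum detectable effect shrinks. That's the numerical reason to test big levers first.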

HubSpot's email testing strategy is smart: send the A/B test to the smallest portion of your list needed for significance, pick the winner, then send the winner to the remainder. You get statistical rigor without sacrificing reach.

Minimum test duration: two weeks. Always. Even if you hit your sample size in three days. Weekly cycles matter - Tuesday traffic behaves differently than Saturday traffic. The "peeking" problem is real: checking results daily and stopping when one variant looks good leads to false positives. Set your duration in advance and don't touch it.
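You can watch the peeking problem happen in a simulation. The sketch below runs A/A tests, where both arms have the same true conversion rate, so any "significant" result is a false positive by construction. All the parameters (300 runs, 200 visitors per arm per day, a 5% rate) are arbitrary choices for illustration.

```python
import random
from statistics import NormalDist

def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    if se == 0:
        return 1.0
    z = (conv_a / n_a - conv_b / n_b) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def simulate(runs=300, days=14, daily_visitors=200, rate=0.05, seed=1):
    """Return (false positive rate with daily peeking, rate with one final check)."""
    rng = random.Random(seed)
    peeking_hits = 0  # significant on ANY daily check
    fixed_hits = 0    # significant when checked once, on the last day
    for _ in range(runs):
        conv_a = conv_b = n = 0
        hit_on_some_day = False
        for _day in range(days):
            n += daily_visitors
            conv_a += sum(rng.random() < rate for _ in range(daily_visitors))
            conv_b += sum(rng.random() < rate for _ in range(daily_visitors))
            p = p_value(conv_a, n, conv_b, n)
            if p < 0.05:
                hit_on_some_day = True
        peeking_hits += hit_on_some_day
        fixed_hits += p < 0.05  # p now holds the final-day value
    return peeking_hits / runs, fixed_hits / runs

peeking_rate, fixed_rate = simulate()
print(f"false positives with daily peeking: {peeking_rate:.0%}")
print(f"false positives with a fixed 14-day duration: {fixed_rate:.0%}")
```

The fixed-duration figure should land near the 5% you signed up for at 95% confidence. The peeking figure can only be equal or higher, because it counts every day you could have stopped and declared a winner.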

When to Kill a Test

For pages with 1,000+ weekly visitors: expect 2-4 weeks to reach significance. For pages with 200-500 weekly visitors: plan for 4-8 weeks. For cold email campaigns, you need a minimum of 100-200 prospects per variant.
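Those ranges fall out of simple division: required sample divided by weekly traffic, with the two-week floor applied. A rough sketch, where `planned_weeks` and the sample sizes are hypothetical placeholders for whatever your calculator returns:

```python
from math import ceil

def planned_weeks(sample_per_variant, weekly_visitors, variants=2, minimum_weeks=2):
    """Weeks needed to collect the full sample, never fewer than the floor."""
    if weekly_visitors <= 0:
        raise ValueError("weekly_visitors must be positive")
    weeks = ceil(sample_per_variant * variants / weekly_visitors)
    return max(minimum_weeks, weeks)

# Hypothetical requirement of 1,500 visitors per variant
print(planned_weeks(sample_per_variant=1500, weekly_visitors=1000))  # 3
print(planned_weeks(sample_per_variant=1500, weekly_visitors=400))   # 8

# Sample reached in under a week, but the two-week floor still applies
print(planned_weeks(sample_per_variant=300, weekly_visitors=1000))   # 2
```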

If a test hasn't reached significance after the planned duration, the difference between variants is too small to matter. That's a valid result. Move on to testing something with higher potential impact - go back to the priority framework and test a bigger lever.

Plan for 3-5 tests before finding a significant winner. That's not failure. That's the process.
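Once the planned duration is over, checking for significance is a short calculation. Here's a sketch using a pooled two-proportion z-test, which is one common frequentist approach. Your testing tool may use a different method (VWO, for example, is Bayesian). The visitor and conversion counts are made up.

```python
from statistics import NormalDist

def ab_result(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Compare variant B against control A after the test has finished."""
    if conv_a == 0:
        raise ValueError("control needs at least one conversion to compute lift")
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    z = (rate_b - rate_a) / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided
    return {
        "relative_lift": rate_b / rate_a - 1,
        "p_value": p,
        "significant": p < alpha,
    }

# Hypothetical: 2,000 visitors per variant
big_change = ab_result(conv_a=46, n_a=2000, conv_b=86, n_b=2000)    # 2.3% vs 4.3%
small_change = ab_result(conv_a=46, n_a=2000, conv_b=52, n_b=2000)  # 2.3% vs 2.6%
print(big_change)
print(small_change)
```

With the same traffic, the large difference clears the 95% bar and the small one doesn't. The second result isn't a failed test. It tells you the lever was too small for the traffic you have.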

A/B Testing and CRO Tools - What to Use (and What to Skip)

The optimization and testing tool market jumped from 230 to 271 tools in a single year. You don't need to evaluate all of them. Here's what matters by budget:

| Tool | Best For | Starting Price | Budget Tier |
|---|---|---|---|
| Unbounce | Landing pages + A/B | $74/mo | Budget |
| Prospeo | Email data quality | Free / ~$0.01/email | Budget |
| VWO | Full-stack testing | ~$200-400/mo | Mid-Market |
| Convert | Privacy-focused (GDPR) | $199/mo | Mid-Market |
| Statsig | Multi-armed bandit | $150/mo | Mid-Market |
| LaunchDarkly | Feature flags + tests | $12/mo | Budget |
| Optimizely | Enterprise CRO | ~$36K-$150K+/yr | Enterprise |

VWO is the best overall pick for marketing teams. Bayesian SmartStats means you don't need a statistics degree to interpret results, the visual editor requires zero developer dependency, and their case study library is genuinely useful for test ideation.

Unbounce is the best value for teams focused on landing page testing. At $74/mo, you get a landing page builder with built-in A/B testing and AI copywriting. If landing pages are your primary testing surface, this is where I'd start.

Prospeo sits in a different category: email data quality. It's the prerequisite for email experiments to produce valid results. With 98% email accuracy and a 5-step verification process that catches spam traps and honeypots, it ensures your test variants actually reach real inboxes. If you want to go deeper on verification, see How to Verify an Email Address and our list of email verifier websites. The free tier gives you 75 email verifications per month, and paid plans run about $0.01 per email with no contracts.

Convert at $199/mo is the privacy-focused option - solid for GDPR-conscious teams. Optimizely is the enterprise standard at $36K-$150K+/year, and overkill for most teams under 100 employees. LaunchDarkly at $12/mo is primarily a feature flagging tool with testing capabilities - great for product teams, less relevant for marketing. Statsig at $150/mo offers multi-armed bandit testing that automatically shifts traffic to winning variants.

One frustration worth calling out: Apollo moved A/B testing to their Professional tier - a 5x price increase from their lower plans. Basic experimentation shouldn't be an enterprise feature. If you're on Apollo's lower tiers and need email testing, you'll need a separate tool (here are cold email outreach tools worth considering).

Skip any guide that still recommends Google Optimize. It was discontinued in September 2023.

AI-Powered A/B Testing in 2026

AI is changing three things about testing lead generation campaigns, and they're all about speed.

Predictive modeling lets tools forecast winning variations before the test begins, using historical data patterns. Instead of running a test for four weeks, the AI suggests which variants are most likely to win. Several enterprise platforms - VWO, AB Tasty, Dynamic Yield - offer versions of this.

Real-time learning (multi-armed bandit) adapts continuously during a test, automatically shifting traffic toward higher-performing variants. Traditional A/B testing waits until the end to declare a winner. Multi-armed bandit approaches reduce wasted impressions on losing variants while the test is still running.
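To make the idea less abstract, here's a toy version using Thompson sampling, which is one well-known bandit algorithm. I'm not claiming any of the tools above work exactly this way. The true conversion rates, visitor count, and function name are all invented for the simulation.

```python
import random

def thompson_sampling(true_rates, visitors=5000, seed=7):
    """Simulate a bandit test and return how many visitors each variant received."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(visitors):
        # Draw a plausible conversion rate for each variant from its Beta posterior
        draws = [rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)]
        # Send this visitor to whichever variant drew the highest rate
        arm = draws.index(max(draws))
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [s + f for s, f in zip(successes, failures)]

# Hypothetical variants converting at 4% and 6%
traffic = thompson_sampling([0.04, 0.06])
print(traffic)  # visitors per variant; the split is not fixed at 50/50
```

Early on, both variants get traffic because the posteriors overlap. As evidence accumulates, the draws for the stronger variant tend to win more often, so traffic drifts toward it without anyone declaring a winner by hand.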

Smarter resource allocation means AI can test multiple headline, visual, and audience combinations in parallel - something that would require impossibly large sample sizes with traditional methods.

The numbers: AI-driven email personalization yields a 13.44% CTR versus 3% for non-AI campaigns. And 64% of marketers now use AI for email. This isn't a future trend - it's the current baseline. If your testing tool doesn't have some form of AI-assisted optimization in 2026, it's falling behind.

FAQ

How long should I run an A/B test?

Minimum two weeks, always - even if you hit sample size sooner. Weekly traffic patterns skew results, and stopping early causes false positives. Low-traffic pages (200-500 weekly visitors) need 4-8 weeks to reach 95% confidence.

What should I A/B test first in a lead gen campaign?

Offers and CTAs - they drive the biggest lifts (up to 1,500%). Work down the priority framework: offers/CTAs -> subject lines -> ICP segment messaging -> form structure -> page layout -> cosmetic changes.

Can I run multiple A/B tests at the same time?

Yes, but on different pages or channels - never on the same page. Running two tests on one page makes it impossible to isolate which change caused the result. Separate surfaces, separate experiments.

How do I make sure my email A/B tests reach real inboxes?

Verify your contact data before testing. If a significant portion of your list bounces, you're measuring deliverability, not messaging. Tools like Prospeo (75 free verifications/month), NeverBounce, or ZeroBounce can clean your list - without verified data, even well-designed experiments produce unreliable results.